Journal of Jilin University (Engineering and Technology Edition) ›› 2020, Vol. 50 ›› Issue (1): 227-236. doi: 10.13229/j.cnki.jdxbgxb20190116

• Computer Science and Technology •

Multi-focus image fusion based on support vector machines and window gradient

Xiong-fei LI1,2, Jing WANG1,2, Xiao-li ZHANG1,2, Tie-hu FAN3

1. College of Computer Science and Technology, Jilin University, Changchun 130012, China

2. Key Laboratory of Symbolic Computation and Knowledge Engineering of Ministry of Education, Jilin University, Changchun 130012, China

3. College of Instrumentation and Electrical Engineering, Jilin University, Changchun 130033, China

Abstract:

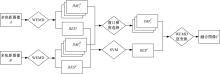

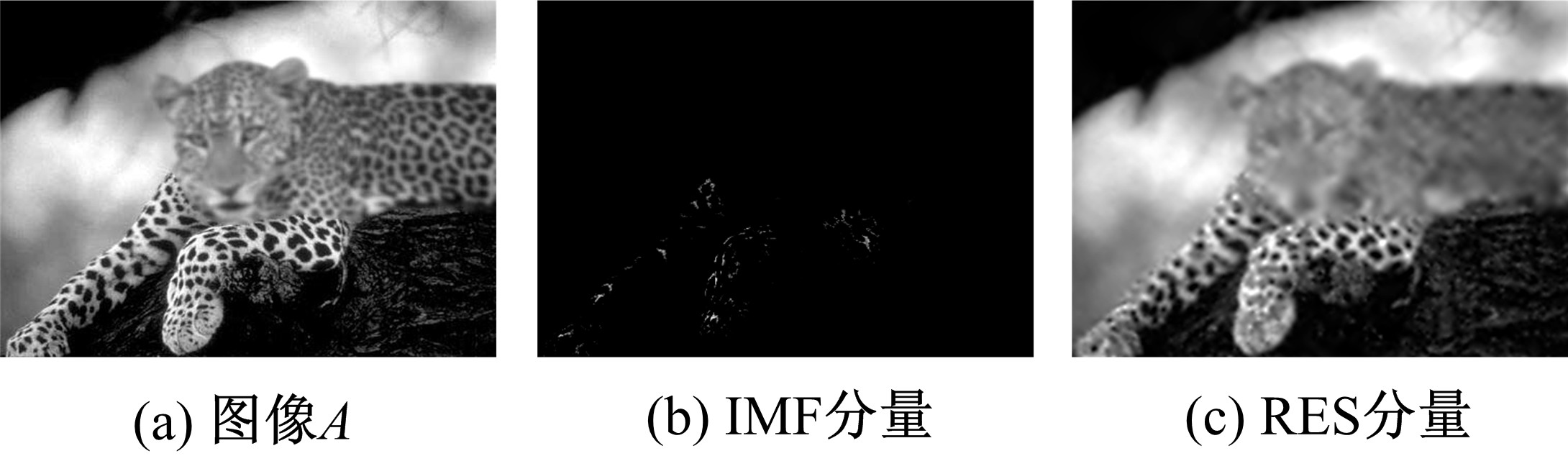

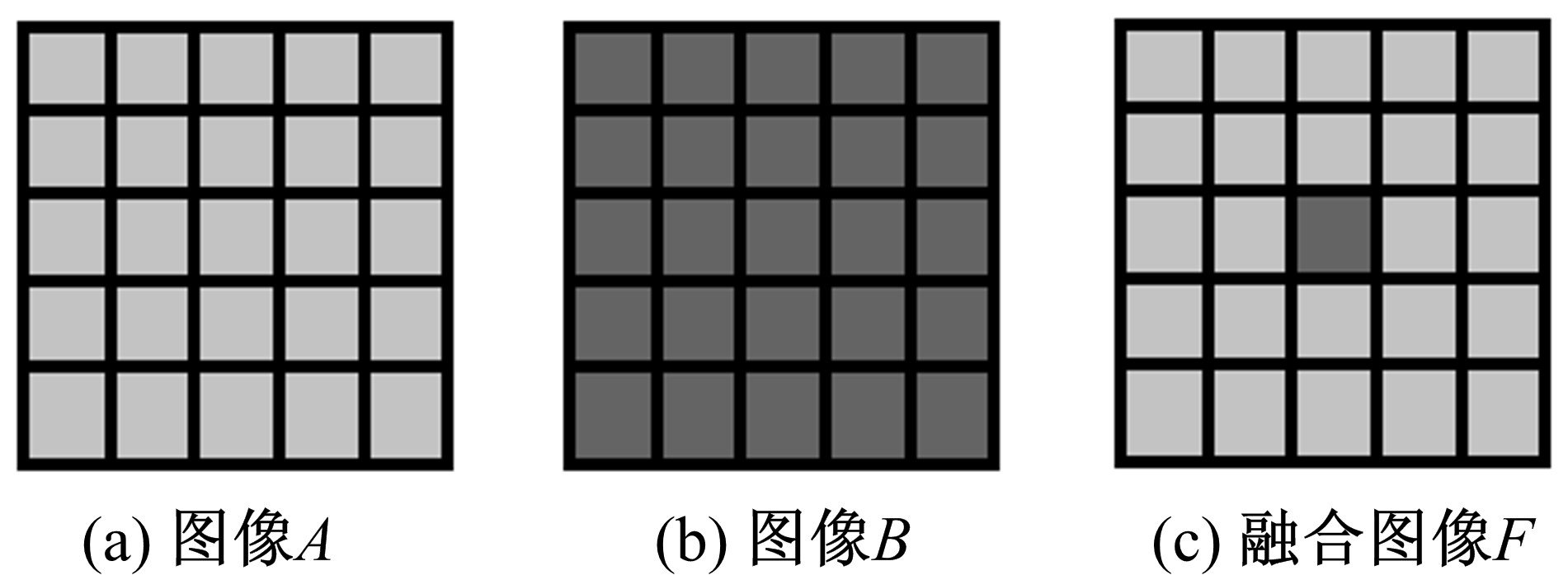

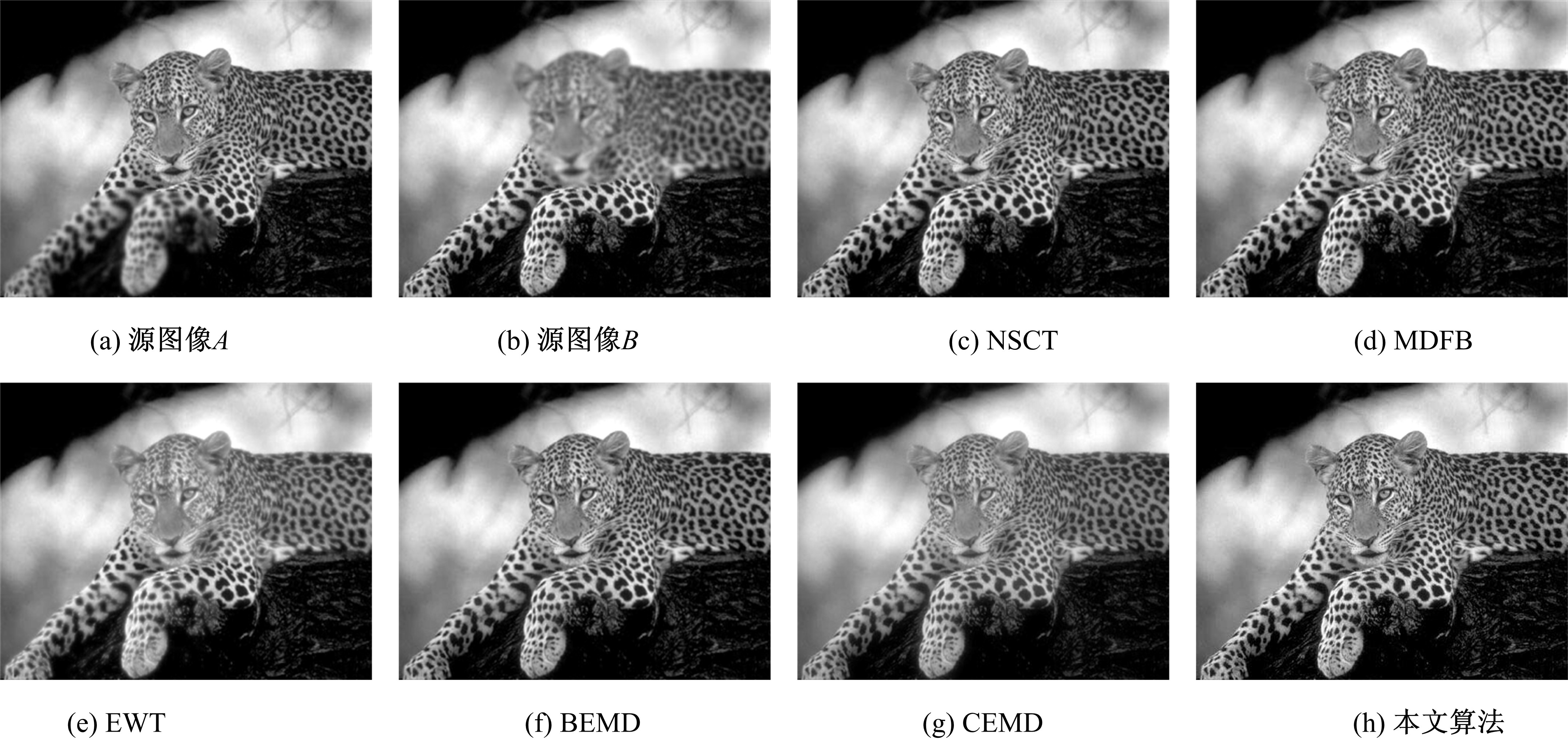

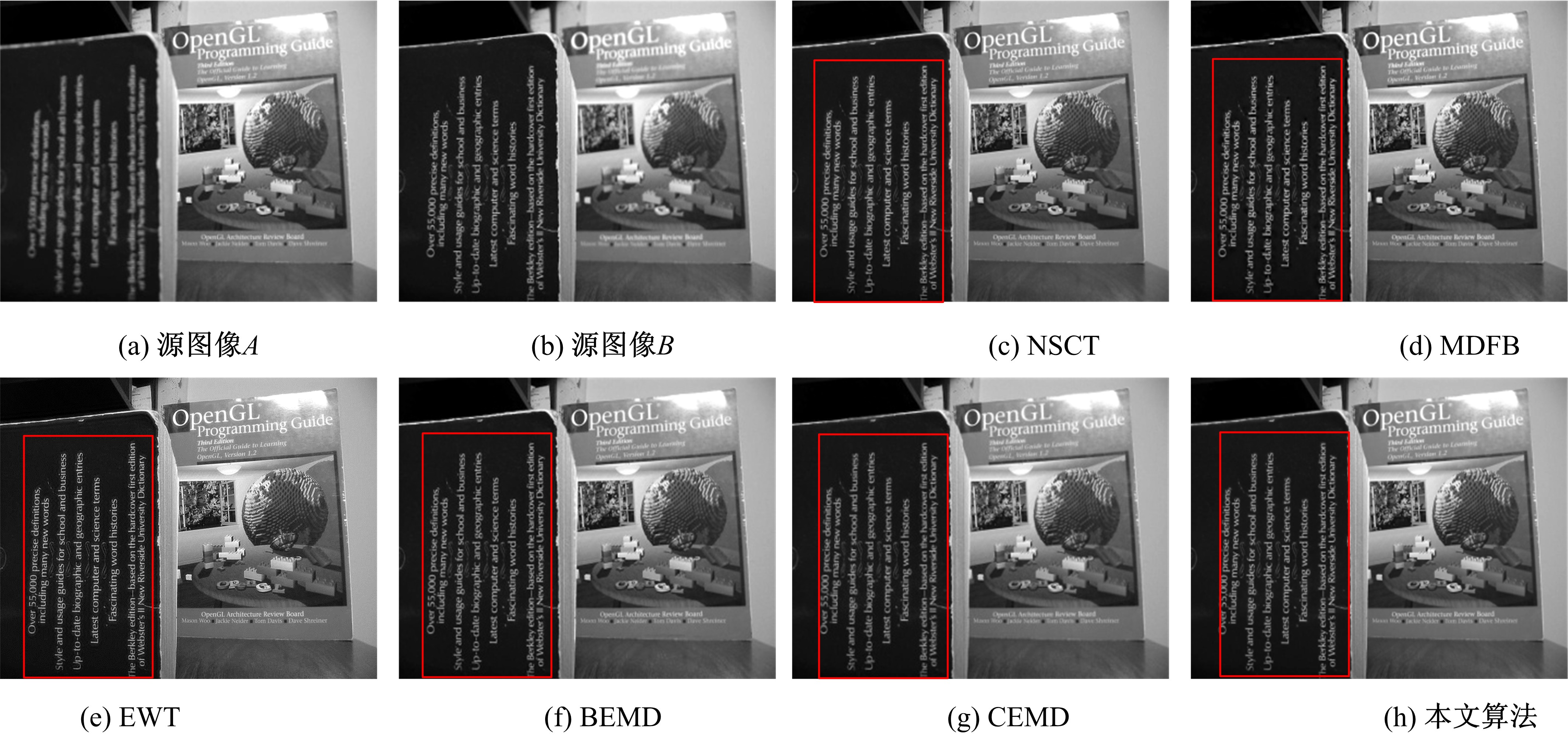

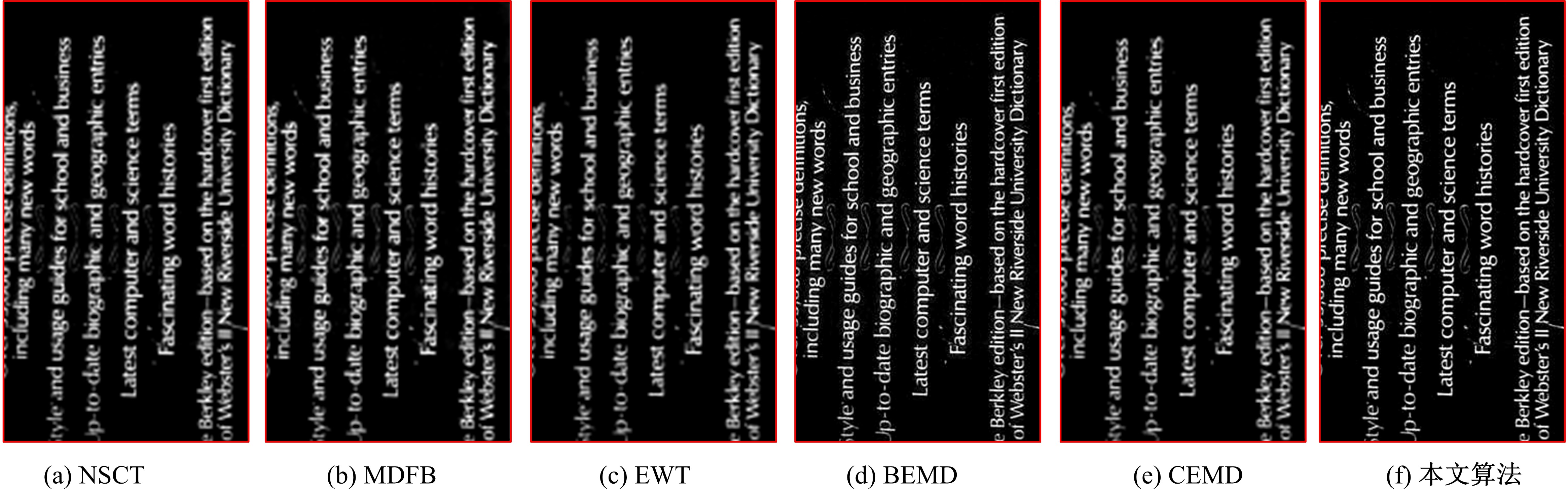

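To improve the quality of multi-focus image fusion, a fusion method based on support vector machines (SVM) and window gradient is proposed. The method first applies window-based empirical mode decomposition (WEMD) to the multi-focus images, yielding a set of intrinsic mode function components (high frequency) and a residual component (low frequency); WEMD effectively resolves the signal aliasing problem in image decomposition. Next, the output of the SVM guides the fusion of the low-frequency components, selecting the more sharply focused regions, while the window gradient contrast algorithm proposed in this paper guides the fusion of the high-frequency components, preserving the contrast of the fused image while ensuring its consistency. Finally, the fused image is obtained by the inverse WEMD transform. In experiments on 9 sets of multi-focus images, the proposed method achieves better fusion quality than 5 other methods in terms of both subjective evaluation and 5 objective evaluation metrics.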
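The abstract does not define the window gradient contrast rule, so the following is only a minimal sketch of the general idea of a windowed-gradient selection rule for two high-frequency components, not the authors' algorithm. It assumes the activity measure is the sum of absolute first differences over a square window and that the fused coefficient is taken from whichever source has the larger activity; the function names `window_gradient` and `fuse_high_frequency` and the `radius` parameter are hypothetical.

```python
import numpy as np


def window_gradient(img, radius=1):
    """Sum of |d/dx| + |d/dy| over a (2*radius+1)^2 window around each pixel.

    Assumed activity measure for illustration; the paper may define it differently.
    """
    img = np.asarray(img, dtype=np.float64)
    gy, gx = np.gradient(img)            # derivatives along rows, then columns
    activity = np.abs(gx) + np.abs(gy)
    padded = np.pad(activity, radius, mode="edge")
    h, w = activity.shape
    k = 2 * radius + 1
    out = np.zeros_like(activity)
    # Accumulate the k*k shifted copies, which is equivalent to a box-filter sum.
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out


def fuse_high_frequency(a, b, radius=1):
    """Per pixel, keep the coefficient whose neighbourhood shows more gradient activity."""
    mask = window_gradient(a, radius) >= window_gradient(b, radius)
    return np.where(mask, a, b), mask
```

Comparing activity over a window rather than at a single pixel tends to make the selection mask spatially smoother, which is one plausible reading of the consistency the abstract mentions.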

CLC number:

- TP391

