Journal of Jilin University (Engineering and Technology Edition), 2023, Vol. 53, Issue (8): 2371-2379. doi: 10.13229/j.cnki.jdxbgxb.20220005

Korean-Chinese translation quality estimation based on cross-lingual pretraining model

Ya-hui ZHAO, Fei-yu LI, Rong-yi CUI, Guo-zhe JIN, Zhen-guo ZHANG, De LI, Xiao-feng JIN

- Department of Computer Science & Technology, Yanbian University, Yanji 133002, China

CLC Number: TP391.1

| 1 | Kim H, Lee J. Predictor-estimator using multilevel task learning with stack propagation for neural quality estimation[J]. ACM Transactions on Asian and Low-resource Language Information Processing, 2017, 2: 562-568. |

| 2 | Takahashi K, Sudoh K, Nakamura S. Automatic machine translation evaluation using source language inputs and cross-lingual language model[C]∥Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Seattle, Washington, United States, 2020: 3553-3558. |

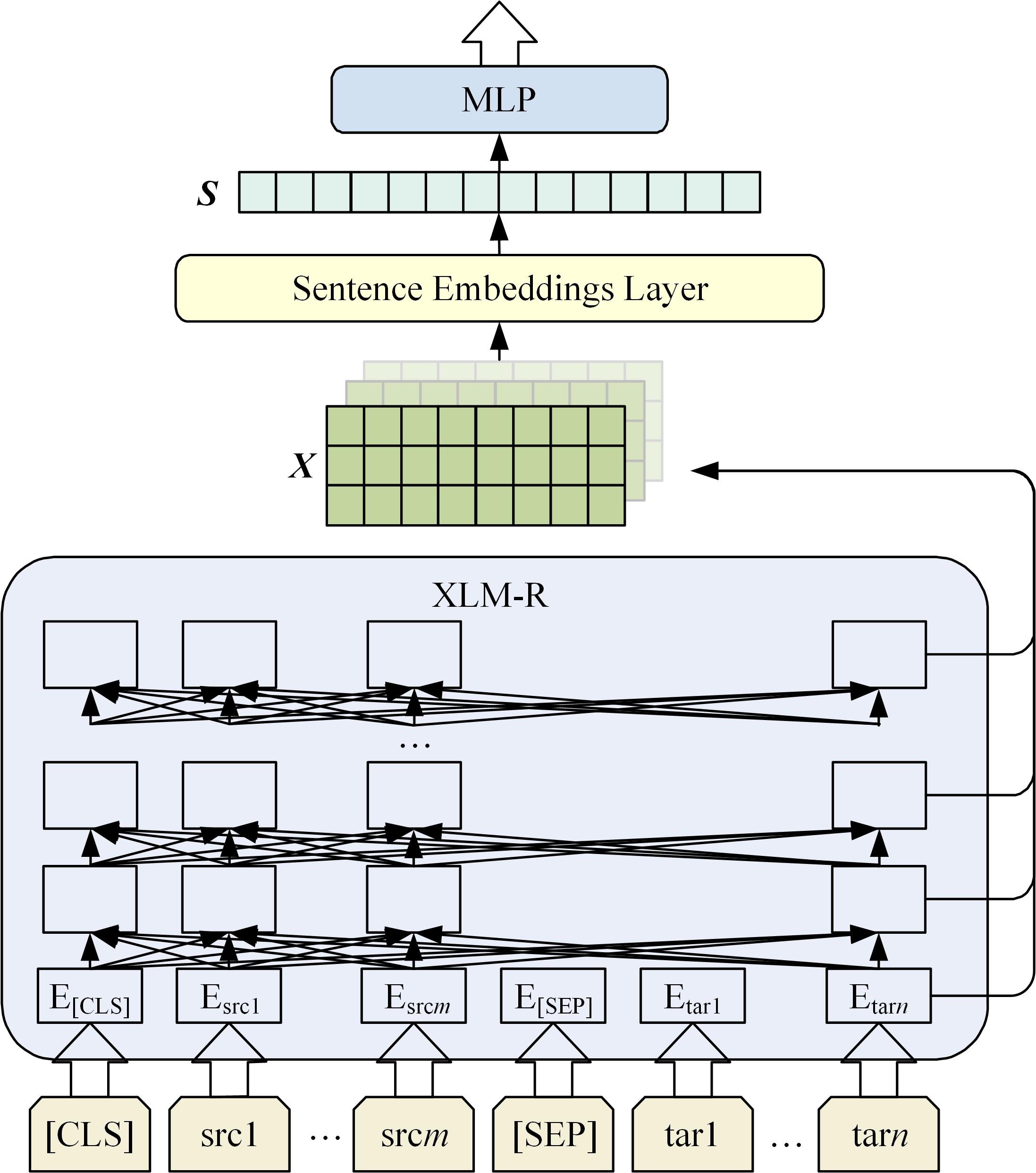

| 3 | Ranasinghe T, Orasan C, Mitkov R. TransQuest: translation quality estimation with cross-lingual transformers[C]∥Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 2020: 5070-5081. |

| 4 | Jawahar G, Sagot B, Eddah D. What does BERT learn about the structure of language? [C]∥Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 2019: 3651-3657. |

| 5 | Pires T, Schlinger E, Garrette D. How multilingual is multilingual BERT? [C]∥Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, Florence, Italy, 2019: 4996-5001. |

| 6 | Specia L, Shah K, Desouza J, et al. QuEst: a translation quality estimation framework[C]∥Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics: System Demonstrations, Sofia, Bulgaria, 2013: 79-84. |

| 7 | Fan K, Wang J Y, Li B, et al. "Bilingual Expert" can find translation errors[C]∥Proceedings of the 33rd AAAI Conference on Artificial Intelligence, Phoenix, United States, 2019: 6367-6374. |

| 8 | Liu Y H, Ott M, Goyal N, et al. RoBERTa: a robustly optimized bert pretraining approach[C]∥Proceedings of the 2020 International Conference on Learning Representations, Addis Ababa, Ethiopia, 2020: 1-15. |

| 9 | Devlin J, Chang M, Lee K, et al. BERT: pre-training of deep bidirectional transformers for language understanding[C]∥Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, United States, 2019: 4171-4186. |

| 10 | Conneau A, Lample G. Cross-lingual language model pretraining[J]. Advances in Neural Information Processing Systems, 2019, 32: 7059-7069. |

| 11 | Conneau A, Khandelwal K, Goyal N, et al. Unsupervised cross-lingual representation learning at scale[C]∥Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Seattle, Washington, United States, 2020: 8840-8451. |

| 12 | Arora S, Liang Y Y, Ma T Y. A simple but tough-to-beat baseline for sentence embeddings[C]∥Proceedings of the 5th International Conference on Learning Representations, Toulon, France, 2017: 1-16. |

| 13 | Conneau A, Kiela D, Schwenk H, et al. Supervised learning of universal sentence representations from natural language inference data[C]∥Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 2017: 670-680. |

| 14 | Logeswaran L, Lee H. An efficient framework for learning sentence representations[C]∥Proceedings of the 6th International Conference on Learning Representations, Pennsylvania, United States, 2018: 1-16. |

| 15 | Reimers N, Gurevych I, Reimers N, et al. Sentence-BERT: sentence embeddings using siamese BERT-networks[C]∥Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing, Hong Kong, China, 2019: 3982-3992. |

| 16 | Wang B, Kuo J. SBERT-WK: a sentence embedding method by dissecting BERT-based word models[J]. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 2020, 28: 2146-2157. |

| 17 | Hu J, Shen L, Sun G. Squeeze-and-excitation networks[C]∥Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, United States, 2018: 7132-7141. |

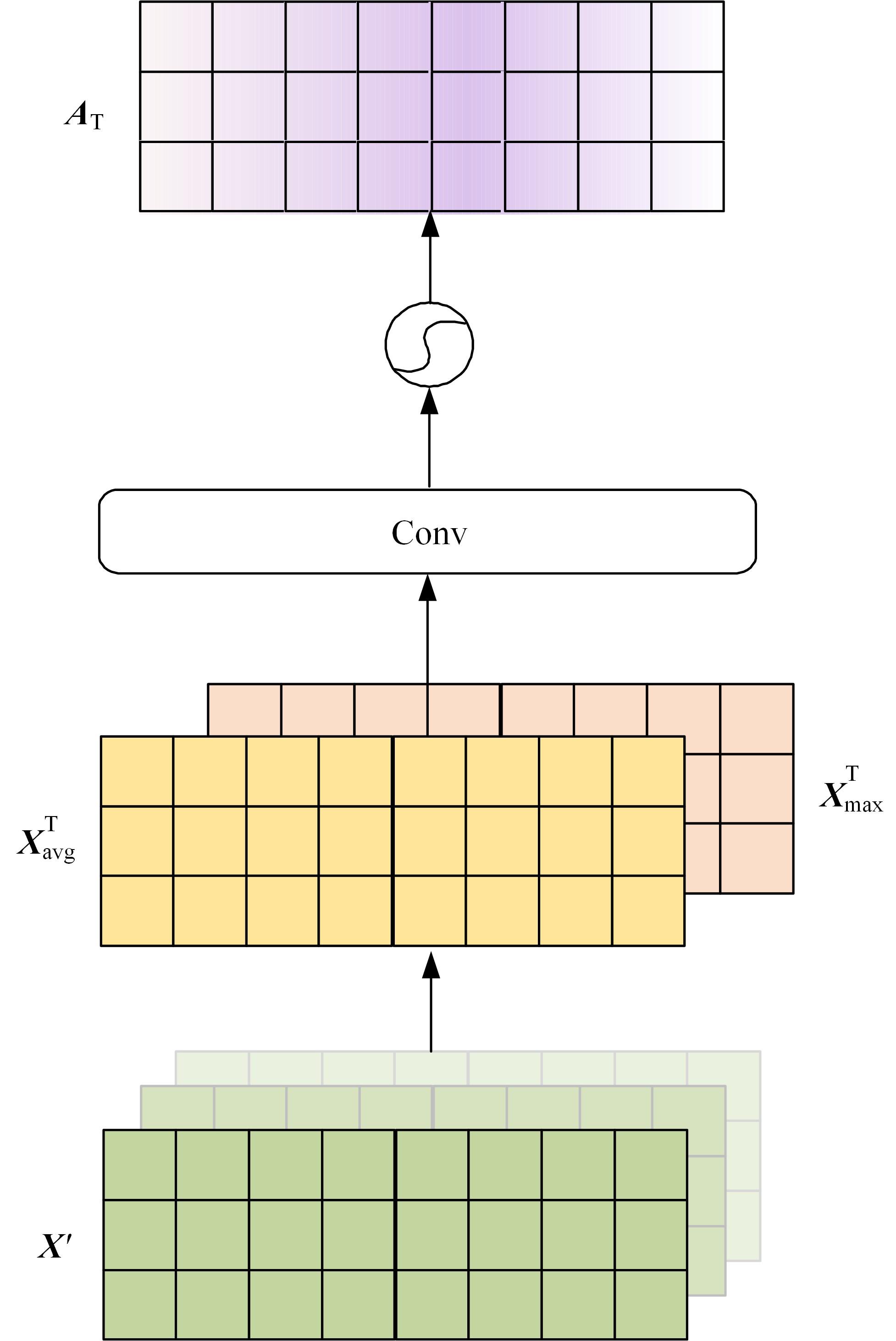

| 18 | Woo S, Park J, Lee J Y, et al. CBAM: convolutional block attention module[C]∥Proceedings of the European Conference on Computer Vision, Antibes, France, 2018: 3-19. |

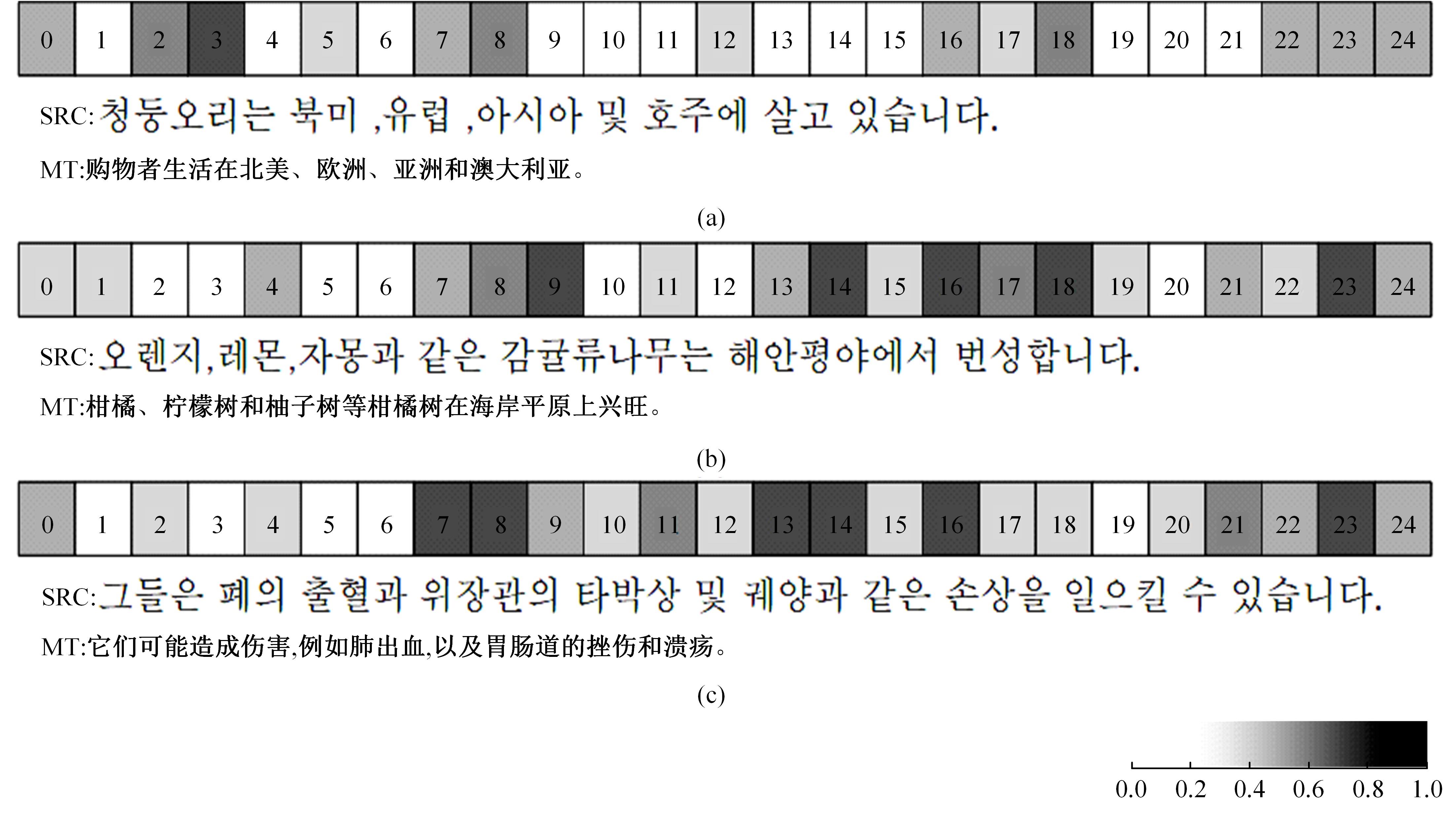

| 19 | 赵亚慧, 杨飞扬, 张振国, 等. 基于强化学习和注意力机制的朝鲜语文本结构发现[J]. 吉林大学学报: 工学版, 2021, 51(4): 1387-1395. |

| Zhao Ya-hui, Yang Fei-yang, Zhang Zhen-guo, et al. Korean text structure discovery based on reinforcement learning and attention mechanism[J]. Journal of Jilin University(Engineering and Technology Edition), 2021, 51(4): 1387-1395. | |

| 20 | 李健, 熊琦, 胡雅婷, 等. 基于Transformer和隐马尔科夫模型的中文命名实体识别方法[J]. 吉林大学学报: 工学版,2023, 53(5): 1427-1434. |

| Li Jian, Xiong Qi, Hu Ya-ting, et al. Chinese named entity recognition method based on transformer and hidden markov model[J]. Journal of Jilin University(Engineering and Technology Edition), 2023, 53(5): 1427-1434. |
