Journal of Jilin University (Engineering and Technology Edition), 2025, Vol. 55, Issue (1): 185-197. doi: 10.13229/j.cnki.jdxbgxb.20230321

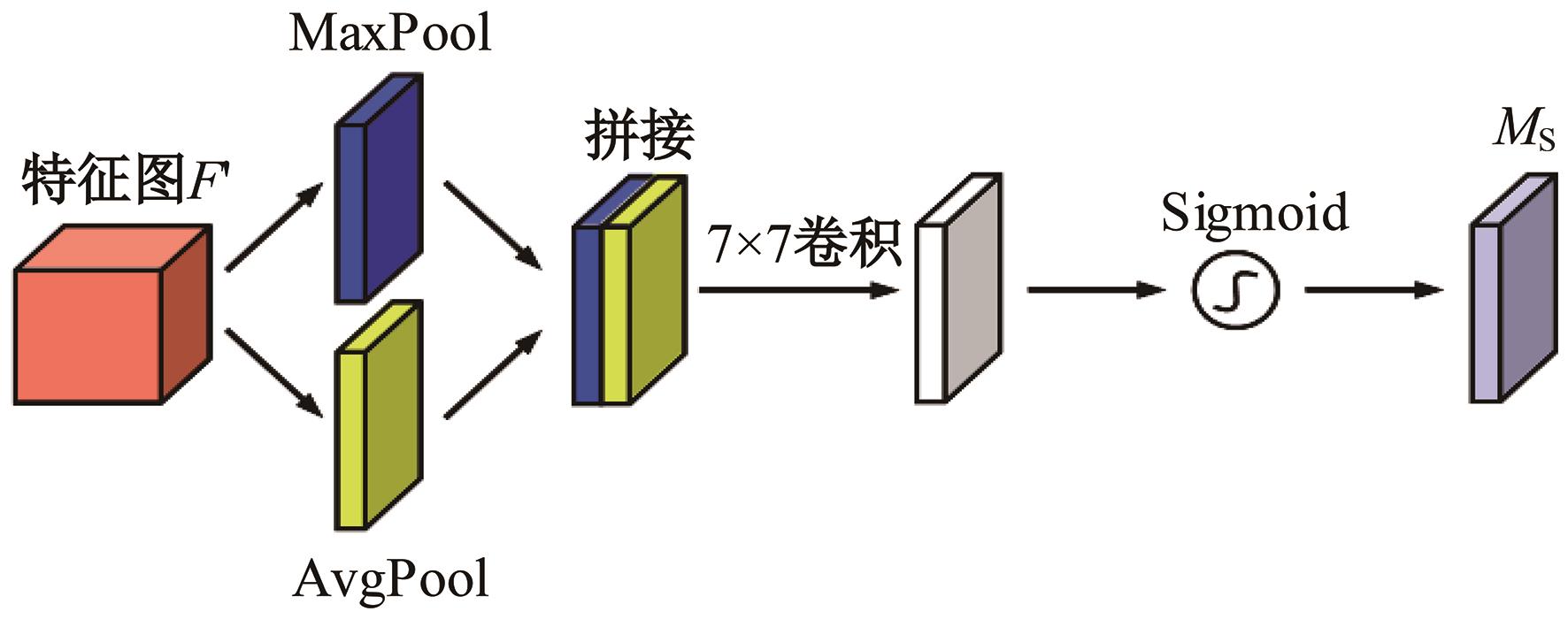

Vehicle target detection and ranging in vehicle image based on deep learning

Hui-zhi XU, Shi-sen JIANG, Xiu-qing WANG, Shuang CHEN

- School of Civil Engineering and Transportation, Northeast Forestry University, Harbin 150040, China

CLC Number:

- U495

| 1 | Liu Yi-fei, Hu Xue-min, Chen Guo-wen, et al. A review of end-to-end autonomous driving motion planning with visual perception[J]. Journal of Image and Graphics, 2021, 26(1): 49-66. |

| 2 | Yuan Xiao-pei, Chen Xiao-feng, Lian Ming. A multi-feature fusion target detection algorithm based on Haar-like and LBP[J]. Computer Science, 2021, 48(11): 219-225. |

| 3 | Lienhart R, Maydt J. An extended set of Haar-like features for rapid object detection[C]∥The IEEE International Conference on Image Processing, New York, USA, 2002: 900-903. |

| 4 | Xin Yuan-xue, Shi Peng-fei, Xue Rui-yang. Moving target detection based on region extraction and improved LBP features[J]. Computer Science, 2021, 48(7): 233-237. |

| 5 | Duan J, Ye H, Zhao H, et al. Deep cascade AdaBoost with unsupervised clustering in autonomous vehicles[J]. Electronics, 2022, 12(1): No. 12010044. |

| 6 | Yang J, Jun Z. Improved HOG feature vehicle recognition algorithm based on sliding window[J]. Journal of Physics: Conference Series, 2020, 1627(1): No. 012013. |

| 7 | Geng Qing-tian, Yu Fan-hua, Wang Yu-ting, et al. A new algorithm for vehicle type detection based on feature fusion[J]. Journal of Jilin University (Engineering and Technology Edition), 2018, 48(3): 929-935. |

| 8 | Gao Ming-hua, Yang Can. An improved convolutional neural network based traffic target detection method[J]. Journal of Jilin University (Engineering and Technology Edition), 2022, 52(6): 1353-1361. |

| 9 | Redmon J, Farhadi A. YOLOv3: an incremental improvement[DB/OL]. [2023-03-22]. |

| 10 | Yue Z, Wei Z, Nan L. A novel detection method using YOLOv5 for vehicle target under complex situation[J]. Traitement du Signal, 2022, 39(4): 1153-1158. |

| 11 | Guan X, Meng Y, Meng W, et al. Research on highway vehicle detection based on faster R-CNN and domain adaptation[J]. Applied Intelligence, 2022, 52(4): 3483-3498. |

| 12 | Ren S, He K, Girshick R, et al. Faster R-CNN: towards real-time object detection with region proposal networks[J]. Advances in Neural Information Processing Systems, 2015, 28: 91-99. |

| 13 | Mescheder L, Oechsle M, Niemeyer M, et al. Occupancy networks: learning 3D reconstruction in function space[C]∥Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, USA, 2019: 4460-4470. |

| 14 | Huang Xiao-ci, Shu Fang-lin, Cao Wen-guan. Research on forward vehicle ranging method based on binocular vision[J]. Automotive Technology, 2021(12): 16-21. |

| 15 | Hou A L, Chen J, Jin Z J, et al. Binocular vision measurement of distance based on vehicle logo location[J]. Applied Mechanics and Materials, 2012, 229(1): 1154-1157. |

| 16 | Ho H W, Croon G D, Chu Q P. Distance and velocity estimation using optical flow from a monocular camera[J]. International Journal of Micro Air Vehicles, 2017, 9(3): 198-208. |

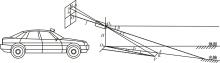

| 17 | Gao Wei-yue, Chen Yu-tuo, Liu Yang, et al. A monocular vision-based approach to vehicle forward obstacle ranging[J]. Computer Engineering and Design, 2022, 43(4): 1016-1022. |

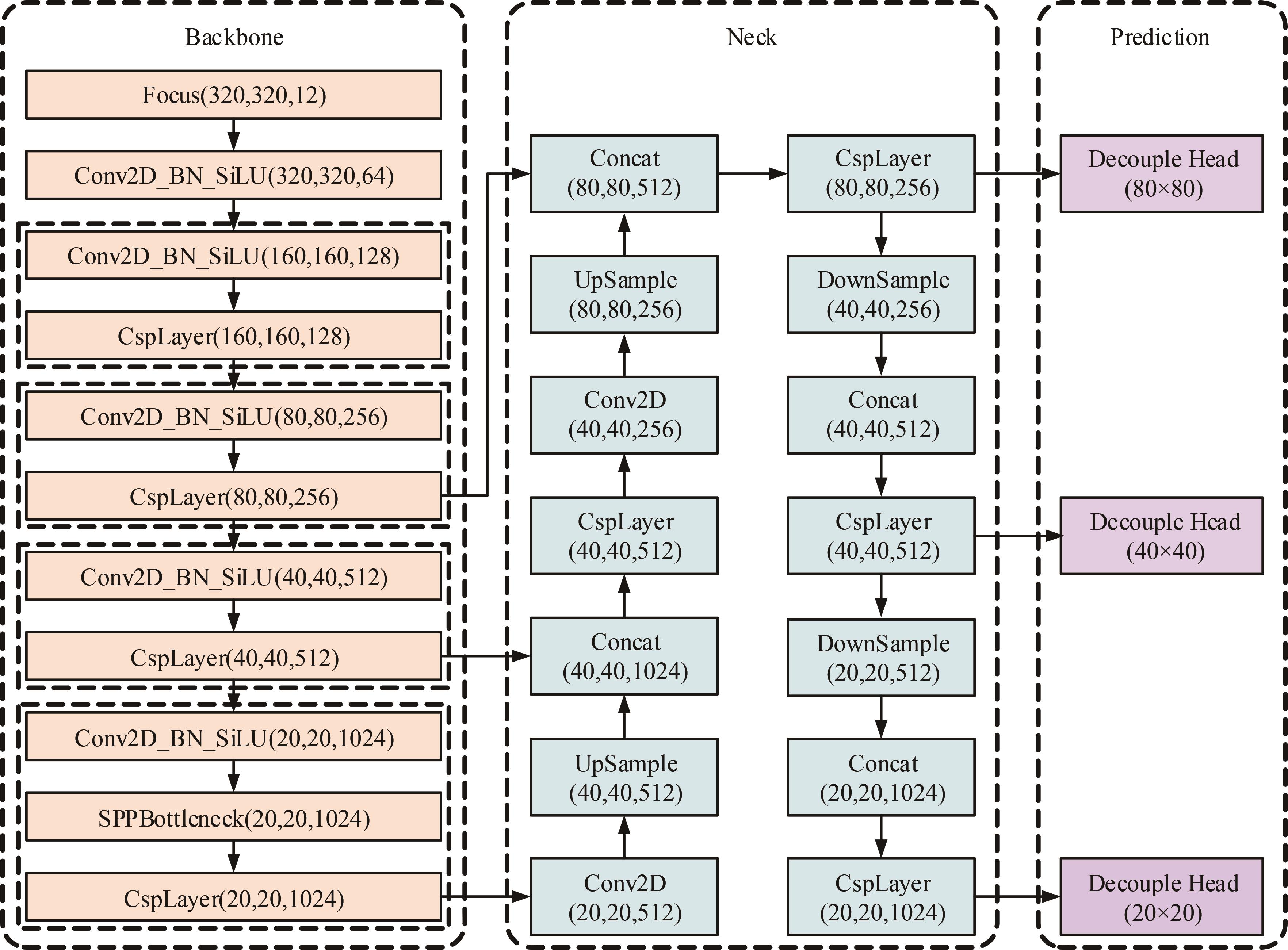

| 18 | Ge Z, Liu S, Wang F, et al. YOLOX: exceeding YOLO series in 2021[DB/OL]. [2023-01-10]. |

| 19 | He K M, Zhang X Y, Ren S Q, et al. Spatial pyramid pooling in deep convolutional networks for visual recognition[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2015, 37(9): 1904-1916. |

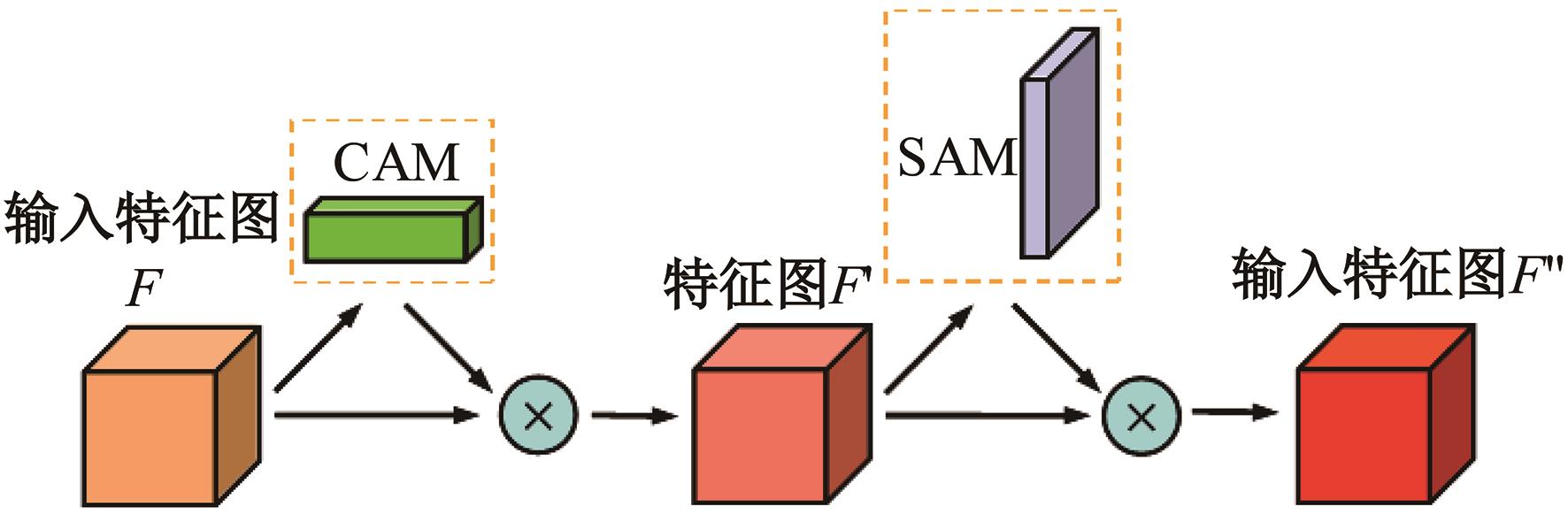

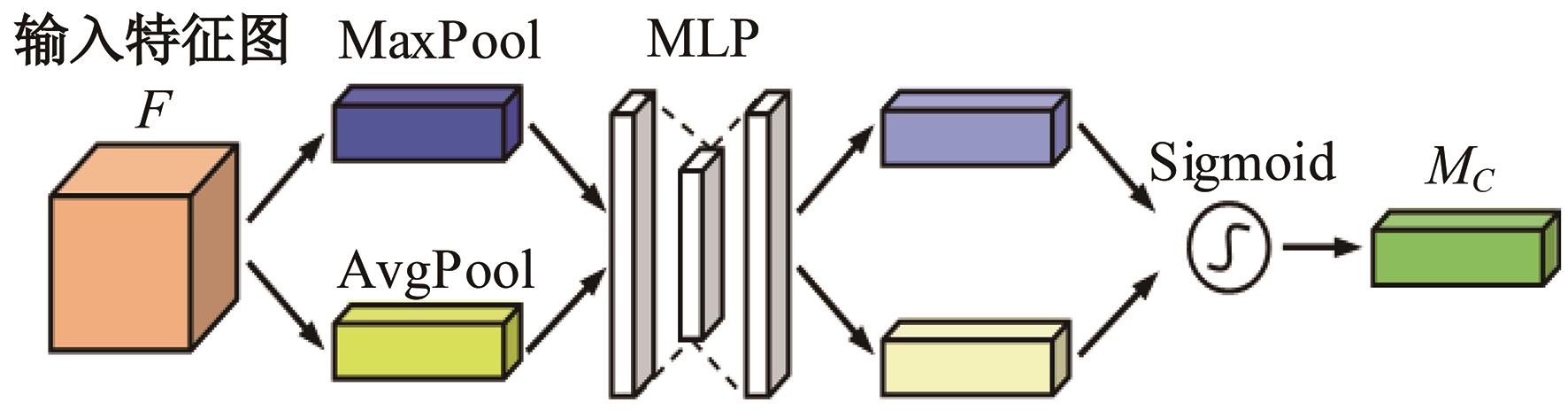

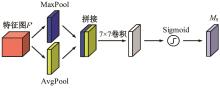

| 20 | Woo S, Park J, Lee J Y, et al. CBAM: convolutional block attention module[C]∥Proceedings of the European Conference on Computer Vision. Munich: Springer, 2018: 3-19. |

| 21 | Lin T, Goyal P, Girshick R, et al. Focal loss for dense object detection[C]∥IEEE International Conference on Computer Vision, Venice, Italy, 2017: 2980-2988. |

| 22 | Liu Jia-tao, Zhang Ya-ping, Yang Yu-wei. Efficient monocular image depth estimation based on transfer learning[J]. Laser & Optoelectronics Progress, 2022, 59(16): 236-244. |

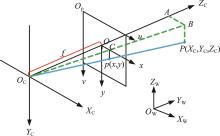

| 23 | Peng Yan, Guo Jun-bin, Yu Chuan-qiang, et al. A high-precision camera calibration method based on planar transformation[J]. Journal of Beijing University of Aeronautics and Astronautics, 2022, 48(7): 1297-1303. |

