Journal of Jilin University (Engineering and Technology Edition) ›› 2021, Vol. 51 ›› Issue (3): 989-995. doi: 10.13229/j.cnki.jdxbgxb20200640

Chinese named entity recognition based on Transformer encoder

Xiao-ran GUO1, Ping LUO2, Wei-lan WANG3

- 1.School of Mathematics and Computer Science, Northwest Minzu University, Lanzhou 730030, China

2.School of Electronic and Information Engineering, Lanzhou Jiaotong University, Lanzhou 730070, China

3.Key Laboratory of China's Ethnic Languages and Information Technology, Ministry of Education, Northwest Minzu University, Lanzhou 730030, China

Abstract:

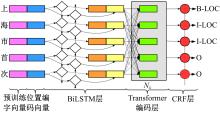

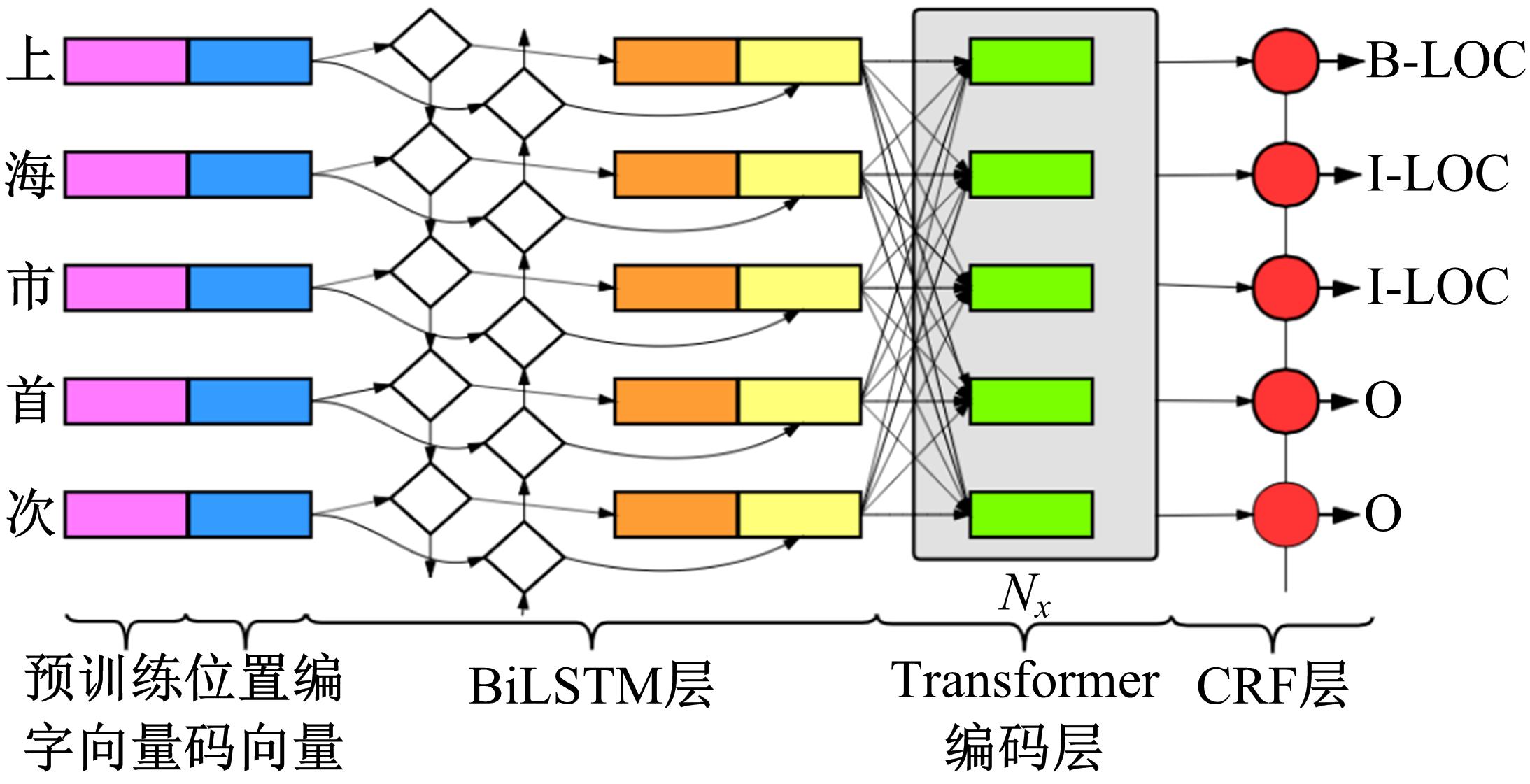

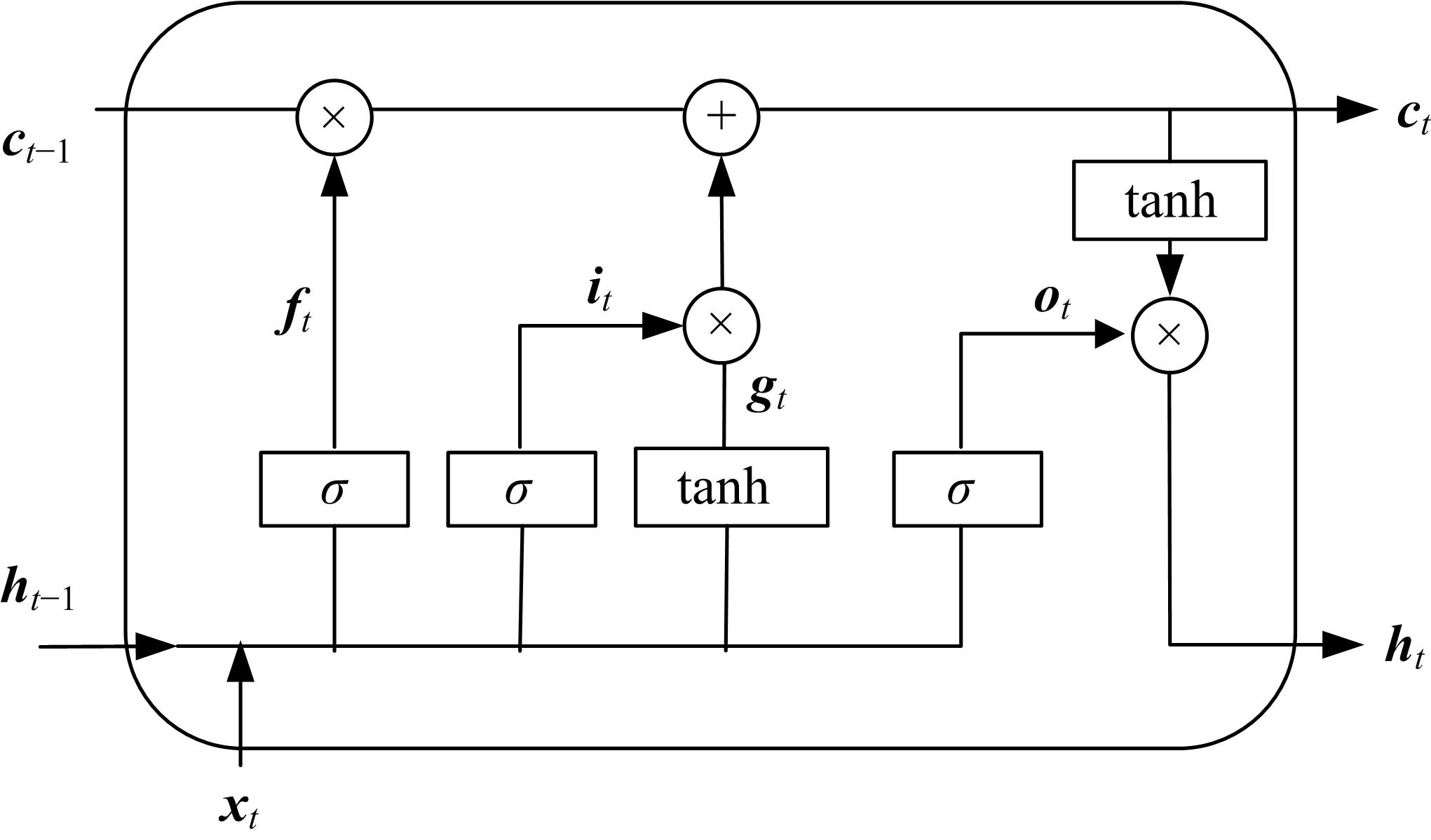

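This paper proposes a character-level Chinese named entity recognition method based on a Transformer encoder and BiLSTM. Character vectors are concatenated with positional encoding vectors to form joint vectors that serve as the character representation layer, which avoids losing either the character-vector information or the position information. A BiLSTM then adds directional information to the joint vectors, and a Transformer encoder is introduced to further extract features of the relationships between characters. Experimental results show that the method achieves F1 scores of 81.39% and 86.99% on the MSRA dataset and the Thangka dataset, respectively, effectively improving Chinese named entity recognition.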

CLC number:

- TP391
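
The joint character representation described in the abstract, where each character vector is concatenated with a positional encoding vector, can be illustrated with a minimal sketch. The abstract does not say which positional encoding is used, so the sinusoidal form from the original Transformer is assumed here. The function names `sinusoidal_position` and `joint_vectors`, the toy dimensions, and the toy values are all hypothetical and not taken from the paper.

```python
import math


def sinusoidal_position(pos, dim):
    """Sinusoidal positional encoding for one position (assumed form; dim must be even)."""
    vec = []
    for i in range(0, dim, 2):
        # Sine and cosine of the same angle share one frequency per pair.
        angle = pos / (10000 ** (i / dim))
        vec.extend([math.sin(angle), math.cos(angle)])
    return vec


def joint_vectors(char_vectors, pos_dim):
    """Concatenate each character vector with the encoding of its position."""
    return [vec + sinusoidal_position(pos, pos_dim)
            for pos, vec in enumerate(char_vectors)]


# Toy 3-dimensional character vectors for a 2-character input (made-up values).
chars = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]
joint = joint_vectors(chars, pos_dim=4)

# Each joint vector has 3 + 4 = 7 components; the first 3 are the untouched
# character vector and the last 4 carry only position information.
print(len(joint), len(joint[0]))
```

Concatenation keeps the character components and the position components in separate dimensions, unlike element-wise addition, which mixes the two signals in the same dimensions. This is consistent with the abstract's claim that neither kind of information is lost. In the described method these joint vectors would then be passed to the BiLSTM and the Transformer encoder, which this sketch does not cover.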
