Journal of Jilin University (Engineering and Technology Edition), 2024, Vol. 54, Issue (12): 3423-3432. doi: 10.13229/j.cnki.jdxbgxb.20230087

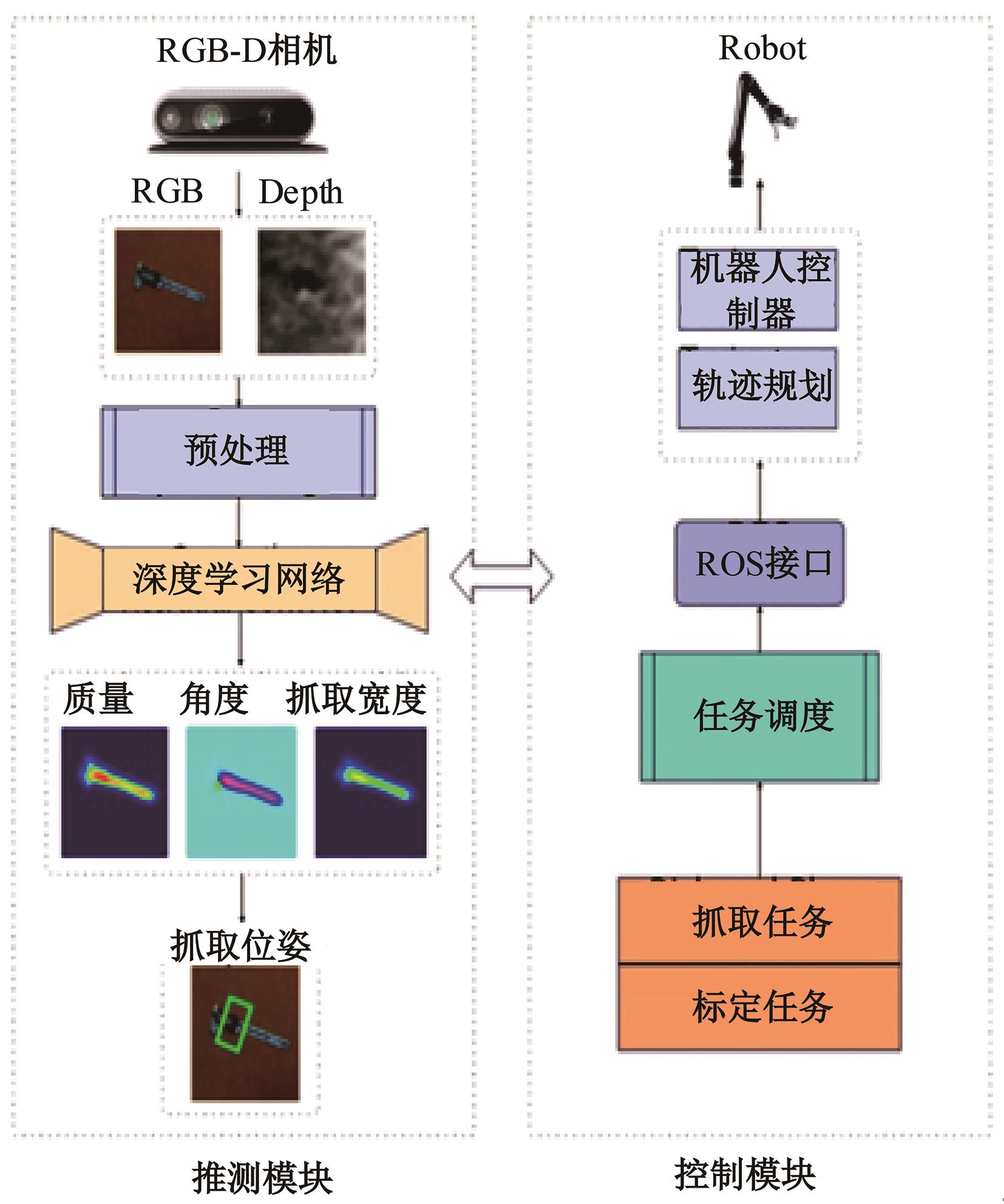

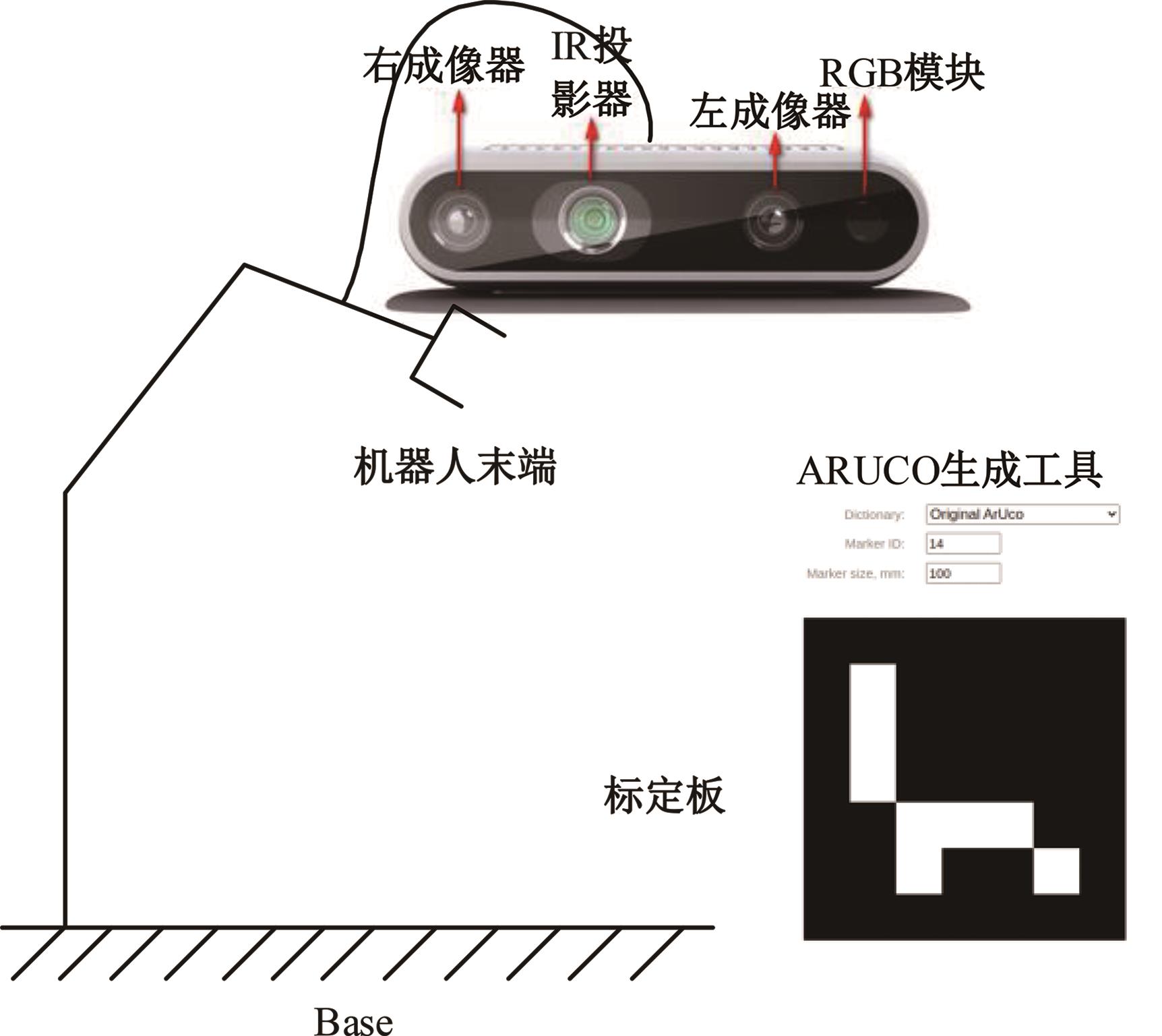

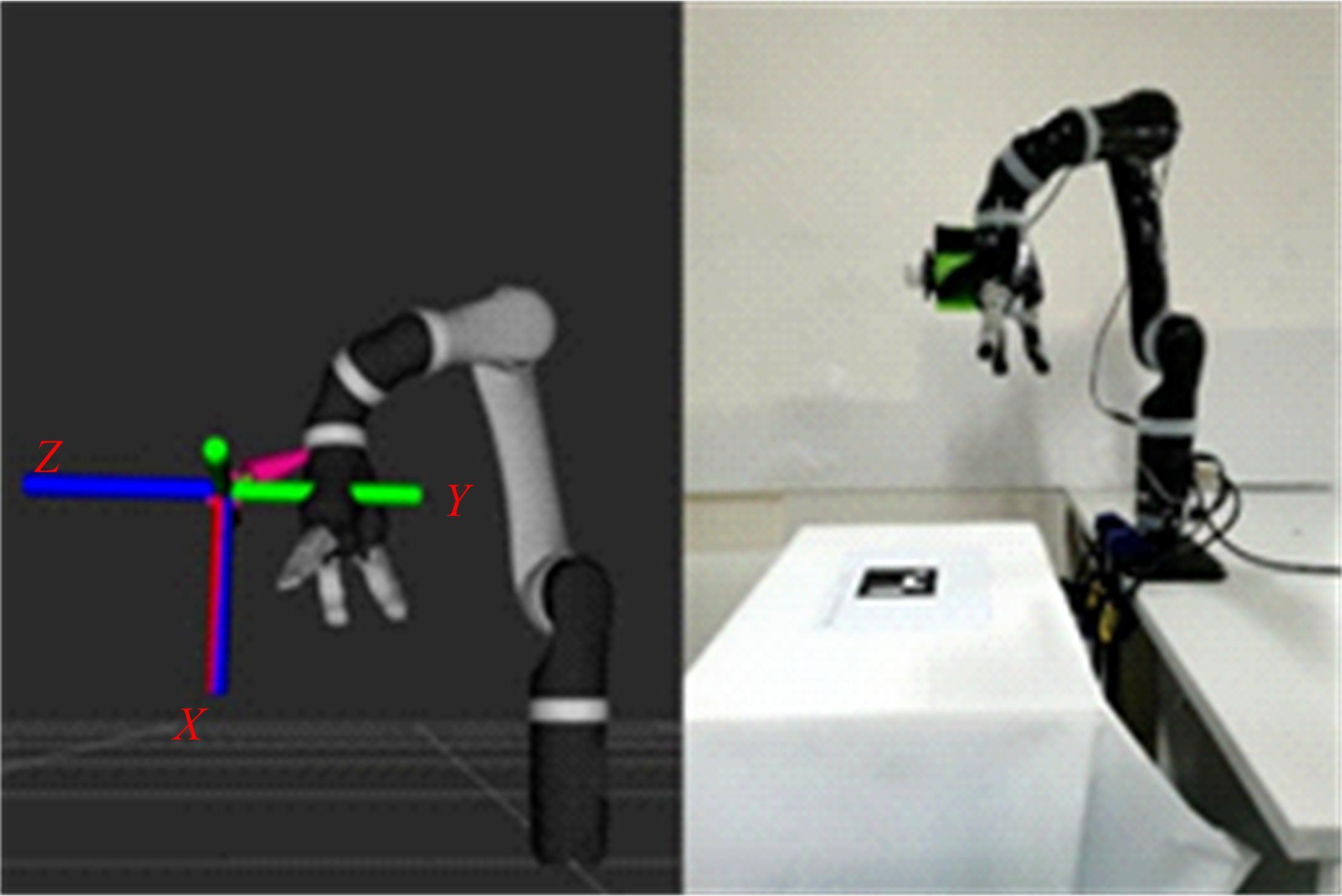

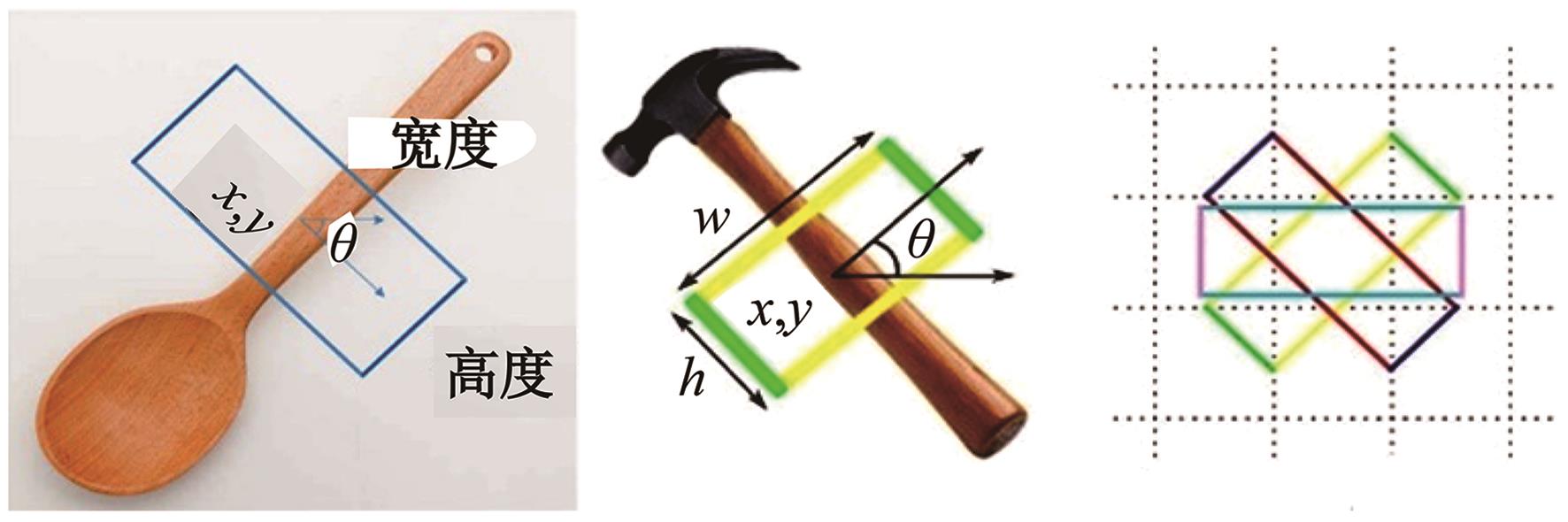

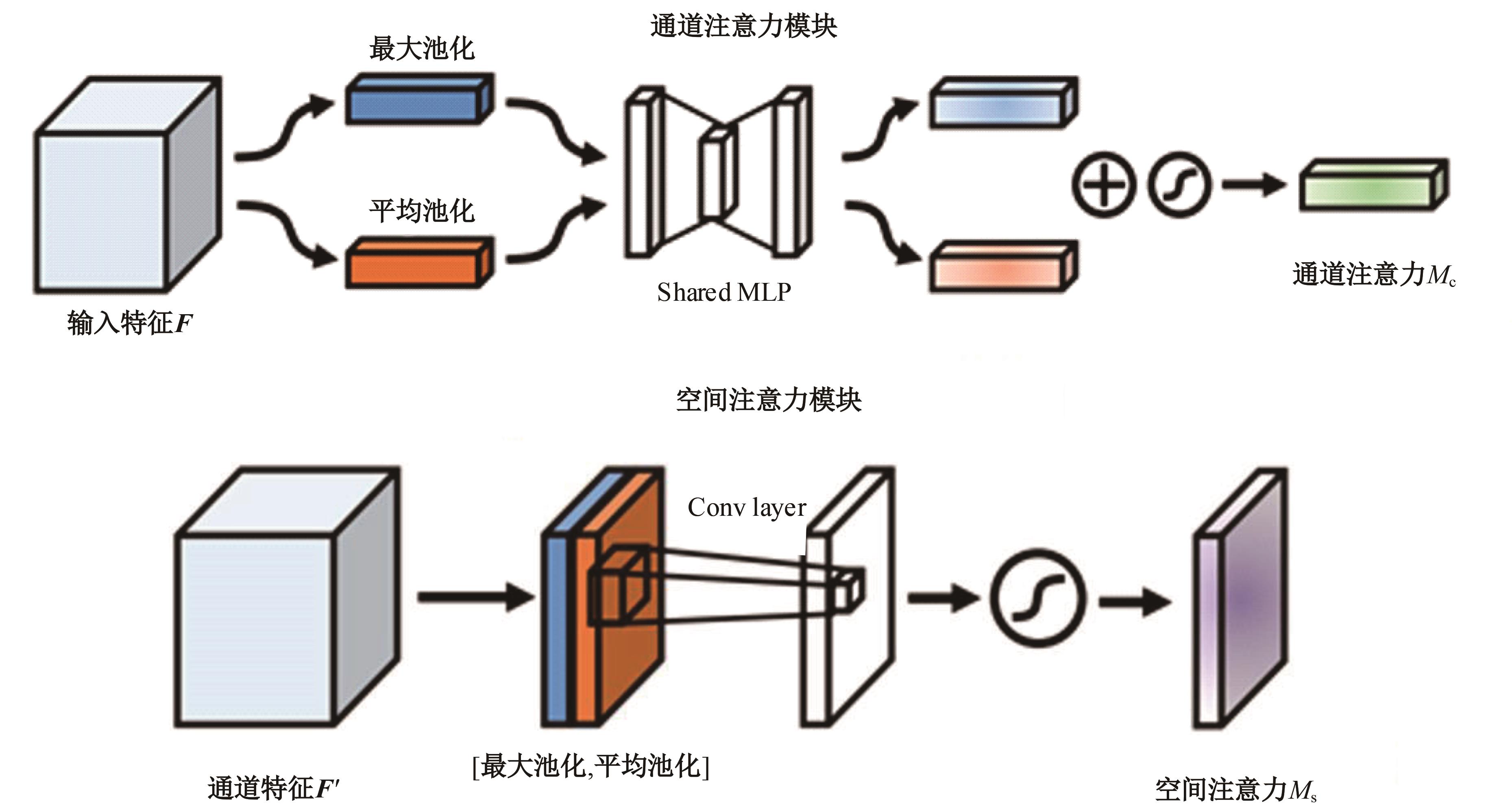

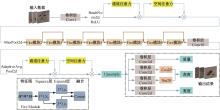

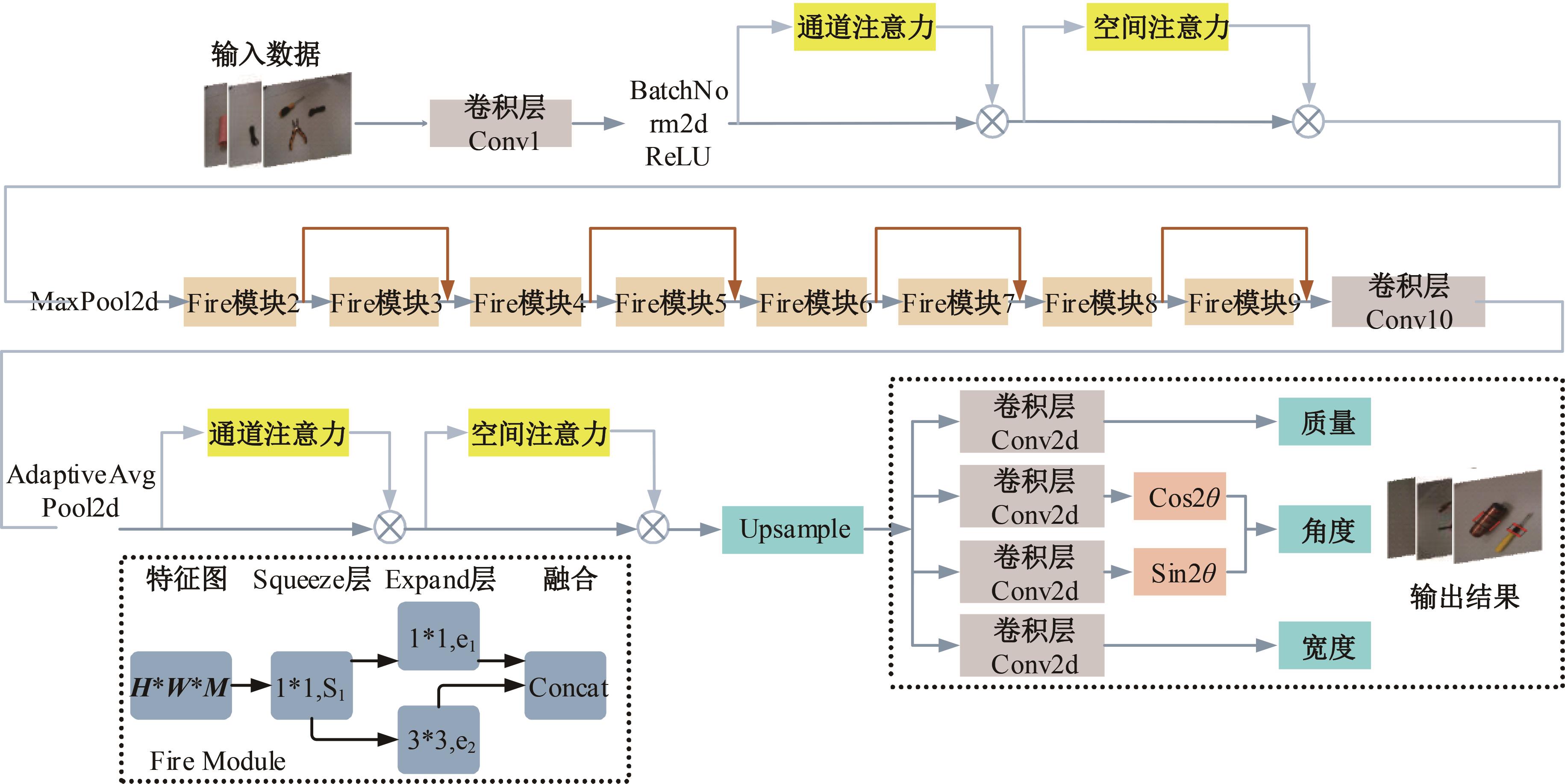

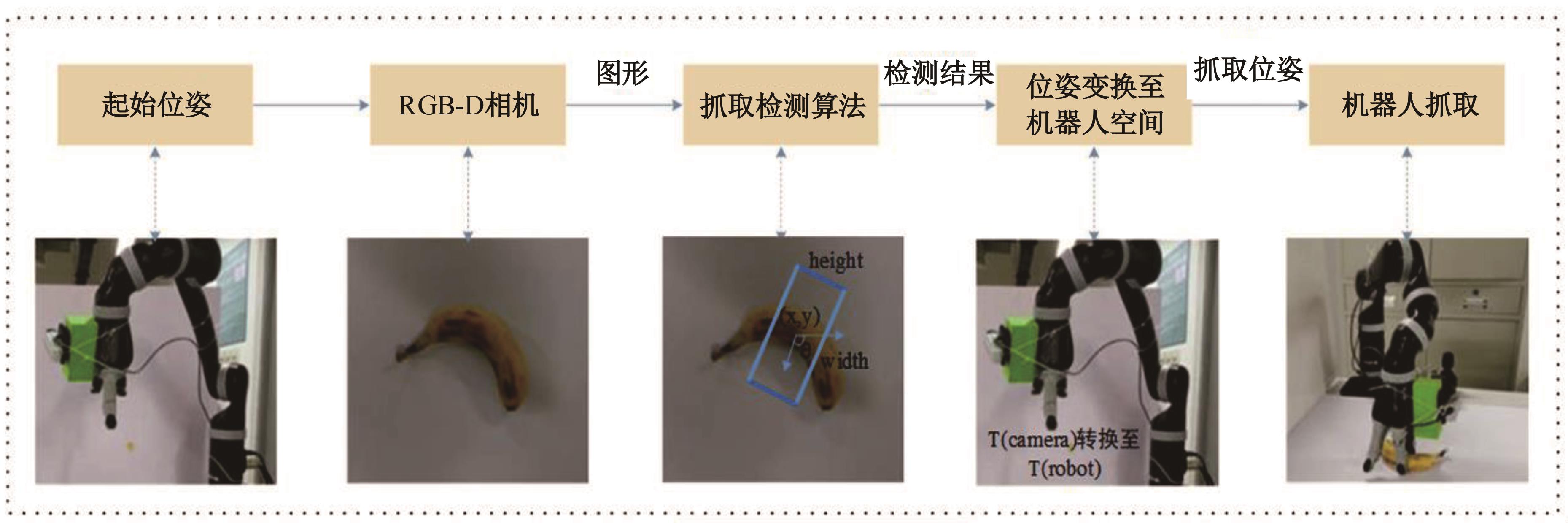

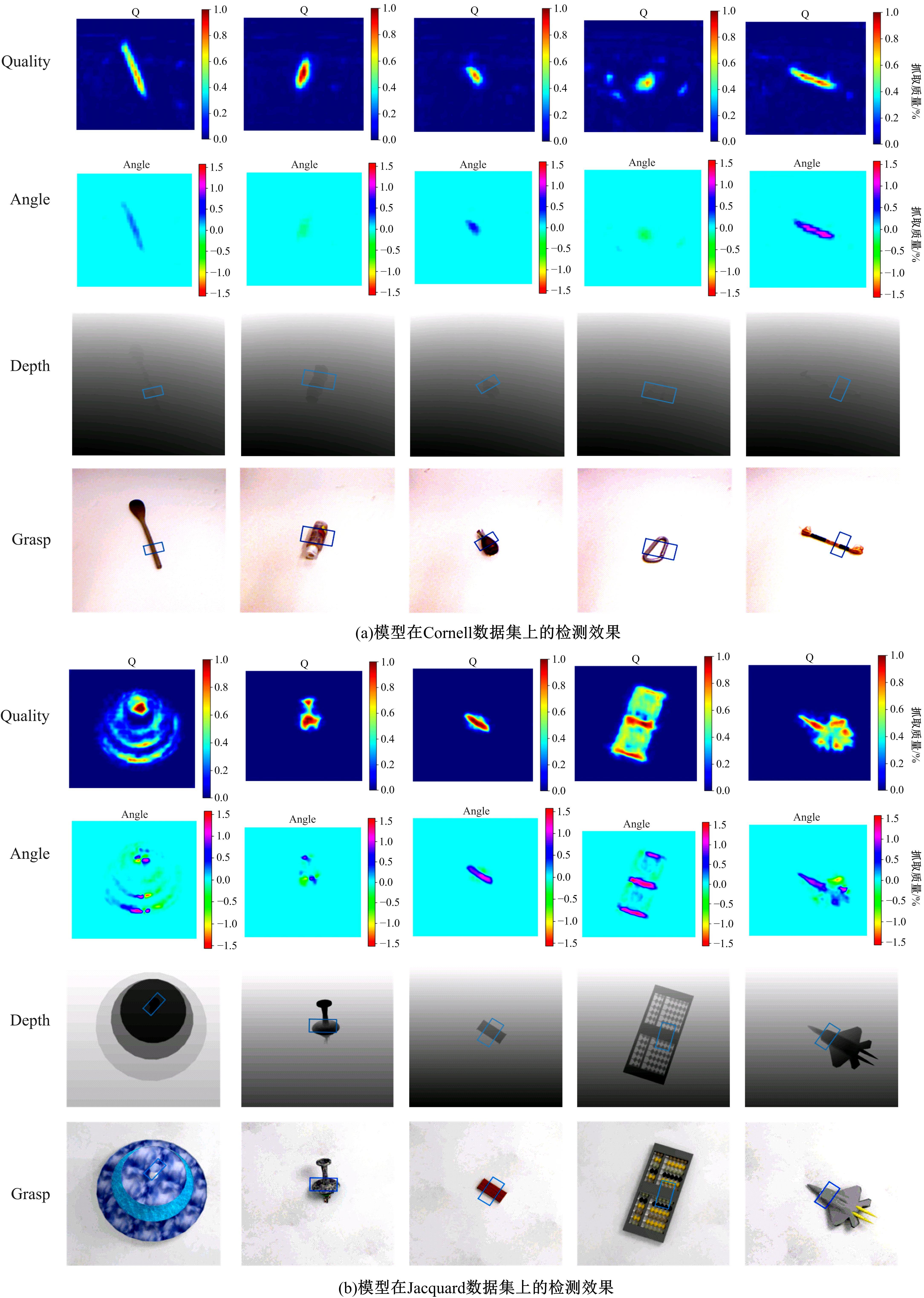

Target grasping network technology of robot manipulator based on attention mechanism

Bin ZHAO<sup>1,2,3</sup>, Cheng-dong WU<sup>1,3</sup>, Xue-jiao ZHANG<sup>3</sup>, Ruo-huai SUN<sup>1</sup>, Yang JIANG<sup>3</sup>

1. College of Information Science and Engineering, Northeastern University, Shenyang 110819, China
2. SIASUN Robot & Automation Co., Ltd., Shenyang 110168, China
3. Faculty of Robot Science and Engineering, Northeastern University, Shenyang 110169, China

CLC Number: TP242.6
