Journal of Jilin University (Engineering and Technology Edition), 2021, Vol. 51, Issue (4): 1387-1395. doi: 10.13229/j.cnki.jdxbgxb20200358

Korean text structure discovery based on reinforcement learning and attention mechanism

Ya-hui ZHAO, Fei-yang YANG, Zhen-guo ZHANG, Rong-yi CUI

- Department of Computer Science & Technology, Yanbian University, Yanji 133002, China

CLC Number:

- TP391.1

| 1 | Bengio Y, Courville A,Vincent P. Representation learning: a review and new perspectives[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence,2013,35(8):1798-1828. |

| 2 | Socher R, Pennington J, Huang E H. Semi-supervised recursive auto encoders for predicting sentiment distributions[C]∥The Conference on Empirical Methods in Natural Language Processing, Edinburgh, UK, 2011, 151-161. |

| 3 | 毕玉德.朝鲜语自然语言处理研究管窥[J].中文信息学报, 2011,25(6):166-169, 182. |

| Bi Yu-de. A research on Korean natural language processing[J]. Journal of Chinese Information Processing, 2011,25 (6): 166-169, 182. | |

| 4 | Joulin A, Grave E, Bojanowski P. Bag of tricks for efficient text classification[C]∥The European Chapter of the ACL, Valencia, Spain, 2017:427-431. |

| 5 | Kim Y. Convolutional neural networks for sentence classification[C]∥The Conference on Empirical Methods in Natural Language Processing, Doha, Qatar,2014:1746-1751. |

| 6 | Zhu X, Sobihani P, Guo H. Long short-term memory over recursive structures[C]∥International Conference on Machine Learning, Miami, Florida, USA,2015:1604-1612. |

| 7 | Yang Z, Yang D, Dyer C. Hierarchical attention networks for document classification[C]∥Annual Conference of the North American Chapter of the Association for Computational Linguistics, San Diego,USA,2016:1480-1489. |

| 8 | Yogatama D, Blunsom P, Dyer C. Learning to compose words into sentences with reinforcement learning[C]∥ International Conference on Learning Representations,Toulon, France, 2017. |

| 9 | Chung J, Gulcehre C, Cho K. Empirical evaluation of gated recurrent neural networks on sequence modeling[C]∥NIPS, Montreal, Canada, 2014. |

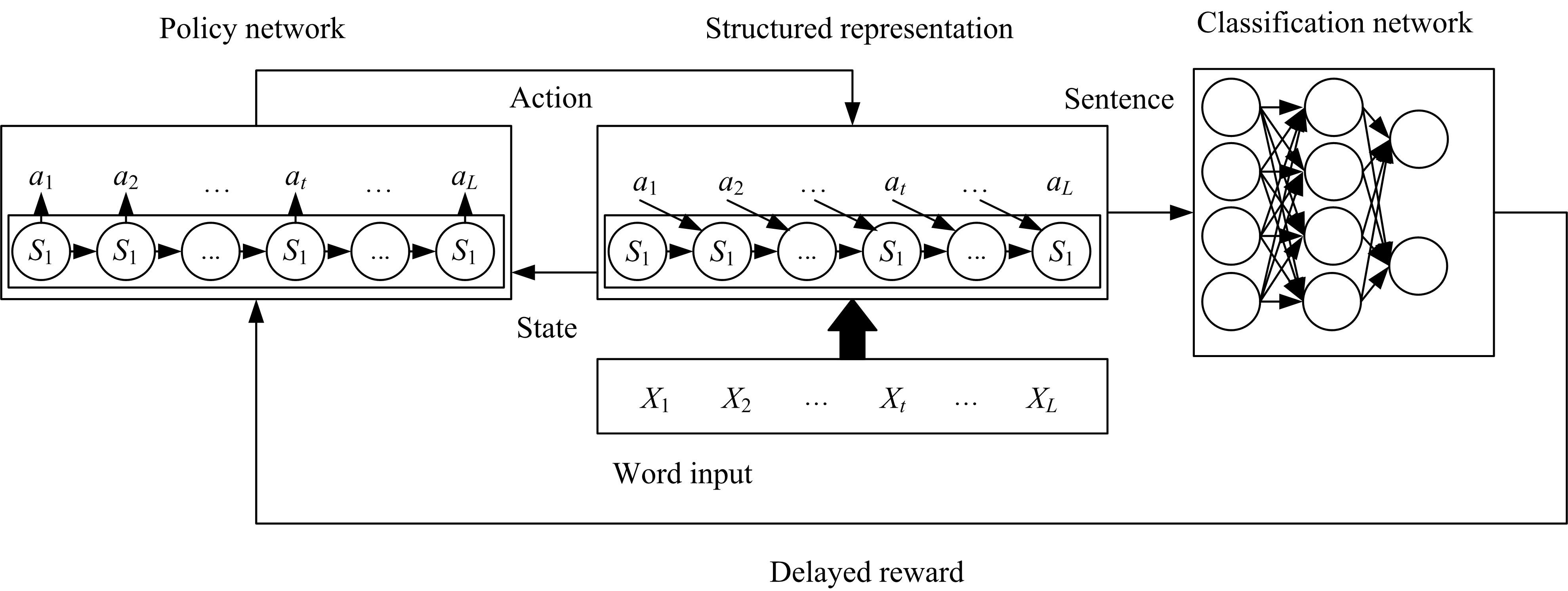

| 10 | Zhang Tian-yang, Huang Min-lie, Zhao Li. Learning structured representation for text classification via reinforcement learning[C]∥The Association for the Advance of Artificial Intelligence, New Orleans,USA, 2018:6053-6060. |

| 11 | Williams R J. Simple statistical gradient-following algorithms for connectionist reinforcement learning[J]. Machine Learning, 1992,2(3/4):229-256. |

| 12 | Keneshloo Y, Ramakrishnan N, Reddy C K. Deep transfer reinforcement learning for text summarization[J]. Society for Industrial and Applied Mathematics, 2019, arxiv:. |

| 13 | Sutton R S, McAllester D A, Singh S P. Policy gradient methods for reinforcement learning with function approximation[C]∥NIPS, Denver, USA,2000:1057-1063. |

| 14 | Graves A, Schmidhuber Jürgen. Frame wise phoneme classification with bidirectional LSTM and other neural network architectures[J]. Neural Networks, 2005, 18(5/6):602-610. |

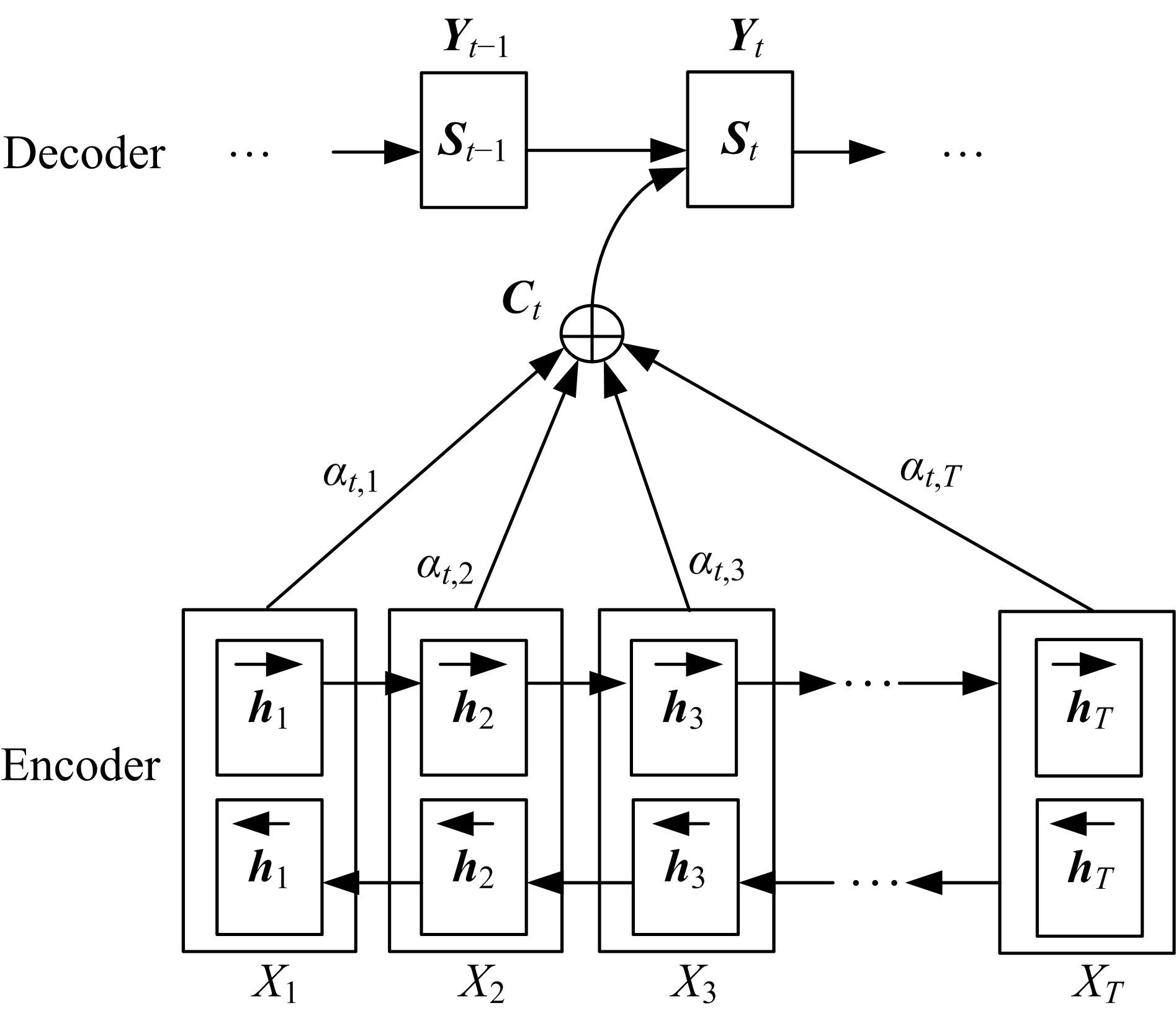

| 15 | Bahdanau D, Cho K, Bengio Y. Neural machine translation by jointly learning to align and translate[C]∥ International Conference on Machine Learning, Florida, USA,2015, arXiv:. |

| 16 | Tang D, Qin B, Liu T. Document modeling with gated recurrent neural network for sentiment classification[C]∥ Proceedings of the Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 2015:1422-1432. |

| 17 | Chung J, Ahn S, Bengio Y. Hierarchical multiscale recurrent neural networks[C]∥International Conference on Learning Representations, Toulon, France, 2017. |

| 18 | Pennington J, Socher R, Manning C D. Glove:Global vectors for word representation[C]∥Proceedings of the Conference on Empirical Methods in Natural Language Processing, Doha, Qatar, 2014:1532-1543. |

| 19 | Kingma D, Ba J. Adam: a method for stochastic optimization[C]∥International Conference on Learning Representations, Sandiego,USA,2015. |

| 20 | Mingjie T, Yahui Z, Rongyi C. Identifying word translations in scientific literature based on labeled bilingual topic model and co-occurrence features[C]∥The 17th China National Conference on Computational Linguistics, Changsha, China, 2018:79-92. |

| 21 | 孟先艳,崔荣一,赵亚慧,等.基于双向长短时记忆单元和卷积神经网络的多语种文本分类方法[J].计算机应用研究, 2020,37(9):2669-2673. |

| Meng Xian-yan, Cui Rong-yi, Zhao Ya-hui, et al. Multilingual text classification method based on bidirectional long-short memory unit and convolutional neural network[J]. Computer Application Research, 2020,37(9):2669-2673. | |

| 22 | Vaswani A,Shazeer N, Parmar N. Attention is all you need[J]. Advances in Neural Information Processing Systems, 2017:5999-6009. |

| 23 | Lin Z, Feng M, Santos C N. A structured self-attentive sentence embedding[C]∥International Conference on Learning Representations, Toulon, France,2017. |
