Journal of Jilin University (Engineering and Technology Edition), 2023, Vol. 53, Issue 8: 2350-2357. doi: 10.13229/j.cnki.jdxbgxb.20211082

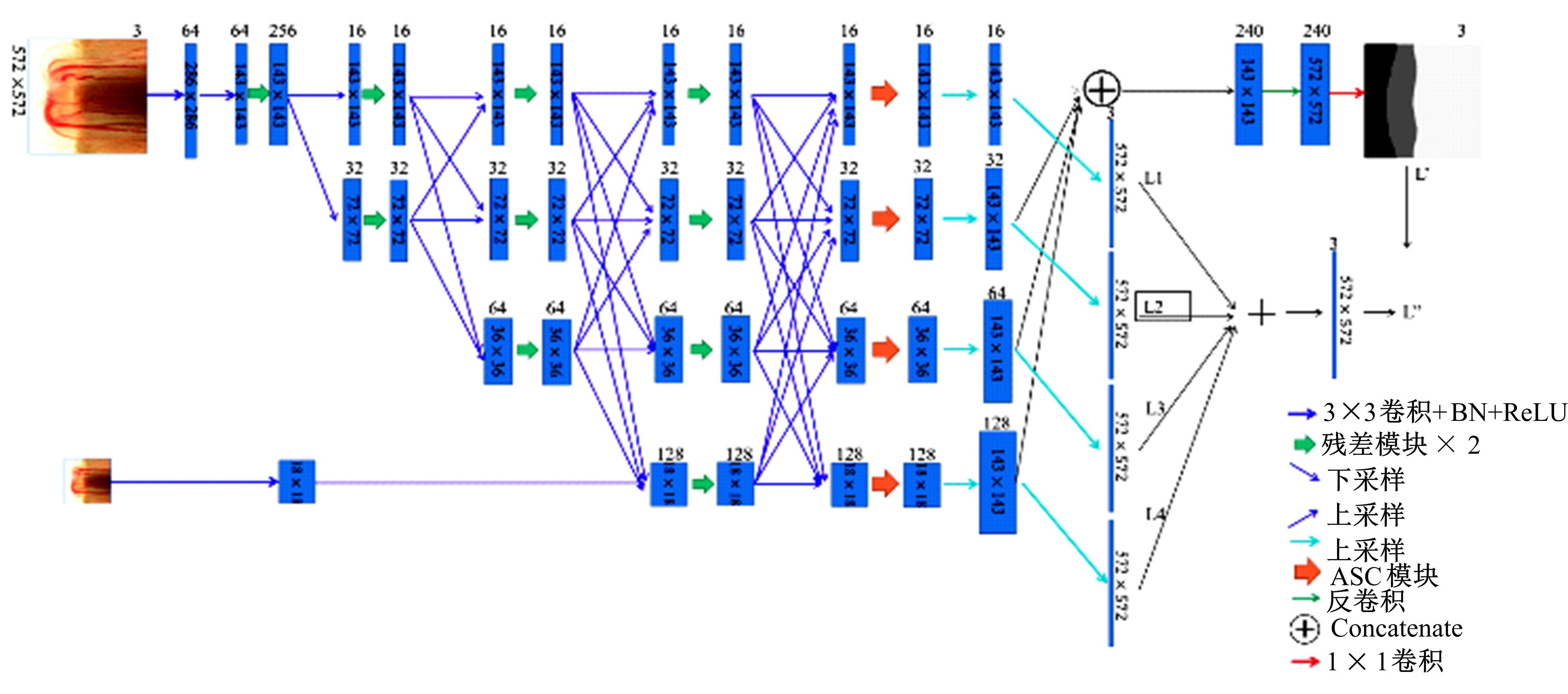

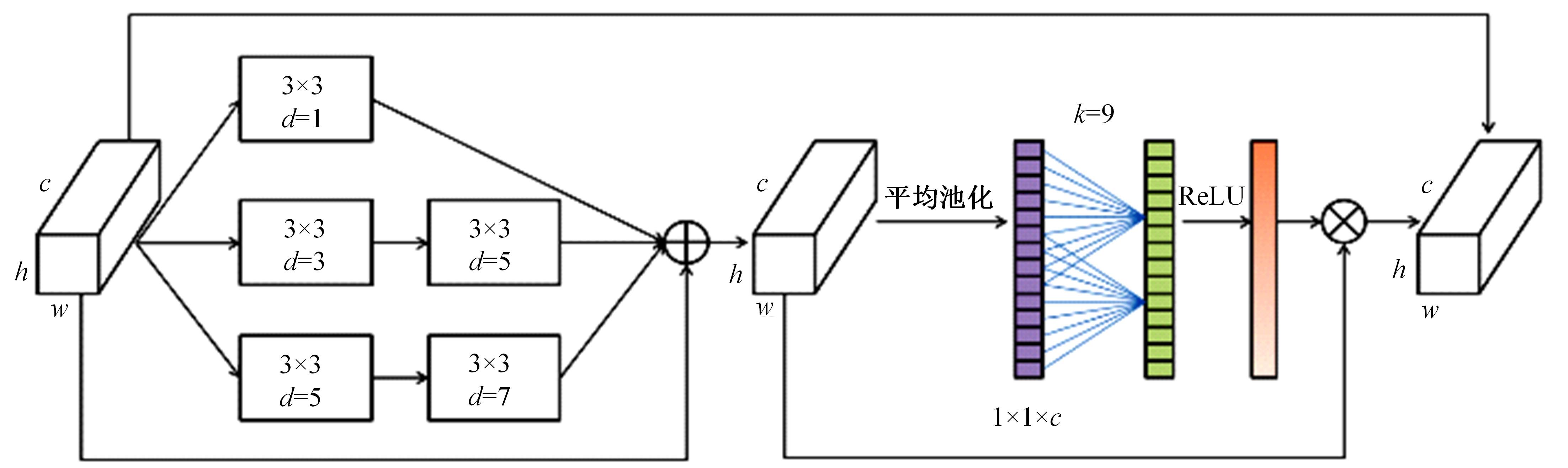

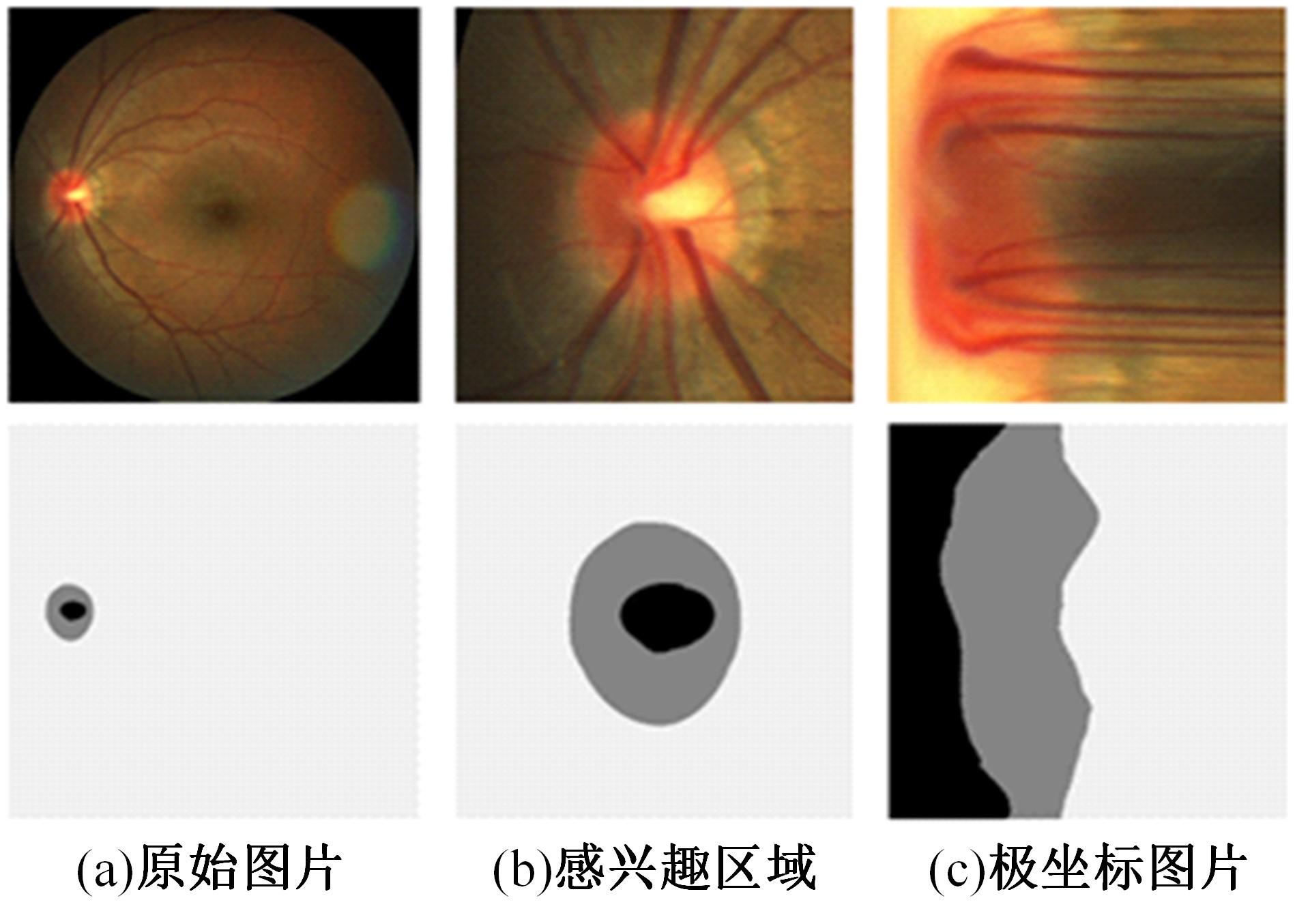

Joint segmentation of optic cup and disc based on high resolution network

Xiao-xin GUO1,2, Jia-hui LI1,2, Bao-liang ZHANG1,2

1. College of Computer Science and Technology, Jilin University, Changchun 130012, China
2. Key Laboratory of Symbol Computation and Knowledge Engineering of Ministry of Education, Jilin University, Changchun 130012, China

CLC Number: TP391.4

