Journal of Jilin University (Engineering and Technology Edition), 2024, Vol. 54, Issue 5: 1407-1416. doi: 10.13229/j.cnki.jdxbgxb.20221367

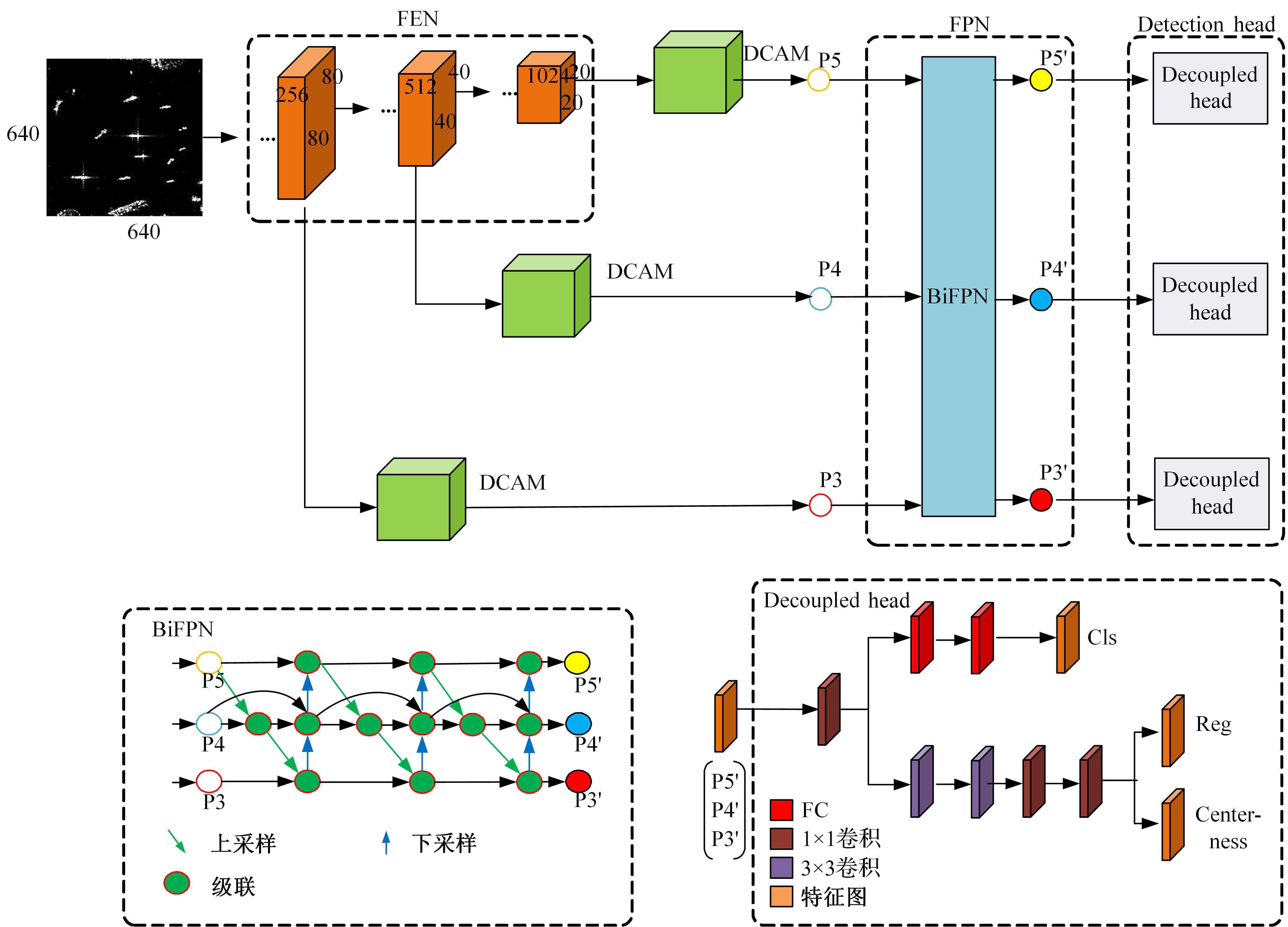

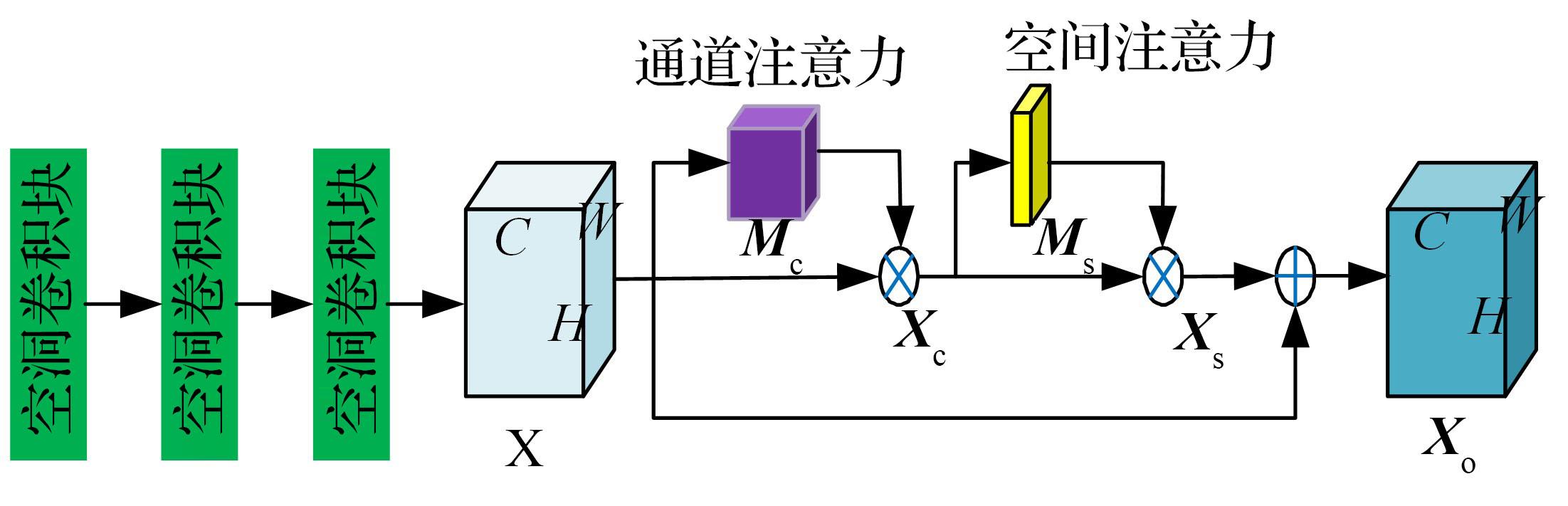

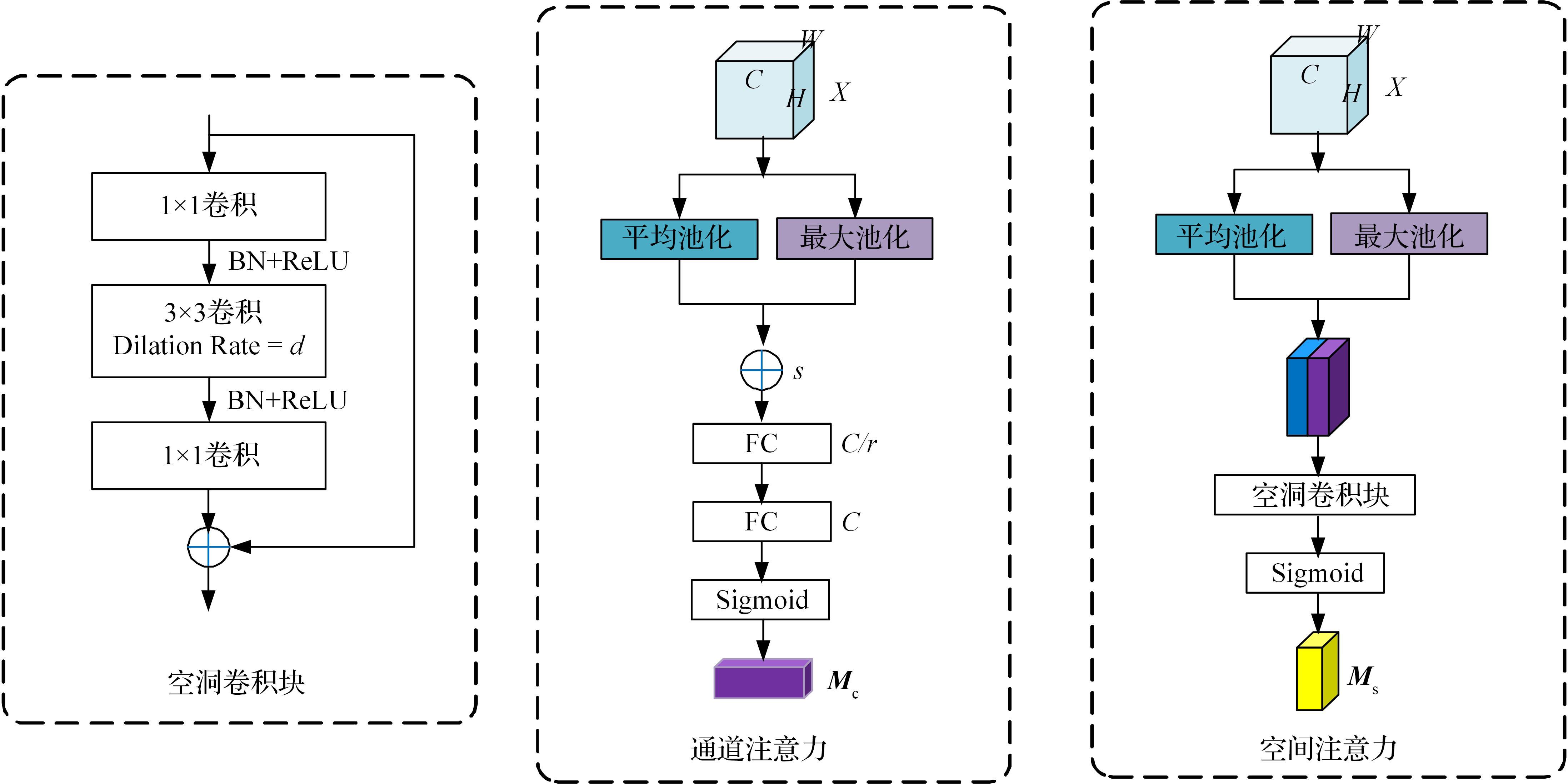

An improved anchor-free model based on attention mechanism for ship detection

Yun-long GAO, Ming REN, Chuan WU, Wen GAO

- Changchun Institute of Optics, Fine Mechanics and Physics, Chinese Academy of Sciences, Changchun 130033, China

CLC Number: TP391

| 1 | Mao C, Huang L, Xiao Y, et al. Target recognition of SAR image based on CN-GAN and CNN in complex environment[J]. IEEE Access, 2021, 9: 39608-39617. |

| 2 | Wang C, Bi F, Zhang W, et al. An Intensity-space domain CFAR method for ship detection in HR SAR images[J]. IEEE Geoscience and Remote Sensing Letters, 2017, 14(4): 529-533. |

| 3 | Wang S, Wang M, Yang S, et al. New hierarchical saliency filtering for fast ship detection in high-resolution SAR images[J]. IEEE Transactions on Geoscience and Remote Sensing, 2017, 55(1): 351-362. |

| 4 | Leng X, Ji K, Xing X, et al. Area ratio invariant feature group for ship detection in SAR imagery[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2018, 11(7): 2376-2388. |

| 5 | Sun K, Liang Y, Ma X, et al. DSDet: a lightweight densely connected sparsely activated detector for ship target detection in high-resolution SAR images[J]. Remote Sensing, 2021, 13(14): No. 2743. |

| 6 | Yang R, Pan Z, Jia X, et al. A novel CNN-based detector for ship detection based on rotatable bounding box in SAR images[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2021, 14: 1938-1958. |

| 7 | Avi A, Roee D. CFAR detection algorithm for objects in sonar images[J]. IET Radar, Sonar Navigation, 2020, 14(11): 1757-1766. |

| 8 | Ren S, He K, Girshick R. Faster R-CNN: towards real-time object detection with region proposal networks[J]. IEEE Transactions on Pattern Analysis & Machine Intelligence, 2017, 39(6): 1137-1149. |

| 9 | Lin T, Goyal P, Girshick R, et al. Focal loss for dense object detection[C]∥2017 IEEE International Conference on Computer Vision,Venice, Italy, 2017: 2999-3007. |

| 10 | Redmon J, Farhadi A. YOLOv3: an incremental improvement[EB/OL]. [2022-11-24]. . |

| 11 | Kang M, Leng X, Lin Z, et al. A modified faster R-CNN based on CFAR algorithm for SAR ship detection[J]. 2017 International Workshop on Remote Sensing with Intelligent Processing (RSIP), Shanghai,China, 2017: 1-4. |

| 12 | Yang X, Zhang X, Wang N, et al. A robust one-stage detector for multiscale ship detection with complex background in massive SAR images[J]. IEEE Transactions on Geoscience and Remote Sensing, 2022, 60: 1-12. |

| 13 | Miao T, Zeng H, Yang W, et al. An improved lightweight retinanet for ship detection in SAR images[J]. Remote Sensing, 2022, 15: 4667-4679. |

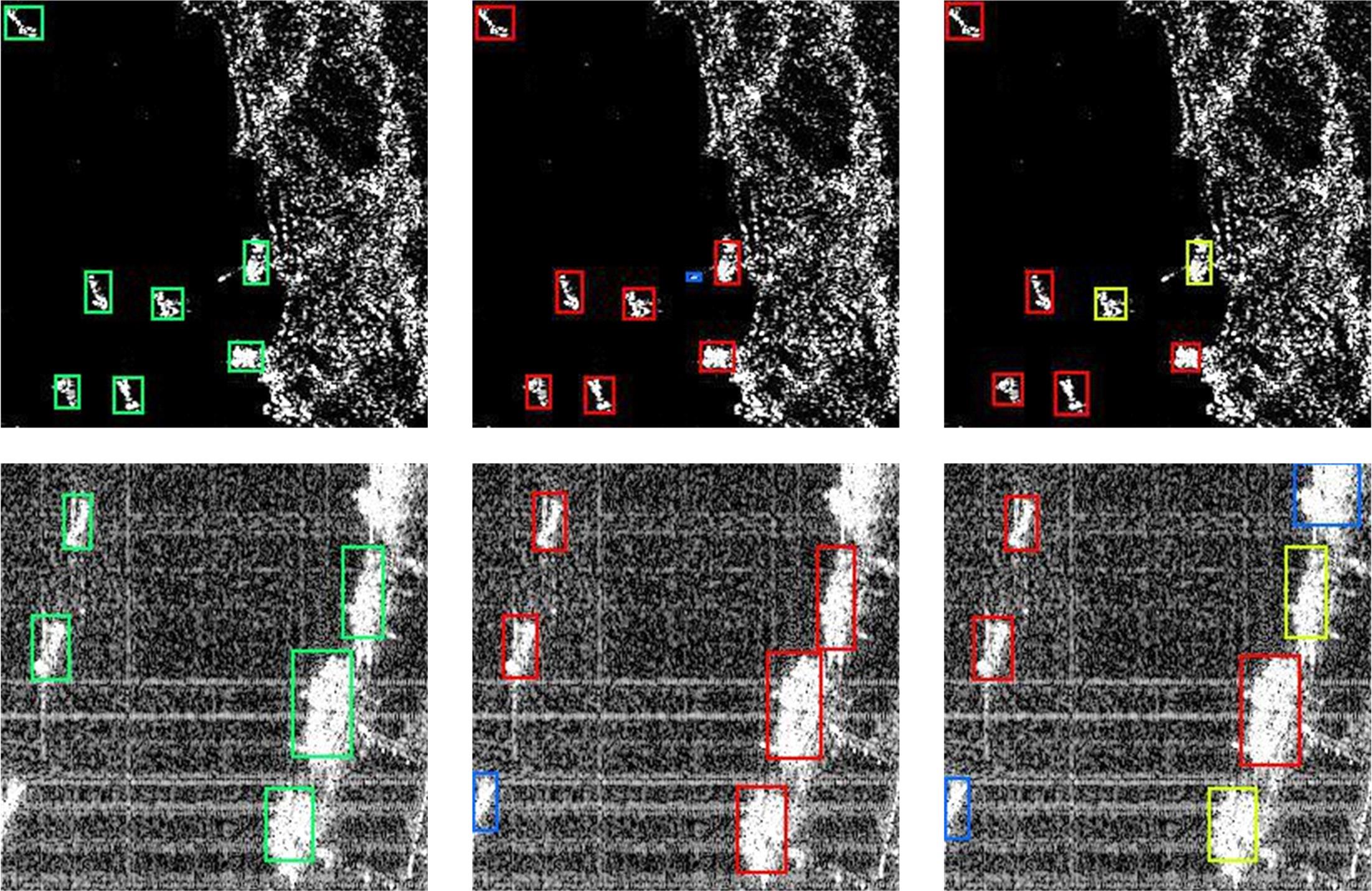

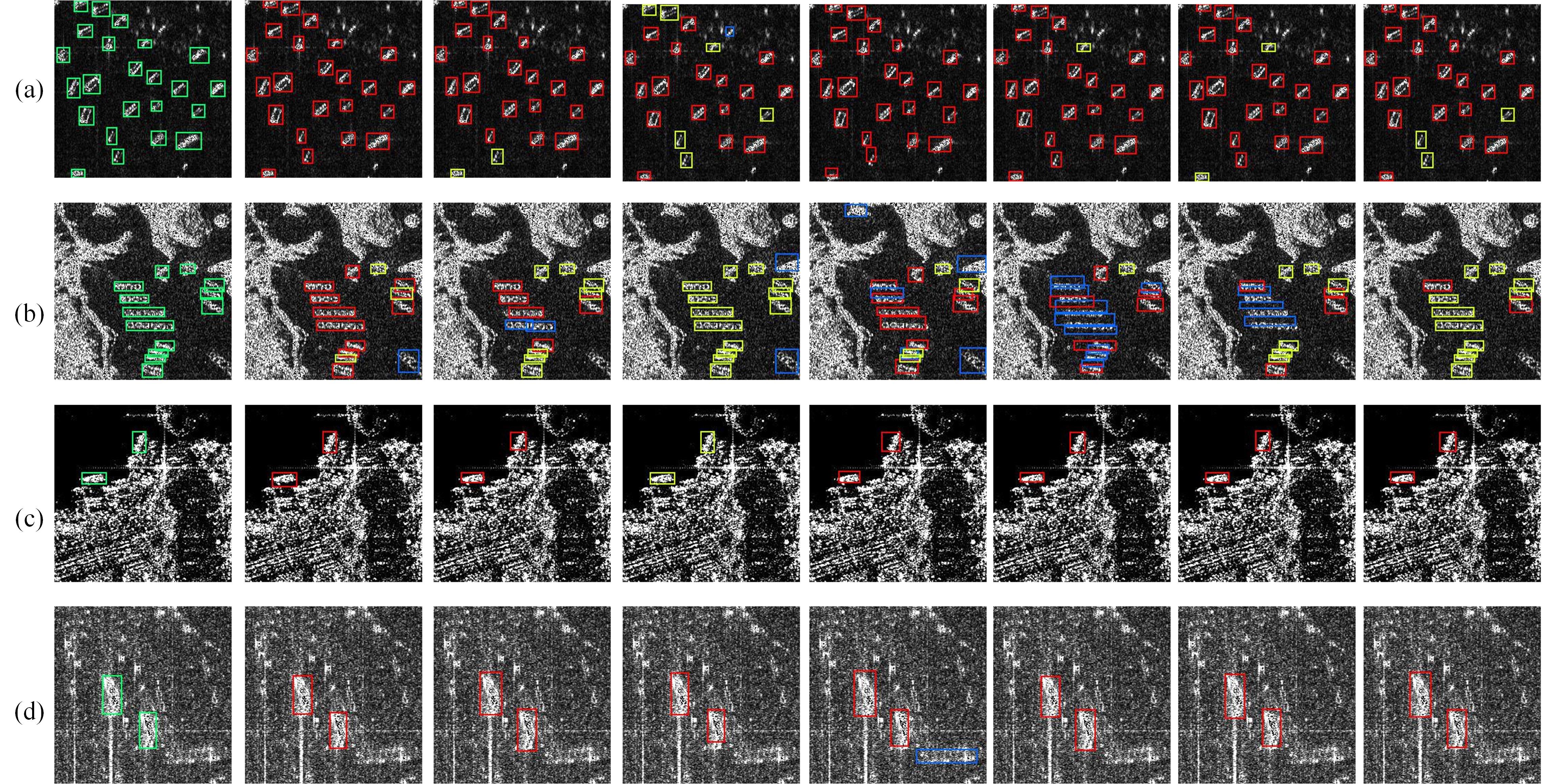

| 14 | Sun Z, Dai M, Leng X, et al. An anchor-free detection method for ship targets in high-resolution SAR images[J]. Remote Sensing, 2021, 14: 7799-7816. |

| 15 | Jiang Y, Li W, Liu L. R-CenterNet+: anchor-free detector for ship detection in SAR images[J]. Sensors, 2021, 21(17): No.5693. |

| 16 | Gao F, He Y, Wang J, et al. Anchor-free convolutional network with dense attention feature aggregation for ship detection in SAR images[J]. Remote Sensing, 2020, 12(16): No.2619. |

| 17 | Ge Z, Liu S, Wang F, et al. YOLOX: exceeding YOLO series in 2021[EB/OL].[2022-11-24]. . |

| 18 | Tian Z, Shen C, Chen H, et al. FCOS: fully convolutional one-stage object detection[C]∥2019 IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 2019: 9626-9635. |

| 19 | Lin T, Goyal P, Girshick R, et al. Focal loss for dense object detection[C]∥2017 IEEE International Conference on Computer Vision,Venice, Italy, 2017: 2980-2988. |

| 20 | Rezatofighi H, Tsoi N, Gwak J, et al. Generalized intersection over union: a metric and a loss for bounding box regression[C]∥Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, USA, 2019: 658-666. |

| 21 | Wang Y, Wang C, Zhang H. A SAR dataset of ship detection for deep learning under complex backgrounds[J]. Remote Sensing, 2019, 11(7): No.765. |

| 22 | Huang L, Liu B, Li B, et al. OpenSARShip: a dataset dedicated to sentinel-1 ship interpretation[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2018, 11(1): 195-208. |

| 23 | Everingham M, Zisserman A, Williams C, et al. The 2005 PASCAL visual object classes challenge [J]. MLCW, 2005:117-176. |

| 24 | Sutanto A, Kang D. A novel diminish smooth L1 loss model with generative adversarial network[C]∥12th International Conference of Intelligent Human Computer Interaction, Daegu, Korea, 2020: 361-368. |

| 25 | Bochkovskiy A, Wang C, Liao M. YOLOv4: optimal speed and accuracy of object detection[EB/OL].[2022-11-24]. . |

| 26 | Tan M, Pang R, Le Q. EfficientDet: scalable and efficient object detection[C]∥Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, USA, 2020: 10778-10787. |

| 27 | Duan K, Bai S, Xie L, et al. CenterNet: keypoint triplets for object detection[C]∥2019 IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 2019: 6568-6577. |
