Journal of Jilin University (Engineering and Technology Edition), 2024, Vol. 54, Issue (5): 1385-1392. doi: 10.13229/j.cnki.jdxbgxb.20230268

Computer Science and Technology

Postprocessing of human pose heatmap based on sub-pixel location

1. School of Electronic Information Engineering, Changchun University of Science and Technology, Changchun 130022, China

2. School of Electronics and Information Engineering, Heilongjiang University of Science and Technology, Harbin 150022, China

Abstract:

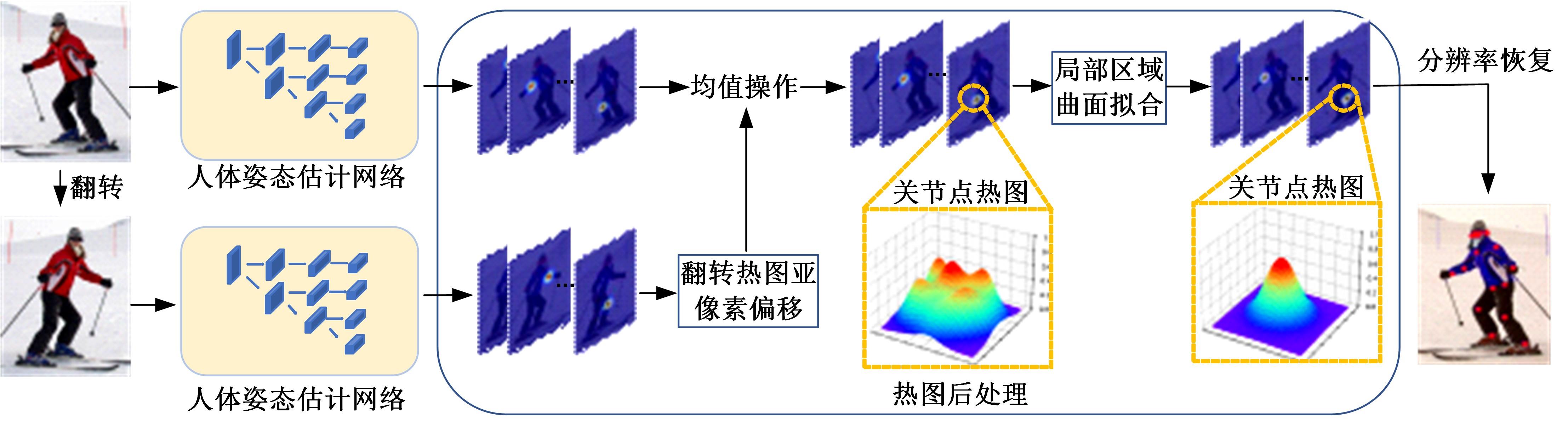

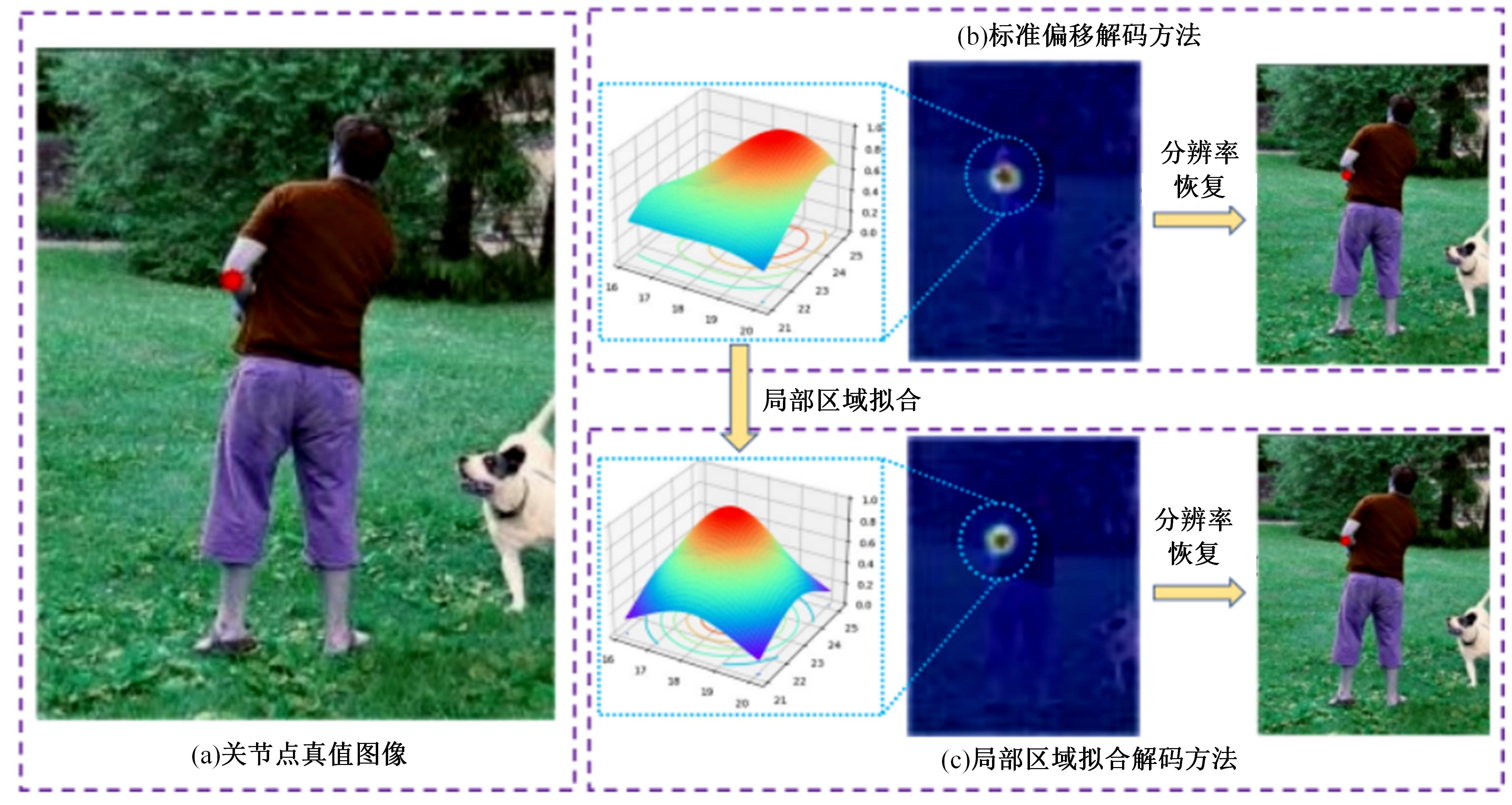

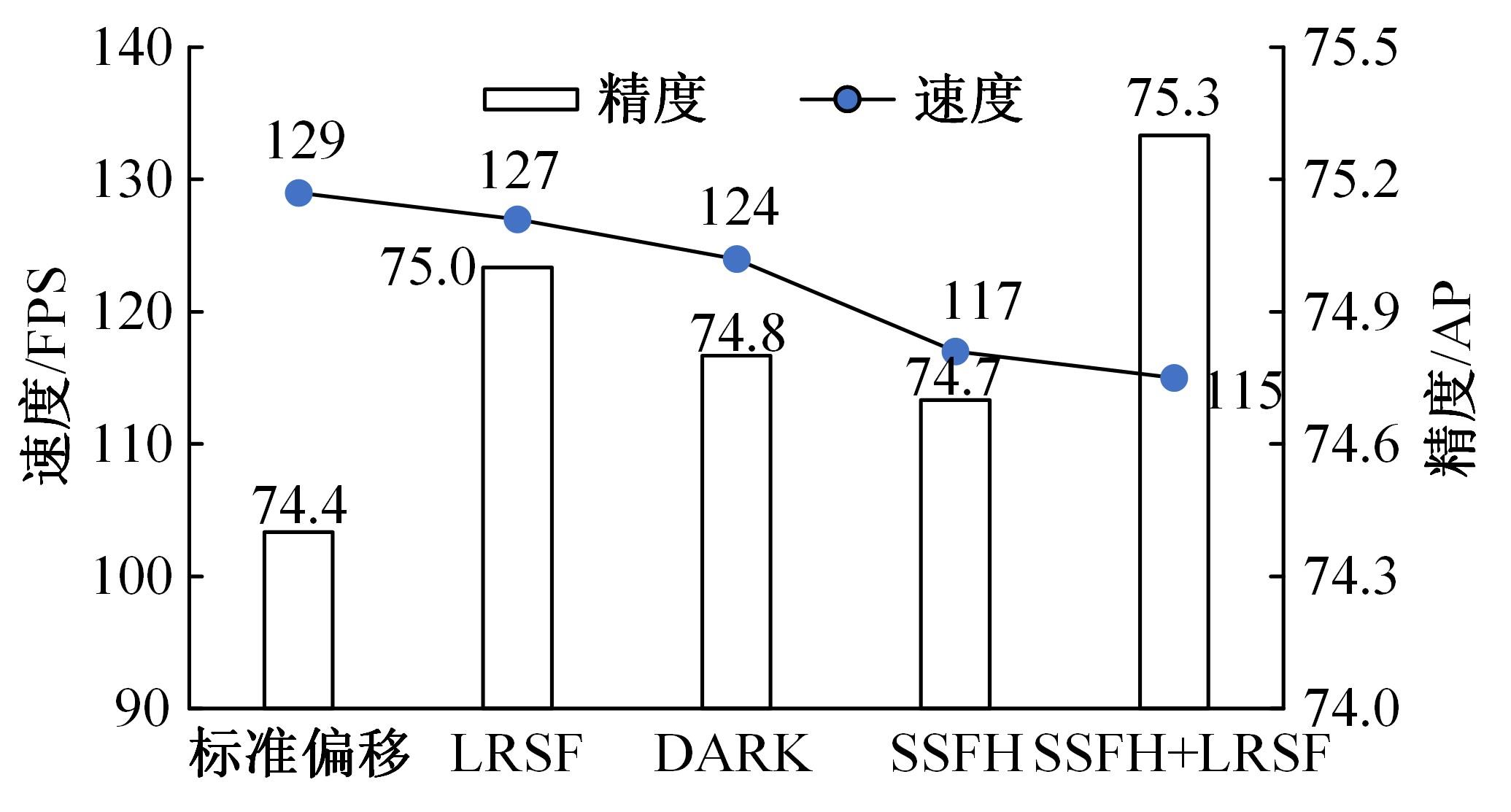

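To improve the accuracy of joint locations predicted from heatmaps, a human pose heatmap post-processing method based on sub-pixel localization is proposed. The method consists of two strategies. The first applies a sub-pixel offset to the heatmap of the flipped image, which eliminates its misalignment with the heatmap of the original image. The second decodes the heatmap by fitting a surface over a local region, locating each joint with sub-pixel precision. The post-processing is independent of the network: it can be applied to current heatmap-based human pose estimation models without any modification to the model. Experiments were carried out on the COCO2017 and MPII datasets. Taking the HRNet-W32-256×192 and Simple Baseline-W32-256×192 models as examples, the average precision (AP) on COCO2017 increased by 0.9 and 1.1, respectively, which verifies the effectiveness of the method.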
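The two strategies can be illustrated with a minimal NumPy sketch. It is a hypothetical illustration under stated assumptions, not the authors' implementation. It assumes stride-s heatmaps whose column i corresponds to input-image column s·i. Under that convention, a joint at image column x peaks at x/s in the original heatmap; after flipping the image (column W·s - 1 - x), predicting, and flipping the heatmap back, the peak lands at x/s - (s-1)/s. For s = 4 this is a 0.75-pixel misalignment. The sketch removes it by shifting the flipped-back heatmap right with linear interpolation, which stands in for the paper's unspecified sub-pixel offset. For decoding, it fits a quadratic surface by least squares to the log-heatmap in a 3×3 window around the integer peak, in the spirit of the distribution-aware (DARK) decoding of Zhang et al. The abstract does not state the paper's actual surface model or window size. The names `gaussian_heatmap`, `flip_back`, `decode_subpixel` and `flip_pairs` are illustrative only.

```python
import numpy as np


def gaussian_heatmap(height, width, cx, cy, sigma=2.0):
    """Render an unnormalised 2D Gaussian centred at (cx, cy), in heatmap pixels."""
    ys, xs = np.mgrid[0:height, 0:width]
    return np.exp(-((xs - cx) ** 2 + (ys - cy) ** 2) / (2.0 * sigma ** 2))


def flip_back(flipped, stride=4, flip_pairs=()):
    """Map heatmaps (K, H, W) predicted on a horizontally flipped image back.

    Assumption: heatmap column i <-> image column stride * i. Under that
    convention the flipped-back peak sits (stride - 1) / stride pixels to
    the left, so the content is shifted right by that sub-pixel amount.
    """
    out = flipped[:, :, ::-1].copy()
    for a, b in flip_pairs:  # hypothetical (left, right) joint index pairs
        out[[a, b]] = out[[b, a]]
    d = (stride - 1) / stride
    # Prepend a copy of column 0 so column x can read columns x - 1 and x.
    padded = np.pad(out, ((0, 0), (0, 0), (1, 0)), mode="edge")
    # Linear interpolation of the value at position x - d (between x - 1 and x).
    return d * padded[:, :, :-1] + (1.0 - d) * padded[:, :, 1:]


def decode_subpixel(heatmaps, eps=1e-10):
    """Return a (K, 2) array of (x, y) peaks refined by a local surface fit.

    Fits f(u, v) = a + b*u + c*v + d*u^2 + e*u*v + g*v^2 to the log-heatmap
    in the 3x3 window around the integer argmax, then moves to the
    stationary point of the fitted surface if it is a maximum.
    """
    k, h, w = heatmaps.shape
    u, v = np.meshgrid([-1, 0, 1], [-1, 0, 1])  # u: column offset, v: row offset
    u, v = u.ravel(), v.ravel()
    design = np.stack([np.ones(9), u, v, u ** 2, u * v, v ** 2], axis=1)
    coords = np.zeros((k, 2))
    for j in range(k):
        py, px = np.unravel_index(np.argmax(heatmaps[j]), (h, w))
        coords[j] = (px, py)
        if not (0 < px < w - 1 and 0 < py < h - 1):
            continue  # no full 3x3 window at the border: keep the integer peak
        patch = heatmaps[j, py - 1:py + 2, px - 1:px + 2]
        z = np.log(np.maximum(patch, eps)).ravel()
        _, b, c, d, e, g = np.linalg.lstsq(design, z, rcond=None)[0]
        hess = np.array([[2.0 * d, e], [e, 2.0 * g]])
        if d >= 0 or np.linalg.det(hess) <= 0:
            continue  # fitted surface has no maximum: keep the integer peak
        du, dv = np.linalg.solve(hess, [-b, -c])  # gradient of f set to zero
        coords[j] += np.clip([du, dv], -1.0, 1.0)
    return coords


if __name__ == "__main__":
    s, H, W = 4, 64, 48                  # 256x192 input, stride-4 heatmaps
    x_img, y_img = 77.0, 101.0           # one joint, in input-image pixels
    hm = gaussian_heatmap(H, W, x_img / s, y_img / s)[None]
    x_mirror = (W * s - 1) - x_img       # the same joint in the flipped image
    hm_flip = gaussian_heatmap(H, W, x_mirror / s, y_img / s)[None]
    print(decode_subpixel(hm_flip[:, :, ::-1]))                  # naive flip-back
    print(decode_subpixel(flip_back(hm_flip, s)))                # offset-corrected
    print(decode_subpixel(0.5 * (hm + flip_back(hm_flip, s))))   # fused flip test
```

In the usage block the true heatmap location is (19.25, 25.25). The naive flip-back lands 0.75 pixel to the left, while the shifted and fused heatmaps decode close to the true value. Linear interpolation slightly reshapes the Gaussian, so the correction is approximate rather than exact. Fitting on the log-heatmap makes the quadratic model exact for an ideal Gaussian. This is why that choice is used here.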

CLC number: TP391.4

