Journal of Jilin University (Engineering and Technology Edition), 2022, Vol. 52, Issue (11): 2676-2684. doi: 10.13229/j.cnki.jdxbgxb20210367

• Computer Science and Technology •

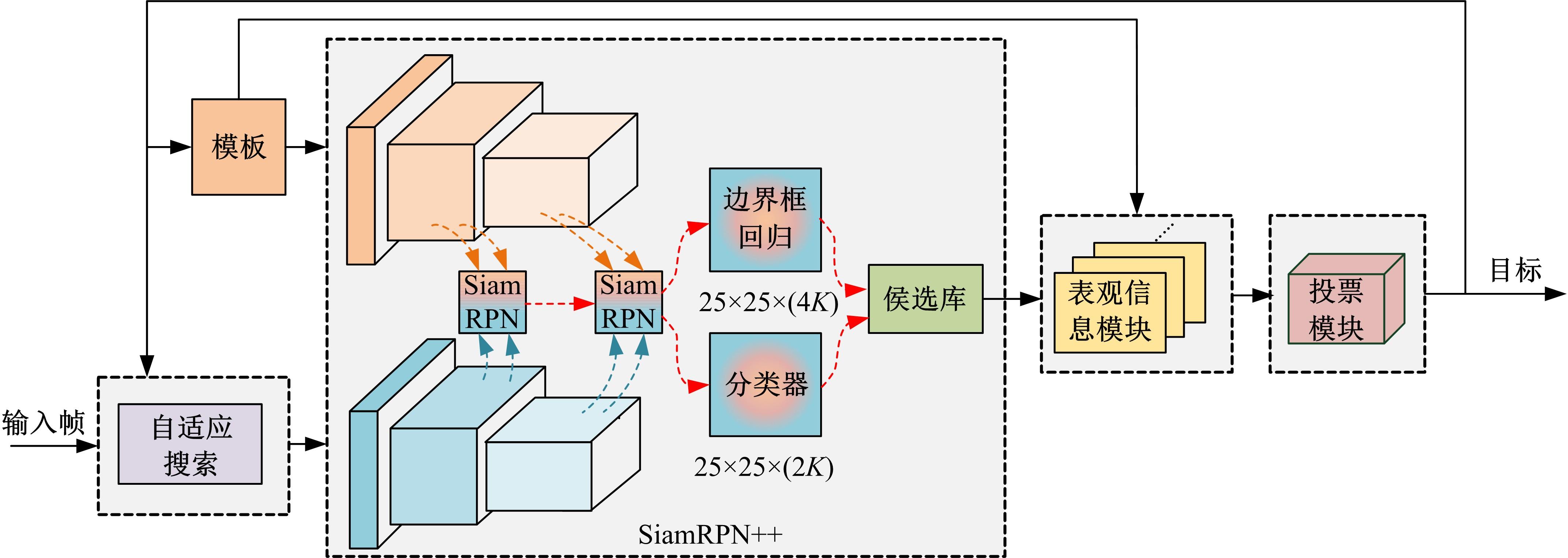

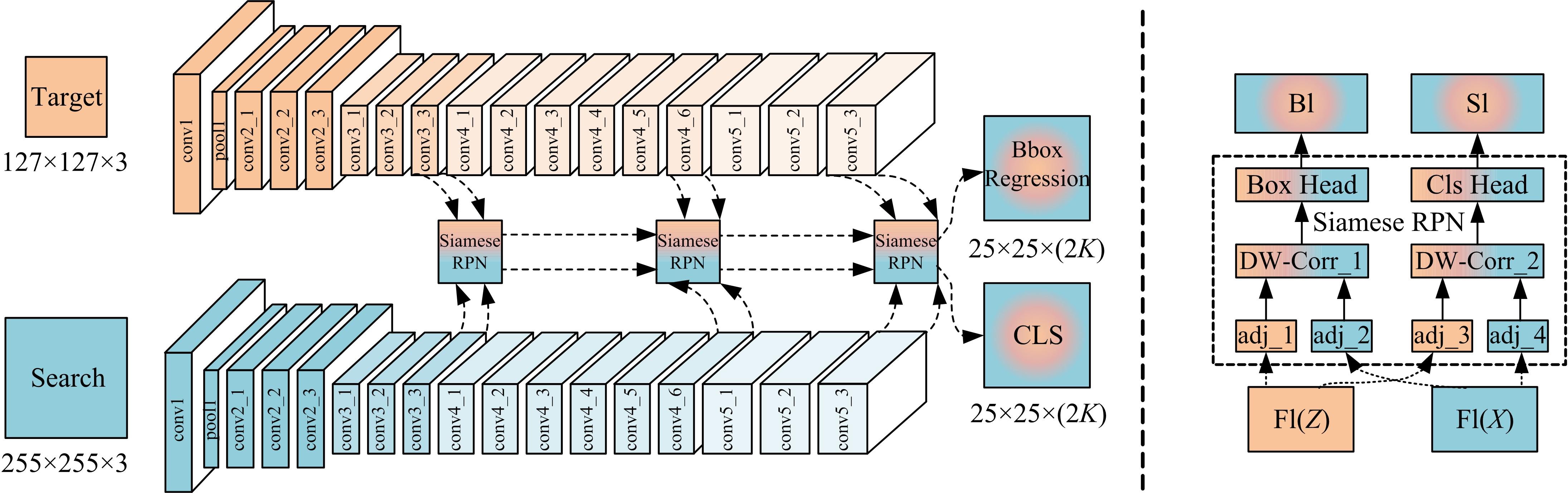

Deep target tracking using augmented apparent information

Kan WANG1, Hang SU2, Hao ZENG2, Jian QIN2

- 1. Institute of Southwest Electronic Technology, Chengdu 610036, China
- 2. Microelectronic and Communication College, Chongqing University, Chongqing 400030, China

Abstract:

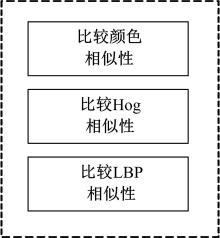

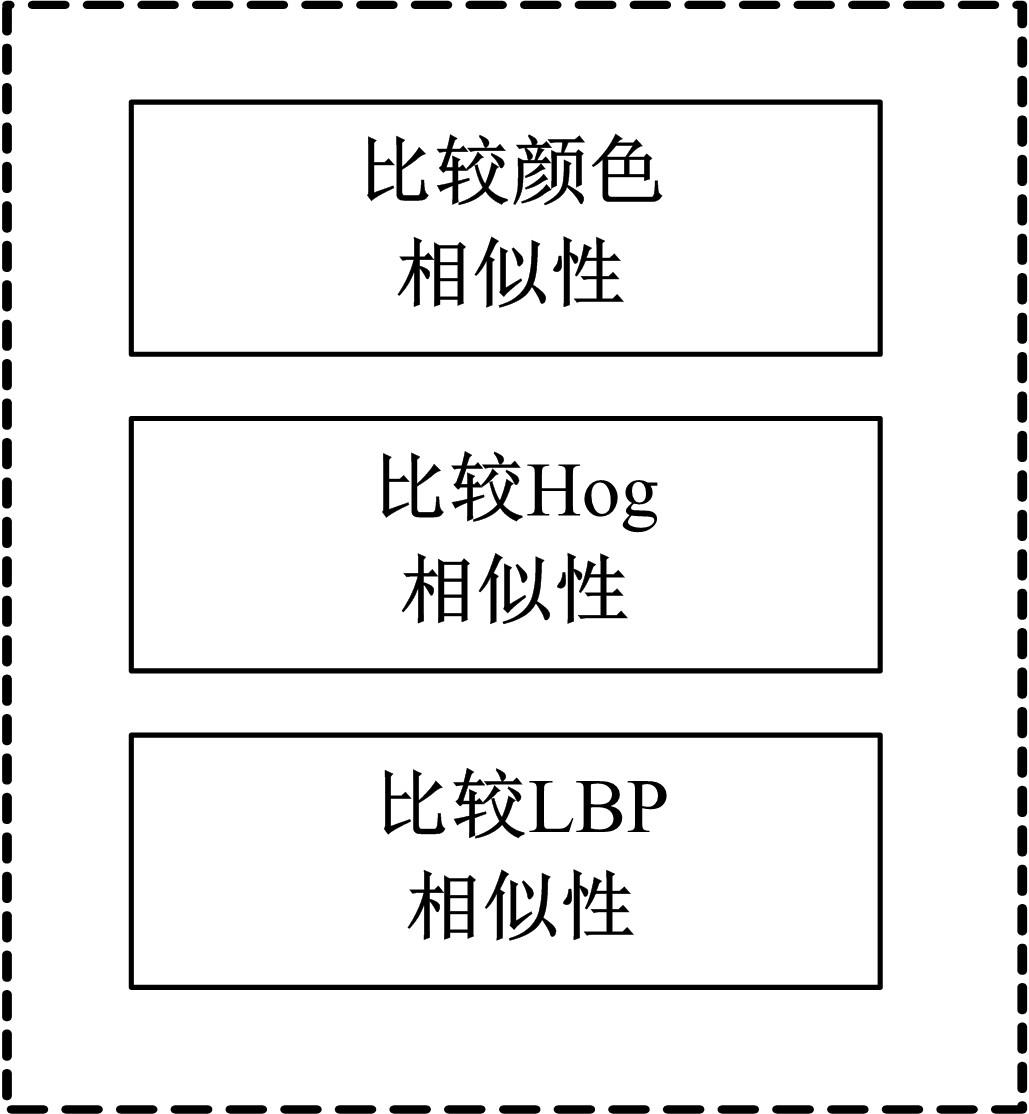

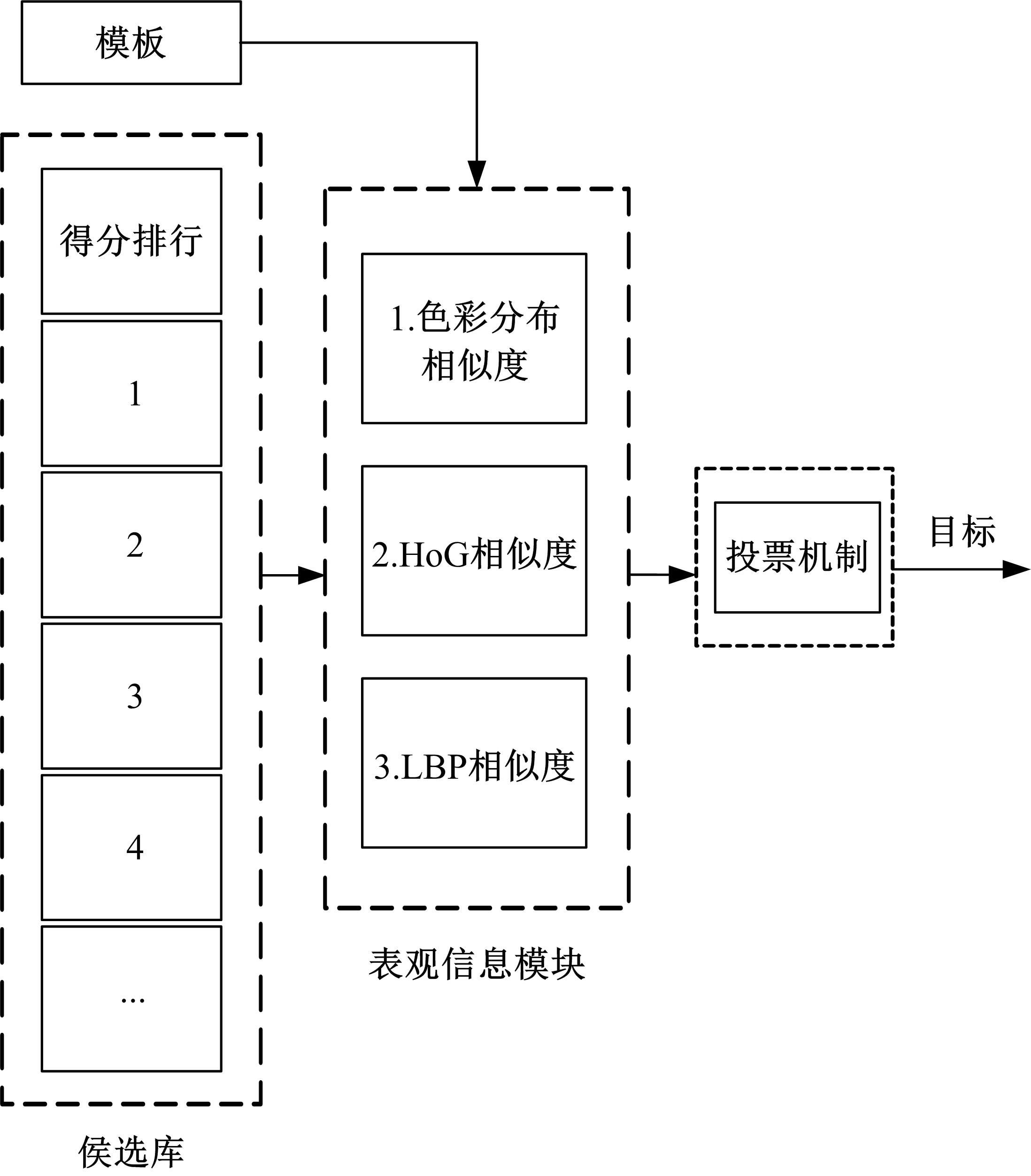

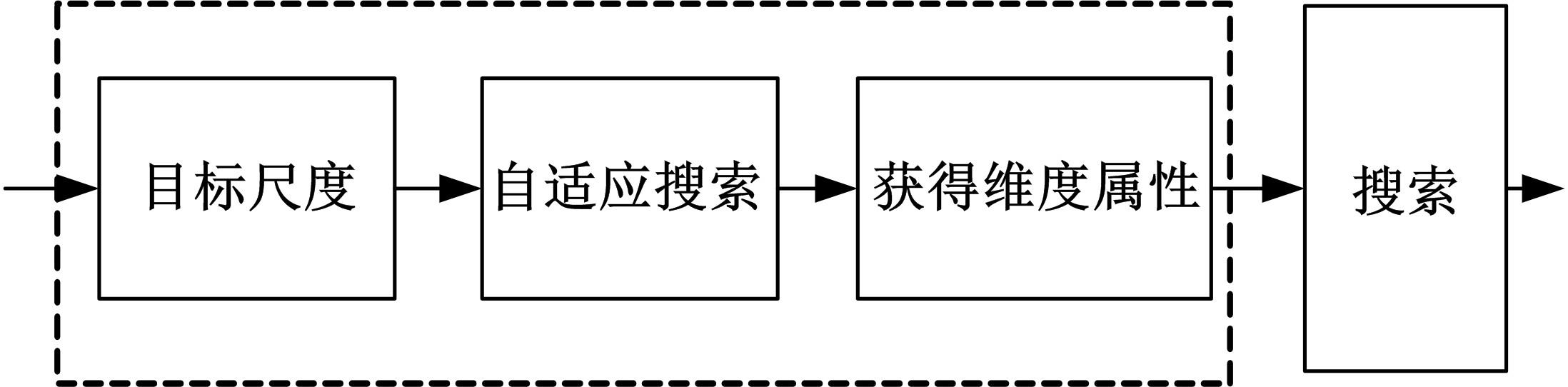

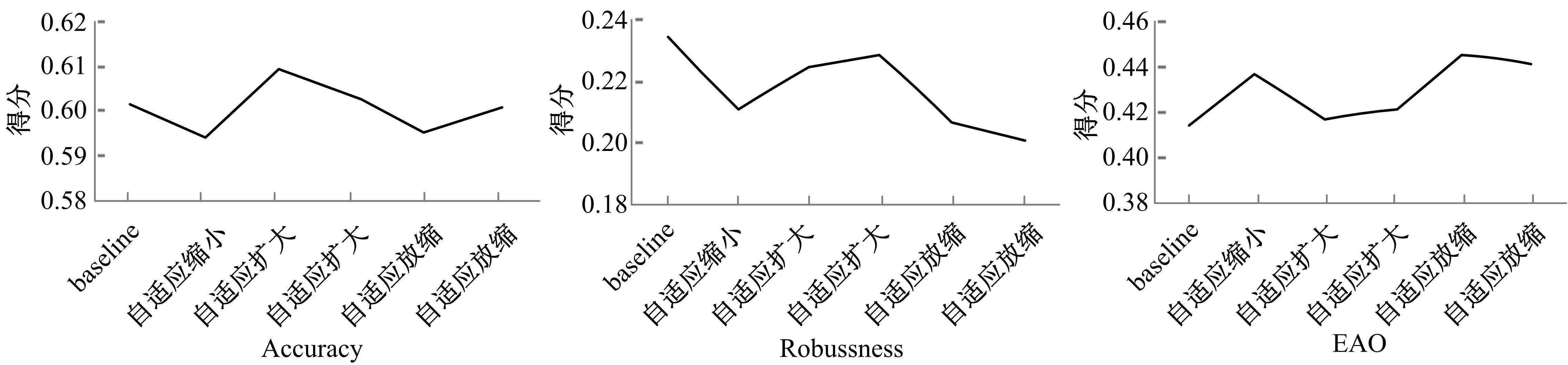

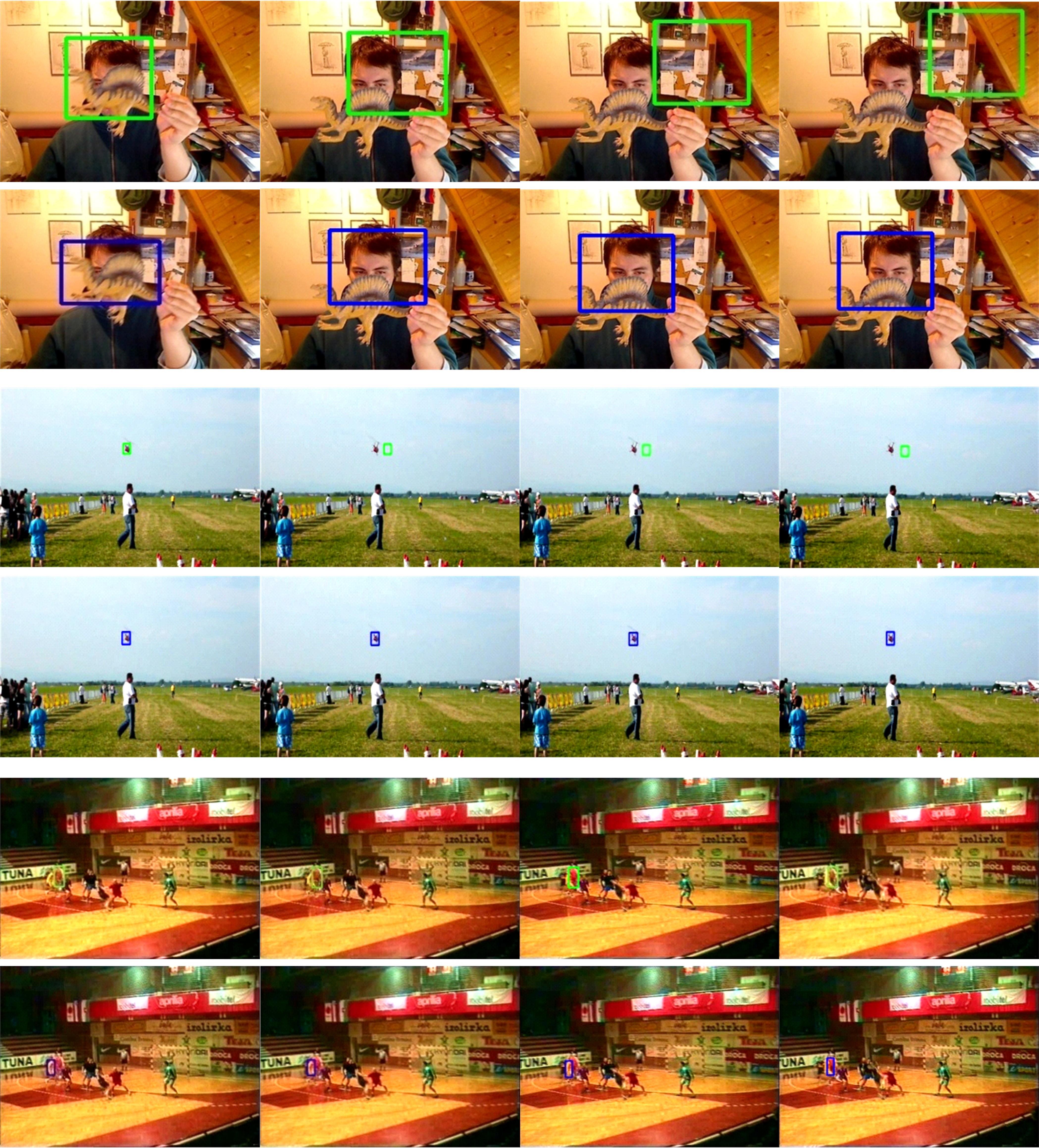

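Research on real-time video target tracking with deep neural networks has concentrated mainly on backbone network optimization, which makes both design and training complex. To address this problem, this paper proposes a new deep target tracking algorithm that uses augmented apparent information. First, traditional appearance features that are simple and easy to implement are introduced and fused directly with deep semantic features, which strengthens the ability to distinguish between targets of the same class. Second, a voting mechanism and an adaptive search module improve the robustness of tracking. Test results on the VOT series of datasets show that the proposed algorithm improves the expected average overlap (EAO) by 2% to 4% over the baseline algorithm on every dataset, and that its accuracy and robustness match, and in some cases exceed, those of existing complex optimized algorithms.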
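The fusion idea in the abstract can be illustrated with a minimal sketch. The abstract does not name the traditional appearance features or the fusion rule, so everything below is an assumption made for illustration: an intensity histogram stands in for the appearance feature, the Bhattacharyya coefficient measures histogram similarity, and a linear blend with the hypothetical weight `weight` combines it with a deep similarity score. It is not the authors' implementation.

```python
import math
from typing import List, Sequence


def normalized_histogram(pixels: Sequence[int], bins: int = 8,
                         max_value: int = 256) -> List[float]:
    """Build a normalized intensity histogram from values in [0, max_value)."""
    counts = [0] * bins
    for p in pixels:
        counts[p * bins // max_value] += 1
    total = sum(counts)
    return [c / total for c in counts] if total else [0.0] * bins


def bhattacharyya(h1: Sequence[float], h2: Sequence[float]) -> float:
    """Bhattacharyya coefficient of two normalized histograms (1.0 = identical)."""
    return sum(math.sqrt(a * b) for a, b in zip(h1, h2))


def fuse_scores(deep_scores: Sequence[float],
                candidate_hists: Sequence[Sequence[float]],
                template_hist: Sequence[float],
                weight: float = 0.3) -> List[float]:
    """Blend each deep similarity score with a histogram similarity.

    `weight` is a hypothetical mixing factor, not a value from the paper.
    """
    return [
        (1.0 - weight) * s + weight * bhattacharyya(h, template_hist)
        for s, h in zip(deep_scores, candidate_hists)
    ]


# Toy data: a dark target template, a bright look-alike, and the true target.
template = normalized_histogram([10, 20, 30, 40])
distractor = normalized_histogram([200, 210, 220, 230])
true_target = normalized_histogram([12, 22, 28, 45])

# Assumed deep scores: the look-alike scores slightly higher on its own.
deep_scores = [0.90, 0.88]
fused = fuse_scores(deep_scores, [distractor, true_target], template)
best = max(range(len(fused)), key=fused.__getitem__)
print(best, fused)
```

In this toy case the deep scores alone would pick the look-alike at index 0. After fusion the candidate at index 1 wins, because its histogram matches the template. This is the kind of same-class discrimination the abstract attributes to the added appearance features.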

CLC number:

- TP391

