Journal of Jilin University (Engineering and Technology Edition), 2021, Vol. 51, Issue (4): 1387-1395. doi: 10.13229/j.cnki.jdxbgxb20200358

• Computer Science and Technology •

Korean text structure discovery based on reinforcement learning and attention mechanism

Ya-hui ZHAO, Fei-yang YANG, Zhen-guo ZHANG, Rong-yi CUI

- Department of Computer Science & Technology, Yanbian University, Yanji 133002, China

Abstract:

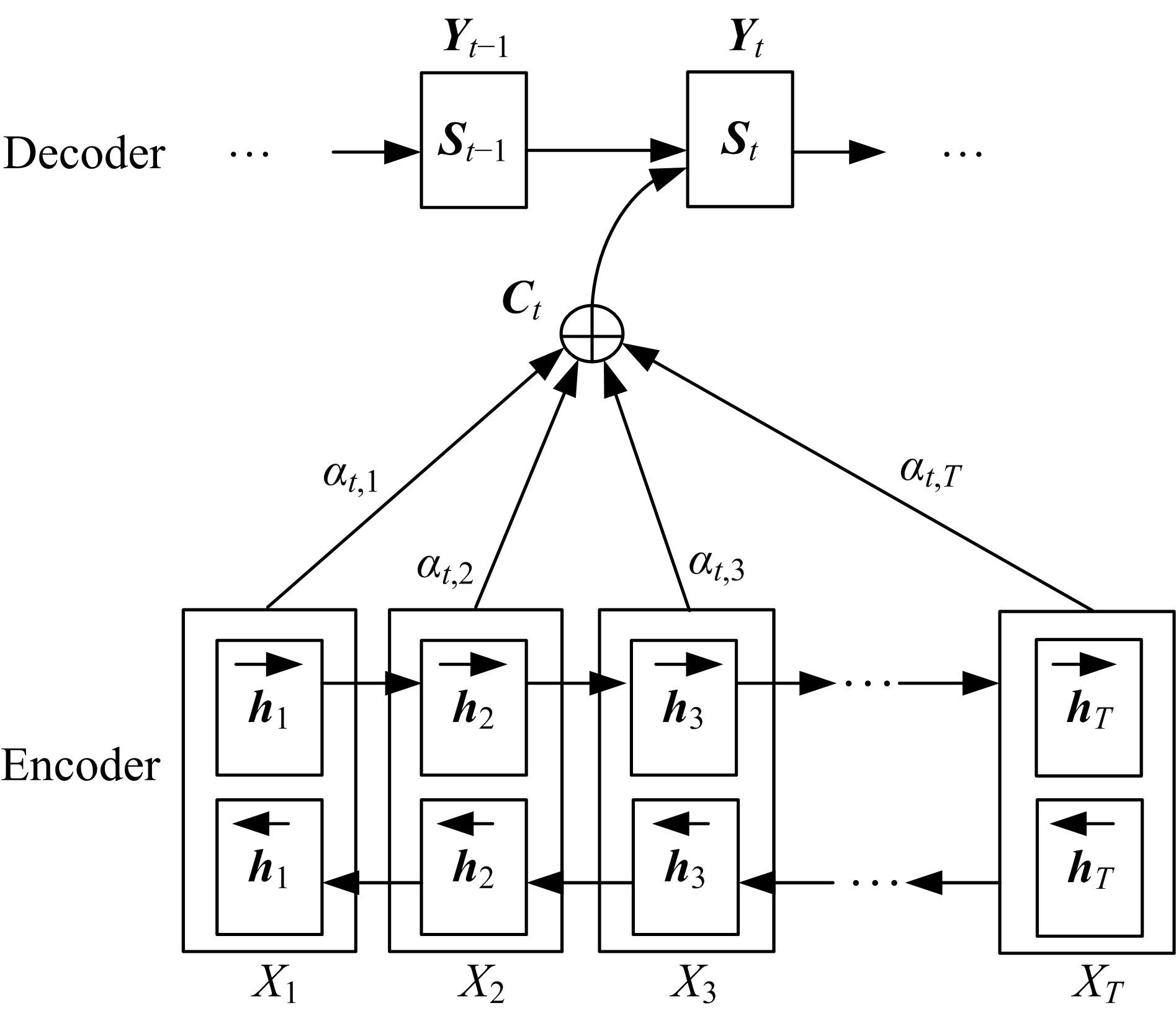

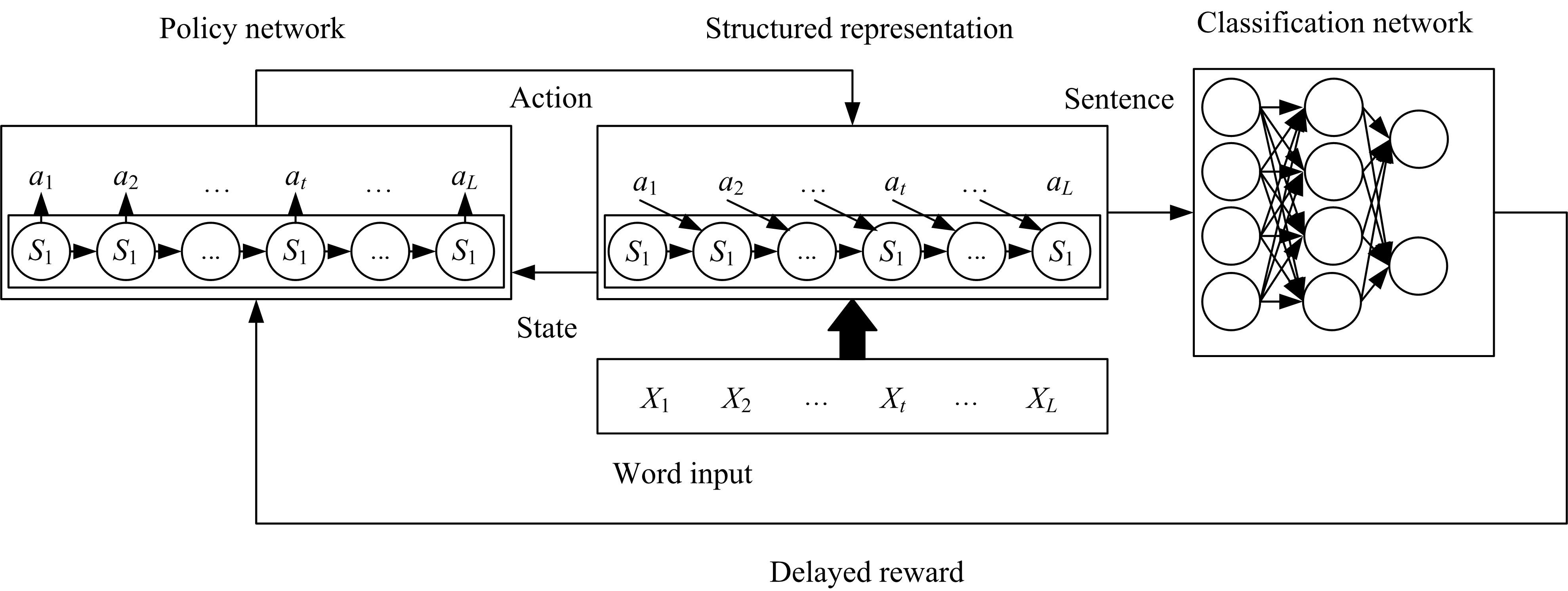

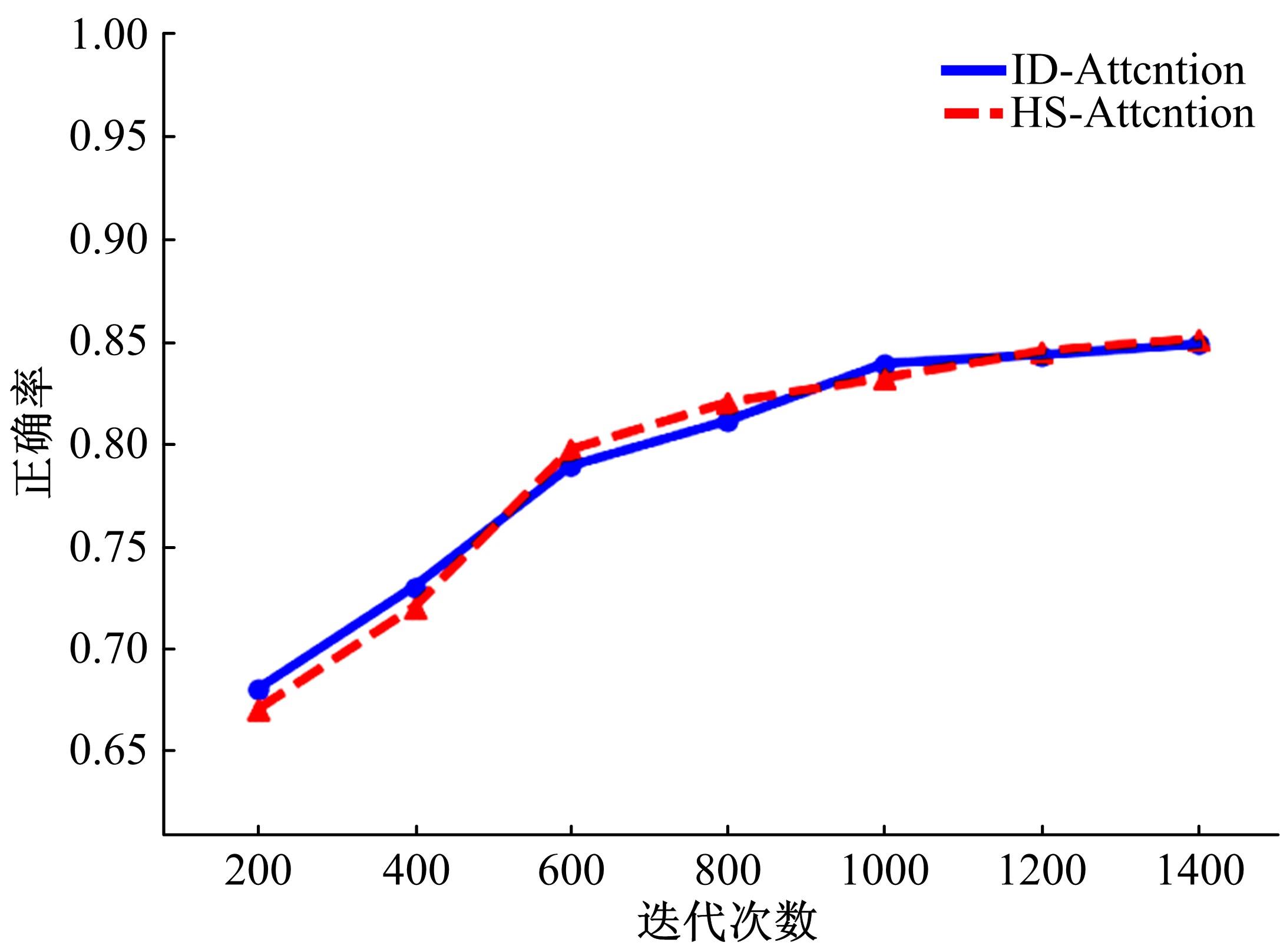

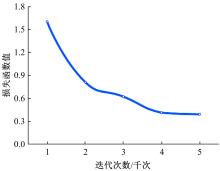

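This paper combines the attention mechanism with deep reinforcement learning and uses label information to study how effective structured representations of Korean text can be learned autonomously. Two structured representation models are proposed: the information distillation attention model (ID-Attention) and the hierarchical structure attention model (HS-Attention). ID-Attention selects the important words that are relevant to the task, while HS-Attention discovers phrase structure within a sentence. Structure discovery in both models is a sequential decision problem and is implemented with the Policy Gradient method from reinforcement learning. Experimental results show that ID-Attention can identify important Korean words, and that HS-Attention extracts sentence structure well and performs well on the text classification task. The outputs of both models are also a useful aid for corpus annotation.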
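The sequential decision view of word selection can be illustrated with a small REINFORCE-style sketch. This is not the authors' implementation: the one-dimensional word features, the logistic keep/delete policy, the made-up importance labels, and the `toy_reward` function are all assumptions chosen for illustration. In a real system the delayed reward would come from the downstream task, such as a classifier's score on the structured representation.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def keep_probability(weights, features):
    """Policy pi(keep | word): a logistic function of the word's features."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)))


def sample_actions(weights, sentence, rng):
    """Walk the sentence left to right, sampling keep (1) or delete (0) per word."""
    actions = []
    for features in sentence:
        p = keep_probability(weights, features)
        actions.append(1 if rng.random() < p else 0)
    return actions


def toy_reward(actions, important):
    """Hypothetical delayed reward: fraction of words handled correctly."""
    correct = sum(1 for a, y in zip(actions, important) if a == y)
    return correct / len(actions)


def reinforce_step(weights, sentence, important, rng, lr=0.5, baseline=0.5):
    """One REINFORCE update: w += lr * (R - b) * sum_t grad log pi(a_t | s_t)."""
    actions = sample_actions(weights, sentence, rng)
    advantage = toy_reward(actions, important) - baseline
    grad = [0.0] * len(weights)
    for features, a in zip(sentence, actions):
        p = keep_probability(weights, features)
        # For a Bernoulli policy with a sigmoid, grad log pi = (a - p) * x.
        for i, x in enumerate(features):
            grad[i] += (a - p) * x
    return [w + lr * advantage * g for w, g in zip(weights, grad)]


def train(steps=2000, seed=0):
    """Train the toy policy on one synthetic sentence (assumed data)."""
    rng = random.Random(seed)
    # Each word carries a single toy salience feature; labels are made up.
    sentence = [[1.0], [-1.0], [1.0], [-1.0]]
    important = [1, 0, 1, 0]
    weights = [0.0]
    for _ in range(steps):
        weights = reinforce_step(weights, sentence, important, rng)
    return weights


if __name__ == "__main__":
    learned = train()
    print("P(keep | salient word)   =", keep_probability(learned, [1.0]))
    print("P(keep | unsalient word) =", keep_probability(learned, [-1.0]))
```

The reward is observed only after every word has received an action, which is why a policy gradient estimator is a natural fit: no per-word supervision is required, only a score for the final selection.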

CLC number:

- TP391.1

