Journal of Jilin University (Engineering and Technology Edition) ›› 2024, Vol. 54 ›› Issue (11): 3274-3282. doi: 10.13229/j.cnki.jdxbgxb.20230020

• Computer Science and Technology •

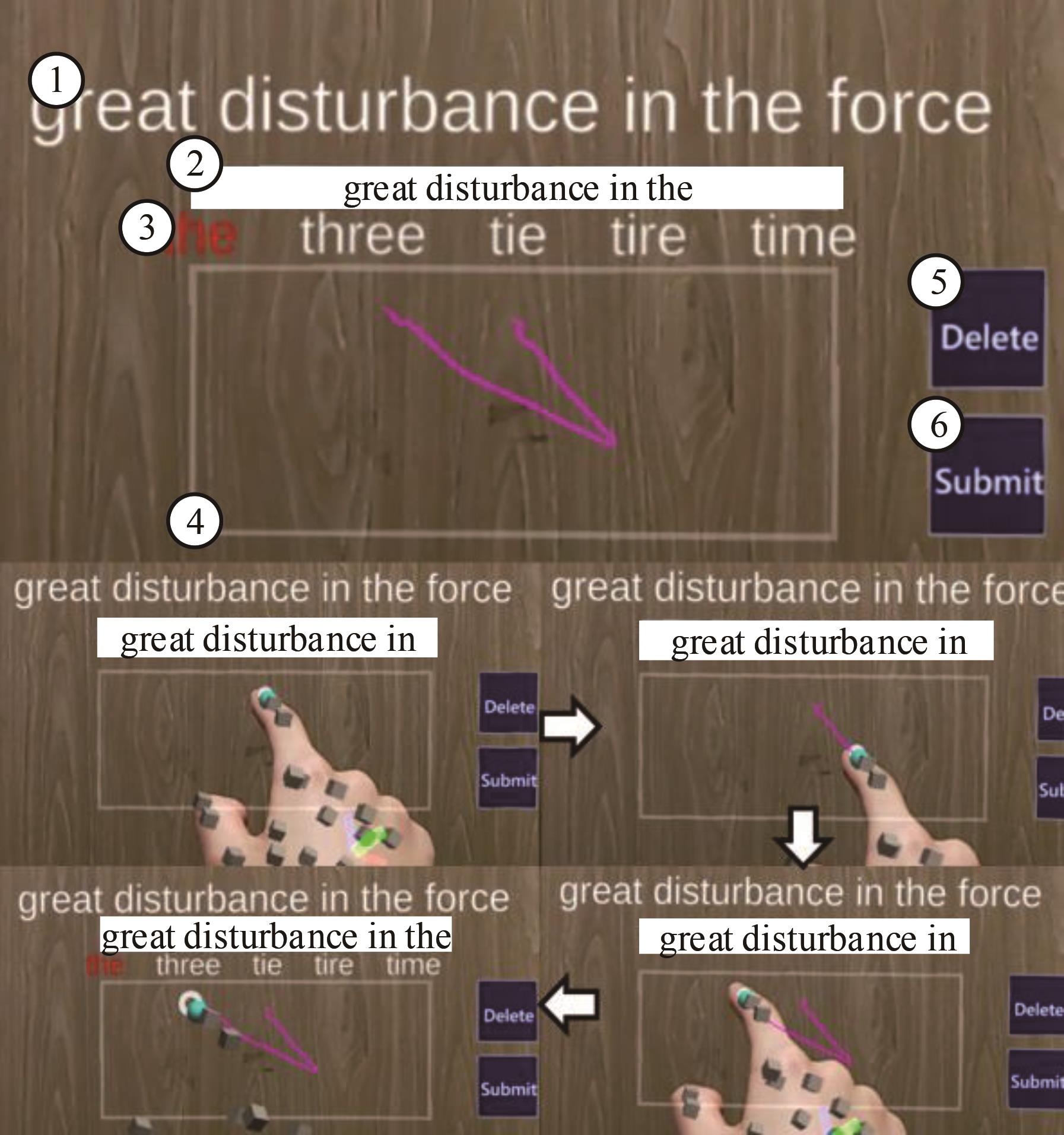

Gesture input method based on transparent keyboard in augmented reality environment

Yu-xin YE, Luo-jia XIA, Ming-hui SUN

- College of Computer Science and Technology, Jilin University, Changchun 130012, China

Abstract:

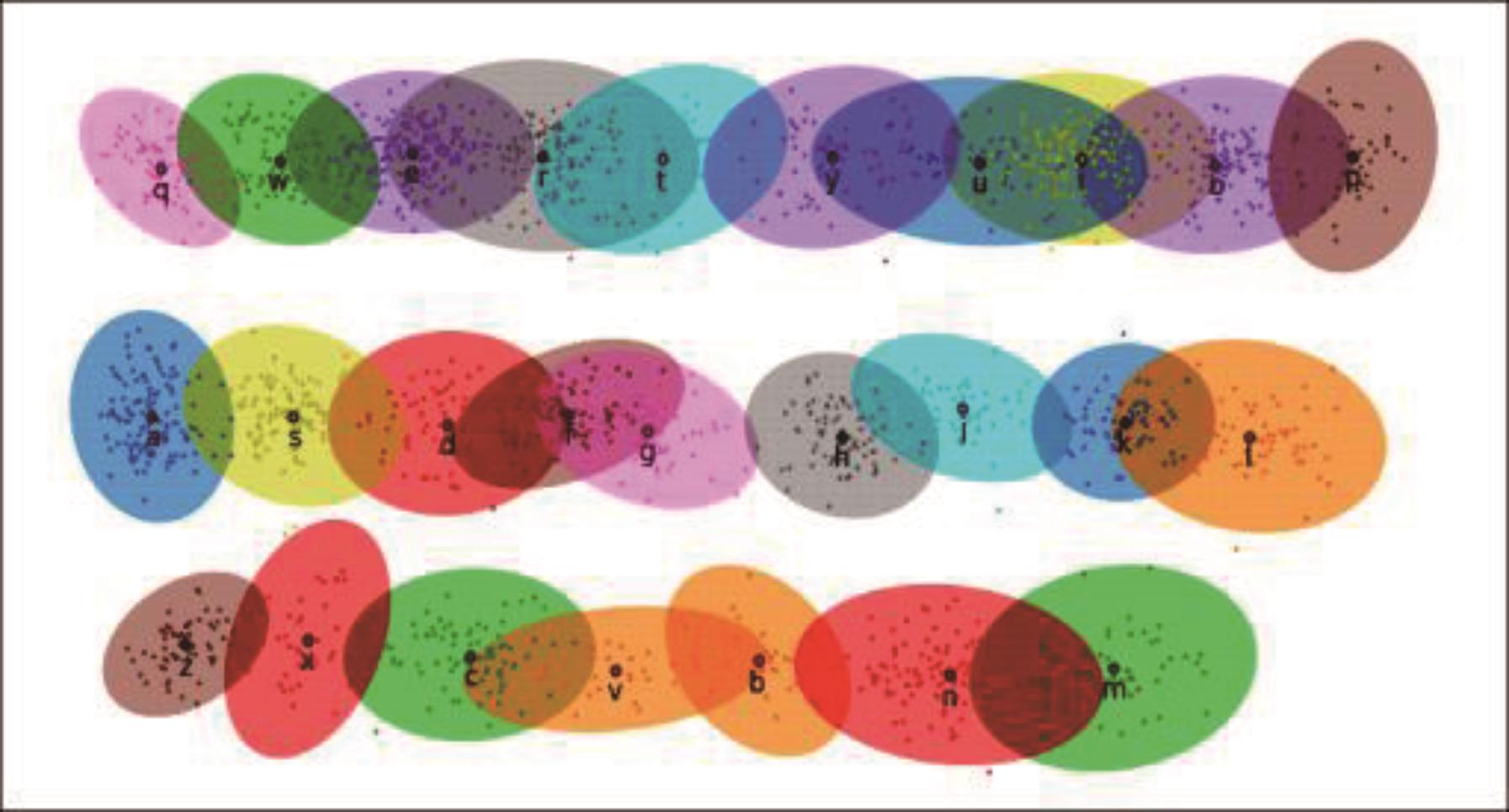

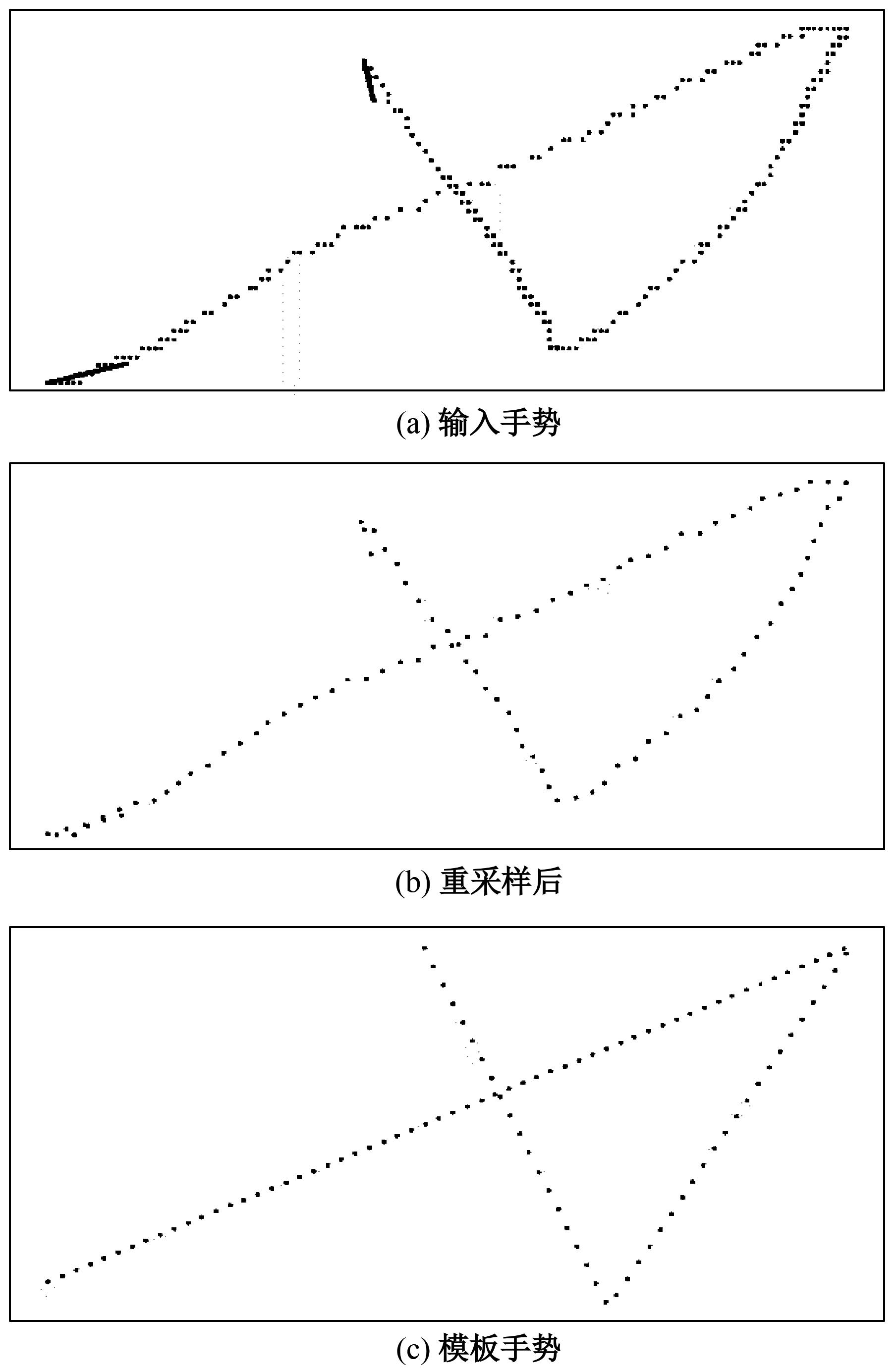

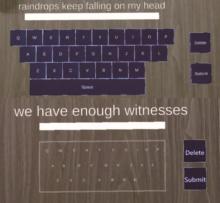

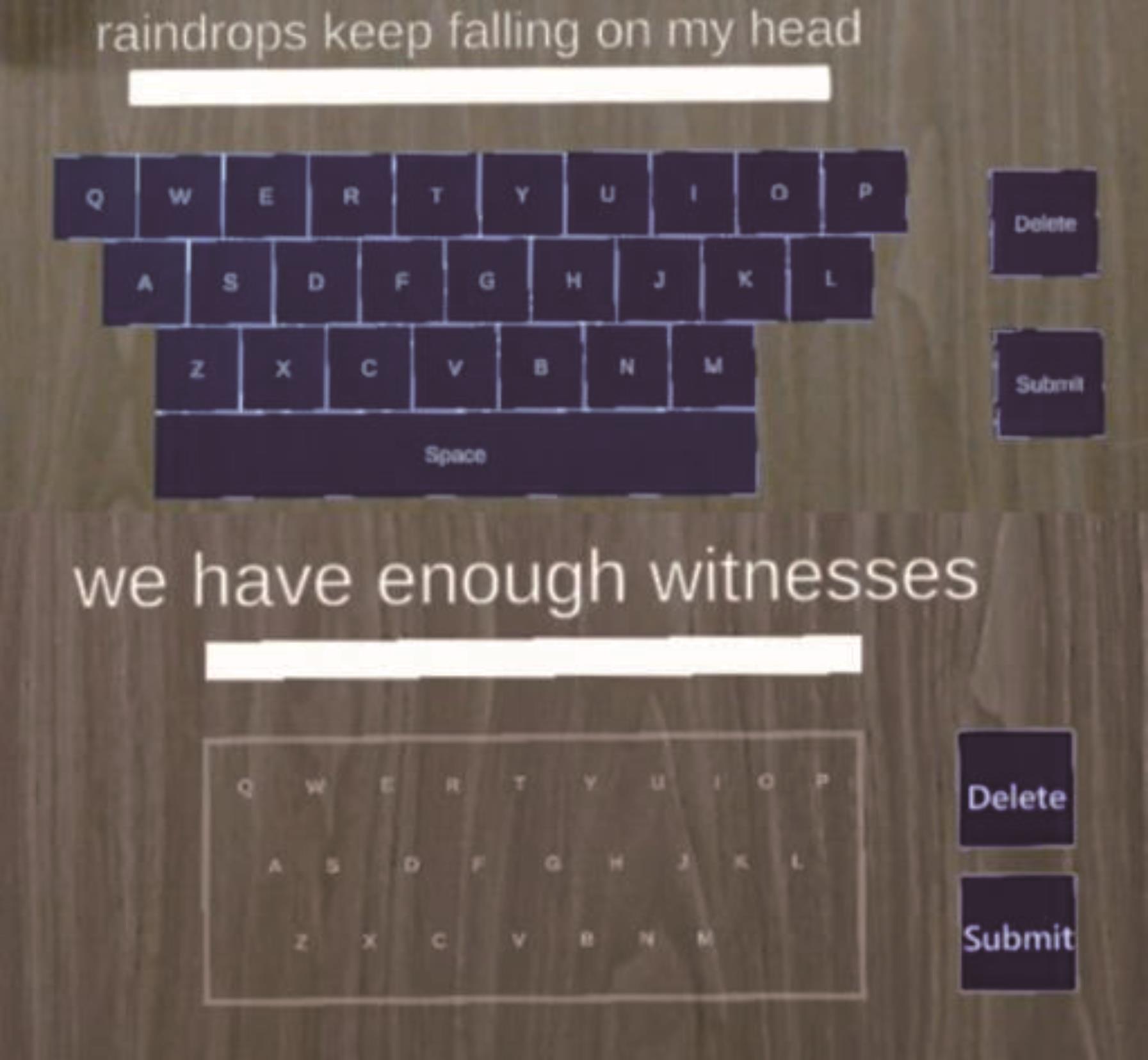

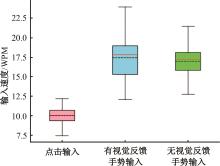

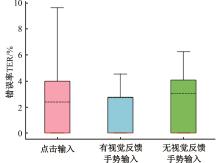

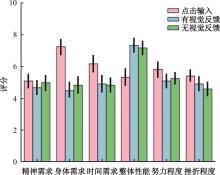

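Tap-based text input in current augmented reality environments suffers from low efficiency and visual occlusion. To address these problems, this paper proposes a new text input method that combines gesture input with an imaginary keyboard: users enter text by drawing word gestures inside a transparent box. First, using an imaginary keyboard in an augmented reality environment is verified to be feasible and practical. Second, a user study evaluates the performance of three input methods: tap input, gesture input with visual feedback, and gesture input without visual feedback (the proposed method). The results show that gesture input without visual feedback reaches an average speed of 16.95 WPM (words per minute), 6.92 WPM faster than tap input.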
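The abstract reports entry speed in WPM but does not state how it was computed. The sketch below shows the convention commonly used in text-entry studies, where one "word" is five characters (including spaces) and the first character is excluded because timing starts when it is entered. Whether the paper uses exactly this formula is an assumption; the function name `words_per_minute` and its parameters are hypothetical and chosen only for illustration.

```python
def words_per_minute(transcribed: str, seconds: float, chars_per_word: int = 5) -> float:
    """Return the text entry rate in words per minute (WPM).

    Assumes the common convention WPM = ((|T| - 1) / S) * 60 / 5, where |T| is
    the length of the transcribed text and S is the entry time in seconds.
    This is an illustrative assumption, not a formula taken from the paper.
    """
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    if chars_per_word <= 0:
        raise ValueError("chars_per_word must be positive")
    # The first character is not counted: timing starts when it is entered.
    counted_chars = max(len(transcribed) - 1, 0)
    chars_per_second = counted_chars / seconds
    return chars_per_second * 60.0 / chars_per_word


if __name__ == "__main__":
    # A 19-character phrase entered in 12 seconds.
    print(words_per_minute("the quick brown fox", 12.0))
```

Under this convention, a phrase of 19 characters entered in 12 seconds counts 18 characters, which gives 1.5 characters per second, or 18 WPM.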

CLC number:

- TP391.9

