Journal of Jilin University (Engineering and Technology Edition), 2024, Vol. 54, Issue (1): 232-239. doi: 10.13229/j.cnki.jdxbgxb.20220239

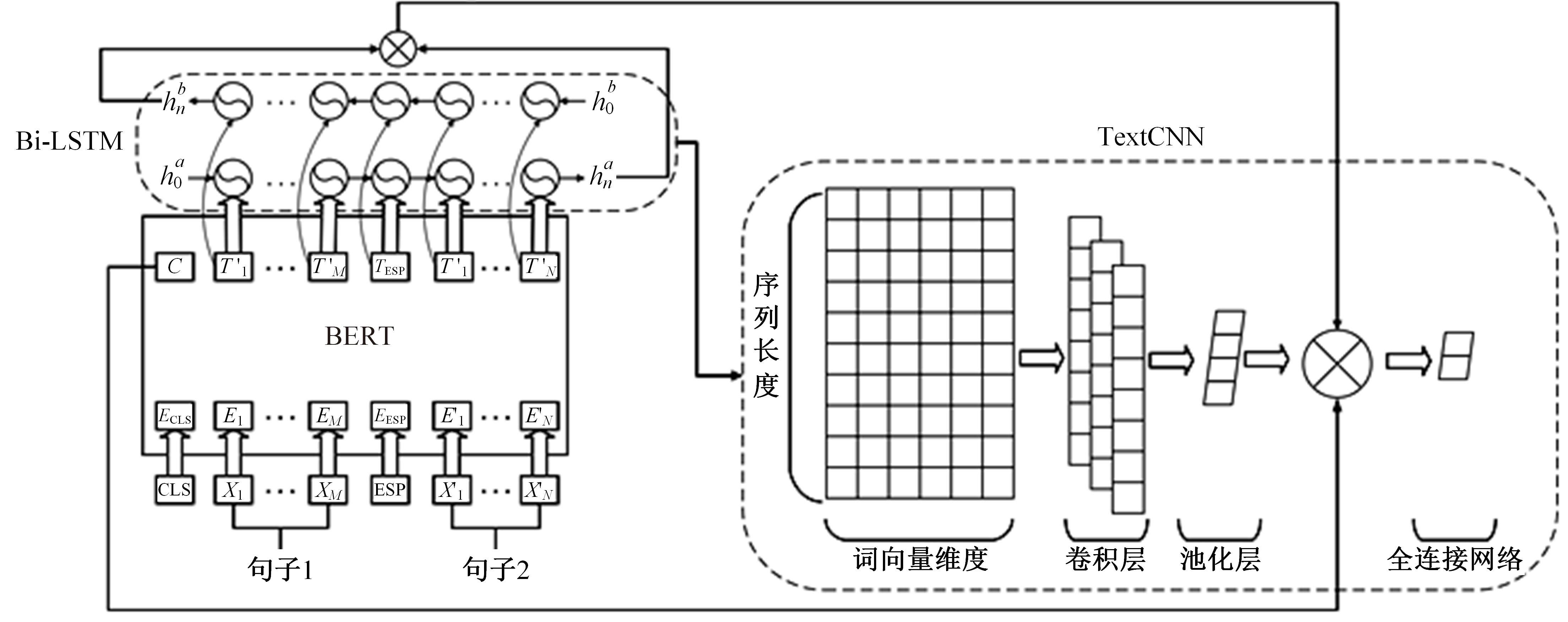

Long text semantic matching model based on BERT and dense composite network

Yue-lin CHEN1, Zhu-cheng GAO1, Xiao-dong CAI2

1. School of Mechanical and Electrical Engineering, Guilin University of Electronic Technology, Guilin 541000, China

2. School of Information and Communication, Guilin University of Electronic Technology, Guilin 541000, China

CLC Number: TP391.1

