Journal of Jilin University (Engineering and Technology Edition), 2024, Vol. 54, Issue 6: 1777-1787. doi: 10.13229/j.cnki.jdxbgxb.20221042

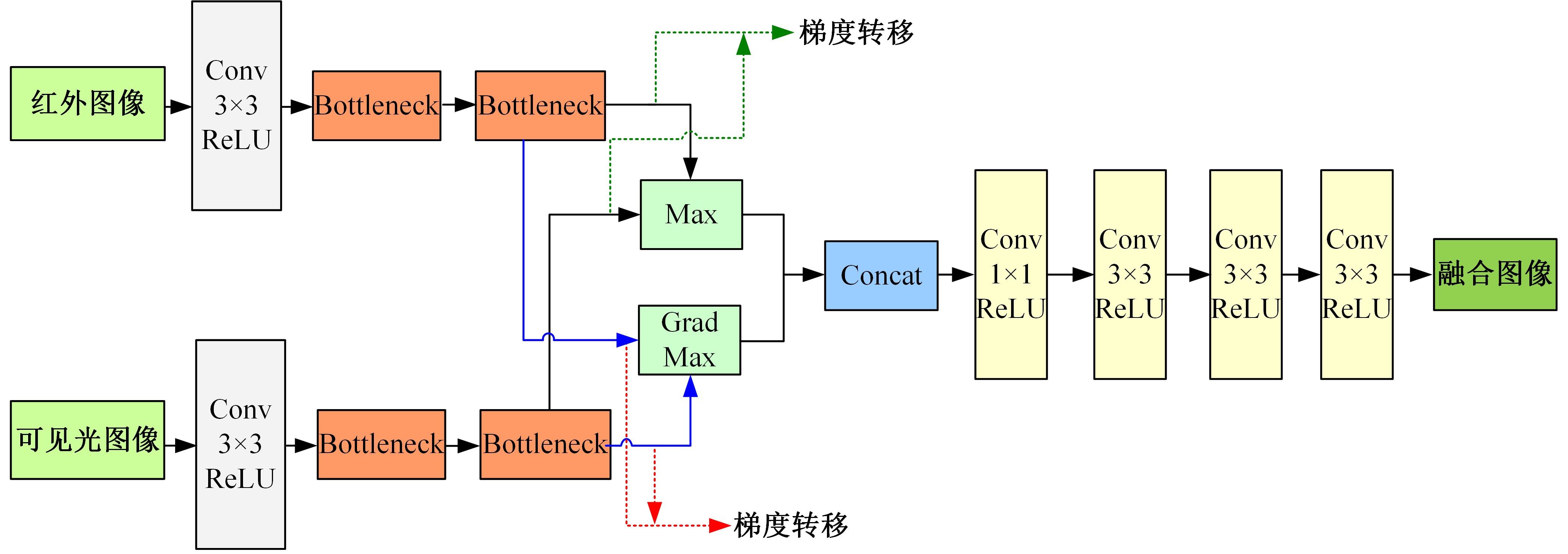

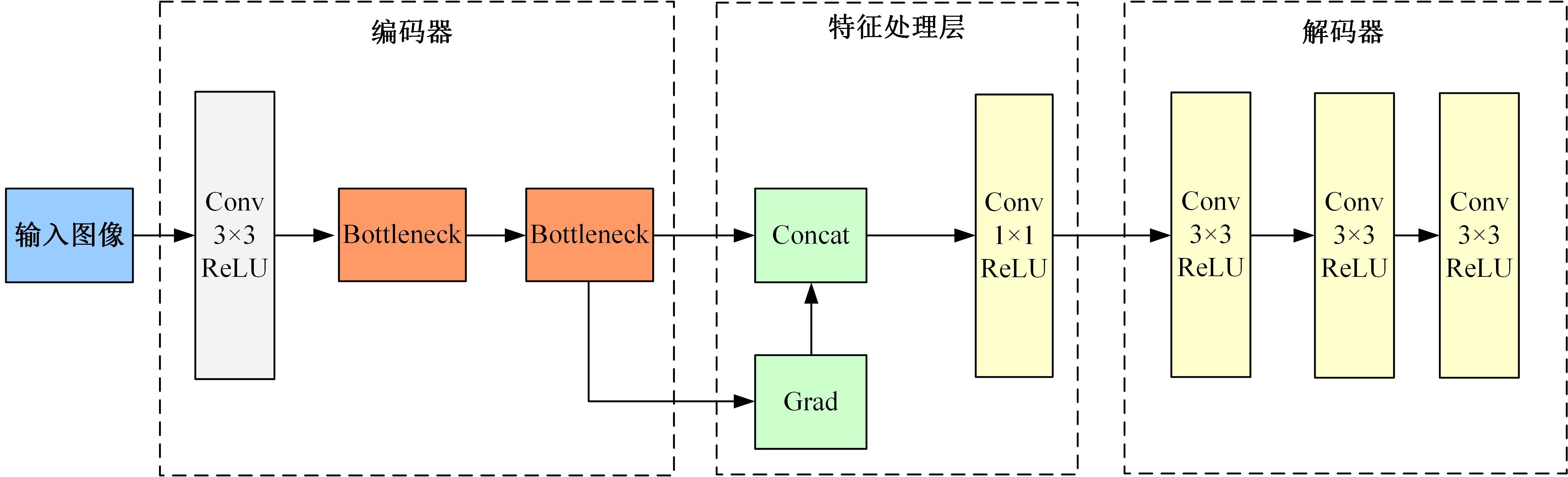

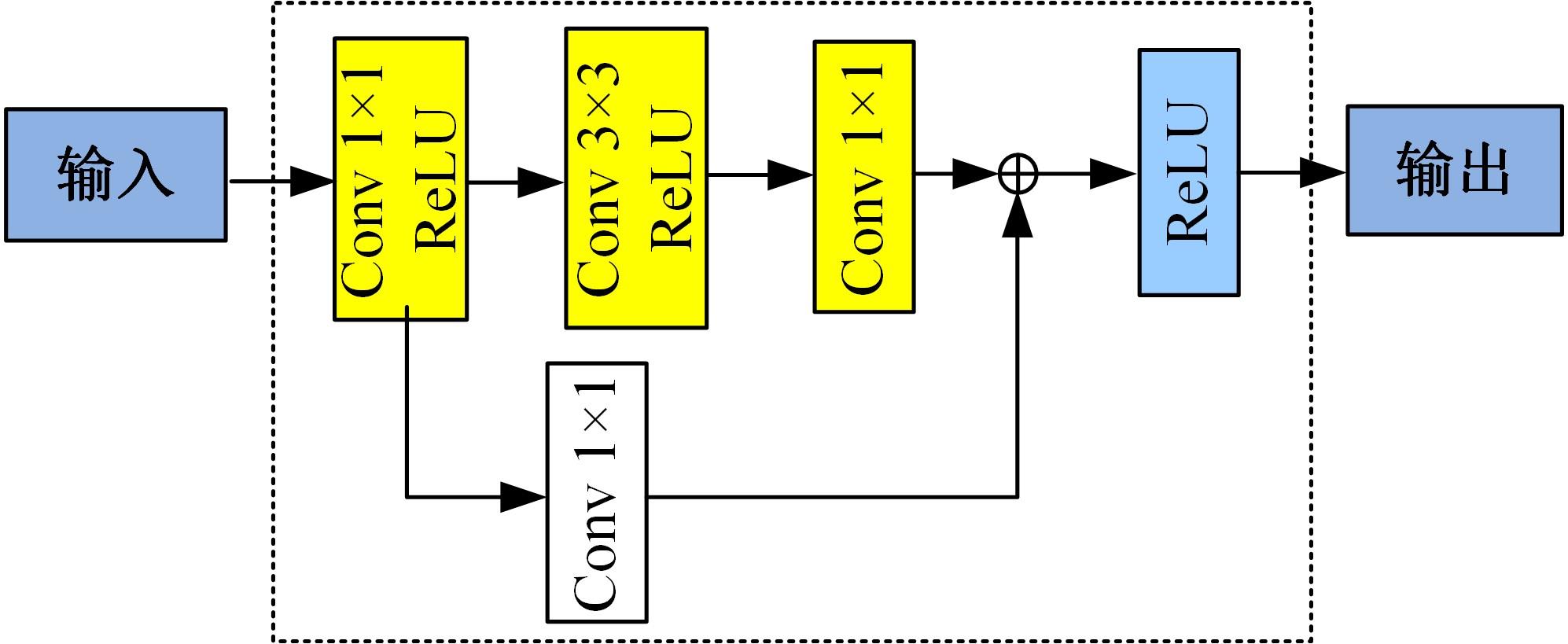

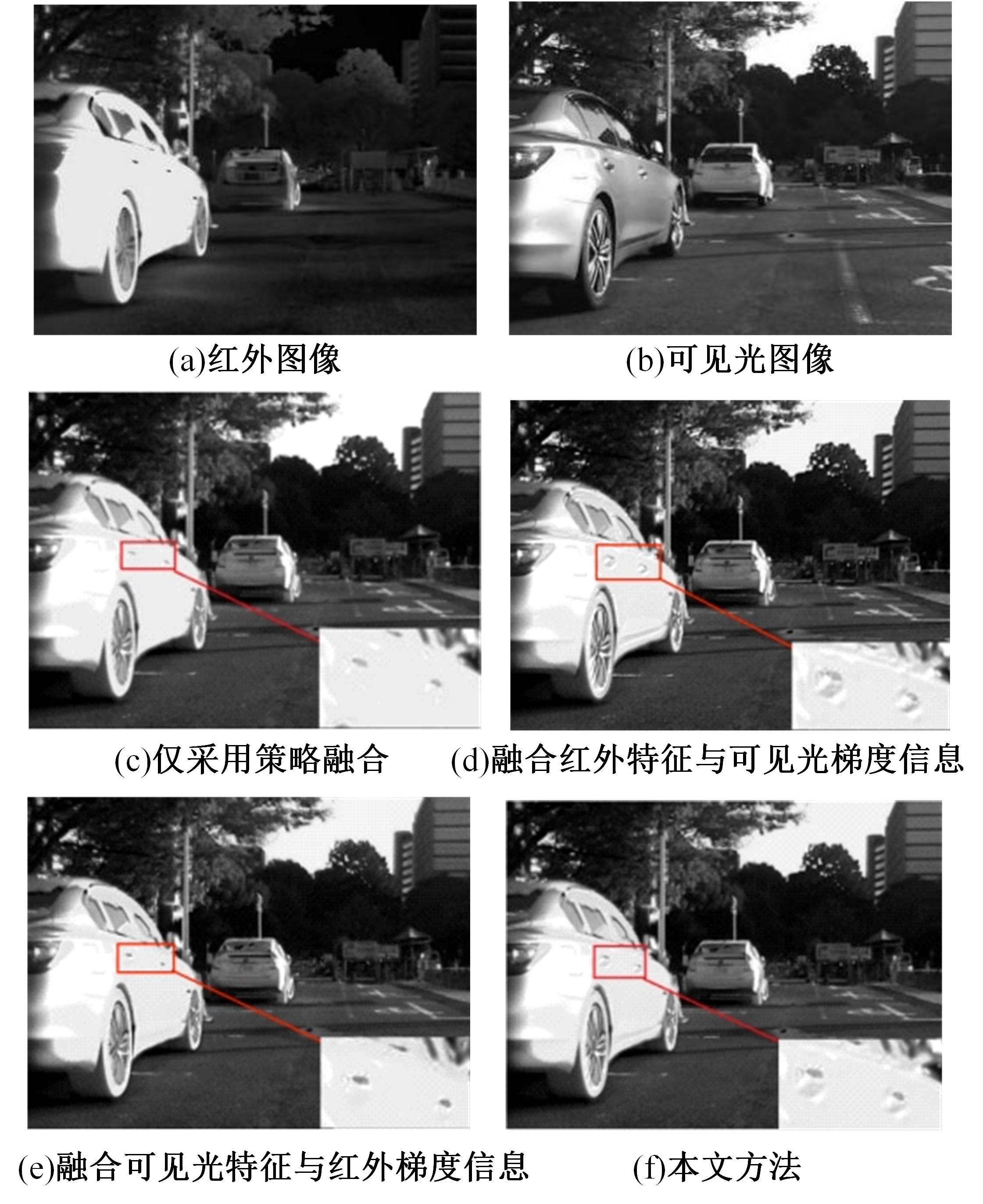

Infrared and visible image fusion based on gradient transfer and auto-encoder

Yan-feng LI1, Ming-yang LIU1, Jia-ming HU2, Hua-dong SUN2, Jie-yu MENG2, Ao-ying WANG2, Han-yue ZHANG1, Hua-min YANG1, Kai-xu HAN3

1. School of Computer Science and Technology, Changchun University of Science and Technology, Changchun 130022, China

2. School of Artificial Intelligence, Changchun University of Science and Technology, Changchun 130022, China

3. School of Electronics and Information Engineering, Beibu Gulf University, Qinzhou 535011, China

CLC Number: TP391.41

| 1 | 沈英, 黄春红, 黄峰,等. 红外与可见光图像融合技术的研究进展[J]. 红外与激光工程, 2021, 50(9): No.20200467. |

| Shen Ying, Huang Chun-hong, Huang Feng,et al. Research progress of infrared and visible image fusion technology[J].Infrared and Laser Engineering,2021, 50(9): No.20200467. | |

| 2 | 陈广秋, 陈昱存, 李佳悦, 等. 基于DNST和卷积稀疏表示的红外与可见光图像融合[J].吉林大学学报:工学版, 2021, 51(3): 996-1010. |

| Chen Guang-qiu, Chen Yu-cun, Li Jia-yue,et al.Infrared and visible image fusion based on discrete nonseparable shearlet transform and convolutional sparse representation[J]. Journal of Jilin University (Engineering and Technology Edition),2021,51(3): 996-1010. | |

| 3 | 李雄飞, 宋璐, 张小利. 基于协同经验小波变换的遥 感图像融合[J]. 吉林大学学报: 工学版, 2019, 49(4): 1307-1319. |

| Li Xiong-fei, Song Lu, Zhang Xiao-li. Remote sensing image fusion based on cooperative empirical wave let transform[J].Journal of Jilin University (Engineer-ing and Technology Edition), 2019, 49(4):1307-109. | |

| 4 | Zhang X C, Ye P, Leung H,et al. Object fusion tracking based on visible and infrared images:a comprehensive review[J]. Information Fusion, 2020, 63(1): 166-187. |

| 5 | 陈卓, 方明, 柴旭, 等. 红外与可见光图像融合的U-GAN模型[J]. 西北工业大学学报, 2020, 38(4): 904-912. |

| Chen Zhuo, Fang Ming, Chai Xu, et al. U-GAN model for infrared and visible images fusion[J]. Journal of Northwestern Polytechnical University, 2020, 38(4): 904-912. | |

| 6 | Ma J Y, Ma Y, Li C. Infrared and visible image fusion methods and applications: a survey[J]. Information Fusion, 2019, 45(1): 153-178. |

| 7 | 陈潮起, 孟祥超, 邵枫, 等. 一种基于多尺度低秩分解的红外与可见光图像融合方法[J]. 光学学报, 2020, 40(11): 72-80. |

| Chen Chao-qi, Meng Xiang-chao, Shao Feng, et al. Infrared and visible image fusion method based on multiscale low-rank decomposition[J]. Acta Optica Sinica, 2020, 40(11): 72-80. | |

| 8 | 霍星, 邹韵, 陈影, 等. 双尺度分解和显著性分析相结合的红外与可见光图像融合[J]. 中国图象图形学报, 2021, 26(12): 2813-2825. |

| Huo Xing, Zou Yun, Chen Ying, et al.Dual-scale decomposition and saliency analysis based infrared and visible image fusion[J]. Journal of Image and Graphics, 2021, 26(12): 2813-2825. | |

| 9 | 裴佩佩, 杨艳春, 党建武, 等. 基于滚动引导滤波器和卷积稀疏表示的红外与可见光图像融合方法[J]. 激光与光电子学进展, 2022, 59(12): 56-63. |

| Pei Pei-pei, Yang Yan-chun, Dang Jian-wu, et al. Infrared and visible image fusion method based on rolling guide filter and convolutional sparse representation[J]. Laser and Optoelectronics Progress, 2022, 59(12): 56-63. | |

| 10 | 申铉京, 张雪峰, 王玉, 等. 像素级卷积神经网络多聚焦图像融合算法[J]. 吉林大学学报: 工学版, 2022, 52(8): 1857-1864. |

| Shen Xuan-jing, Zhang Xue-feng, Wang Yu,et al. Multi⁃focus image fusion algorithm based on pixel⁃level convolutional neural network[J]. Journal of Jilin University (Engineering and Technology Edition), 2022, 52(8): 1857-1864. | |

| 11 | Liu Y, Chen X, Peng H, et al. Multi-focus image fusion with a deep convolutional neural network[J].Information Fusion, 2017, 36(1): 191-207. |

| 12 | Prabhakar K R, Srikar V S, Babu R V. DeepFuse: a deep unsupervised approach for exposure fusion with extreme exposure image pairs[C]∥Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 2017: 4714-4722. |

| 13 | Li H, Wu X J. DenseFuse: a fusion approach to infrared and visible images[J]. IEEE Transactions on Image Processing, 2018, 28(5): 2614-2623. |

| 14 | Tang L, Yuan J, Zhang H, et al. PIAFusion: a progressive infrared and visible image fusion network based on illumination aware[J]. Information Fusion, 2022,3(7): 79-92. |

| 15 | Xu H, Ma J, Jiang J, et al. U2Fusion: a unified unsupervised image fusion network[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020,44(1): 502-518. |

| 16 | Ha Q, Watanabe K, Karasawa T, et al.MFNet: towards realtime semantic segmentation for autonomous vehicles with multi-spectral scenes[C]∥Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Vancouver, Canada, 2017: 5108-5115. |

| 17 | Ma J, Wei Y, Liang P, et al. FusionGAN: a generative adversarial network for infrared and visible image fusion[J]. Information Fusion, 2019, 48(1): 11-26. |

| 18 | Ma J, Zhang H, Shao Z,et al. GANMcC: a generative adversarial network with multiclassification constraints for infrared and visible image fusion[J]. IEEE Transactions on Instrumentation and Measurement, 2020, 70: 1-14. |

| 19 | Li H, Wu X J, Josef K. RFN-Nest: an end-to-end residual fusion network for infrared and visible images[J]. Information Fusion, 2021, 73: 72-86. |

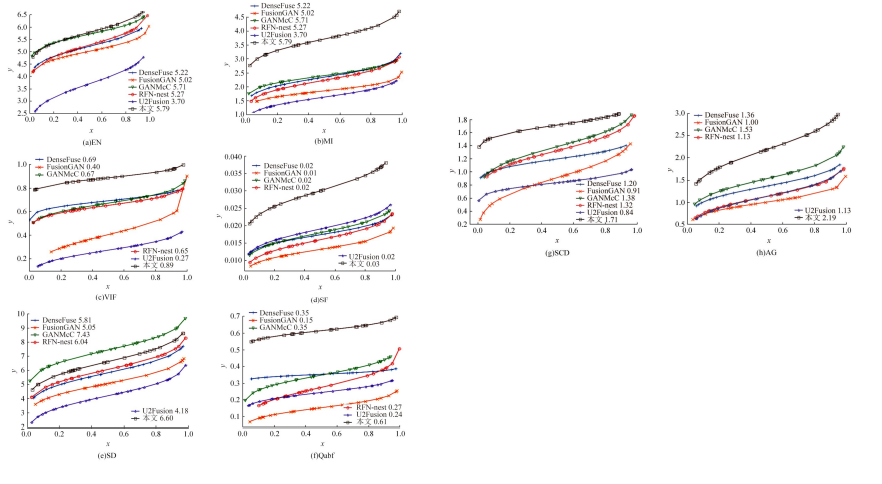

| 20 | van Aardt J.Assessment of image fusion procedures using entropy, image quality, and multispectral classifification[J]. Journal of Applied Remote Sensing, 2008, 2(1): No. 023522. |

| 21 | Qu G, Zhang D, Yan P. Information measure for performance of image fusion[J]. Electronics Letters, 2002, 38(7): 313-315. |

| 22 | Eskicioglu A M, Fisher P S.Image quality measures and their performance[J]. IEEE Trans. Commun., 1995, 43(12): 2959-2965. |

| 23 | Han Y, Cai Y, Cao Y, et al. A new image fusion performance metric based on visual information fifidelity[J]. Information Fusion, 2013,14(2): 127-135. |
