Journal of Jilin University (Engineering and Technology Edition), 2025, Vol. 55, Issue (1): 93-104. doi: 10.13229/j.cnki.jdxbgxb.20230313

Previous Articles Next Articles

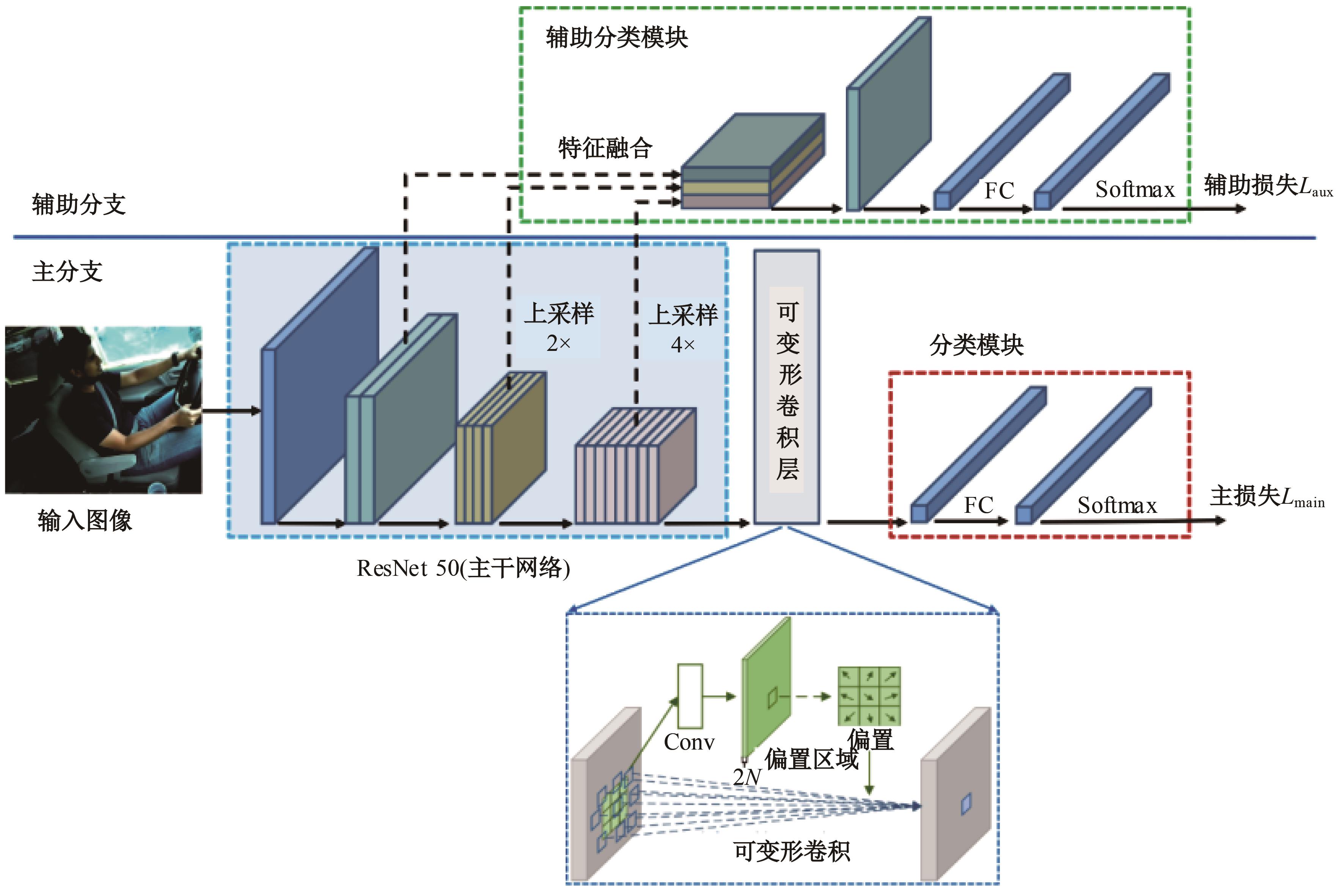

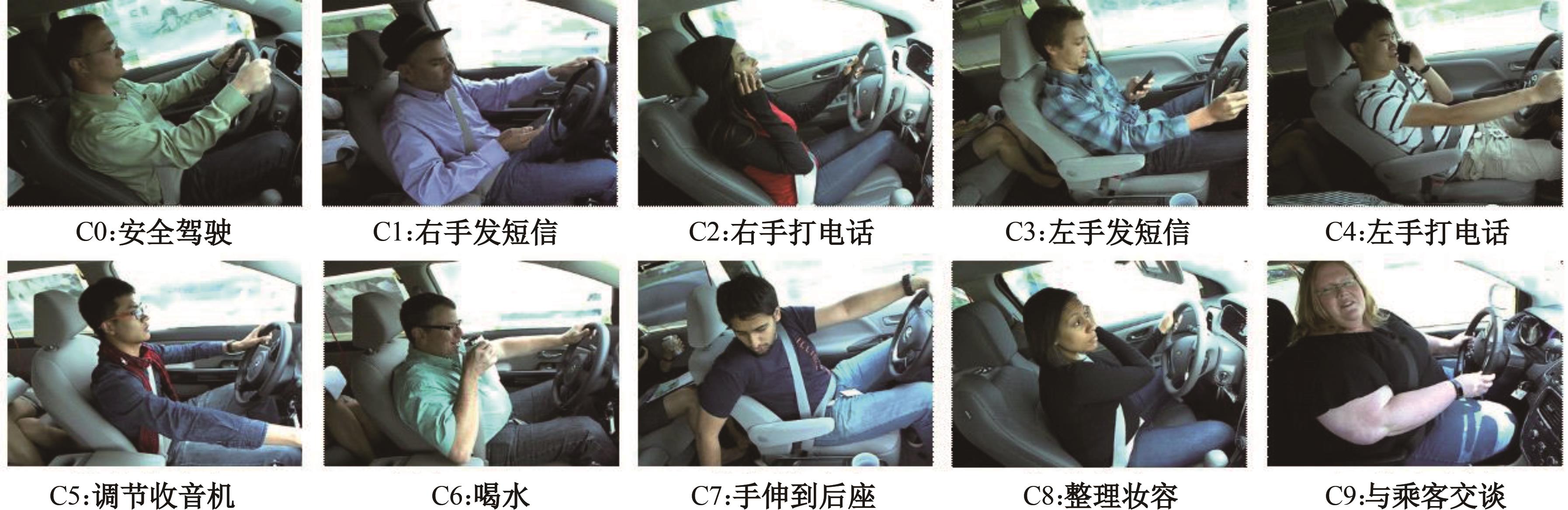

Driver behavior recognition method based on dual-branch and deformable convolutional neural networks

Hong-yu HU, Zheng-guang ZHANG, You QU, Mu-yu CAI, Fei GAO, Zhen-hai GAO

- State Key Laboratory of Automotive Simulation and Control, Jilin University, Changchun 130022, China

CLC Number:

- U471.3

| 1 | Dogan E, Rahal M C, Deborne R, et al. Transition of control in a partially automated vehicle: effects of anticipation and non-driving-related task involvement [J]. Transportation Research Part F: Traffic Psychology and Behaviour, 2017, 46(2017): 205-215. |

| 2 | Deeb J, Zakaria F, Kamali W, et al. Method of detection of early falling asleep while driving using EOG analysis[C]∥Proceedings of the 4th International Conference on Advances in Biomedical Engineering (ICABME), Beirut, Lebanon, 2017:150-153. |

| 3 | Halomoan J, Ramli K, Sudiana D. Statistical analysis to determine the ground truth of fatigue driving state using ECG recording and subjective reporting[C]∥Proceedings of the 1st International Conference on Information Technology, Advanced Mechanical and Electrical Engineering, Yogyakarta, Indonesia, 2020: 244-248. |

| 4 | Satti A T, Kim J, Yi E, et al. Microneedle array electrode-based wearable EMG system for detection of driver drowsiness through steering wheel grip[J]. Sensors, 2021, 21(15): 5091-5104. |

| 5 | Ebrahimian S, Nahvi A, Tashakori M, et al. Multi-level classification of driver drowsiness by simultaneous analysis of ECG and respiration signals using deep neural networks[J]. International Journal of Environmental Research and Public Health, 2022, 19(17): 10736-10752. |

| 6 | Jantan S, Ahmad S A, Soh A C, et al. A multi-model analysis for driving fatigue detection using EEG signals[C]∥Proceedings of the 7th IEEE-EMBS Conference on Biomedical Engineering and Sciences(IECBES), Kuala Lumpur, Malaysia, 2022: 183-188. |

| 7 | Pakdamanian E, Sheng S, Baee S, et al. DeepTake: prediction of driver takeover behavior using multimodal data[C]∥Proceedings of the CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 2021: No.3445563. |

| 8 | Wang X, Xu R, Zhang S, et al. Driver distraction detection based on vehicle dynamics using naturalistic driving data[J]. Transportation Research Part C: Emerging Technologies, 2022, 136(2022): 103561-103572. |

| 9 | Li Z, Chen L, Nie L, et al. A novel learning model of driver fatigue features representation for steering wheel angle[J]. IEEE Transactions on Vehicular Technology, 2022, 71(1): 269-281. |

| 10 | Tang M, Wu F, Zhao L L, et al. Detection of distracted driving based on MultiGranularity and Middle-Level features[C]∥Chinese Automation Congress (CAC), Shanghai, China, 2020: 2717-2722. |

| 11 | Lin Y, Cao D, Fu Z, et al. A lightweight attention-based network towards distracted driving behavior recognition[J]. Applied Sciences, 2022, 12(9): 4191-4208. |

| 12 | Huang T, Fu R, Chen Y, et al. Real-time driver behavior detection based on deep deformable inverted residual network with an attention mechanism for human-vehicle co-driving system[J]. IEEE Transactions on Vehicular Technology, 2022, 71(12): 12475-12488. |

| 13 | Liu D, Yamasaki T, Wang Y, et al. Toward extremely lightweight distracted driver recognition with distillation-based neural architecture search and knowledge transfer[J]. IEEE Transactions on Intelligent Transportation Systems, 2022, 24(1): 764-777. |

| 14 | He K, Zhang X, Ren S, et al. Deep residual learning for image recognition[C]∥Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 770-778. |

| 15 | Deng J, Dong W, Socher R, et al. ImageNet: a large-scale hierarchical image database[C]∥Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, USA, 2009: 248-255. |

| 16 | Dai J F, Qi H Z, Xiong Y W, et al. Deformable convolutional networks[C]∥Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 2017: 764-773. |

| 17 | Szegedy C, Liu W, Jia Y, et al. Going deeper with convolutions[C]∥Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, USA, 2015: No.7298594. |

| 18 | Kaggle Inc. State Farm distracted driver detection[DB/OL]. [2023-03-25]. |

| 19 | Omeiza D, Speakman S, Cintas C, et al. Smooth Grad-CAM++: an enhanced inference level visualization technique for deep convolutional neural network models[J/OL]. [2023-03-26]. |

| 20 | Krizhevsky A, Sutskever I, Hinton G E. ImageNet classification with deep convolutional neural networks[J]. Communications of the ACM, 2017, 60(6): 84-90. |

| 21 | Huang G, Liu Z, Van Der Maaten L, et al. Densely connected convolutional networks[C]∥Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, USA, 2017: 4700-4708. |

| 22 | Szegedy C, Vanhoucke V, Ioffe S, et al. Rethinking the inception architecture for computer vision[C]∥Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 2818-2826. |

| 23 | Su J, Vargas D V, Sakurai K. One pixel attack for fooling deep neural networks[J]. IEEE Transactions on Evolutionary Computation, 2019, 23(5): 828-841. |
