Journal of Jilin University (Engineering and Technology Edition), 2024, Vol. 54, Issue (1): 209-220. doi: 10.13229/j.cnki.jdxbgxb.20220202

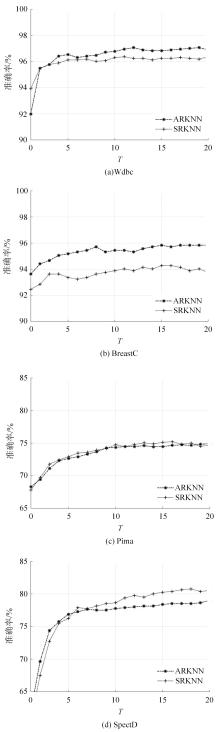

Random K-nearest neighbor algorithm with learning process

Zhong-liang FU, Xiao-qing CHEN, Wei REN, Yu YAO

- Chengdu Institute of Computer Applications, University of Chinese Academy of Sciences, Chengdu 610299, China

CLC Number:

- TP18

| 1 | Cover T M, Hart P E. Nearest neighbor pattern classification[J]. IEEE Transactions on Information Theory, 1967, 13(1): 21-27. |

| 2 | Hart P E. The condensed nearest neighbor rule[J]. IEEE Transactions on Information Theory, 1968, 14(3): 515-516. |

| 3 | Li Rong-lu, Hu Yun-fa. A density-based method for reducing the amount of training data in kNN text classification[J]. Journal of Computer Research and Development, 2004, 41(4): 539-545. |

| 4 | Zhang Xiao-fei, Huang He-yan. An improved KNN text categorization algorithm by adopting cluster technology[J]. Pattern Recognition and Artificial Intelligence, 2009, 22(6): 936-940. |

| 5 | Hwang W J, Wen K W. Fast KNN classification algorithm based on partial distance search[J]. Electronics Letters, 1998, 34(21): 2062-2063. |

| 6 | Pan J S, Qiao Y L, Sun S H. A fast k-nearest neighbors classification algorithm[J]. IEICE Transactions Fundamentals, 2004, 87(4): 961-961. |

| 7 | Samet H. K-nearest neighbor finding using MaxNearestDist[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2008, 30(2): 243-252. |

| 8 | Guo G D, Wang H, Bell D, et al. Using KNN model for automatic text categorization[J]. Soft Computing: A Fusion of Foundations, Methodologies and Applications, 2006, 10(5): 423-430. |

| 9 | Zhu Fu-bao, Xie Li-jie, Tang Meng-meng, et al. Improved KNN classification algorithm based on fuzzy C-means[J]. Journal of Central China Normal University (Natural Science Edition), 2017, 51(6): 754-759. |

| 10 | Samet H. The Design and Analysis of Spatial Data Structures[M]. Reading: Addison-Wesley, 1990. |

| 11 | Shang Wen-qian, Huang Hou-kuan, Liu Yu-ling, et al. Research on the algorithm of feature selection based on Gini index for text categorization[J]. Journal of Computer Research and Development, 2006, 43(10): 1688-1694. |

| 12 | Lin Yong-min, Zhu Wei-dong. Study on the application of fuzzy KNN to text categorization[J]. Computer Applications and Software, 2008, 25(9): 185-187. |

| 13 | Thanh N P, Kappas M. Comparison of random forest, k-nearest neighbor, and support vector machine classifiers for land cover classification using Sentinel-2 imagery[J]. Sensors, 2018, 18(1): 18. |

| 14 | Huang Guang-hua, Yin Feng, Feng Jiu-lin. An improved KNN algorithm based on cross validation and distance weighting[J]. Journal of Southwest Minzu University (Natural Science Edition), 2020, 46(2): 172-177. |

| 15 | Gou J P, Ma H X, Ou W H, et al. A generalized mean distance-based k-nearest neighbor classifier[J]. Expert Systems with Applications, 2019, 115: 356-372. |

| 16 | Gou J P, Qiu W M, Zhang Y, et al. A local mean representation-based K-nearest neighbor classifier[J]. ACM Transactions on Intelligent Systems and Technology, 2019, 29(10): 1-25. |

| 17 | Bicego M, Loog M. Weighted K-nearest neighbor revisited[C]∥The 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 2016: 1642-1647. |

| 18 | Gou J P, Qiu W M, Zhang Y, et al. Locality constrained representation-based K-nearest neighbor classification[J]. Knowledge-Based Systems, 2019, 167(3): 38-52. |

| 19 | Ma H, Gou J, Ou W, et al. A new nearest neighbor classifier based on multi-harmonic mean distances[C]∥International Conference on Security, Pattern Analysis, and Cybernetics (SPAC), Shenzhen, China, 2017: 115-125. |

| 20 | Zhang S C, Li X L, Zong M, et al. Learning k for kNN classification[J]. ACM Transactions on Intelligent Systems and Technology, 2017, 8(3): 1-19. |

| 21 | Zhong X F, Guo S Z, Gao L, et al. An improved k-NN classification with dynamic k[C]∥Proceedings of the 9th International Conference on Machine Learning and Computing, Singapore, 2017: 211-216. |

| 22 | Li B, Chen Y, Chen Y. The nearest neighbor algorithm of local probability centers[J]. IEEE Transactions on Systems, Man, and Cybernetics, Part B: Cybernetics, 2008, 38(1): 141-154. |

| 23 | Breiman L. Bagging predictors[J]. Machine Learning, 1996, 24(2): 123-140. |

| 24 | Freund Y, Schapire R E. A decision-theoretic generalization of on-line learning and an application to boosting[J]. Journal of Computer and System Sciences, 1997, 55(1): 119-139. |

| 25 | Fu Zhong-liang, Zhang Dan-pu, Wang Li-li. Improvement on AdaBoost for multi-label classification[J]. Journal of Sichuan University (Engineering Science Edition), 2015, 47(5): 103-109. |

| 26 | Breiman L. Random forests[J]. Machine Learning, 2001, 45(1): 5-32. |

| 27 | Fu Zhong-liang. Effective property and best combination of classifier linear combination[J]. Journal of Computer Research and Development, 2009, 46(7): 1206-1216. |

