Journal of Jilin University (Engineering and Technology Edition), 2024, Vol. 54, Issue 8: 2307-2312. doi: 10.13229/j.cnki.jdxbgxb.20230128


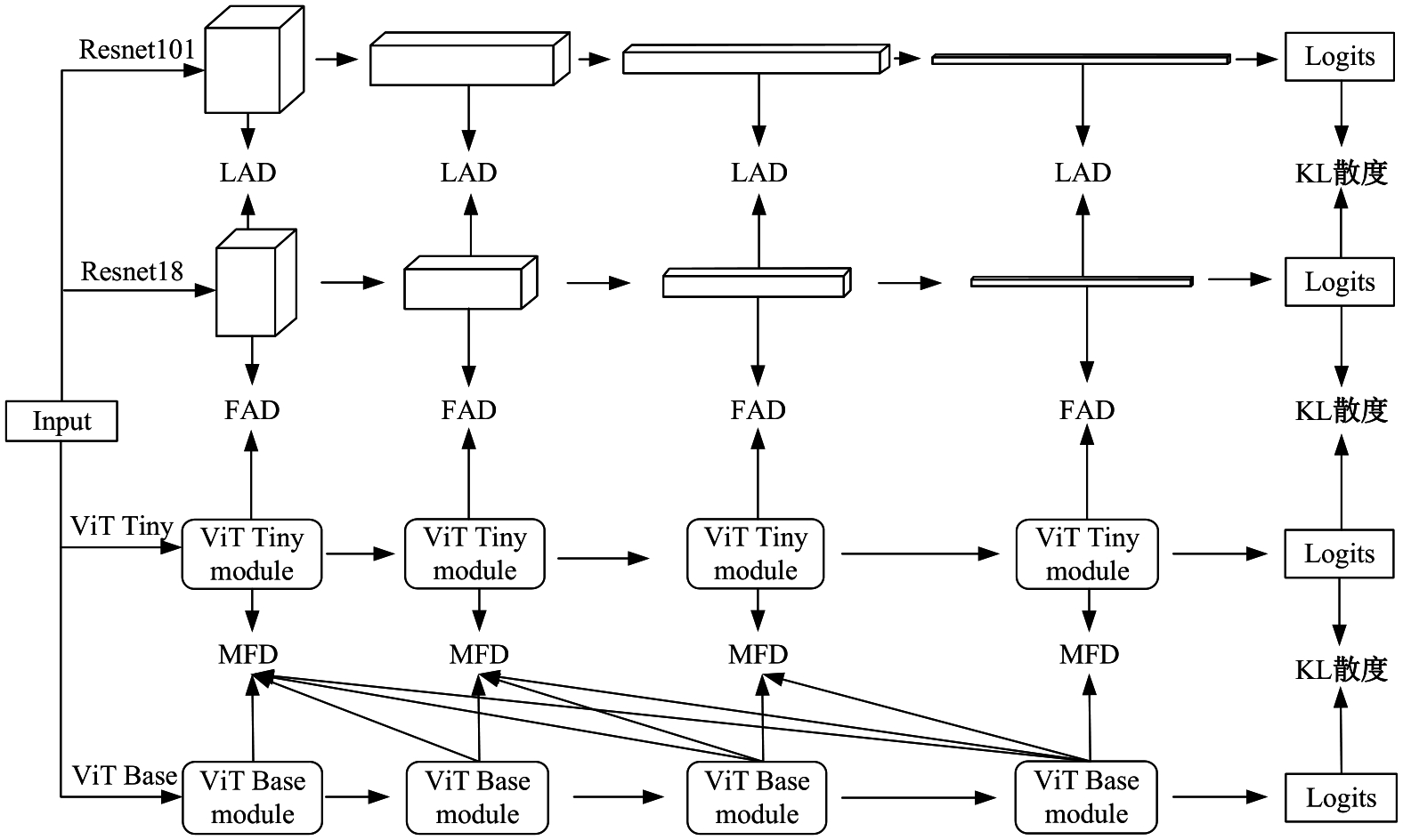

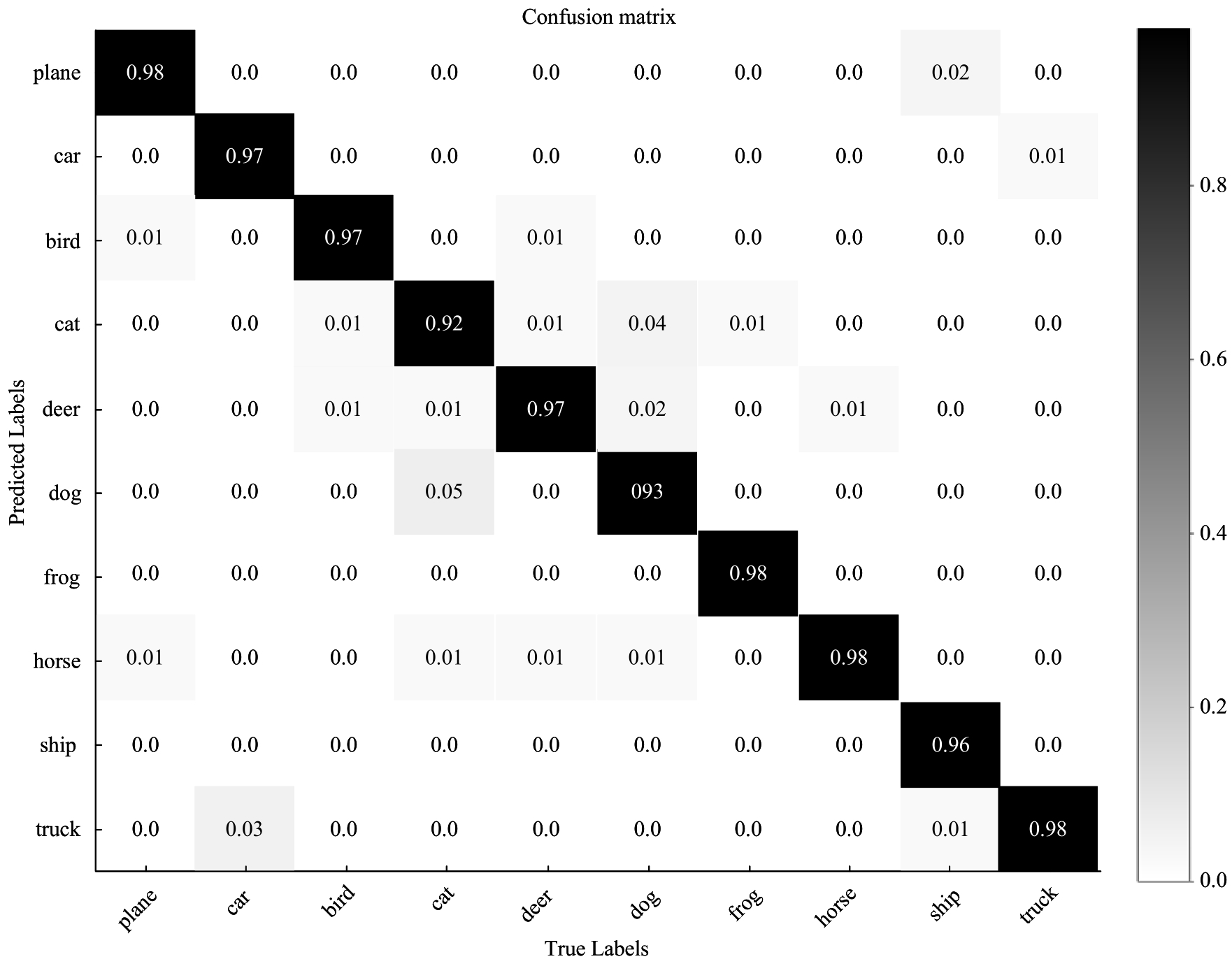

Image classification framework based on knowledge distillation

Hong-wei ZHAO1, Hong WU1, Ke MA2, Hai LI1

1. College of Computer Science and Technology, Jilin University, Changchun 130012, China
2. School of Mechanical and Aerospace Engineering, Jilin University, Changchun 130025, China

CLC Number: TP391
