Journal of Jilin University (Engineering and Technology Edition), 2021, Vol. 51, Issue 5: 1775-1784. doi: 10.13229/j.cnki.jdxbgxb20200573

• Computer Science and Technology •


Remote sensing image fusion algorithm based on relative total variation structure extraction

Xiong-fei LI (1, 2), Jia-jing WU (1, 2), Xiao-li ZHANG (1, 2), Ze-yu WANG (2), Yun-cong FENG (2, 3)

1. College of Software, Jilin University, Changchun 130012, China
2. Key Laboratory of Symbolic Computation and Knowledge Engineering of Ministry of Education, Jilin University, Changchun 130012, China
3. College of Computer Science and Engineering, Changchun University of Technology, Changchun 130012, China

Abstract:

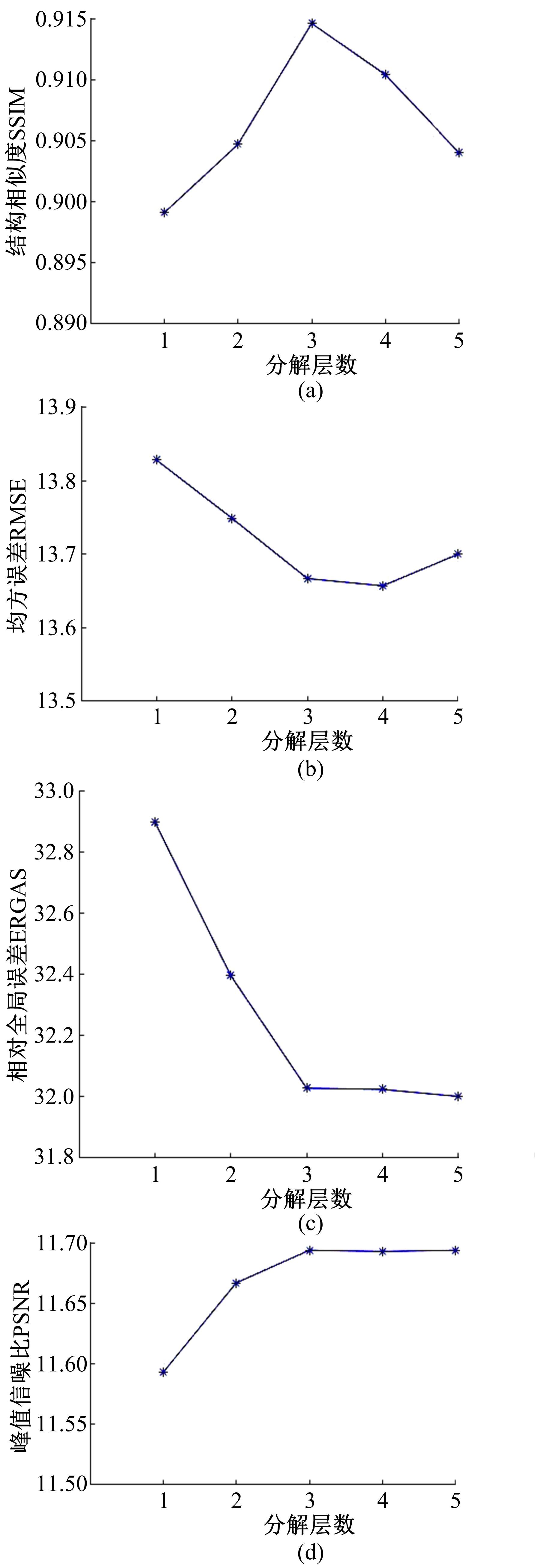

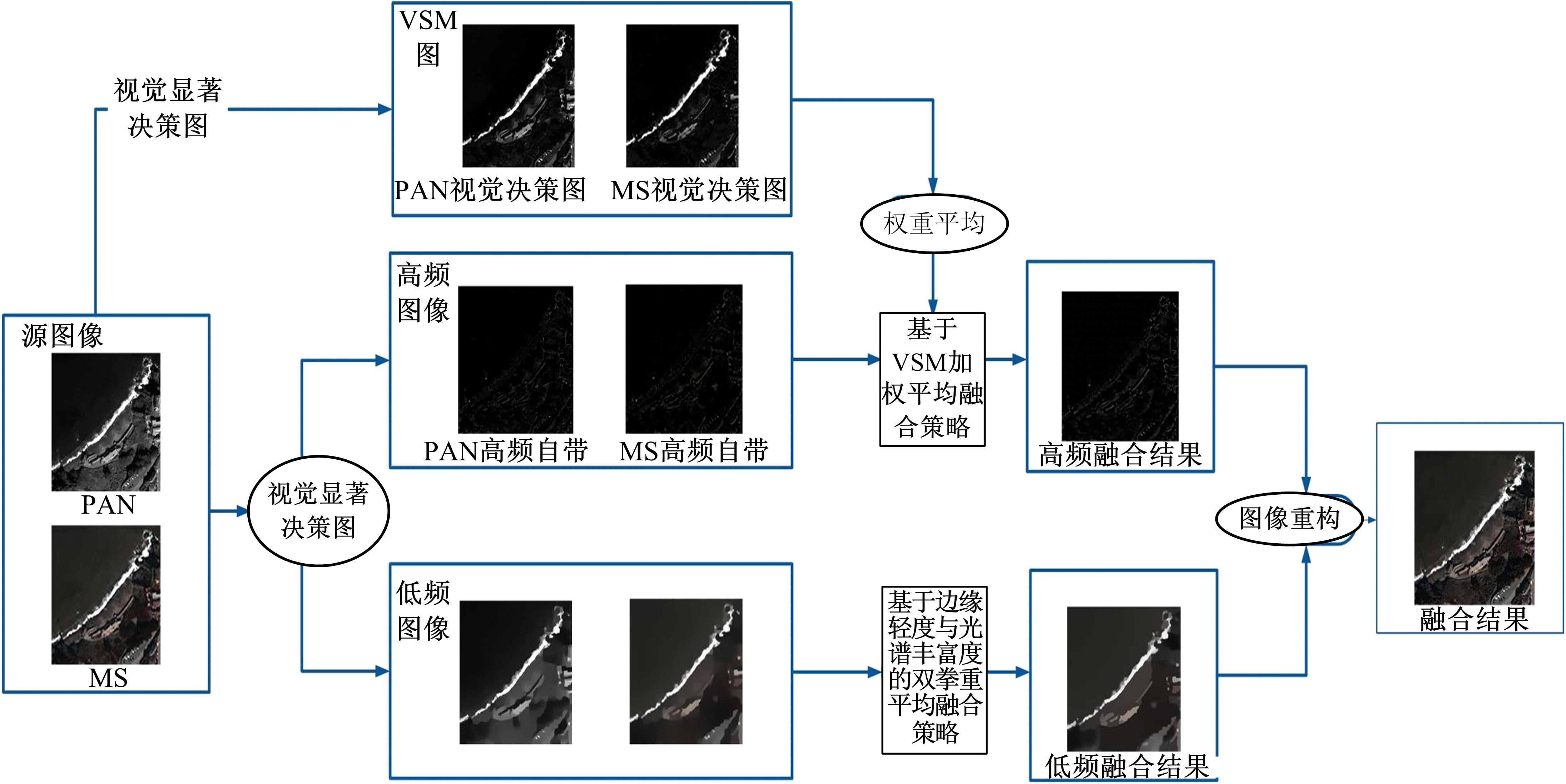

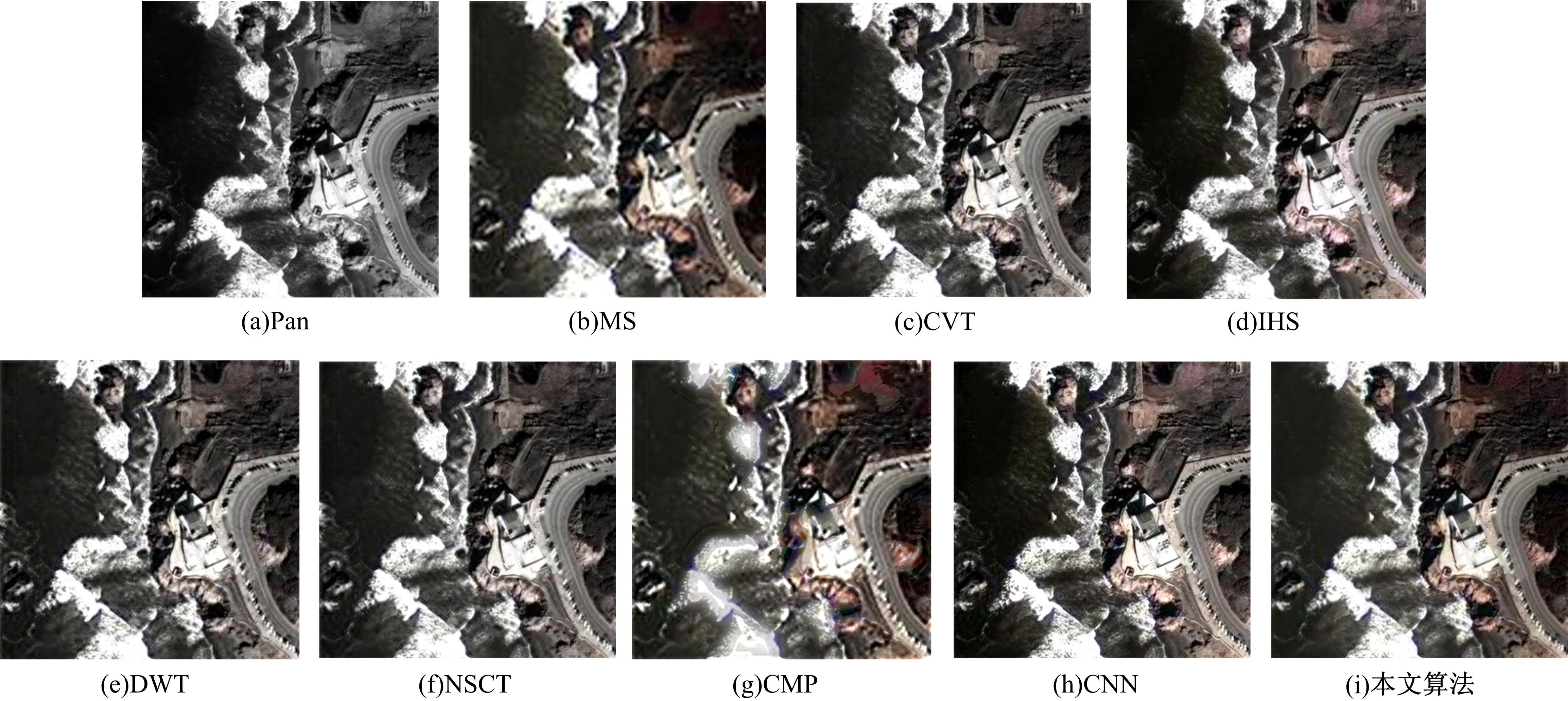

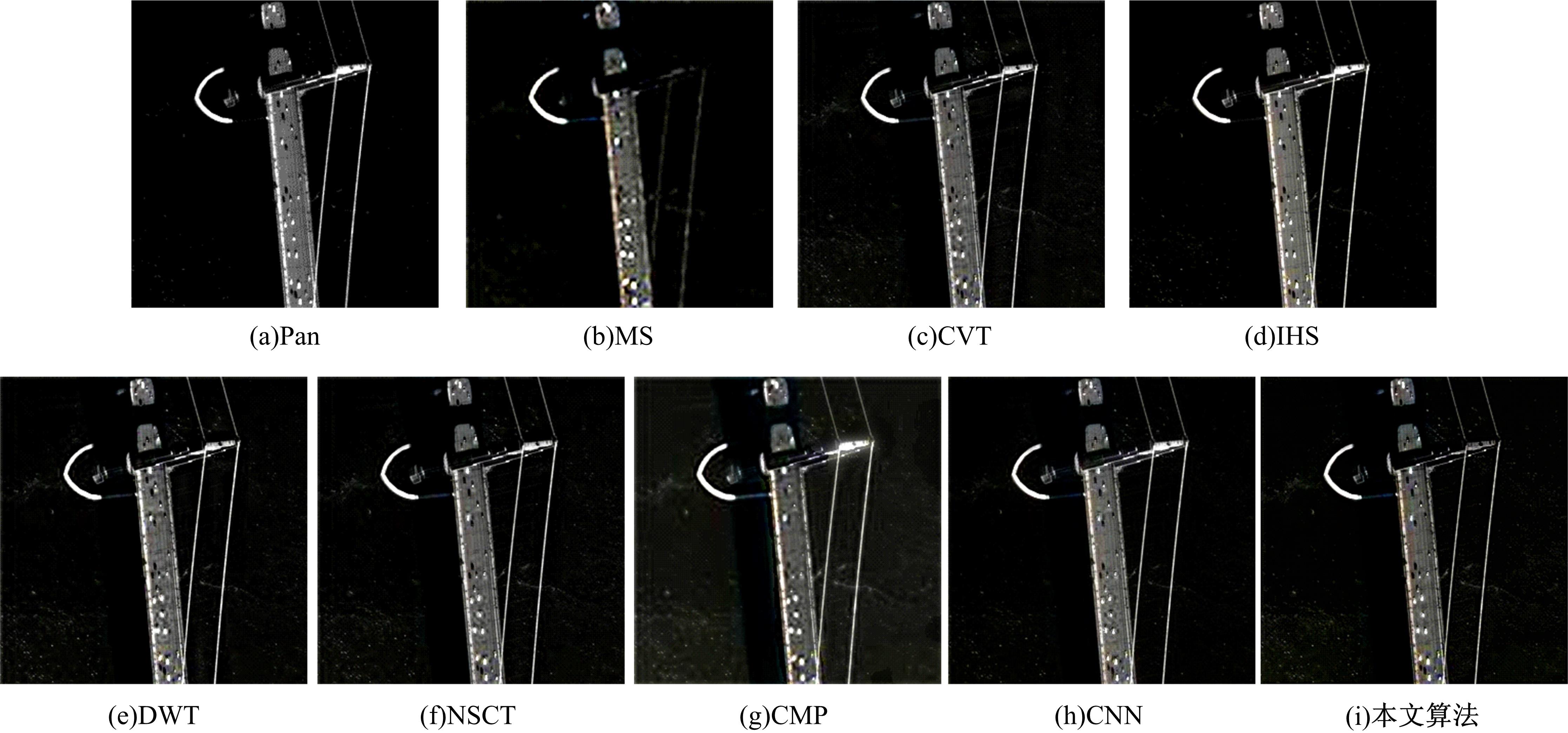

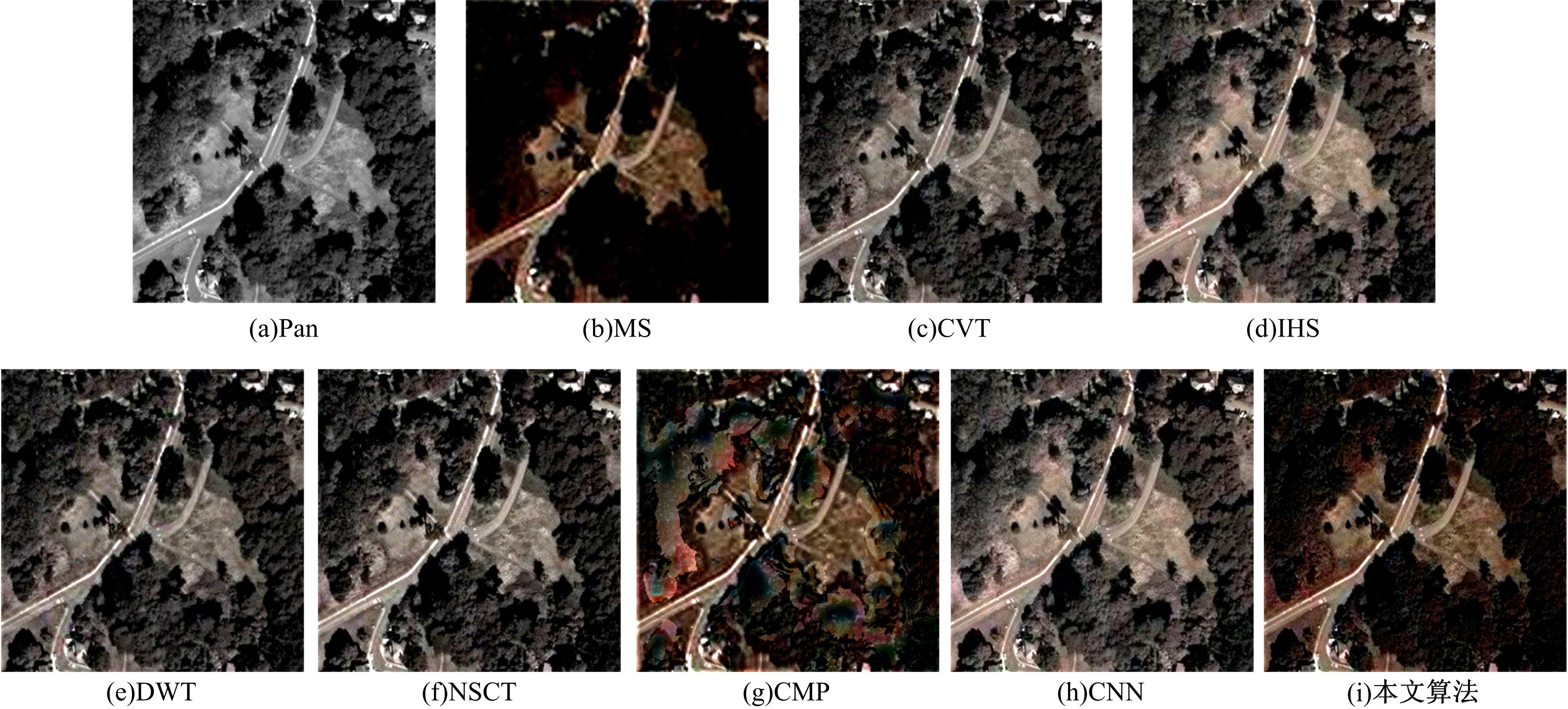

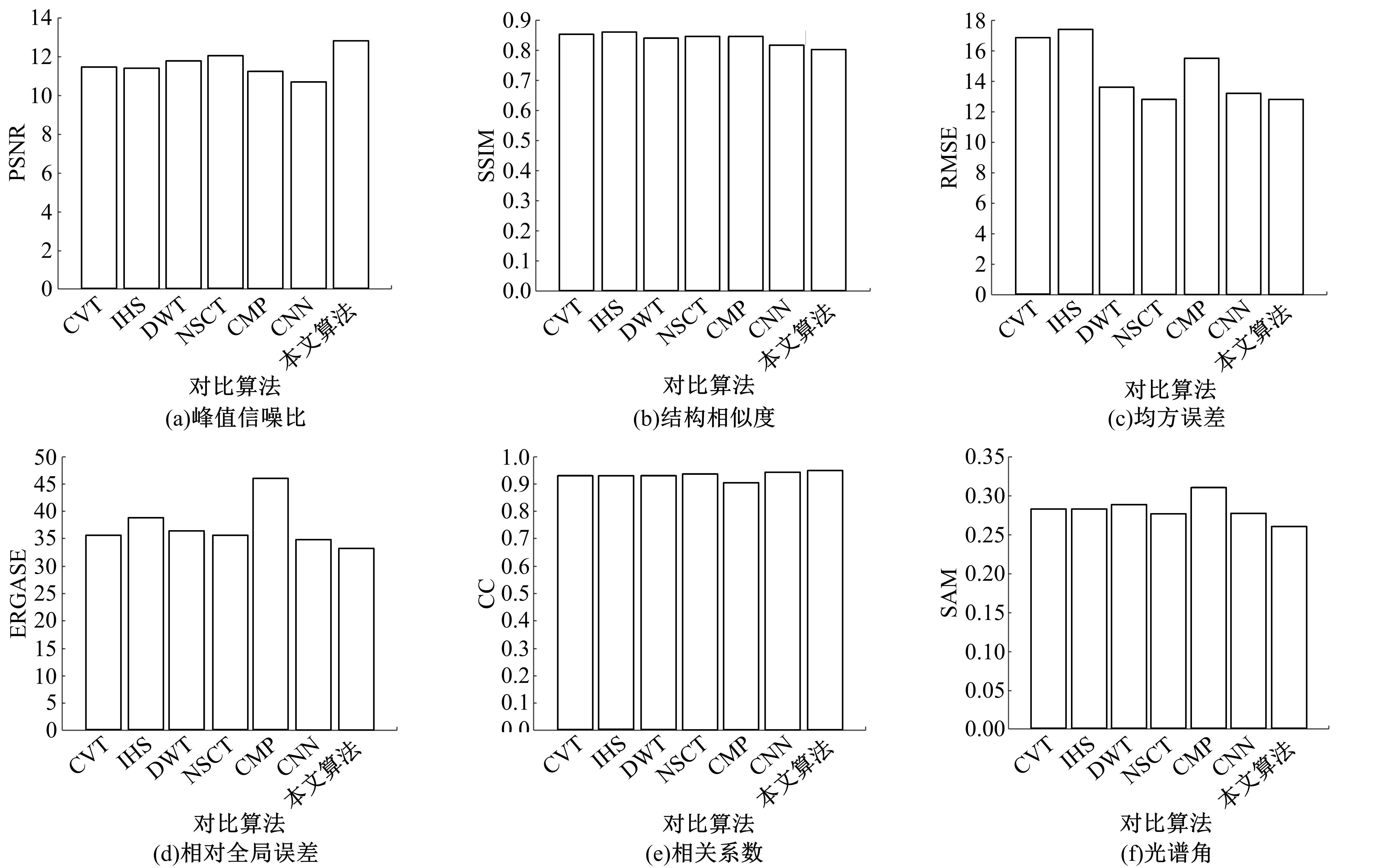

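To address the insufficient spectral richness of remote sensing image fusion results, a fusion method based on structure extraction is proposed. First, the images are decomposed with a method that extracts structure from texture via relative total variation (RTV), separating each image into corresponding low-frequency and high-frequency sub-bands. Second, a visual decision map is introduced in the high-frequency layer to guide the fusion of the high-frequency sub-bands, while the low-frequency fusion rule jointly considers the edge strength of the panchromatic (PAN) image and the color-information strength of the multispectral (MS) image. Finally, the fused image is obtained by reconstructing from the fused low-frequency and high-frequency results. The effectiveness of the proposed algorithm is verified on WorldView-2 satellite remote sensing images. Experiments show that the algorithm preserves both the detail information of the panchromatic image and the spectral information of the multispectral image well, and that it shows some advantages over comparable algorithms.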
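The abstract describes the pipeline only at a high level: RTV decomposition, a decision-map rule for the high-frequency layer, an edge/color-weighted rule for the low-frequency layer, and reconstruction. The Python sketch that follows is a minimal illustration under stated assumptions, not the authors' method. The structure layer comes from a simplified version of the reweighted RTV smoothing of Xu et al. (2012), "Structure extraction from texture via relative total variation", solved with plain conjugate gradients. The "visual decision map" is replaced by a hypothetical choose-max-local-energy rule. PAN edge strength is assumed to be the gradient magnitude of its structure layer, and MS color-information strength is assumed to be the per-pixel standard deviation across bands. All function names and parameter defaults (`rtv_structure`, `fuse`, `lam`, `sigma`, `eps_s`, ...) are hypothetical. The sketch requires NumPy and assumes the MS image is already resampled to the PAN grid, with both scaled to [0, 1].

```python
import numpy as np


def gauss_blur(img, sigma):
    """Separable Gaussian blur with edge padding (plays the role of the RTV window)."""
    r = max(1, int(round(3 * sigma)))
    t = np.arange(-r, r + 1, dtype=float)
    k = np.exp(-t ** 2 / (2.0 * sigma ** 2))
    k /= k.sum()
    pad = np.pad(img, r, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, pad, k, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="valid")


def diff_T(g, n, axis):
    """Adjoint of np.diff(v, axis=axis) for a v with n entries along that axis."""
    g = np.moveaxis(g, axis, 0)
    out = np.zeros((n,) + g.shape[1:])
    out[1:] += g
    out[:-1] -= g
    return np.moveaxis(out, 0, axis)


def conjugate_gradient(apply_A, b, x0, iters=100, tol=1e-8):
    """Plain CG for a symmetric positive definite operator (arrays act as flat vectors)."""
    x = x0.copy()
    r = b - apply_A(x)
    p = r.copy()
    rs = np.vdot(r, r)
    for _ in range(iters):
        if np.sqrt(rs) < tol:
            break
        Ap = apply_A(p)
        alpha = rs / np.vdot(p, Ap)
        x += alpha * p
        r -= alpha * Ap
        rs_new = np.vdot(r, r)
        p = r + (rs_new / rs) * p
        rs = rs_new
    return x


def rtv_structure(img, lam=0.01, sigma=3.0, eps_s=0.02, eps=1e-3, outer_iters=4):
    """Simplified RTV smoothing after Xu et al. (2012); returns the structure layer."""
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    S = img.copy()
    for _ in range(outer_iters):
        gx, gy = np.diff(S, axis=1), np.diff(S, axis=0)
        # u: large where signed gradients cancel inside the window (texture),
        # small along consistent edges (structure), so texture is smoothed harder.
        ux = gauss_blur(1.0 / (np.abs(gauss_blur(gx, sigma)) + eps), sigma)
        uy = gauss_blur(1.0 / (np.abs(gauss_blur(gy, sigma)) + eps), sigma)
        # 1/(|dS| + eps_s) turns the L1-like penalty into a reweighted quadratic.
        wx, wy = ux / (np.abs(gx) + eps_s), uy / (np.abs(gy) + eps_s)

        def apply_A(v, wx=wx, wy=wy):
            # (I + lam * (Dx^T Wx Dx + Dy^T Wy Dy)) v
            return v + lam * (diff_T(wx * np.diff(v, axis=1), w, 1)
                              + diff_T(wy * np.diff(v, axis=0), h, 0))

        S = conjugate_gradient(apply_A, img, S)
    return S


def fuse(pan, ms, lam=0.01, sigma=3.0):
    """Hypothetical RTV-based pan-sharpening; ms has shape (h, w, c) on pan's grid."""
    pan = np.asarray(pan, dtype=float)
    ms = np.asarray(ms, dtype=float)
    pan_base = rtv_structure(pan, lam, sigma)  # low-frequency sub-band
    pan_detail = pan - pan_base                # high-frequency sub-band
    pan_energy = gauss_blur(pan_detail ** 2, 1.5)
    # Assumed PAN edge strength: gradient magnitude of its structure layer.
    gy, gx = np.gradient(pan_base)
    edge = np.hypot(gx, gy)
    # Assumed MS color strength: per-pixel spread of the bands.
    color = ms.std(axis=2)
    wgt = edge / (edge + color + 1e-6)  # PAN share of the low-frequency layer
    out = np.empty_like(ms)
    for k in range(ms.shape[2]):
        band = ms[:, :, k]
        ms_base = rtv_structure(band, lam, sigma)
        ms_detail = band - ms_base
        # Stand-in decision map: keep the detail with the larger local energy.
        decision = pan_energy >= gauss_blur(ms_detail ** 2, 1.5)
        high = np.where(decision, pan_detail, ms_detail)
        low = wgt * pan_base + (1.0 - wgt) * ms_base
        out[:, :, k] = low + high  # reconstruction: low + high
    return out
```

For example, `fuse(pan, ms)` with `pan` of shape `(h, w)` and `ms` of shape `(h, w, c)` returns an `(h, w, c)` array. Conjugate gradients are used because the RTV system matrix I + λ(DxᵀWxDx + DyᵀWyDy) is symmetric positive definite, which keeps the sketch NumPy-only; a preconditioned or sparse direct solver would scale better to full-size images.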

CLC number:

- TP391

