Journal of Jilin University (Engineering and Technology Edition), 2024, Vol. 54, Issue (3): 785-796. doi: 10.13229/j.cnki.jdxbgxb.20220483

Underwater image enhancement based on color correction and Transformer detail sharpening

De-xing WANG, Kai GAO, Hong-chun YUAN, Yu-rui YANG, Yue WANG, Ling-dong KONG

- School of Information, Shanghai Ocean University, Shanghai 201306, China

CLC Number: TP751

| 1 | Skarlatos D, Agrafiotis P, Menna F, et al. Ground control networks for underwater photogrammetry in archaeological excavations[C]∥Proceedings of the 3rd IMEKO International Conference on Metrology for Archaeology and Cultural Heritage, Lecce, Italy, 2017: 23-25. |

| 2 | Chuang M C, Hwang J N, Kresimir W. A feature learning and object recognition framework for underwater fish images[J]. IEEE Transactions on Image Processing: a Publication of the IEEE Signal Processing Society, 2016, 25(4): 1862-1872. |

| 3 | Trahanias P E, Venetsanopoulos A N. Institute of electric and electronic engineer. color image enhancement through 3-D histogram equalization[C]∥11th IAPR International Conference on Image, Speech and Signal Analysis, The Hague, Netherlands, 1992: 545-548. |

| 4 | Zuiderveld. Contrast limited adaptive histogram equalization[J/OL]. [2022-04-18]. |

| 5 | Zou W, Wang X, Li K, et al. Self-tuning underwater image fusion method based on dark channel prior[C]∥IEEE International Conference on Robotics and Biomimetics, Qingdao, China, 2016: 788-793. |

| 6 | AbuNaser A, Doush I A, Mansour N, et al. Underwater image enhancement using particle swarm optimization[J]. Journal of Intelligent Systems, 2015, 24(1): 99-115. |

| 7 | Hitam M S, Awalludin E A, Yussof W N J H W Y, et al. Mixture contrast limited adaptive histogram equalization for underwater image enhancement[C]∥International Conference on Computer Applications Technology, Sousse, Tunisia, 2013: 1-5. |

| 8 | Ancuti C, Ancuti C O, Bekaert P. Enhancing underwater images and videos by fusion[C]∥2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 2012: 81-88. |

| 9 | Zhang S, Wang T, Dong J, et al. Underwater image enhancement via extended multi-scale Retinex[J]. Neurocomputing, 2017, 245: 1-9. |

| 10 | Akkaynak D, Treibitz T, Sea-thru: a method for removing water from underwater images[C]∥2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 2019: 1682-1691. |

| 11 | Trucco E, Olmos-Antillon T. Self-tuning underwater image restoration[J].IEEE Journal of Oceanic Engineering, 2006, 31(2): 511-519. |

| 12 | McGlamery B L. A computer model for underwater camera systems[J/OL]. [2022-04-18]. |

| 13 | Jaffe J S. Computer modeling and the design of optimal underwater imaging systems[J]. IEEE Journal of Oceanic Engineering, 1990, 15(2): 101-111. |

| 14 | Chiang J, Chen Y. Underwater image enhancement by wavelength compensation and dehazing[J]. IEEE Transactions on Image Processing: a Publication of the IEEE Signal Processing Society, 2012, 21(4): 1756-1769. |

| 15 | Peng Y T, Cao K M, Cosman P C. Generalization of the dark channel prior for single image restoration[J]. IEEE Transactions on Image Processing: a Publication of the IEEE Signal Processing Society, 2018, 27(6): 2856-2868. |

| 16 | Akkaynak D, Treibitz T. A revised underwater image formation model[C]∥2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 2018: 6723-6732. |

| 17 | Ding X, Wang Y, Zheng L, et al. Towards Underwater Image Enhancement Using Super-Resolution Convolutional Neural Networks[M]. Singapore: Springer, 2018. |

| 18 | Li J, Skinner K A, Eustice R M, et al. WaterGAN: unsupervised generative network to enable real-time color correction of monocular underwater images[J]. IEEE Robotics and Automation Letters,2018,3(1): 387-394. |

| 19 | Fabbri C, Jahidul I M, Sattar J. Enhancing underwater imagery using generative adversarial networks[EB/OL]. [2022-04-28]. |

| 20 | Li C, Guo C, Ren W, et al. An underwater image enhancement benchmark dataset and beyond[J]. IEEE Transactions on Image Processing: a Publication of the IEEE Signal Processing Society, 2019, 29: 4376-4389. |

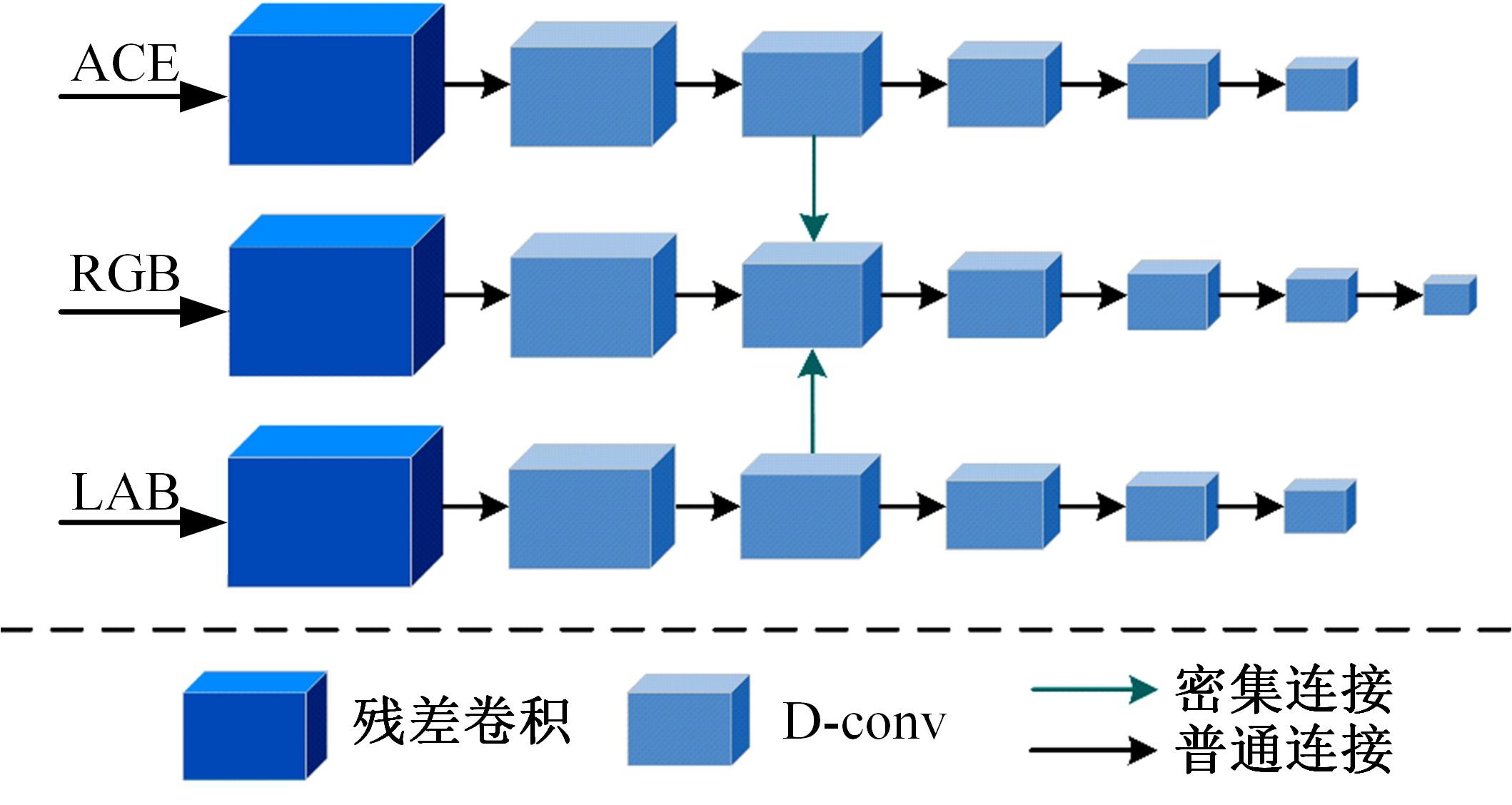

| 21 | Wang Y D, Guo J C, Gao H, et al. UIEC^2-Net: CNN-based underwater image enhancement using two color space[J]. Signal Processing: Image Communication, 2021, 96: No. 116250. |

| 22 | Liu Z, Lin Y T, Cao Y, et al. Swin Transformer: hierarchical vision transformer using shifted windows[J/OL]. [2022-04-28]. |

| 23 | Xu L, Yan Q, Xia Y, et al. Structure extraction from texture via relative total variation[J]. ACM Transactions on Graphics, 2012, 31(6): 1-10. |

| 24 | Getreuer P. Automatic color enhancement (ACE) and its fast implementation[J]. Image Processing on Line, 2012, 2: 266-277. |

| 25 | Li C, Anwar S, Hou J, et al. Underwater image enhancement via medium transmission-guided multi-color space embedding[J]. IEEE Transactions on Image Processing, 2021, 30: 4985-5000. |

| 26 | Isola P, Zhu J Y, Zhou T H, et al. Image-to-image translation with conditional adversarial networks[C]∥IEEE Conference on Computer Vision & Pattern Recognition, Honolulu, HI, USA, 2017: 5967-5976. |

| 27 | Zhao H, Gallo O, Frosio I, et al. Loss functions for neural networks for image processing[EB/OL]. [2022-04-28]. |

| 28 | Islam M J, Xia Y, Sattar J. Fast underwater image enhancement for improved visual perception[J]. IEEE Robotics and Automation Letters, 2020, 5(2): 3227-3234. |

| 29 | Li C, Anwar S. Underwater scene prior inspired deep underwater image and video enhancement[J]. Pattern Recognition, 2019, 98(1): No. 107038. |
