Journal of Jilin University (Engineering and Technology Edition), 2022, Vol. 52, Issue (10): 2438-2446. doi: 10.13229/j.cnki.jdxbgxb20210298

• Computer Science and Technology •

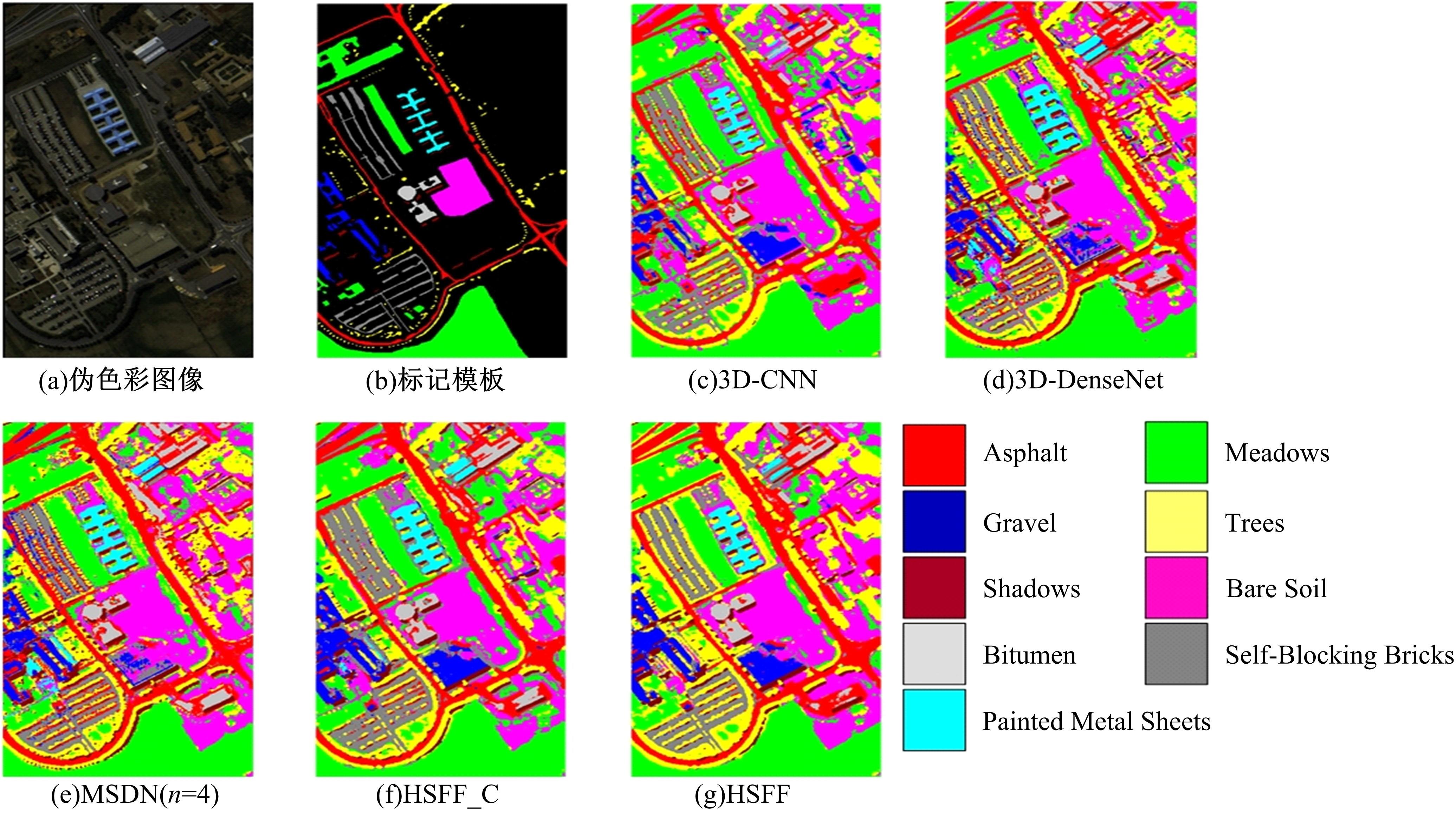

Hyperspectral image classification based on hierarchical spatial-spectral fusion network

Ning OUYANG, Zu-feng LI, Le-ping LIN

- School of Information and Communication, Guilin University of Electronic Technology, Guilin 541004, Guangxi, China

Abstract:

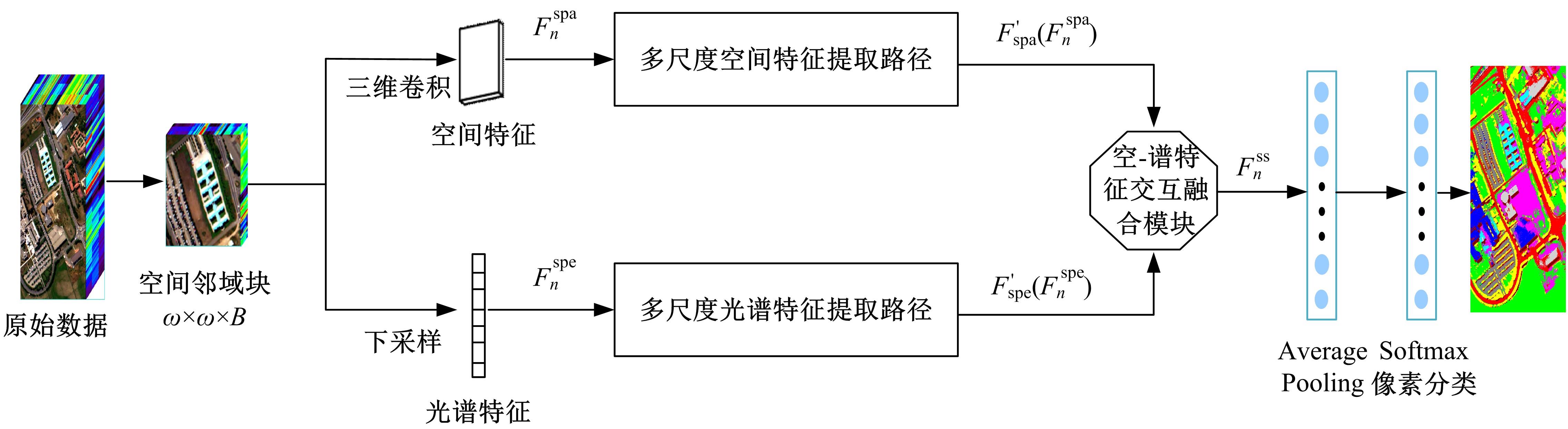

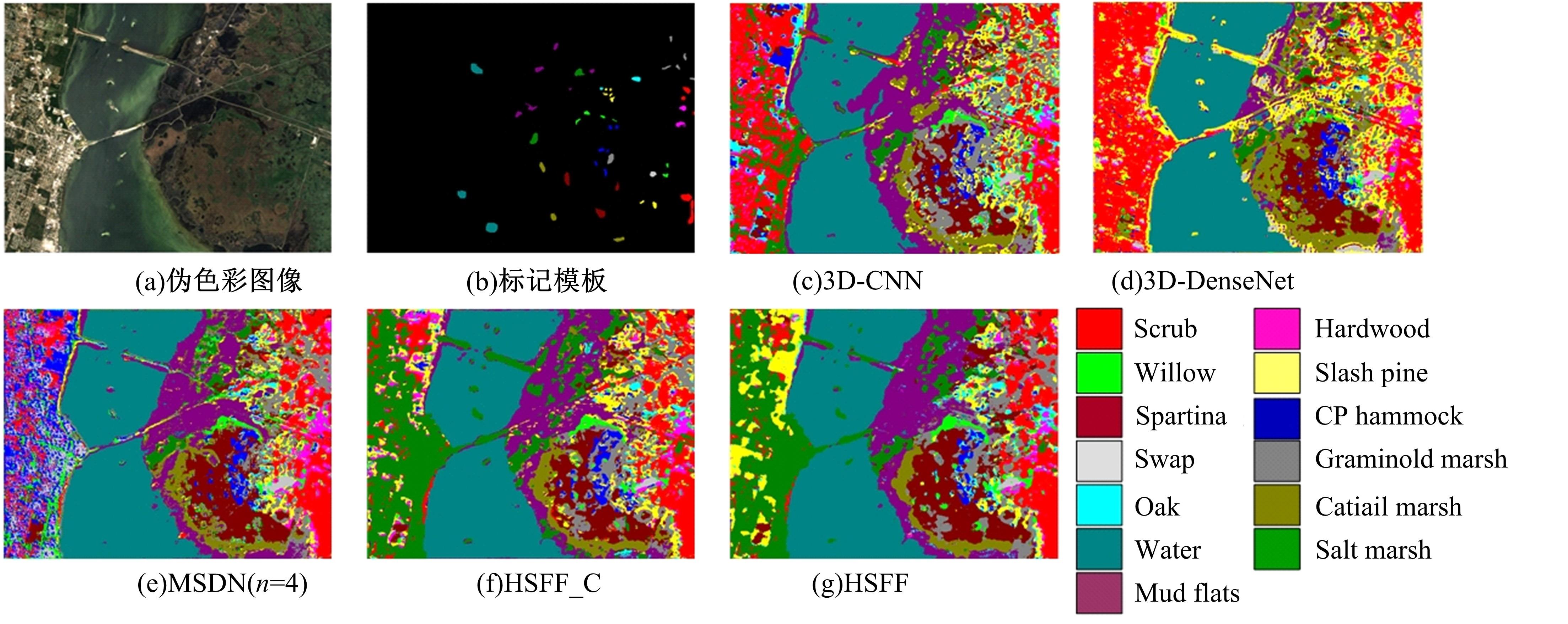

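To better extract and represent fine-grained spectral and spatial features, and the interactions between them, in hyperspectral image classification, a classification method based on a hierarchical spatial-spectral fusion network is proposed. First, a hierarchical feature extraction module extracts multi-level spatial features and multi-level spectral features from the hyperspectral image separately. Second, a spatial-spectral feature interaction fusion module fuses the resulting multi-level spatial and spectral features to produce spatial-spectral fused features. The method combines spatial and spectral features from different levels of the network, which allows it to capture the fine-grained features of hyperspectral images effectively; by jointly learning to fuse spatial and spectral features, it also captures the interactions between them. Experimental results show that the proposed algorithm achieves higher classification accuracy than existing neural-network-based classification methods, indicating that the network extracts fine-grained features effectively and strengthens the representational power of the spatial-spectral fused features.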
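The two-stage idea in the abstract (keep features from every level of a spatial branch and a spectral branch, then fuse them so each branch can interact with the other) can be illustrated with a toy sketch. This is not the authors' network: the abstract does not specify layer types, depths, or the fusion operator, so everything below is an assumption made for illustration. The fixed filters, the `[mean, max]` level descriptor, the co-attention-style affinity used for fusion, and all function names are hypothetical.

```python
import math
import random

def conv1d_relu(x, kernel):
    """Valid 1-D cross-correlation followed by ReLU (one toy spectral level)."""
    k = len(kernel)
    return [max(0.0, sum(x[i + j] * kernel[j] for j in range(k)))
            for i in range(len(x) - k + 1)]

def avg3x3_relu(p):
    """Valid 3x3 average filter followed by ReLU (one toy spatial level)."""
    n = len(p) - 2
    return [[max(0.0, sum(p[r + a][c + b] for a in range(3) for b in range(3)) / 9.0)
             for c in range(n)] for r in range(n)]

def describe(values):
    """Summarise one level's output as a fixed-length descriptor [mean, max]."""
    return [sum(values) / len(values), max(values)]

def spectral_levels(spectrum, kernel, depth):
    """Collect a descriptor from every level, not only the deepest one."""
    feats, x = [], spectrum
    for _ in range(depth):
        x = conv1d_relu(x, kernel)
        feats.append(describe(x))
    return feats

def spatial_levels(patch, depth):
    """Same multi-level collection for the spatial branch."""
    feats, p = [], patch
    for _ in range(depth):
        p = avg3x3_relu(p)
        feats.append(describe([v for row in p for v in row]))
    return feats

def softmax(row):
    m = max(row)
    e = [math.exp(v - m) for v in row]
    s = sum(e)
    return [v / s for v in e]

def interaction_fuse(spa, spe):
    """Assumed fusion: each branch attends over the other branch's levels."""
    # affinity[i][j] = dot product of spatial level i and spectral level j
    affinity = [[sum(a * b for a, b in zip(s, t)) for t in spe] for s in spa]
    w_spa = [softmax(row) for row in affinity]               # spatial attends to spectral
    w_spe = [softmax(list(col)) for col in zip(*affinity)]   # spectral attends to spatial
    fused = []
    for i, s in enumerate(spa):
        ctx = [sum(w_spa[i][j] * spe[j][d] for j in range(len(spe))) for d in range(len(s))]
        fused.extend(s + ctx)
    for j, t in enumerate(spe):
        ctx = [sum(w_spe[j][i] * spa[i][d] for i in range(len(spa))) for d in range(len(t))]
        fused.extend(t + ctx)
    return fused

random.seed(0)
spectrum = [random.random() for _ in range(30)]                   # toy 30-band pixel spectrum
patch = [[random.random() for _ in range(9)] for _ in range(9)]   # toy 9x9 neighbourhood
spe = spectral_levels(spectrum, kernel=[0.25, 0.5, 0.25], depth=3)
spa = spatial_levels(patch, depth=3)
fused = interaction_fuse(spa, spe)
print(len(fused))
```

In a real model the fixed filters would be learned convolutions and the fused vector would feed a classifier. The sketch only shows the data flow: descriptors from all levels of both branches reach the fusion step, and each fused entry pairs a level's own descriptor with a context vector drawn from the other branch.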

CLC Number:

- TP753

