Journal of Jilin University (Engineering and Technology Edition), 2021, Vol. 51, Issue (6): 2174-2181. doi: 10.13229/j.cnki.jdxbgxb20200749

• Computer Science and Technology •


Image classification of insect pests based on saliency detection

Hong-wei ZHAO¹, Dong-sheng HUO¹, Jie WANG², Xiao-ning LI³

- 1. College of Computer Science and Technology, Jilin University, Changchun 130012, China
- 2. Shanghai Academy of Spaceflight Technology, Shanghai 201109, China
- 3. College of Computer Science and Technology, Changchun Normal University, Changchun 130032, China

Abstract:

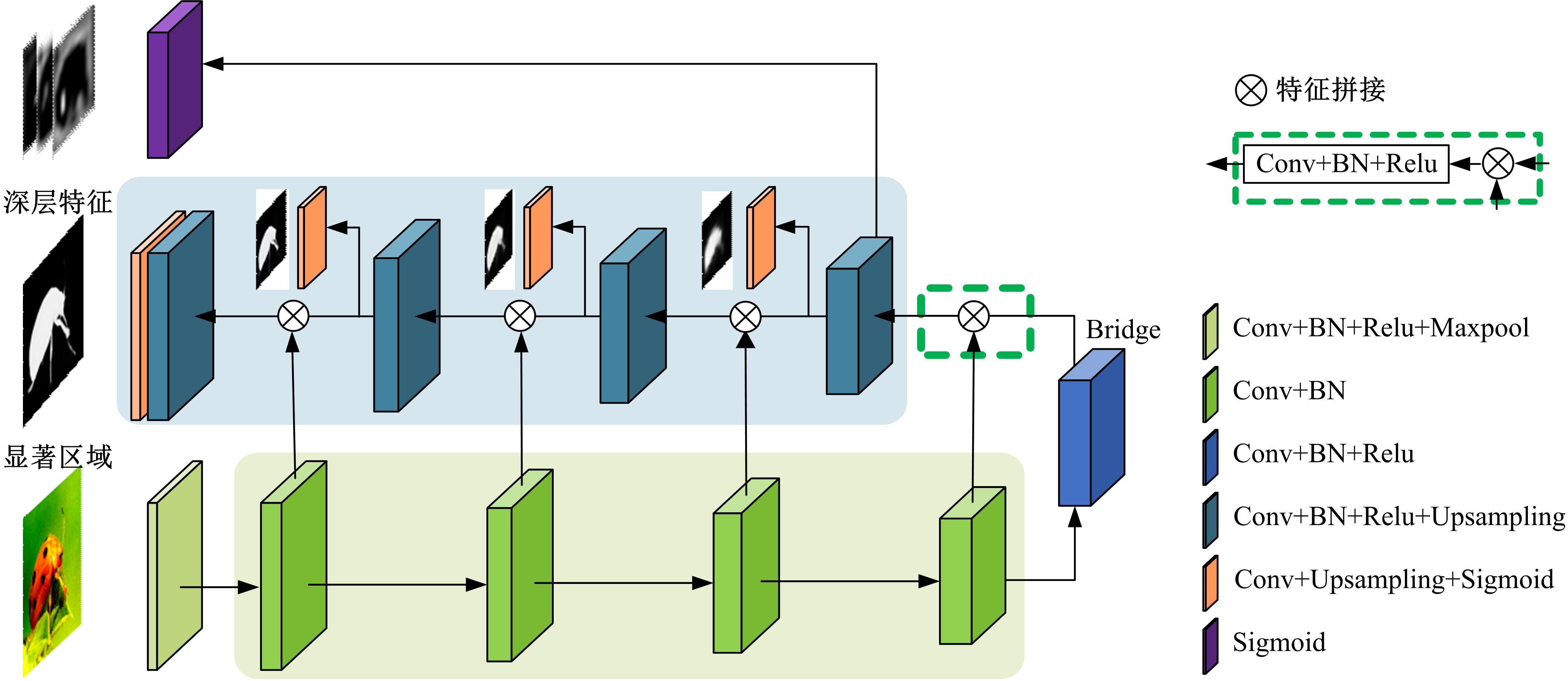

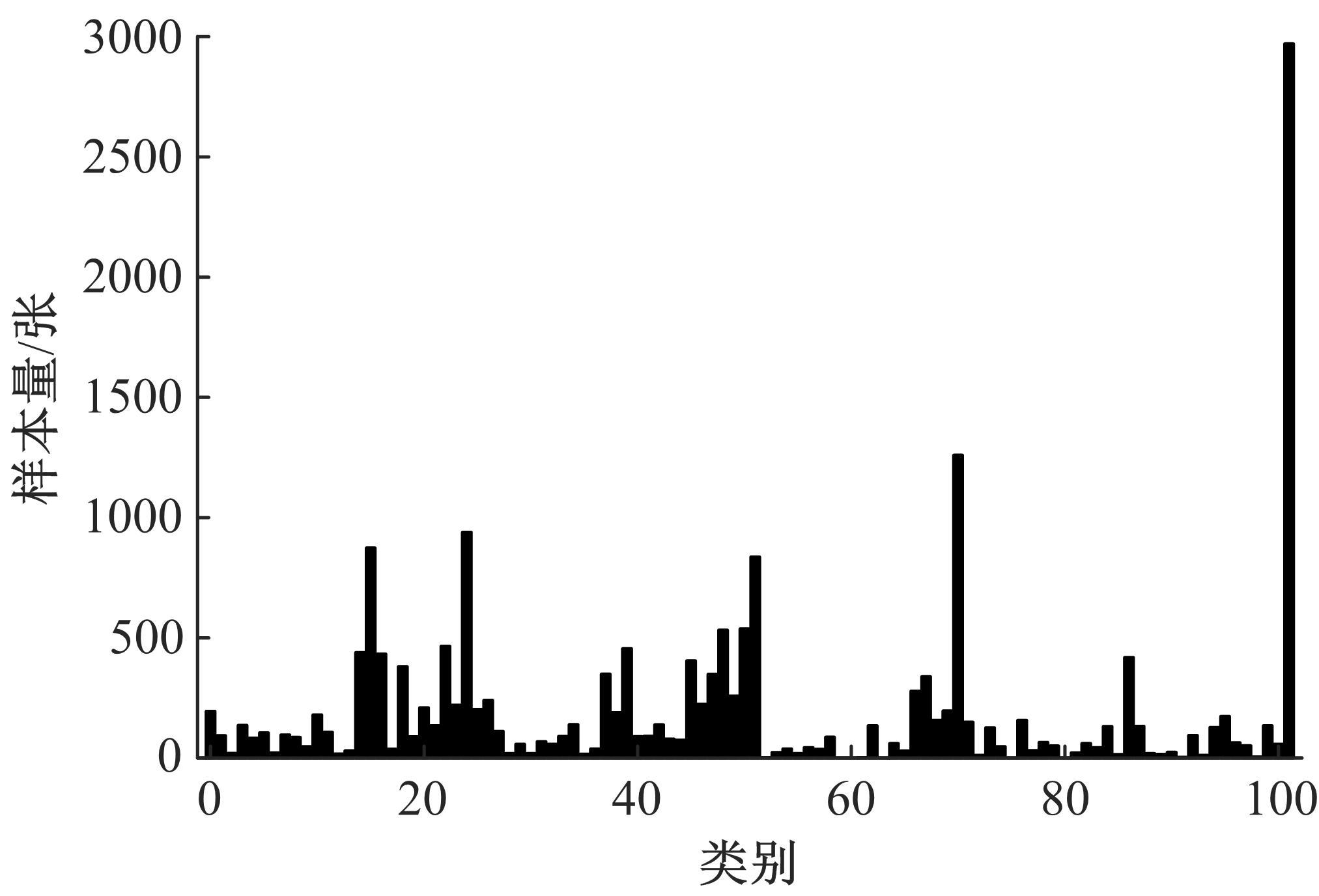

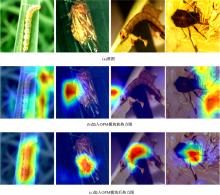

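To address the wide variety of pest species and the large inter-class and intra-class differences encountered in pest classification, a pest classification model, PestNet, is proposed. The model consists mainly of an object positioning module (OPM) and a multi-feature fusion module (MFFM). OPM uses a U-shaped network structure to integrate the shallow detail information and deep spatial information of pest images, preliminarily delineating the salient region and outputting spatial semantic features. MFFM applies bilinear pooling to the spatial semantic features and the abstract semantic features, which weakens background information and enriches detail features. In addition, target-region cropping and masking are used to assist training and improve the classification accuracy of the model. In experiments on the insect pest dataset IP102, the model reaches a classification accuracy of 77.40%, showing that it can classify and recognize large-scale pest images against complex backgrounds.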
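The two operations named in the abstract, bilinear pooling of two feature maps and saliency-guided cropping and masking, can be illustrated with a minimal NumPy sketch. This is not the PestNet implementation. The function names `bilinear_pool` and `crop_and_mask`, the array shapes, the signed-square-root and L2 normalization step, the 0.5 threshold, and the choice to zero out pixels outside the salient region are all assumptions made for illustration; the abstract does not specify these details.

```python
import numpy as np


def bilinear_pool(spatial_feats: np.ndarray, abstract_feats: np.ndarray) -> np.ndarray:
    """Bilinear pooling of two feature maps on the same spatial grid.

    spatial_feats:  (C1, H, W), e.g. maps from a localization branch (assumed shape)
    abstract_feats: (C2, H, W), e.g. backbone features (assumed shape)
    Returns a normalized descriptor of length C1 * C2.
    """
    c1, h, w = spatial_feats.shape
    c2, h2, w2 = abstract_feats.shape
    if (h, w) != (h2, w2):
        raise ValueError("feature maps must share the same spatial size")

    a = spatial_feats.reshape(c1, h * w)
    b = abstract_feats.reshape(c2, h * w)

    # Average over locations of the outer product of the two feature vectors.
    pooled = (a @ b.T) / (h * w)  # shape (C1, C2)
    vec = pooled.reshape(-1)

    # Signed square root, then L2 normalization (assumed post-processing).
    vec = np.sign(vec) * np.sqrt(np.abs(vec))
    norm = np.linalg.norm(vec)
    return vec / norm if norm > 0 else vec


def crop_and_mask(image: np.ndarray, saliency: np.ndarray, threshold: float = 0.5):
    """Crop to the bounding box of the salient region and mask the rest.

    image:    (H, W, 3) array
    saliency: (H, W) array with values in [0, 1]
    Returns (cropped, masked); masked keeps only salient pixels (assumption).
    """
    mask = saliency >= threshold
    if not mask.any():
        # No salient pixel found: fall back to the unmodified image.
        return image.copy(), image.copy()

    rows = np.where(mask.any(axis=1))[0]
    cols = np.where(mask.any(axis=0))[0]
    top, bottom = rows[0], rows[-1] + 1
    left, right = cols[0], cols[-1] + 1

    cropped = image[top:bottom, left:right].copy()
    masked = image * mask[..., None]  # zero out pixels outside the salient region
    return cropped, masked
```

In this sketch, locations where the spatial maps are close to zero contribute little to the pooled descriptor, which is one way to read the abstract's claim that bilinear pooling weakens background information. The cropped and masked images would serve as additional training inputs alongside the original image.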

CLC Number:

- TP391

