Journal of Jilin University (Engineering and Technology Edition), 2023, Vol. 53, Issue (1): 226-233. doi: 10.13229/j.cnki.jdxbgxb20210510

• Computer Science and Technology •

Visual relationship detection method based on construction scene

Jun-jie WANG, Yuan-jun NONG, Li-te ZHANG, Pei-chen ZHAI

- School of Engineering, Ocean University of China, Qingdao 266100, China

Abstract:

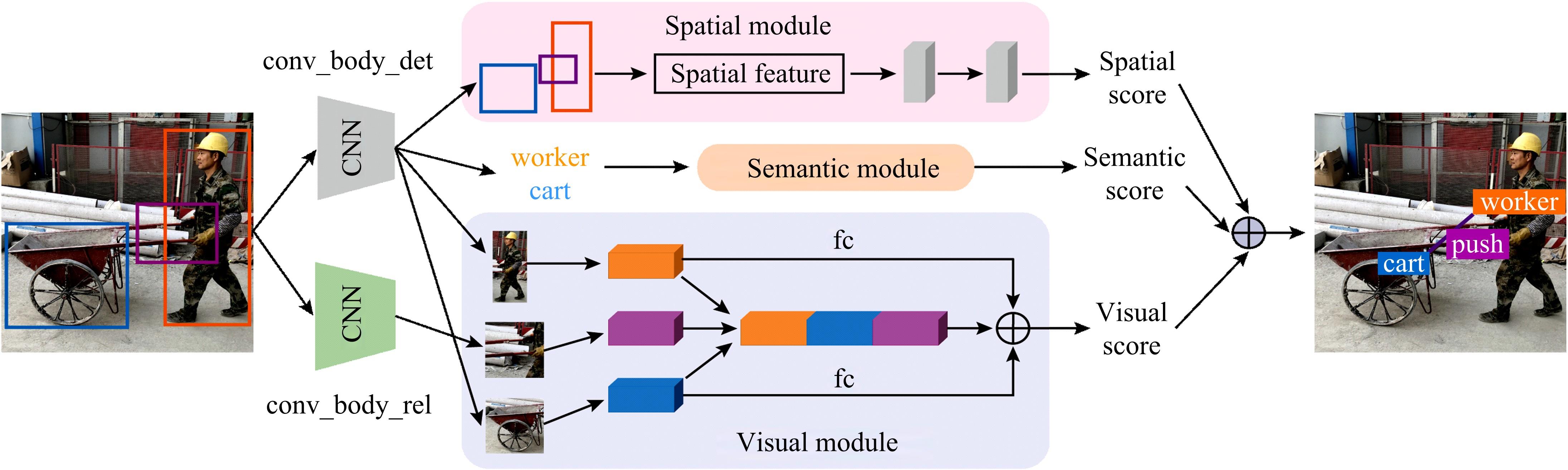

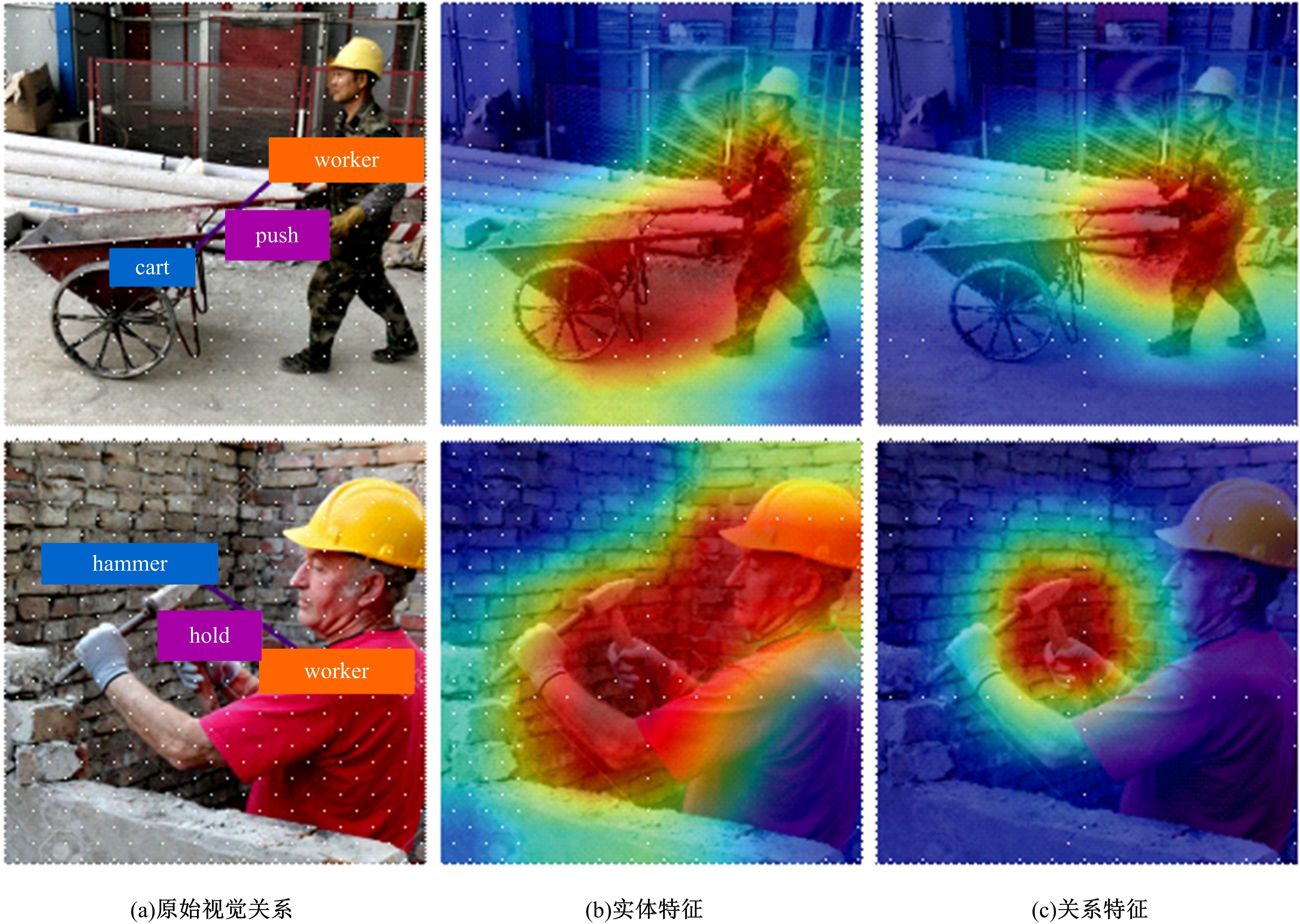

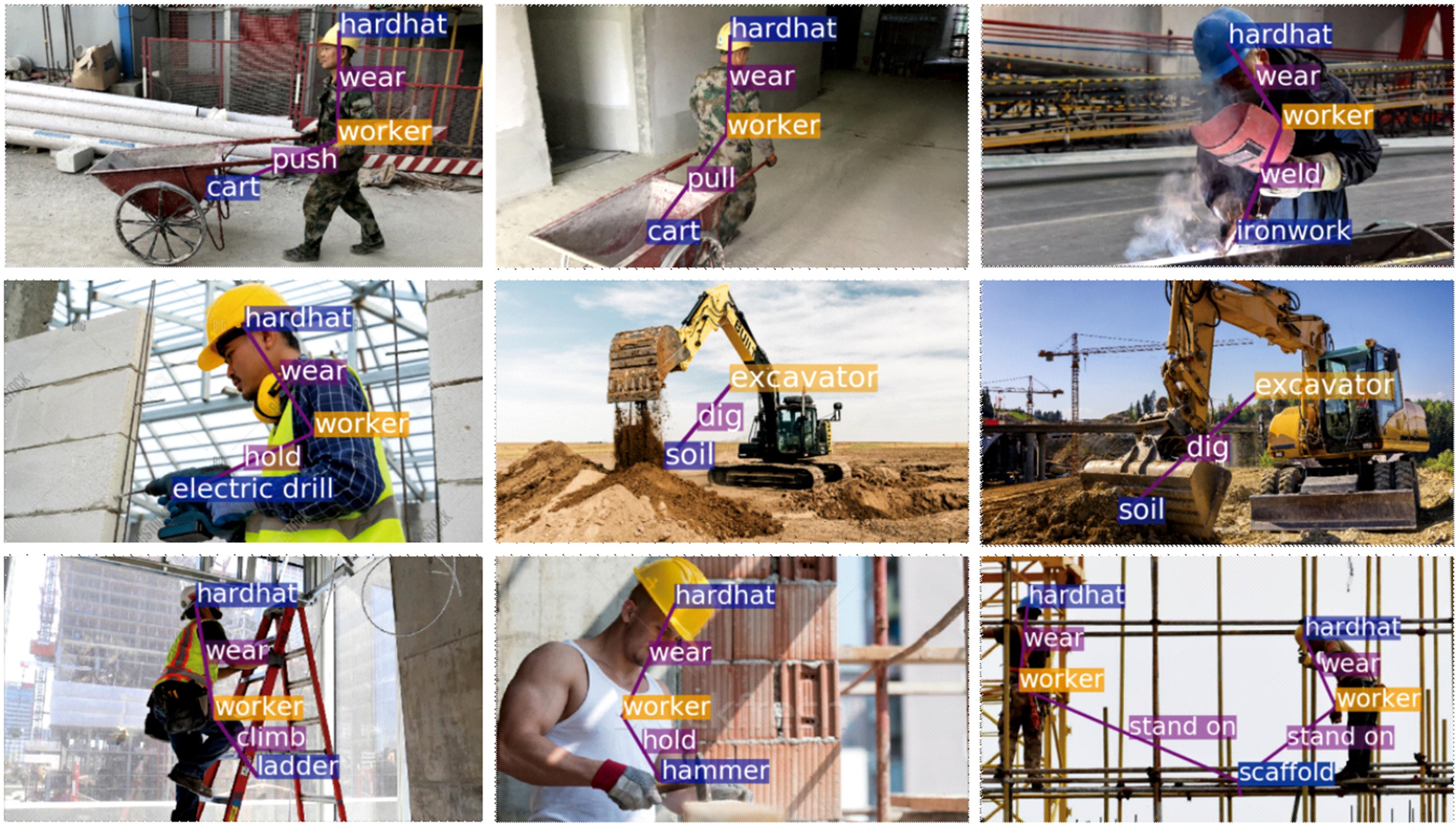

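Non-compliant interactions between workers and construction machinery or tools on construction sites are a major cause of safety accidents. To address this, a visual relationship detection method for construction scenes is proposed. First, convolutional neural networks are used to build an entity detection branch and a relationship detection branch, which extract entity features and relationship features from the construction scene. Second, a visual module, a semantic module, and a spatial module are constructed to learn from the extracted features, so that the network can fully perceive and understand visual, semantic, and spatial information. Finally, a graphical contrastive loss function is designed to improve the model's visual relationship detection performance. Experiments on a self-built construction scene relationship detection dataset show that the proposed method achieves recall rates of 75.89%, 77.64%, and 78.93% at R@20, R@50, and R@100, respectively. These results indicate good visual relationship detection performance and show that the method can accurately detect the targets in a construction scene and the interactions between them.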
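The R@K figures reported above are recall values over the top K scored relationship predictions. As a rough illustration of how such a metric is commonly computed, the sketch below counts a ground-truth triplet as recalled when one of the K highest-scoring predictions has the same subject, predicate, and object labels and both of its boxes overlap the ground-truth boxes with IoU of at least 0.5. The function names, the triplet layout, the 0.5 threshold, and the example labels (`worker`, `helmet`, `excavator`) are assumptions made for illustration and are not taken from the paper, whose exact evaluation protocol is not given in the abstract.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
# (subject label, predicate, object label, subject box, object box)
Triplet = Tuple[str, str, str, Box, Box]
Prediction = Tuple[float, Triplet]  # (confidence score, triplet)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def recall_at_k(predictions: List[Prediction],
                ground_truth: List[Triplet],
                k: int,
                iou_threshold: float = 0.5) -> float:
    """Fraction of ground-truth triplets matched by the top-k predictions."""
    if not ground_truth:
        return 0.0
    # Keep only the k highest-scoring predictions.
    top_k = sorted(predictions, key=lambda p: p[0], reverse=True)[:k]
    matched = 0
    for g_subj, g_pred, g_obj, g_sbox, g_obox in ground_truth:
        for _, (p_subj, p_pred, p_obj, p_sbox, p_obox) in top_k:
            labels_match = (p_subj, p_pred, p_obj) == (g_subj, g_pred, g_obj)
            boxes_match = (iou(p_sbox, g_sbox) >= iou_threshold
                           and iou(p_obox, g_obox) >= iou_threshold)
            if labels_match and boxes_match:
                matched += 1
                break  # each ground-truth triplet is counted at most once
    return matched / len(ground_truth)


# Hypothetical single-image example (labels and boxes are made up).
worker_box: Box = (0.0, 0.0, 10.0, 10.0)
helmet_box: Box = (2.0, 0.0, 6.0, 3.0)
excavator_box: Box = (20.0, 0.0, 40.0, 20.0)

ground_truth: List[Triplet] = [
    ("worker", "wear", "helmet", worker_box, helmet_box),
    ("worker", "operate", "excavator", worker_box, excavator_box),
]
predictions: List[Prediction] = [
    (0.9, ("worker", "wear", "helmet", (0.0, 0.0, 10.0, 9.0), helmet_box)),
    (0.8, ("worker", "stand on", "excavator", worker_box, excavator_box)),
    (0.3, ("worker", "operate", "excavator", worker_box, excavator_box)),
]

print(recall_at_k(predictions, ground_truth, k=2))
print(recall_at_k(predictions, ground_truth, k=3))
```

In this toy case the correct `operate` triplet is ranked third, so it only contributes to recall once K reaches 3. This sketch scores a single image; a dataset-level figure would aggregate such counts over all test images, and how that aggregation is done is also an assumption here.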

CLC number:

- TP319.4

