Journal of Jilin University (Engineering and Technology Edition), 2024, Vol. 54, Issue (5): 1426-1434. doi: 10.13229/j.cnki.jdxbgxb.20220790

• Computer Science and Technology •

UAV Target Tracking Algorithm Based on High-Resolution Siamese Network

Dian-wei WANG¹, Chi ZHANG¹, Jie FANG¹, Zhi-jie XU²

1. School of Communication and Information Engineering, Xi'an University of Posts and Telecommunications, Xi'an 710121, China

2. School of Computing and Engineering, University of Huddersfield, Huddersfield HD1 3DH, UK

Abstract:

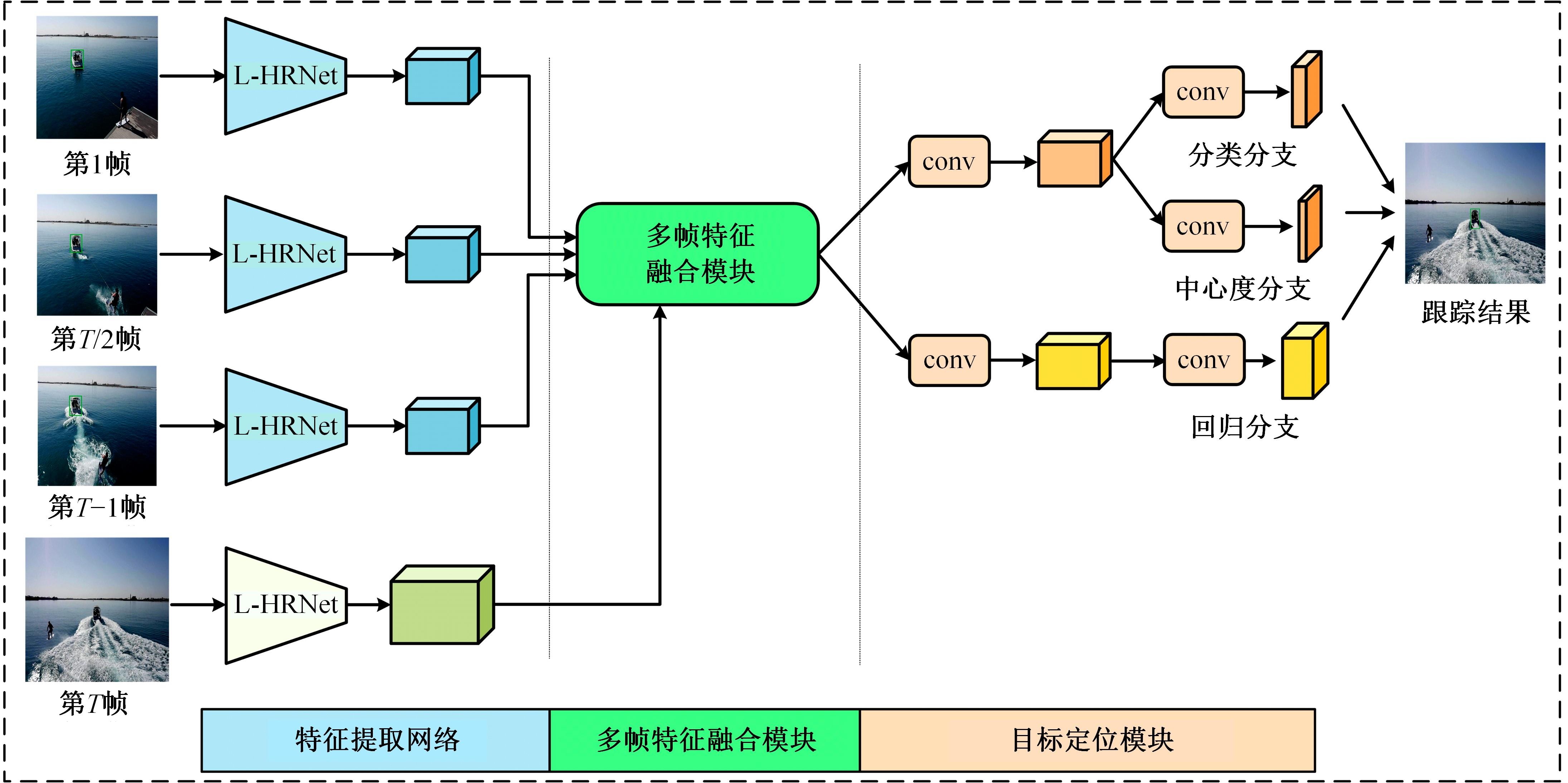

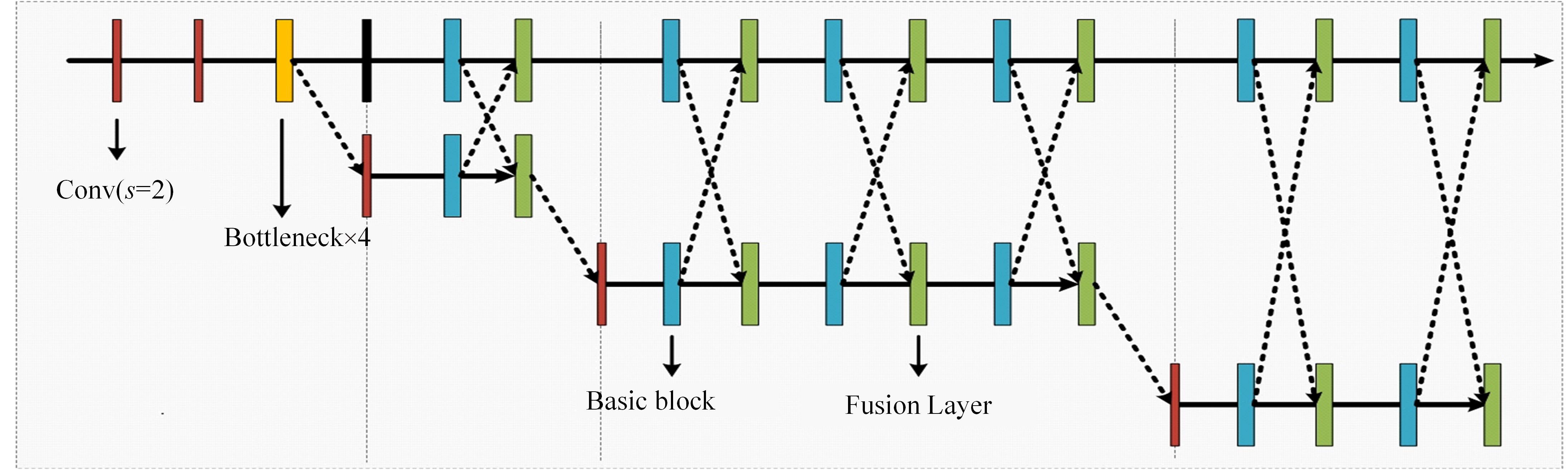

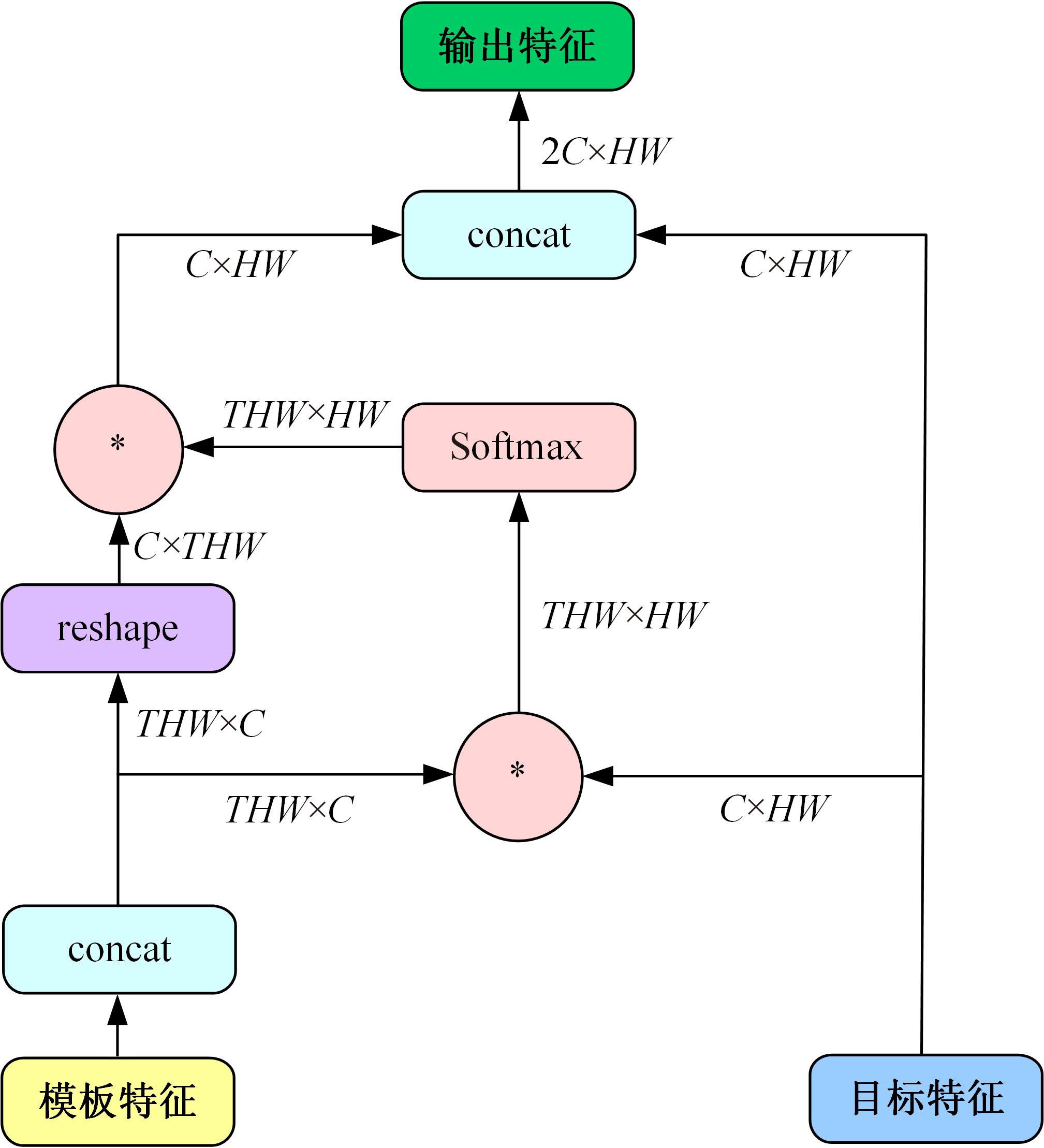

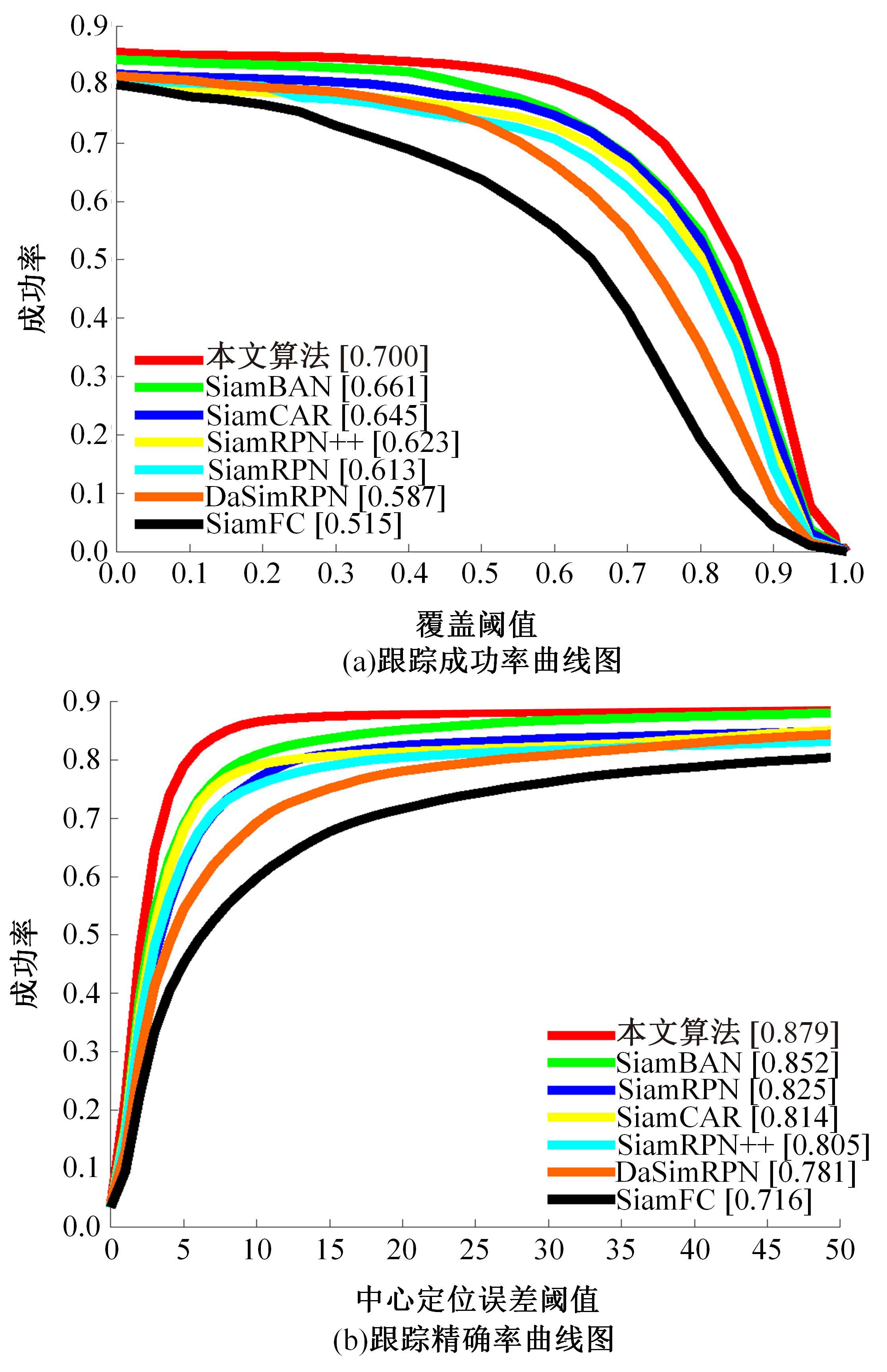

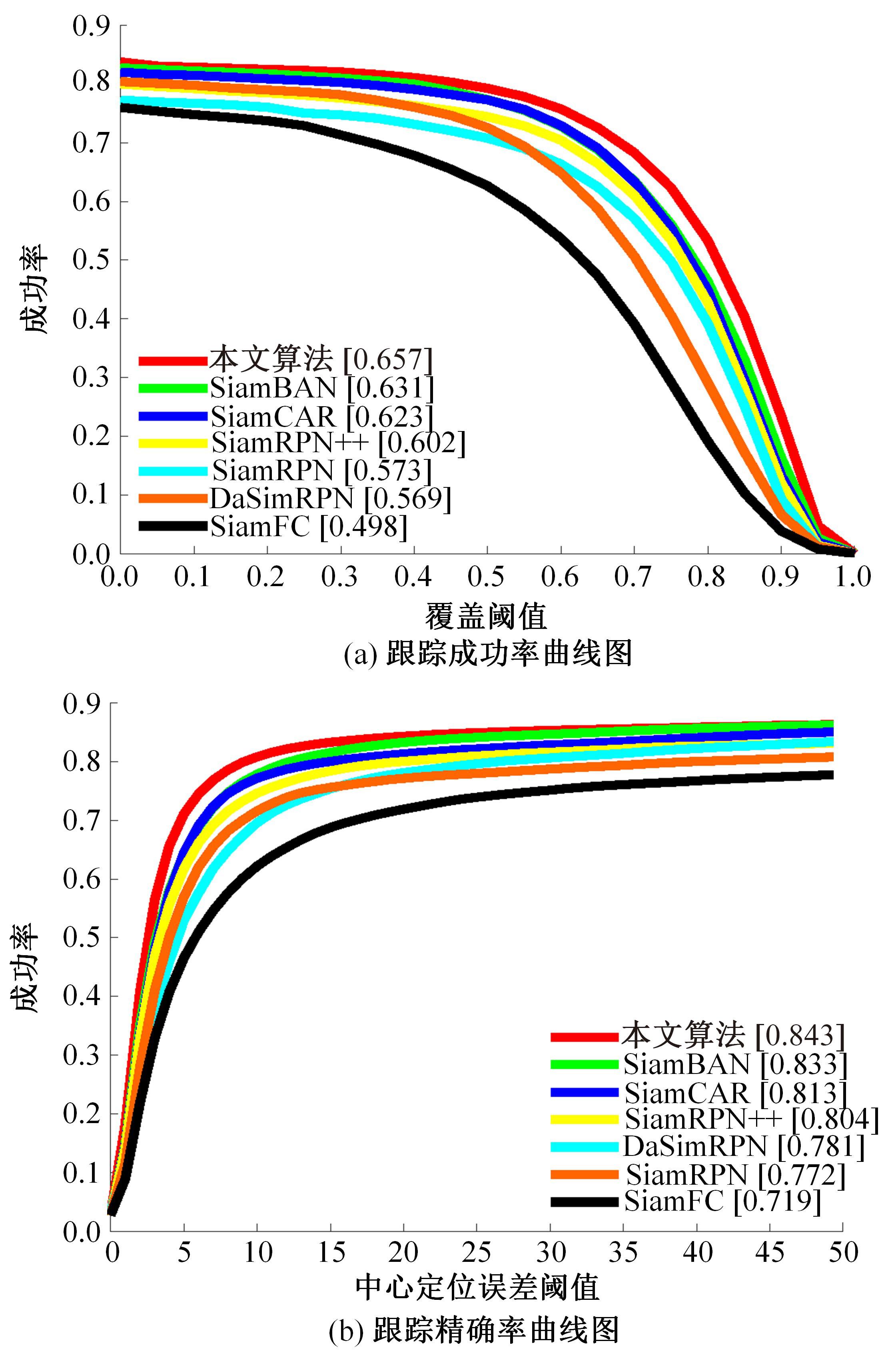

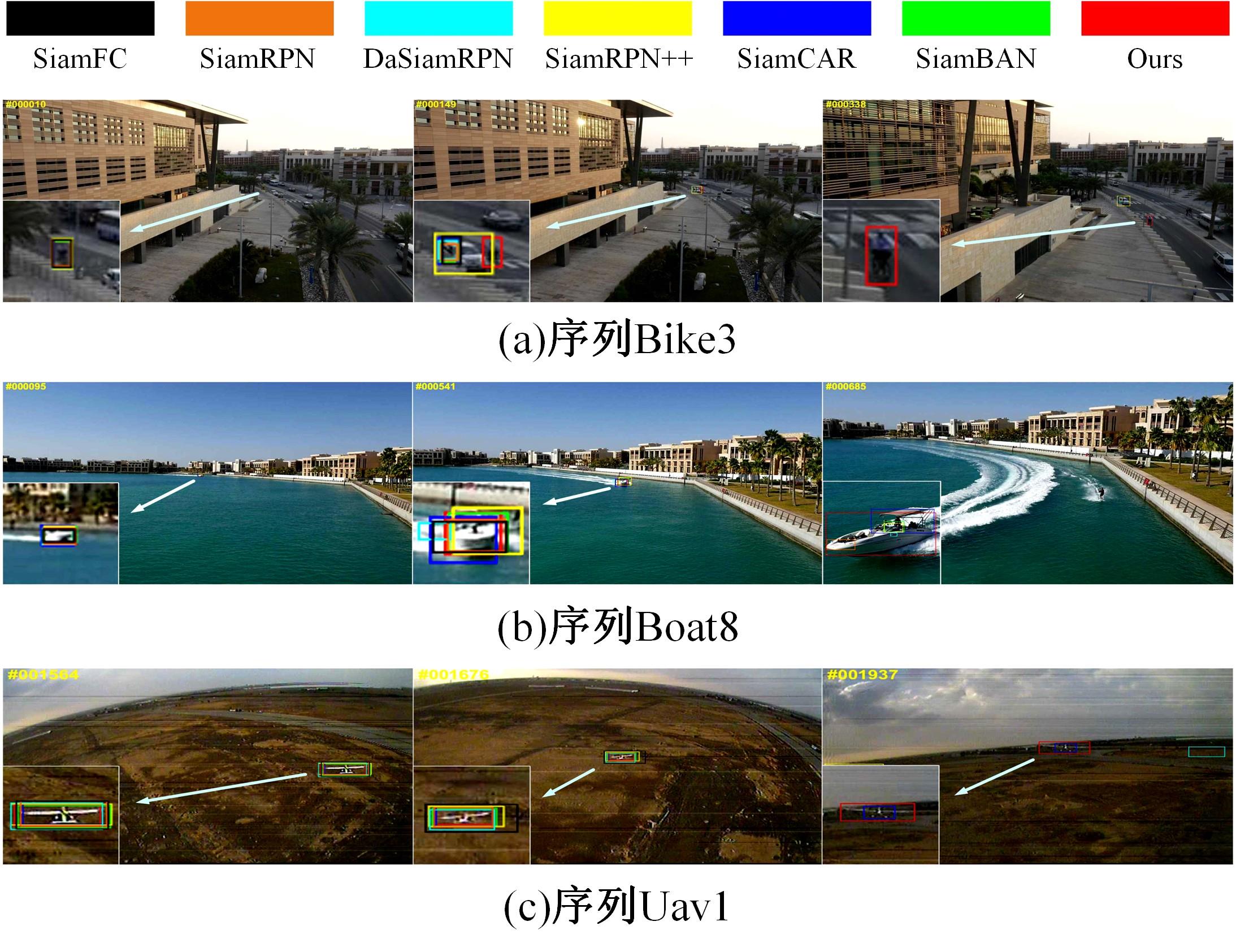

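To address the small target size, pronounced scale variation, and frequent viewpoint changes encountered in unmanned aerial vehicle (UAV) target tracking, this paper proposes a UAV target tracking algorithm based on a high-resolution Siamese network. First, an improved high-resolution network is used as the feature-extraction backbone, and a dynamic multi-template strategy is adopted to exploit inter-frame information in the video. Next, a multi-frame feature fusion module is built to produce fused features that benefit target localization. Finally, an anchor-free strategy is used to locate the target and obtain accurate tracking results. Experimental results show that the proposed algorithm achieves a success rate of 66.0% and a precision of 84.7% on the DTB70 dataset, and a success rate of 65.7% and a precision of 84.3% on the UAV123 dataset, effectively improving tracking performance.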
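The success rate and precision quoted above are the two metrics typically reported on DTB70 and UAV123 under the one-pass evaluation (OPE) protocol. The following is a minimal, stdlib-only sketch of how such numbers can be computed, not the authors' evaluation code. It assumes boxes in `(x, y, w, h)` form, takes success as the area under the success plot over IoU thresholds 0, 0.05, ..., 1, and takes precision as the fraction of frames whose center-location error is at most 20 pixels. The function names `iou`, `center_error`, `success_auc`, and `precision_at` are hypothetical. Official toolkits usually compute these per sequence and then average over the dataset, and they may differ in details such as the pixel-center convention.

```python
from typing import Sequence, Tuple

# Assumed box convention: (x, y, w, h) with (x, y) the top-left corner.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two (x, y, w, h) boxes."""
    iw = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def center_error(a: Box, b: Box) -> float:
    """Euclidean distance in pixels between the two box centers."""
    dx = (a[0] + a[2] / 2) - (b[0] + b[2] / 2)
    dy = (a[1] + a[3] / 2) - (b[1] + b[3] / 2)
    return (dx * dx + dy * dy) ** 0.5


def _check(pred: Sequence[Box], gt: Sequence[Box]) -> None:
    # Both sequences must describe the same, non-empty set of frames.
    if not gt or len(pred) != len(gt):
        raise ValueError("need equally long, non-empty prediction and ground-truth lists")


def success_auc(pred: Sequence[Box], gt: Sequence[Box], steps: int = 21) -> float:
    """Area under the success plot: mean fraction of frames with IoU > t,
    over evenly spaced thresholds t = 0, 0.05, ..., 1 (for steps=21)."""
    _check(pred, gt)
    overlaps = [iou(p, g) for p, g in zip(pred, gt)]
    thresholds = [i / (steps - 1) for i in range(steps)]
    rates = [sum(o > t for o in overlaps) / len(overlaps) for t in thresholds]
    return sum(rates) / len(rates)


def precision_at(pred: Sequence[Box], gt: Sequence[Box], px: float = 20.0) -> float:
    """Fraction of frames whose center-location error is at most `px` pixels."""
    _check(pred, gt)
    errors = [center_error(p, g) for p, g in zip(pred, gt)]
    return sum(e <= px for e in errors) / len(errors)


if __name__ == "__main__":
    # Toy three-frame sequence: exact hit, small drift, complete loss of target.
    gt = [(10, 10, 20, 20), (30, 30, 10, 10), (0, 0, 10, 10)]
    pred = [(10, 10, 20, 20), (33, 30, 10, 10), (100, 100, 10, 10)]
    print(f"success AUC = {success_auc(pred, gt):.3f}")
    print(f"precision@20px = {precision_at(pred, gt):.3f}")
```

With the strict `>` comparison used here, a tracker whose boxes match the ground truth exactly scores a precision of 1.0 but a success AUC of 20/21 ≈ 0.952, because no frame passes the IoU threshold of 1.0.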

CLC number: TP391.4

