Journal of Jilin University (Engineering and Technology Edition), 2024, Vol. 54, Issue (7): 2072-2079. doi: 10.13229/j.cnki.jdxbgxb.20230150

• Computer Science and Technology •

A recognition network for imprinted characters on workpiece surfaces


A character recognition network for imprinted characters

Xin-dong YOU, Lei GUO, Jing HAN, Xue-qiang LYU

- Beijing Key Laboratory of Internet Culture and Digital Communication,Beijing Information Science and Technology University,Beijing 100101,China

Abstract:

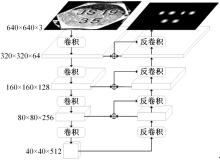

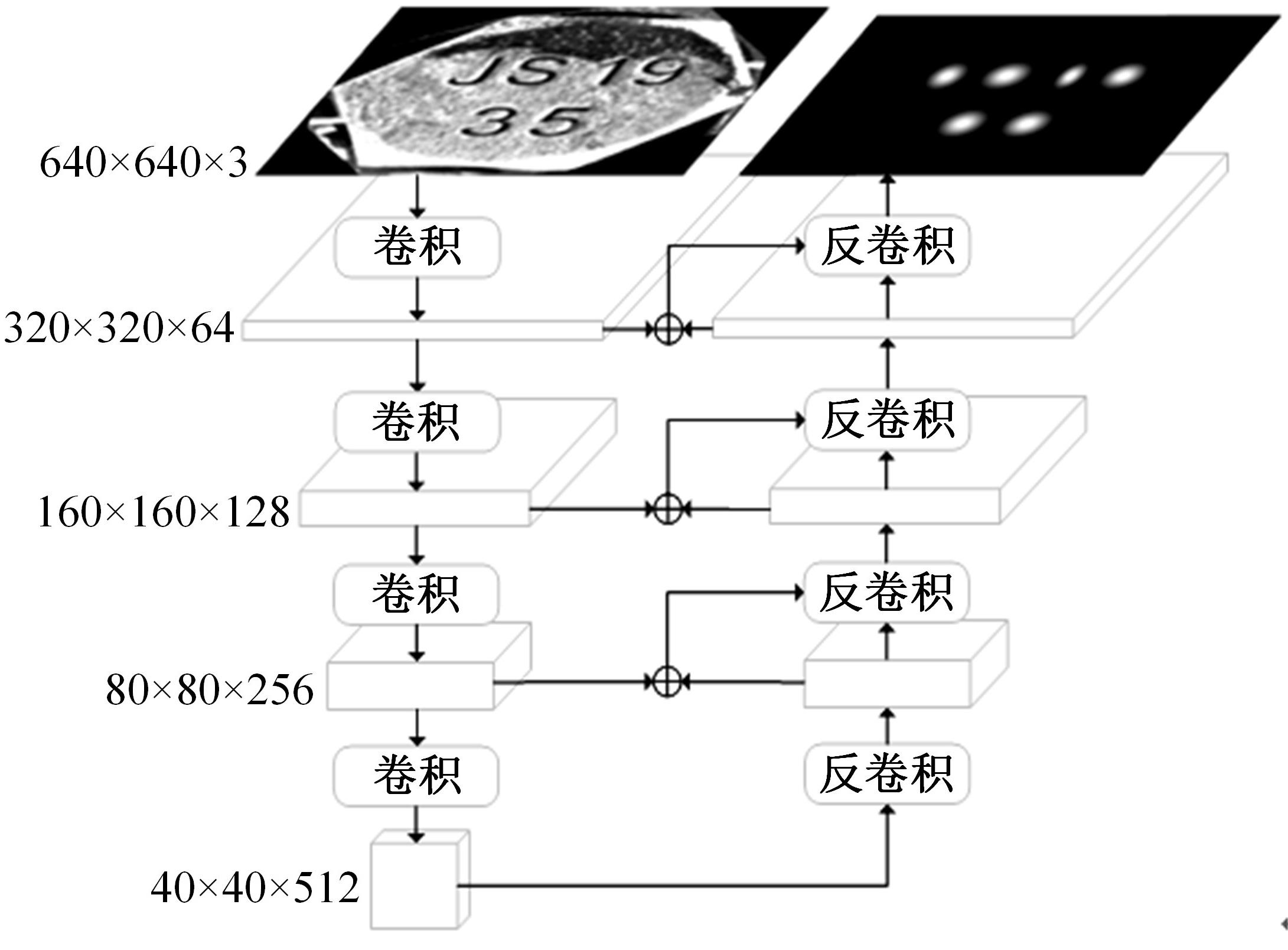

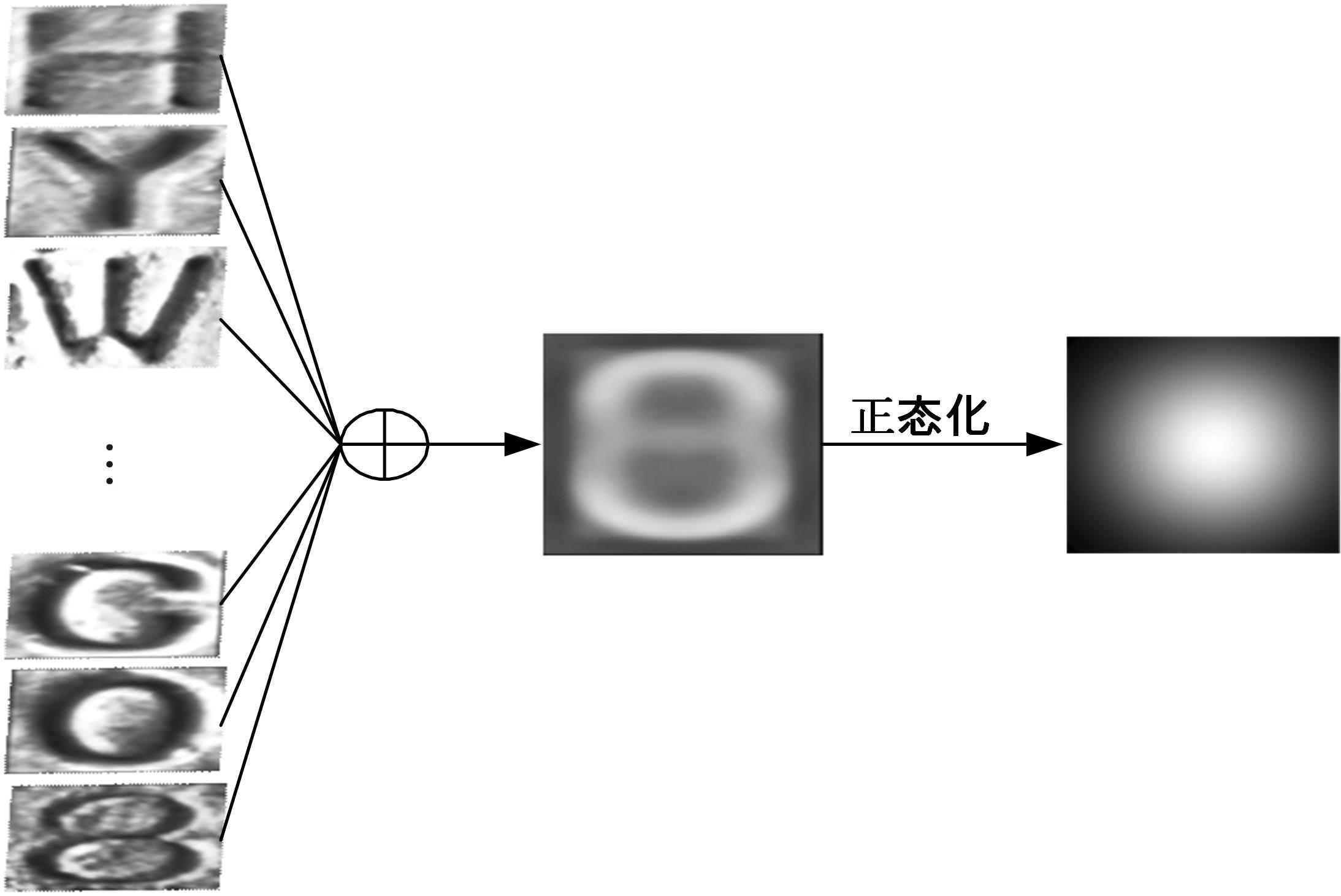

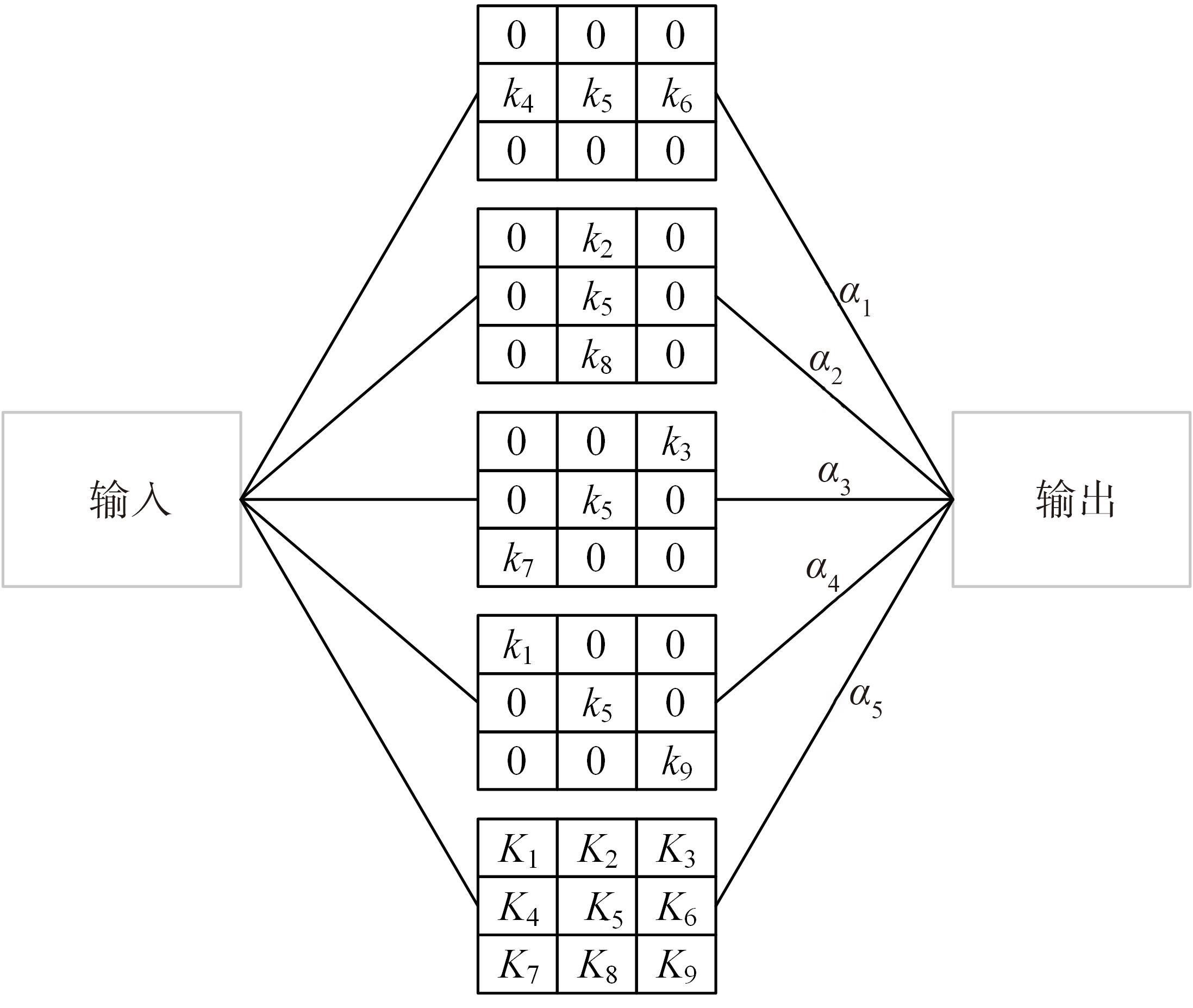

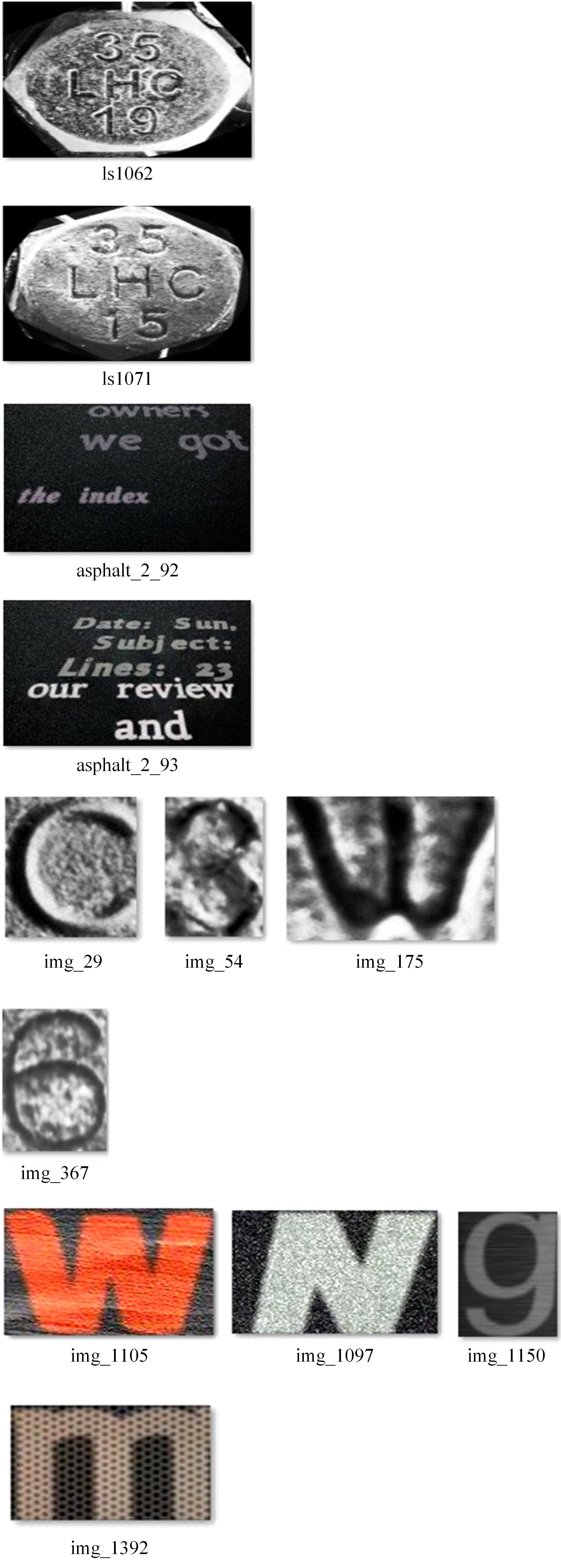

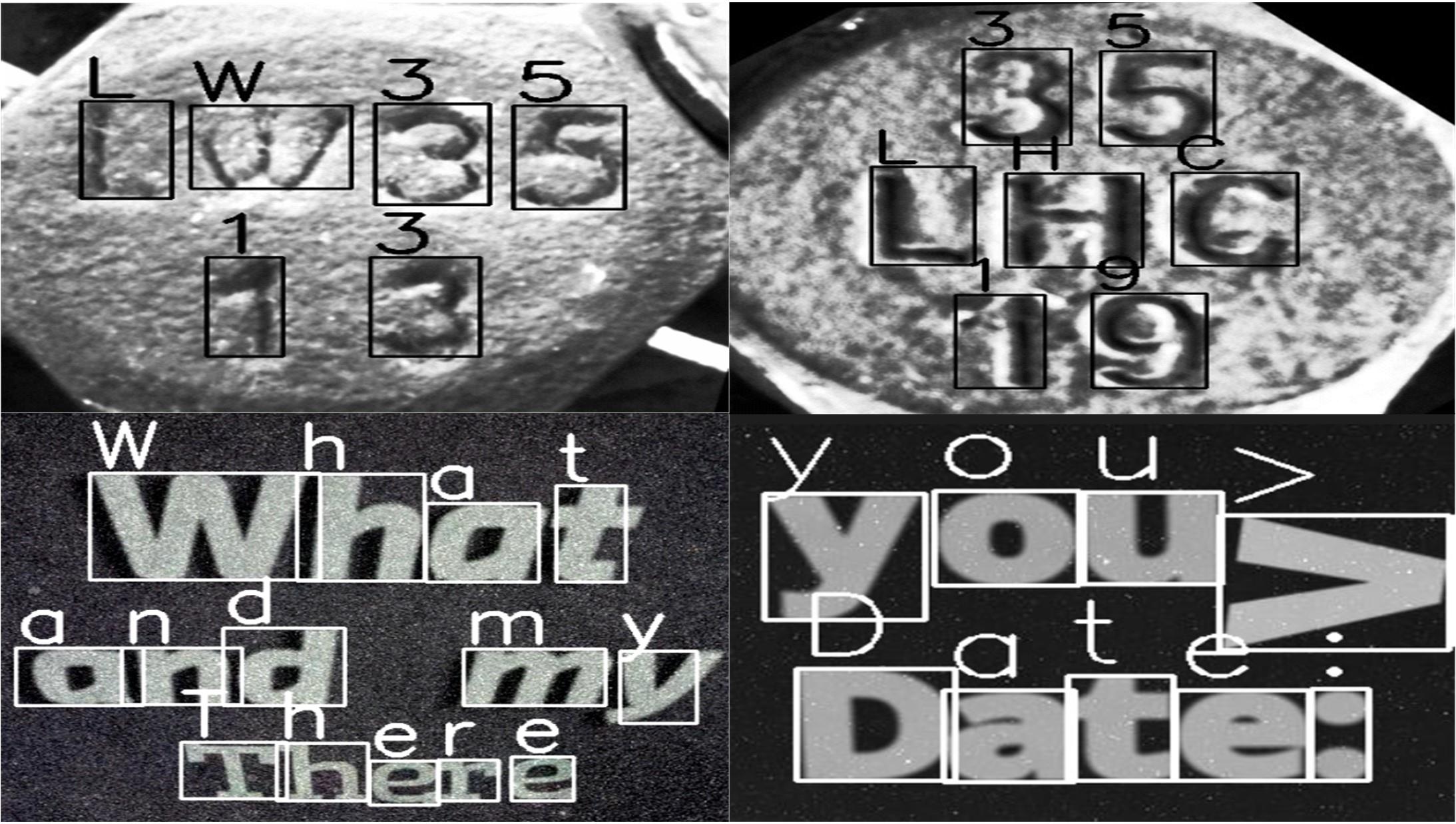

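Characters imprinted on workpiece surfaces are often uneven, rusted, or weathered, so traditional character recognition algorithms struggle to achieve satisfactory results. To address this, the recognition of imprinted characters on workpiece surfaces is treated as a special class of object detection problem, and a two-stage recognition network tailored to its characteristics is designed: a localization-classification network. The localization network uses an anchor-free method to extract character regions of interest, effectively overcoming the difficulty of extracting character regions. The classification network combines a feature-decoupled convolution module with structural re-parameterization, which improves classification accuracy without adding extra parameters to the deployed model. In addition, the classification network is trained with a cross-domain transfer learning strategy, which effectively mitigates the small-sample and class-imbalance problems encountered in practical applications. Experiments on a self-built bolt dataset and on the SynthText dataset show that the overall accuracy of the algorithm reaches 98% and 92%, respectively, outperforming the compared algorithms.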
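The abstract does not specify how the feature-decoupled convolution module is built, so the following is only a minimal sketch of one well-known form of structural re-parameterization, an ACNet-style asymmetric convolution block. It assumes a single-channel input, three parallel branches (3x3, 1x3 and 3x1 kernels), each followed by inference-mode BatchNorm, and zero "same" padding. It illustrates how such a block can add capacity during training yet cost nothing extra at inference: by linearity, each BatchNorm folds into its kernel, and the 1x3 and 3x1 kernels embed in the centre row and column of a 3x3 kernel, so the whole block collapses into one 3x3 convolution plus a bias. The helpers `conv2d_same`, `fold_bn`, `pad_to_3x3`, `merge_branches` and `demo`, as well as all weights and statistics, are hypothetical and made up for illustration; this is not the authors' module.

```python
import math

# Hypothetical helpers for illustration only; not taken from the paper.

def conv2d_same(x, k, bias=0.0):
    """Single-channel 2D cross-correlation (as in CNN conv layers) with zero
    padding so the output has the same size as the input. Assumes odd kernel sides."""
    h, w = len(x), len(x[0])
    kh, kw = len(k), len(k[0])
    ph, pw = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = bias
            for a in range(kh):
                for b in range(kw):
                    r, c = i + a - ph, j + b - pw
                    if 0 <= r < h and 0 <= c < w:  # outside the image counts as zero padding
                        acc += k[a][b] * x[r][c]
            out[i][j] = acc
    return out


def fold_bn(k, gamma, beta, mean, var, eps=1e-5):
    """Fold an inference-mode BatchNorm (running mean/var) into a bias-free conv kernel."""
    scale = gamma / math.sqrt(var + eps)
    return [[v * scale for v in row] for row in k], beta - mean * scale


def pad_to_3x3(k):
    """Embed a 1x3 or 3x1 kernel in the centre row or column of a zero 3x3 kernel."""
    kh, kw = len(k), len(k[0])
    r0, c0 = (3 - kh) // 2, (3 - kw) // 2
    out = [[0.0] * 3 for _ in range(3)]
    for a in range(kh):
        for b in range(kw):
            out[r0 + a][c0 + b] = k[a][b]
    return out


def merge_branches(branches):
    """Collapse parallel (kernel, (gamma, beta, mean, var)) branches into one 3x3 kernel + bias."""
    fused_k = [[0.0] * 3 for _ in range(3)]
    fused_b = 0.0
    for k, bn in branches:
        kk, bb = fold_bn(k, *bn)
        kk = pad_to_3x3(kk)
        for a in range(3):
            for b in range(3):
                fused_k[a][b] += kk[a][b]
        fused_b += bb
    return fused_k, fused_b


def demo():
    """Max |difference| between the three-branch block and its fused single 3x3 conv."""
    x = [[float((3 * i + 5 * j) % 7) for j in range(5)] for i in range(4)]
    # Made-up weights and BN statistics (gamma, beta, running_mean, running_var).
    branches = [
        ([[0.1, -0.2, 0.3], [0.0, 0.5, -0.1], [0.2, 0.1, -0.3]], (1.2, 0.1, 0.05, 0.9)),
        ([[0.4, -0.6, 0.2]], (0.8, -0.2, 0.1, 1.1)),      # 1x3 branch
        ([[0.3], [0.7], [-0.5]], (1.0, 0.0, -0.1, 0.5)),  # 3x1 branch
    ]
    # Multi-branch view: conv -> BN per branch, then sum the branch outputs.
    multi = [[0.0] * 5 for _ in range(4)]
    for k, (gamma, beta, mean, var) in branches:
        z = conv2d_same(x, k)
        scale = gamma / math.sqrt(var + 1e-5)
        for i in range(4):
            for j in range(5):
                multi[i][j] += scale * (z[i][j] - mean) + beta
    # Re-parameterized view: one 3x3 conv with the fused kernel and bias.
    fused_k, fused_b = merge_branches(branches)
    single = conv2d_same(x, fused_k, fused_b)
    return max(abs(p - q) for rm, rs in zip(multi, single) for p, q in zip(rm, rs))


if __name__ == "__main__":
    print("max abs difference:", demo())
```

In this sketch the training-time block holds 15 kernel weights (9 + 3 + 3) plus BatchNorm statistics per branch, while the fused block holds 9 weights and 1 bias, the same as a plain 3x3 convolution; `demo()` should report a difference only at floating-point round-off level.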

CLC number:

- TP391

