Journal of Jilin University (Engineering and Technology Edition), 2023, Vol. 53, Issue (10): 2952-2963. doi: 10.13229/j.cnki.jdxbgxb.20211348

SVM parameters and feature selection optimization based on improved whale algorithm

Hui GUO1,2, Jie-di FU1,2, Zhen-dong LI1,2, Yan YAN3, Xiao LI1,2

- 1. School of Information Engineering, Ningxia University, Yinchuan 750021, China
- 2. Collaborative Innovation Center for Ningxia Big Data and Artificial Intelligence Co-founded by Ningxia Municipality and Ministry of Education, Yinchuan 750021, China
- 3. Electric Power Research Institute, State Grid Ningxia Power Co., Ltd., Yinchuan 750011, China

Abstract:

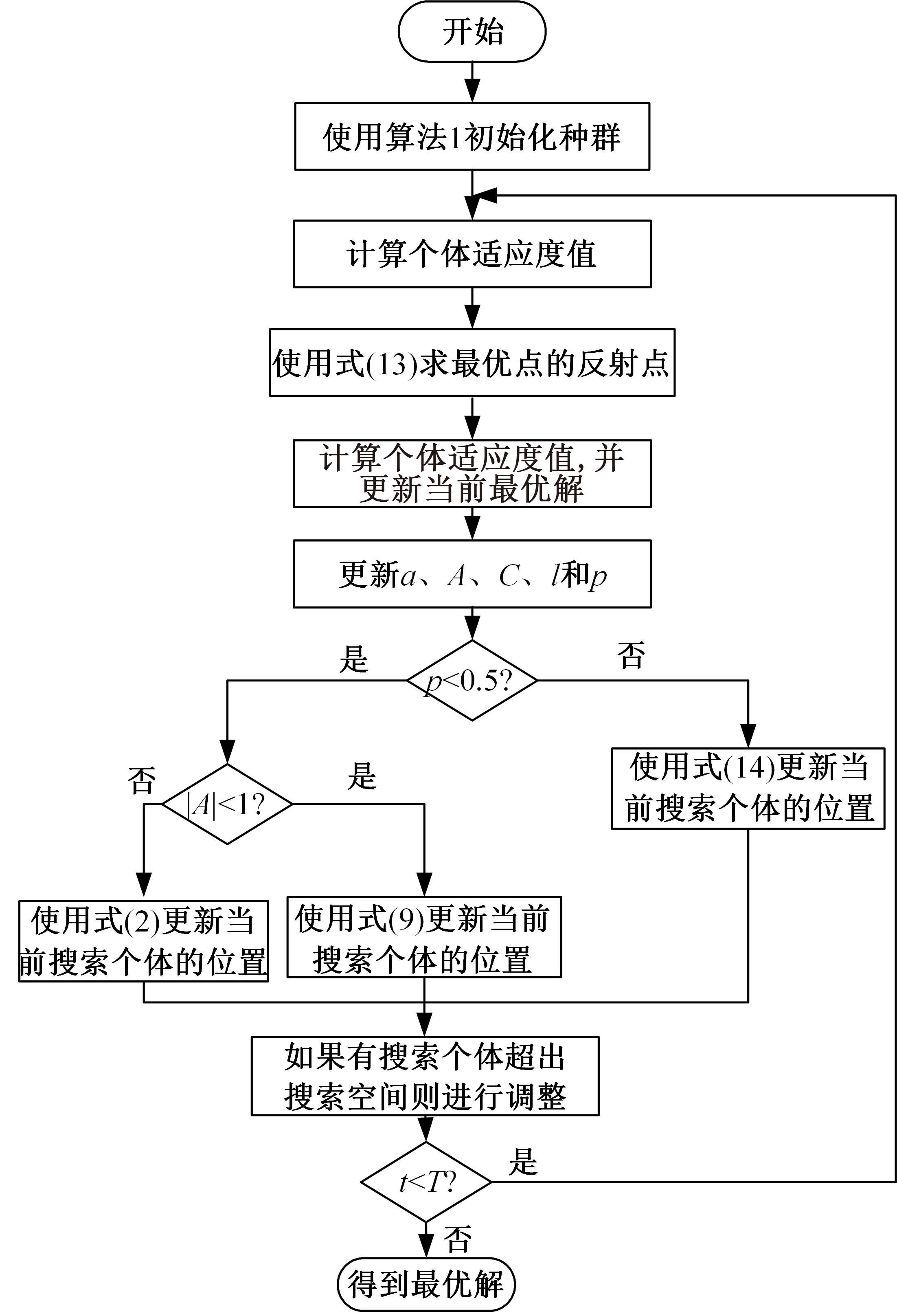

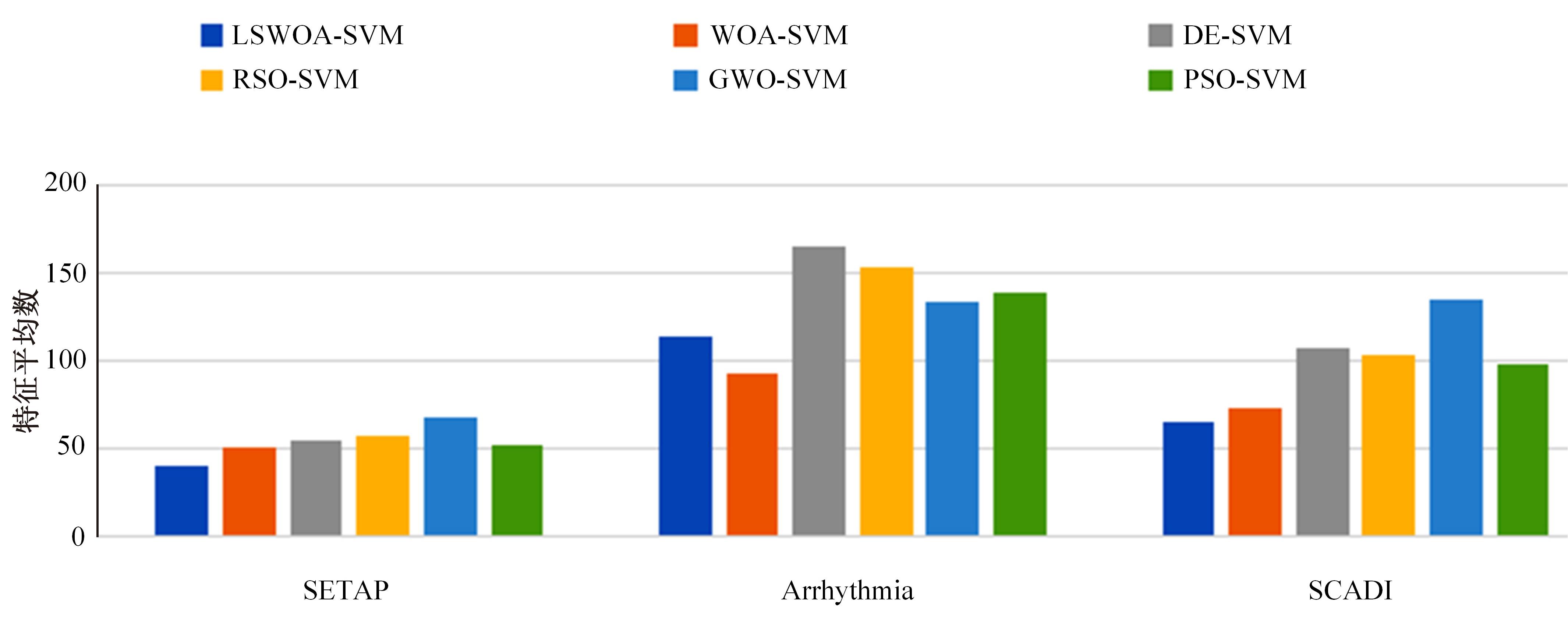

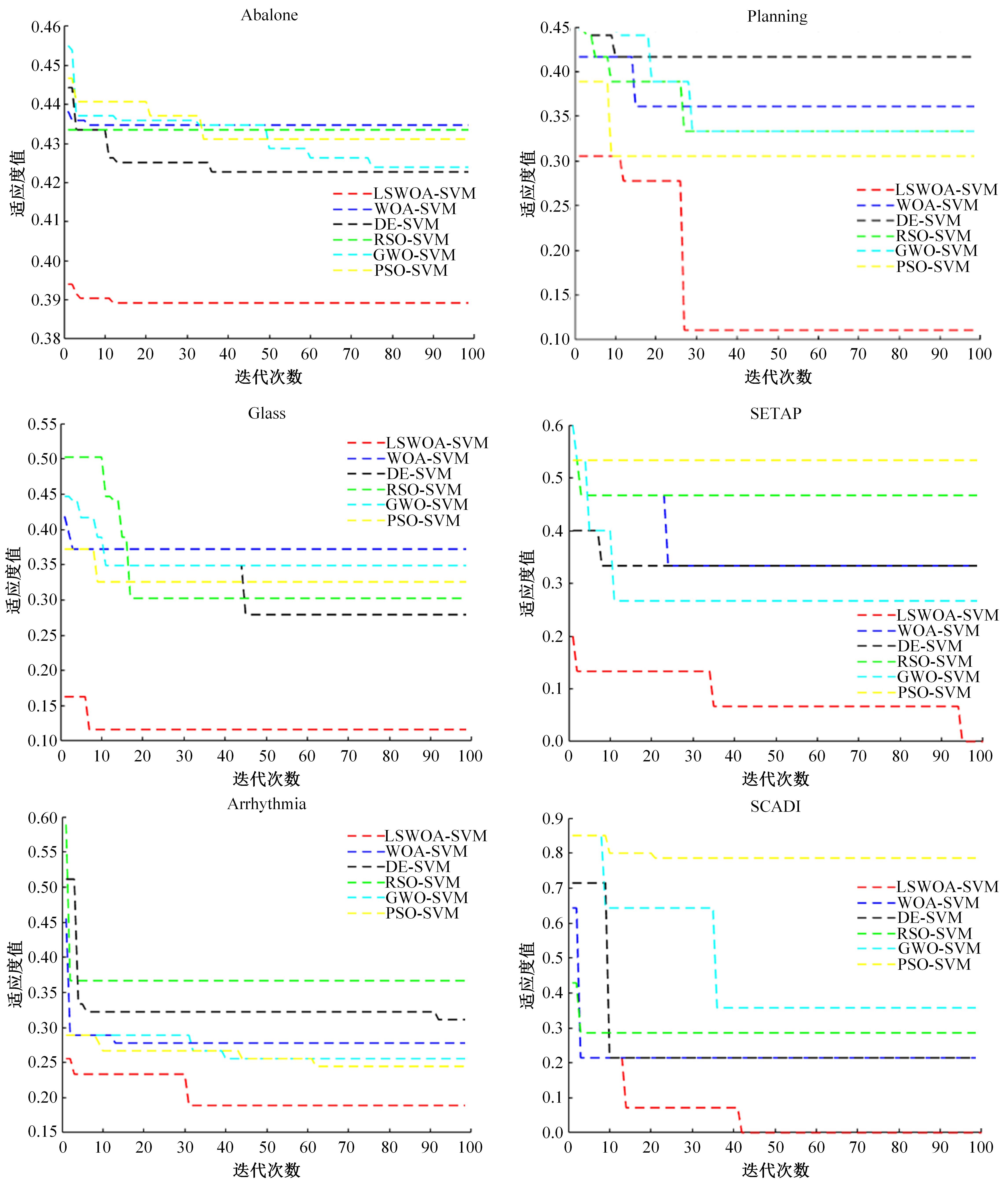

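To address the low classification accuracy of the traditional support vector machine (SVM), a feature selection model is proposed in which an improved whale optimization algorithm synchronously optimizes the SVM. First, the Levy flight strategy is used to apply a mutation perturbation to the spiral position update of the whale optimization algorithm, and the reflection operation of the simplex method is used to compute reflection points for the elite individuals in the population; tests on standard benchmark functions show that these improvements effectively increase the convergence speed and computational accuracy of the algorithm. Second, the SVM kernel parameters and the feature selection objective are treated as joint optimization targets, so that the optimal kernel parameters and the corresponding optimal feature subset are obtained together. Finally, feature selection simulation experiments are carried out on UCI benchmark datasets and a real breast cancer dataset, and are evaluated by average classification accuracy, average fitness value, standard deviation of fitness, and number of selected features. The results show that the proposed algorithm is effective at reducing feature dimensionality and classifying data. On the real breast cancer dataset, its classification accuracy is 11.053% higher than that of the traditional SVM.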
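The abstract does not give the update formulas, so the following is only a minimal Python sketch of the three ingredients it names, under stated assumptions rather than the authors' implementation. It assumes Mantegna's algorithm for the Levy step, an additive Levy perturbation of the standard whale spiral update scaled by a hypothetical factor `alpha`, the Nelder-Mead reflection `x_r = x_c + a (x_c - x)`, and a position vector whose first two entries encode the SVM parameters and whose remaining entries encode feature flags. The parameter ranges, the 0.5 threshold, and the weighted fitness are likewise illustrative assumptions.

```python
import math
import random


def levy_step(beta=1.5, rng=random):
    """One Levy-flight step via Mantegna's algorithm (an assumed choice)."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    sigma_u = (num / den) ** (1 / beta)
    u = rng.gauss(0.0, sigma_u)
    v = rng.gauss(0.0, 1.0)
    while v == 0.0:  # guard against division by zero
        v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1 / beta)


def spiral_update_with_levy(x, best, b=1.0, alpha=0.01, rng=random):
    """Standard WOA spiral move toward `best`, plus an assumed Levy perturbation."""
    l = rng.uniform(-1.0, 1.0)  # spiral parameter in [-1, 1]
    new_x = []
    for xi, bi in zip(x, best):
        spiral = abs(bi - xi) * math.exp(b * l) * math.cos(2 * math.pi * l) + bi
        # Hypothetical perturbation form: Levy step scaled by distance to best.
        new_x.append(spiral + alpha * levy_step(rng=rng) * (xi - bi))
    return new_x


def reflect(point, centroid, a=1.0):
    """Nelder-Mead reflection of `point` through `centroid`."""
    return [c + a * (c - p) for p, c in zip(point, centroid)]


def decode(position, c_range=(0.01, 100.0), g_range=(0.0001, 10.0)):
    """Map a position in [0, 1]^d to (C, gamma, feature mask); ranges are assumed."""
    c = c_range[0] + position[0] * (c_range[1] - c_range[0])
    gamma = g_range[0] + position[1] * (g_range[1] - g_range[0])
    mask = [p > 0.5 for p in position[2:]]
    return c, gamma, mask


def fitness(error_rate, mask, weight=0.99):
    """Assumed weighted objective: classification error plus feature ratio."""
    if not any(mask):  # a solution selecting no feature is treated as invalid
        return float("inf")
    return weight * error_rate + (1 - weight) * sum(mask) / len(mask)
```

In a full optimizer, `error_rate` would come from cross-validating an SVM trained with the decoded parameters on the masked features, and a reflected point would replace its original only when its fitness is lower.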

CLC Number:

- TP301.6

