Journal of Jilin University (Engineering and Technology Edition) ›› 2024, Vol. 54 ›› Issue (8): 2338-2347. doi: 10.13229/j.cnki.jdxbgxb.20221373

Three-dimensional vehicle multi-target tracking based on trajectory optimization

Hua CAI1, Ting-ting KOU1,2, Yi-ning YANG3, Zhi-yong MA4, Wei-gang WANG4, Jun-xi SUN5

- 1. School of Electronic Information Engineering, Changchun University of Science and Technology, Changchun 130022, China

- 2. Industrial College of Artificial Intelligence, Changchun University of Architecture, Changchun 130604, China

- 3. National Key Laboratory of Electromagnetic Space Security, Tianjin 300308, China

- 4. No. 2 Department of Urology, the First Hospital of Jilin University, Changchun 130061, China

- 5. School of Information Science and Technology, Northeast Normal University, Changchun 130117, China

Abstract:

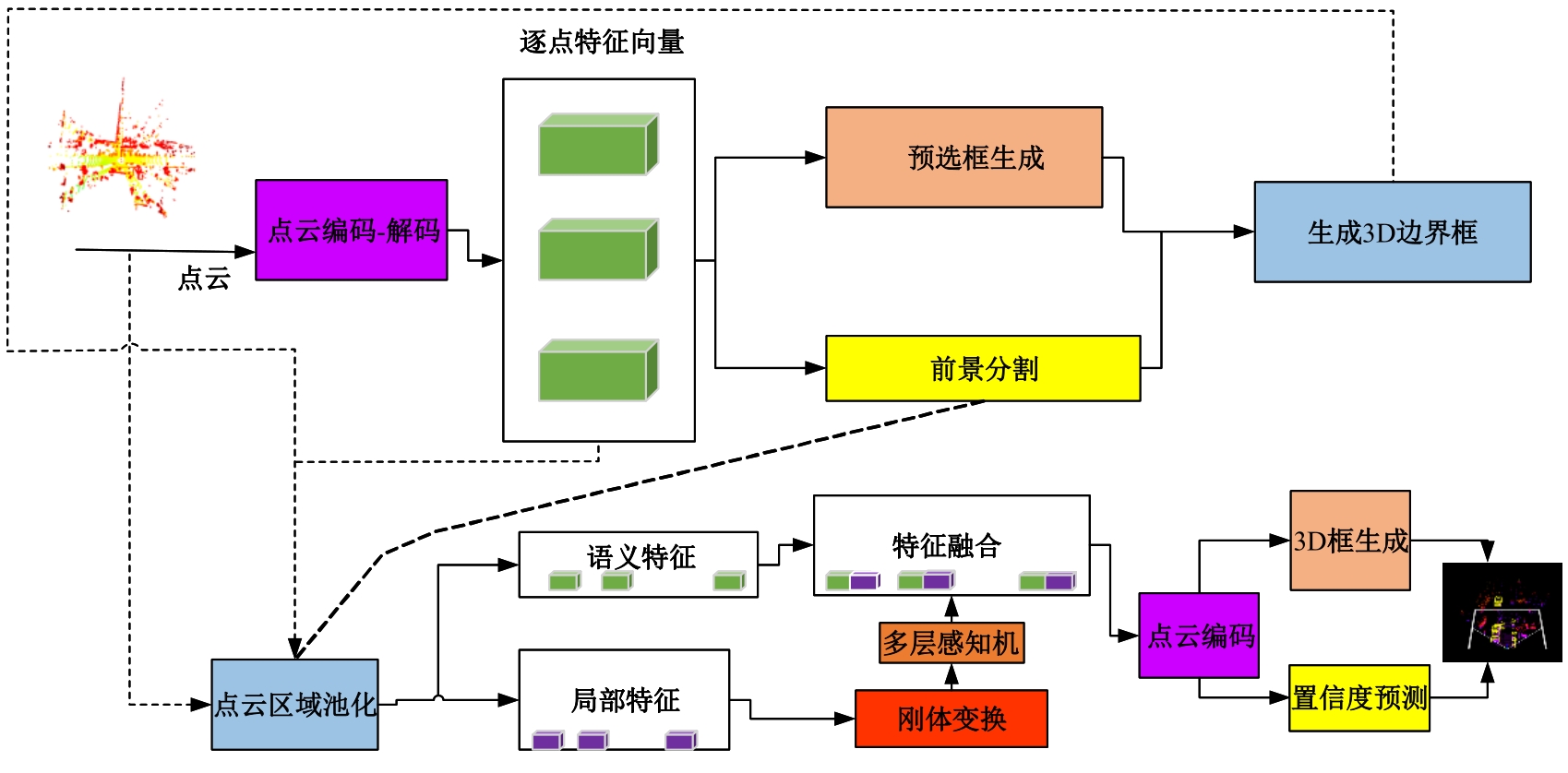

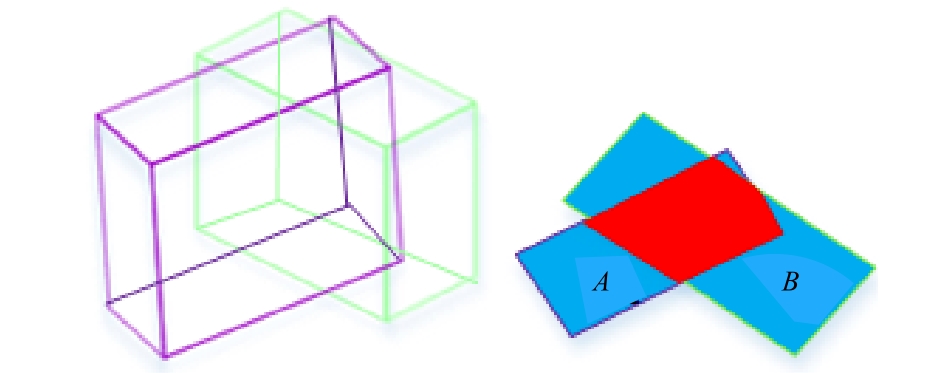

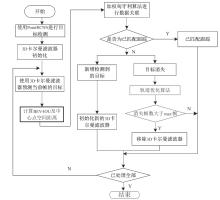

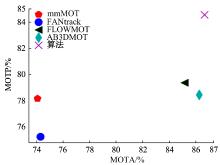

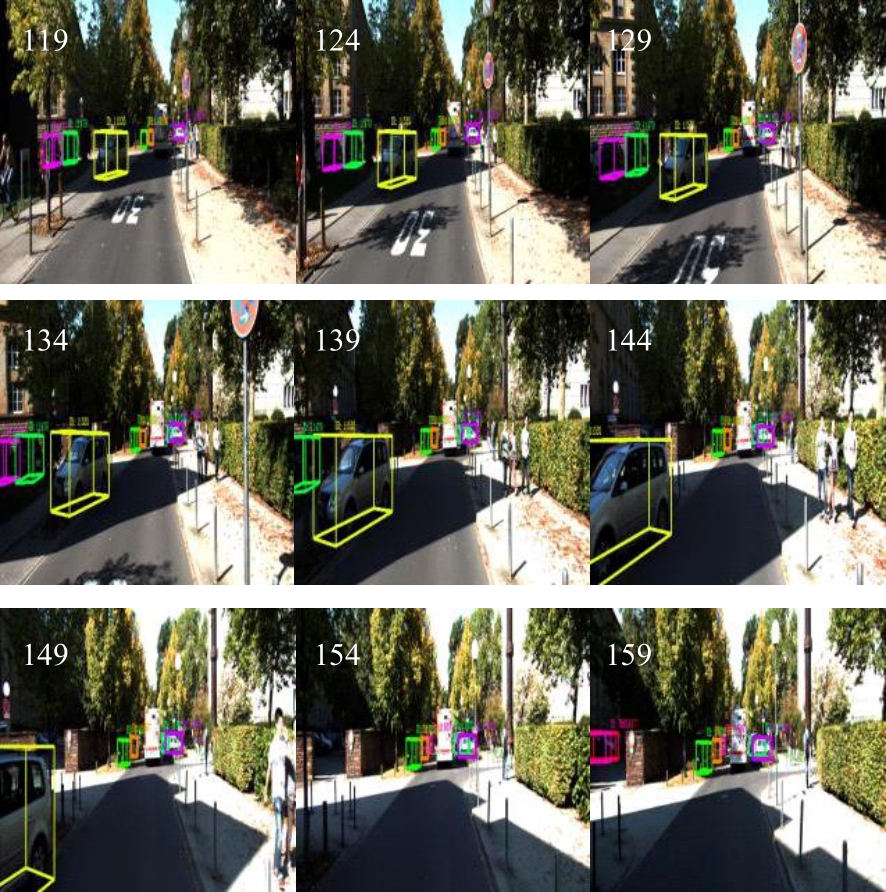

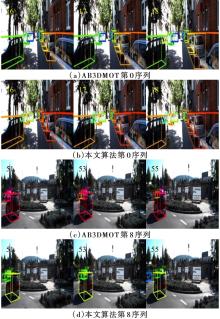

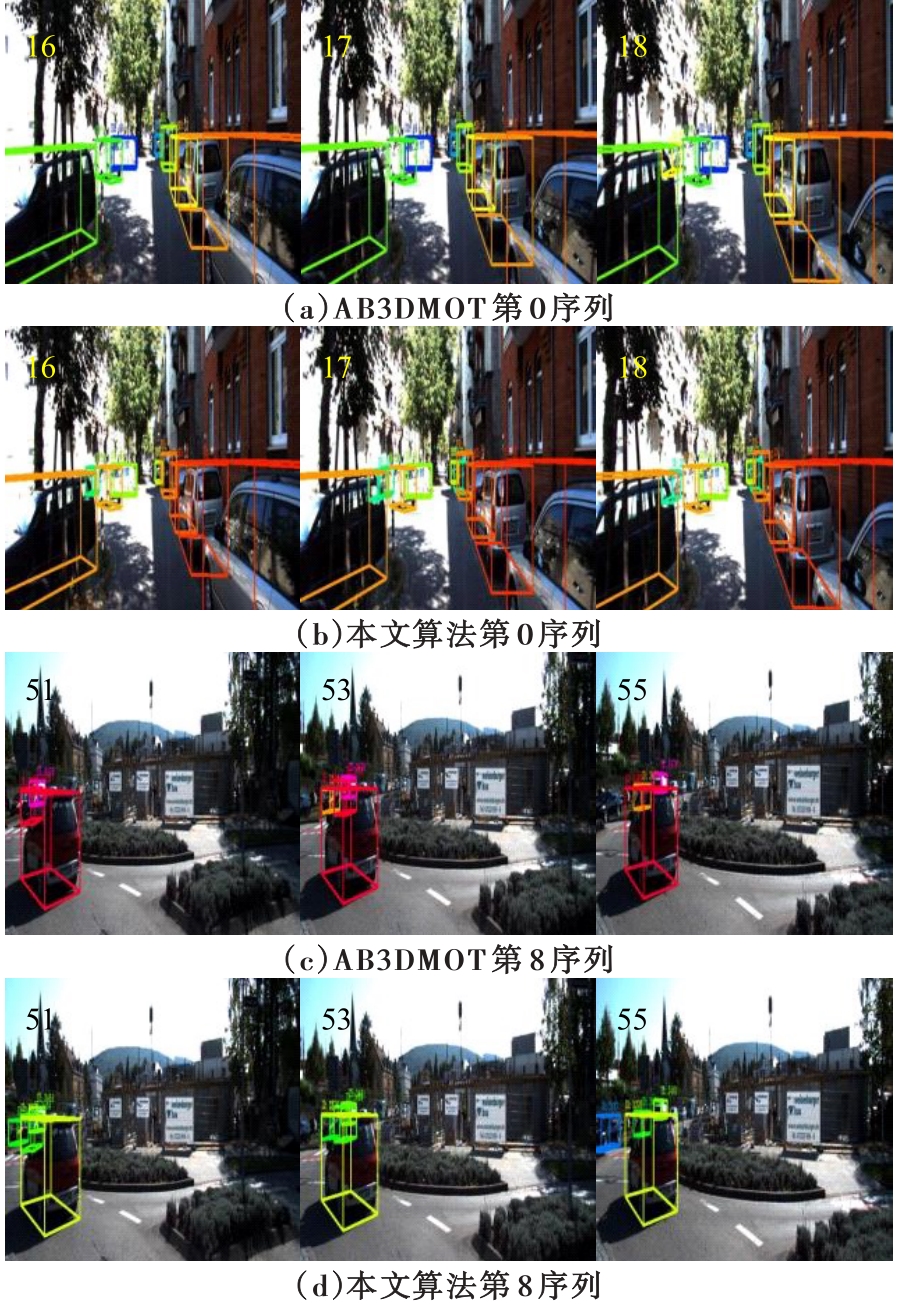

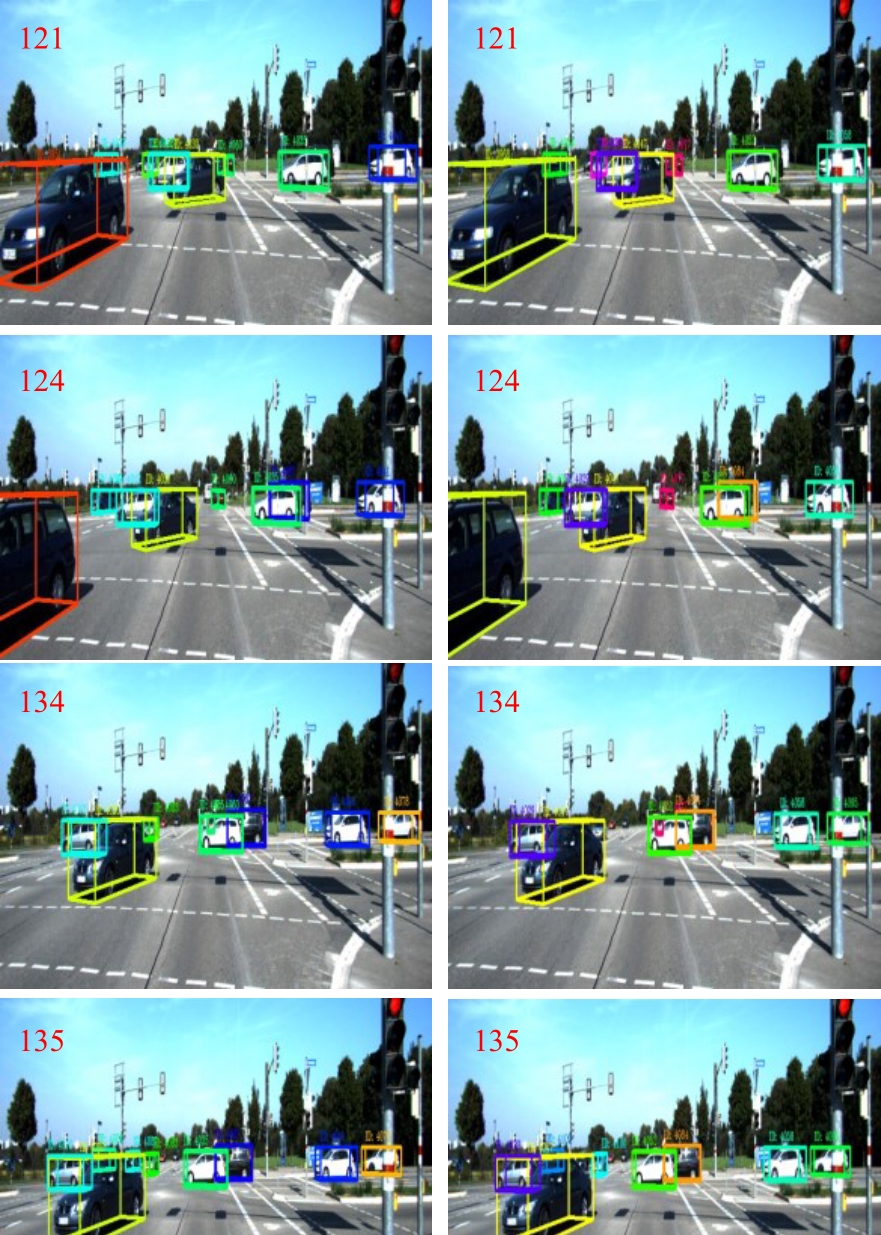

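To address the poor tracking performance of multi-target tracking algorithms when targets are occluded, this paper proposes a multi-target tracking algorithm based on 3D point cloud detection. A point-cloud-based 3D object detector detects vehicle targets and obtains their 3D position information. A 3D Kalman filter then uses the position of each target tracked in the current frame to predict its position in the next frame. The spatial distance between 3D center points and the intersection over union (IoU) in the bird's-eye view are fused into a weight, and an improved Hungarian algorithm performs data association. To address the label switching that occurs for a target before and after occlusion, a trajectory optimization algorithm is proposed. In experiments on the KITTI dataset, the tracking precision and tracking accuracy for the vehicle class reach 84.71% and 86.63%, respectively. Under the same threshold, the method improves on AB3DMOT by 6.28% and 0.39%, respectively. The experimental results show that the algorithm effectively improves 3D multi-target tracking performance.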
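The data association step can be illustrated with a minimal Python sketch. It is not the authors' implementation: the abstract does not give the fusion formula or describe how the Hungarian algorithm was improved. The sketch assumes the cost is a weighted sum of normalized 3D center distance and (1 - BEV IoU), treats boxes as axis-aligned in the bird's-eye view (yaw ignored), and replaces the Hungarian algorithm with an exhaustive search that returns the same minimum-cost matching on small inputs. The names `bev_iou`, `fused_cost`, `associate`, `alpha`, `max_dist`, and `max_cost` are hypothetical.

```python
from itertools import permutations
from math import dist

# Box layout (assumed for this sketch): (x, y, z, length, width),
# where (x, y) is the ground-plane center and yaw is ignored.


def bev_iou(a, b):
    """IoU of two axis-aligned rectangles in the bird's-eye view (x-y plane)."""
    ax, ay, _, al, aw = a
    bx, by, _, bl, bw = b
    overlap_x = min(ax + al / 2, bx + bl / 2) - max(ax - al / 2, bx - bl / 2)
    overlap_y = min(ay + aw / 2, by + bw / 2) - max(ay - aw / 2, by - bw / 2)
    inter = max(0.0, overlap_x) * max(0.0, overlap_y)
    union = al * aw + bl * bw - inter
    return inter / union if union > 0 else 0.0


def fused_cost(track, det, alpha=0.5, max_dist=10.0):
    """Assumed fusion: alpha * normalized center distance + (1 - alpha) * (1 - BEV IoU)."""
    d = min(dist(track[:3], det[:3]) / max_dist, 1.0)
    return alpha * d + (1.0 - alpha) * (1.0 - bev_iou(track, det))


def associate(tracks, dets, max_cost=0.8):
    """Minimum-cost one-to-one matching by exhaustive search (small inputs only)."""
    n, m = len(tracks), len(dets)
    if n == 0 or m == 0:
        return []
    cost = [[fused_cost(t, d) for d in dets] for t in tracks]
    if n <= m:
        # Every track gets a distinct detection.
        candidates = (list(zip(range(n), p)) for p in permutations(range(m), n))
    else:
        # Every detection gets a distinct track.
        candidates = (list(zip(p, range(m))) for p in permutations(range(n), m))
    best_pairs, best_total = [], float("inf")
    for pairs in candidates:
        total = sum(cost[i][j] for i, j in pairs)
        if total < best_total:
            best_pairs, best_total = pairs, total
    # Gate: pairs that are too costly are left unmatched.
    return [(i, j) for i, j in best_pairs if cost[i][j] <= max_cost]


# Predicted track boxes (e.g. from the Kalman filter) and new detections.
tracks = [(0.0, 0.0, 0.0, 4.0, 2.0), (10.0, 0.0, 0.0, 4.0, 2.0)]
dets = [(10.5, 0.2, 0.0, 4.0, 2.0), (0.3, -0.1, 0.0, 4.0, 2.0), (30.0, 5.0, 0.0, 4.0, 2.0)]
matches = associate(tracks, dets)
print(matches)  # (track index, detection index) pairs
```

In this example track 0 is paired with detection 1 and track 1 with detection 0, while detection 2 stays unmatched and would typically start a new trajectory. Exhaustive search grows factorially with the number of objects, so for realistic scenes a polynomial-time solver such as `scipy.optimize.linear_sum_assignment` applied to the same cost matrix would be the usual choice.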

CLC number:

- TP391.4
