Journal of Jilin University (Engineering and Technology Edition) ›› 2024, Vol. 54 ›› Issue (9): 2658-2667. doi: 10.13229/j.cnki.jdxbgxb.20221461

• Computer Science and Technology •

Road object detection method based on improved YOLOv5 algorithm

Hong-zhi WANG, Ming-xuan SONG, Chao CHENG, Dong-xuan XIE

- College of Computer Science and Engineering, Changchun University of Technology, Changchun 130012, China

Abstract:

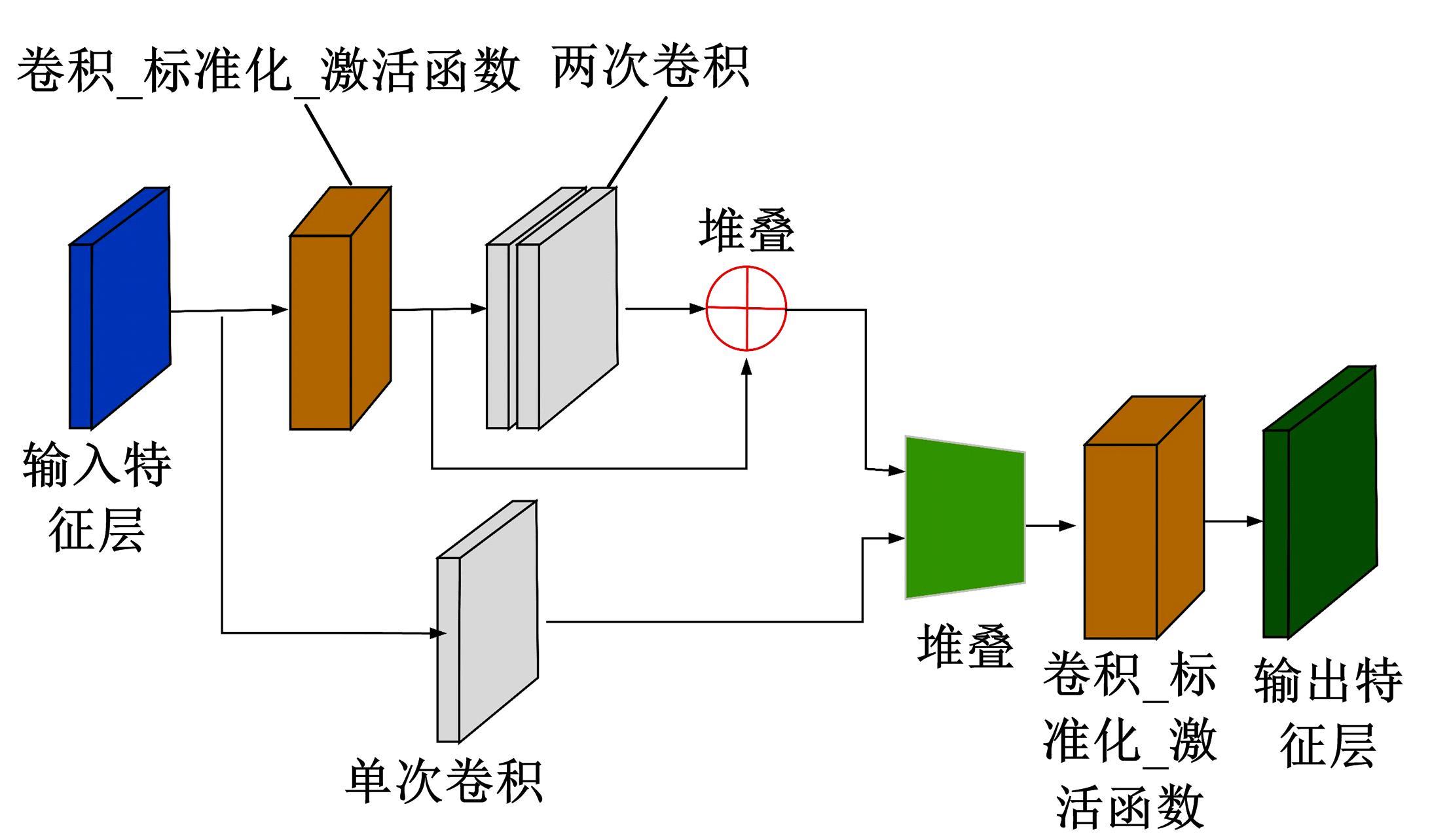

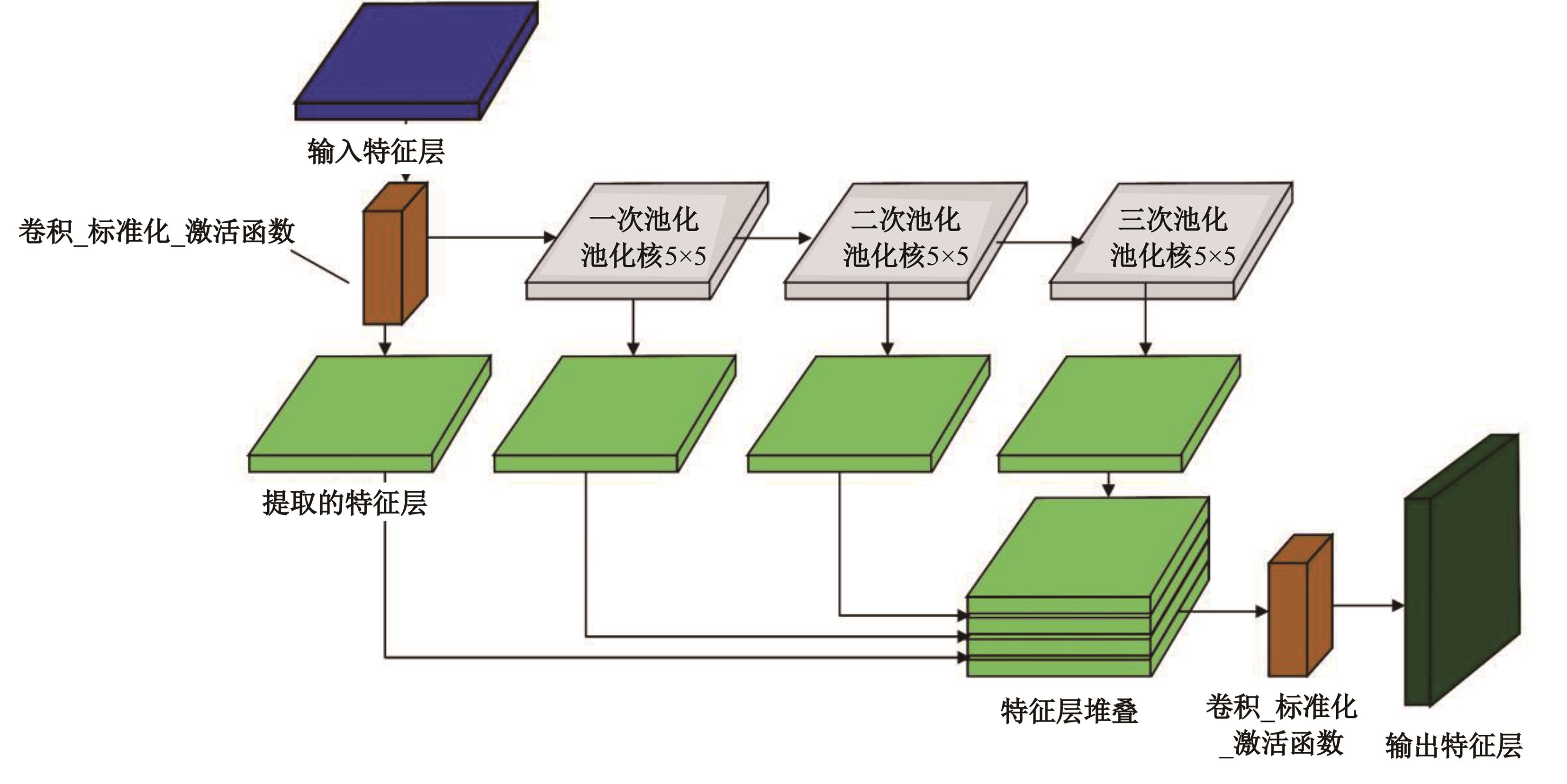

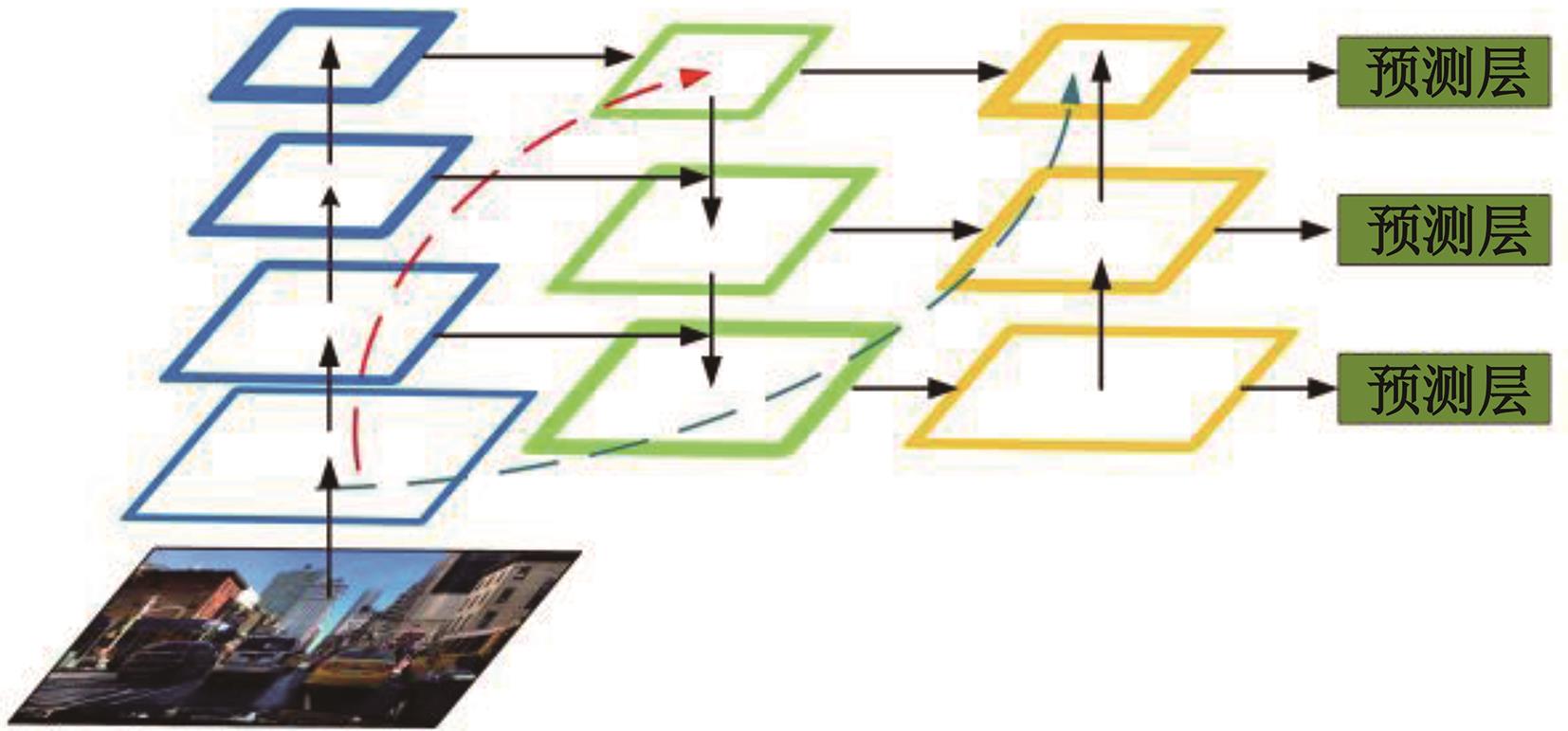

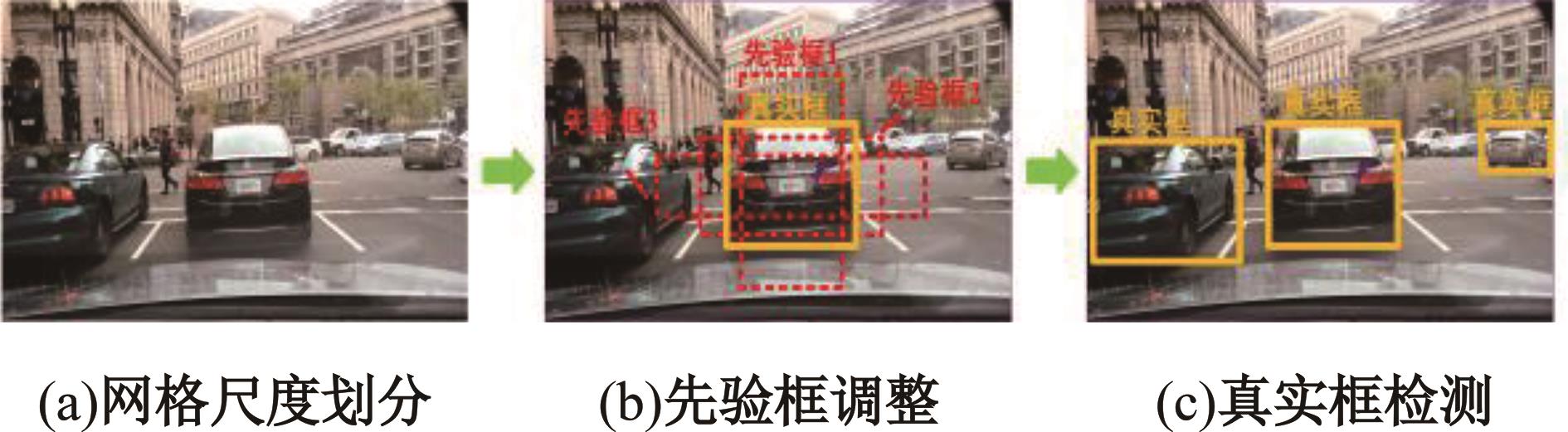

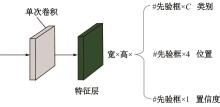

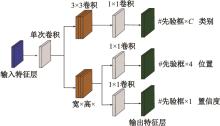

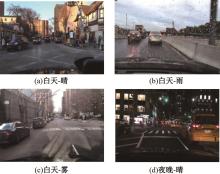

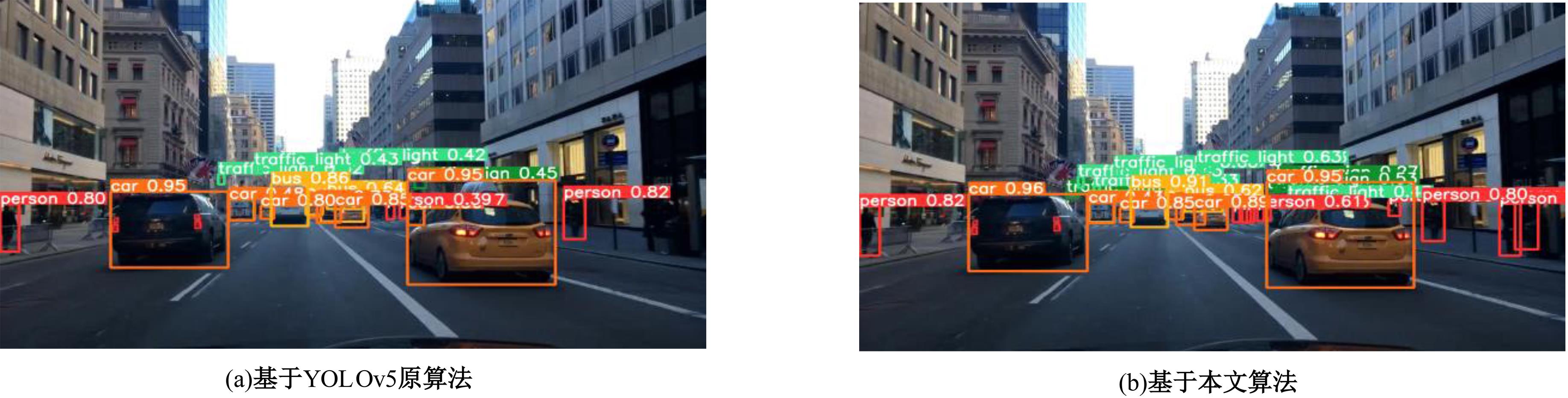

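To address the object detection problems of existing networks in road traffic scenes, namely poor recognition of distant objects, insufficient expression of object features, and inaccurate object localization, a road object detection method based on an improved YOLOv5 algorithm is proposed. First, the feature extraction structure of YOLOv5 is summarized and the shortcomings of the original network structure are analyzed. Second, a small-object detection layer is added to the original network: a supplementary fused feature layer and an additional detection head improve the network's ability to recognize distant objects. Third, the original detection head is decoupled so that bounding-box regression and object classification run in two separate branches, which improves the network's ability to express object features. Fourth, the prior (anchor) boxes are re-clustered, with the K-means++ algorithm used to adjust their height-to-width ratios, which strengthens the network's ability to localize objects. Finally, ablation, comparison, and visualization experiments are carried out with AP, mAP, and FPS as evaluation metrics. The results show that on the BDD100K dataset the proposed algorithm reaches a detection speed of 95.2 frames/s and an average precision of 55.6%, which is 6.7% higher than that of YOLOv5. The improved YOLOv5 algorithm therefore meets real-time detection requirements while achieving high detection accuracy, is suitable for road object detection in complex traffic environments, and offers guidance for improving the visual perception of autonomous vehicles.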
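The anchor re-clustering step can be illustrated with a small, standard-library-only sketch. It is not the authors' implementation: the abstract only states that K-means++ is used to adjust the height-to-width ratios of the prior boxes. The `1 - IoU` distance between (width, height) pairs, the mean-based centroid update, and the names `iou_wh`, `kmeanspp_init`, and `cluster_anchors` are all assumptions made for illustration, and the sample boxes are made-up values rather than BDD100K statistics.

```python
import random


def iou_wh(a, b):
    """IoU of two (width, height) boxes that share the same top-left corner."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    return inter / (a[0] * a[1] + b[0] * b[1] - inter)


def kmeanspp_init(boxes, k, rng):
    """K-means++ seeding: sample each new centre with probability
    proportional to its squared distance from the nearest chosen centre.
    Distance is assumed to be 1 - IoU (not stated in the abstract)."""
    centroids = [rng.choice(boxes)]
    while len(centroids) < k:
        d2 = [min(1.0 - iou_wh(b, c) for c in centroids) ** 2 for b in boxes]
        if sum(d2) == 0.0:
            # Every box coincides with a chosen centre; fall back to uniform.
            centroids.append(rng.choice(boxes))
        else:
            centroids.append(rng.choices(boxes, weights=d2, k=1)[0])
    return centroids


def cluster_anchors(boxes, k, iters=50, seed=0):
    """Cluster (width, height) pairs into k anchors, sorted by area."""
    rng = random.Random(seed)
    centroids = kmeanspp_init(boxes, k, rng)
    for _ in range(iters):
        # Assignment step: each box joins the centroid it overlaps most.
        groups = [[] for _ in range(k)]
        for b in boxes:
            nearest = max(range(k), key=lambda i: iou_wh(b, centroids[i]))
            groups[nearest].append(b)
        # Update step: mean width and height; empty groups keep their centre.
        updated = [
            (sum(w for w, _ in g) / len(g), sum(h for _, h in g) / len(g)) if g else c
            for g, c in zip(groups, centroids)
        ]
        if updated == centroids:
            break
        centroids = updated
    return sorted(centroids, key=lambda c: c[0] * c[1])


# Hypothetical label sizes in pixels, for demonstration only.
sample_boxes = [
    (10.0, 12.0), (12.0, 10.0), (11.0, 11.0),
    (50.0, 60.0), (55.0, 58.0), (60.0, 50.0),
    (200.0, 220.0), (210.0, 200.0), (220.0, 210.0),
]
for w, h in cluster_anchors(sample_boxes, k=3):
    print(f"anchor {w:.1f} x {h:.1f}, height/width = {h / w:.2f}")
```

In practice the input would be the (width, height) of every labelled box in the training set, rescaled to the network input size, and the resulting anchors would be split across the detection heads from smallest to largest.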

CLC number:

- U492.8

