Journal of Jilin University (Engineering and Technology Edition), 2019, Vol. 49, Issue (5): 1392-1404. doi: 10.13229/j.cnki.jdxbgxb20190078



Causal reasoning decision-making for vehicle longitudinal automatic driving

Zhen-hai GAO, Tian-jun SUN, Lei HE

- State Key Laboratory of Automobile Simulation and Control, Jilin University, Changchun 130022, China

Abstract:

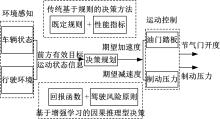

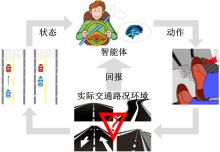

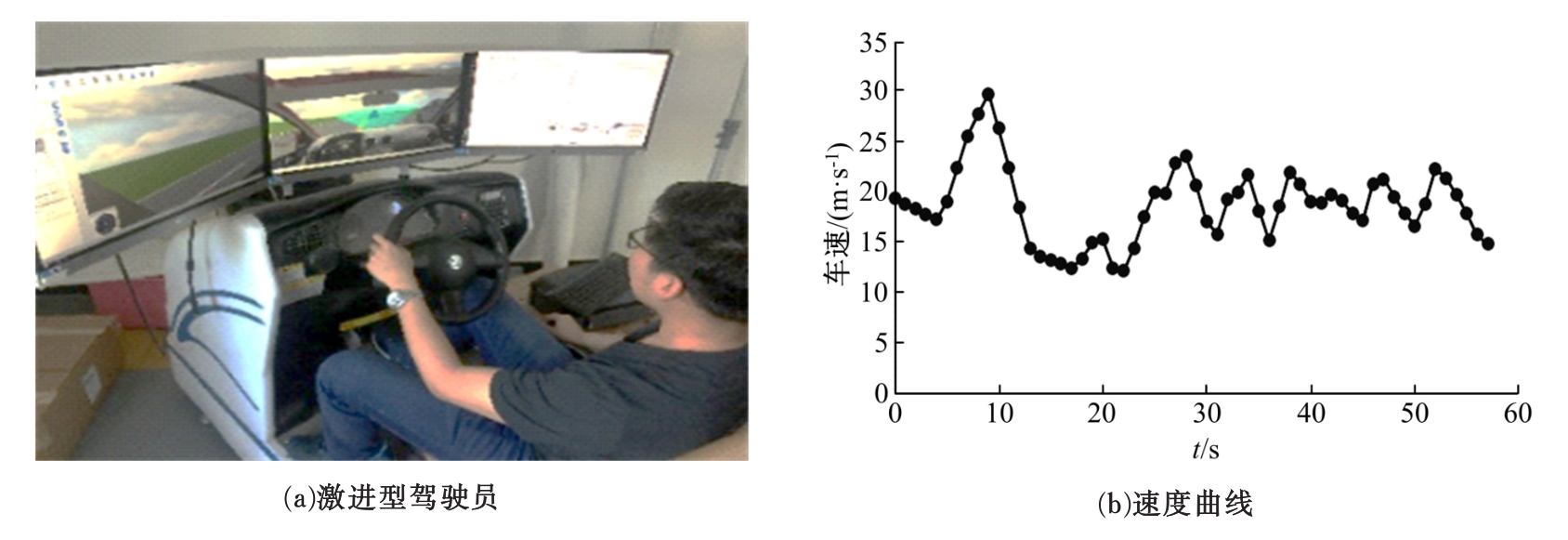

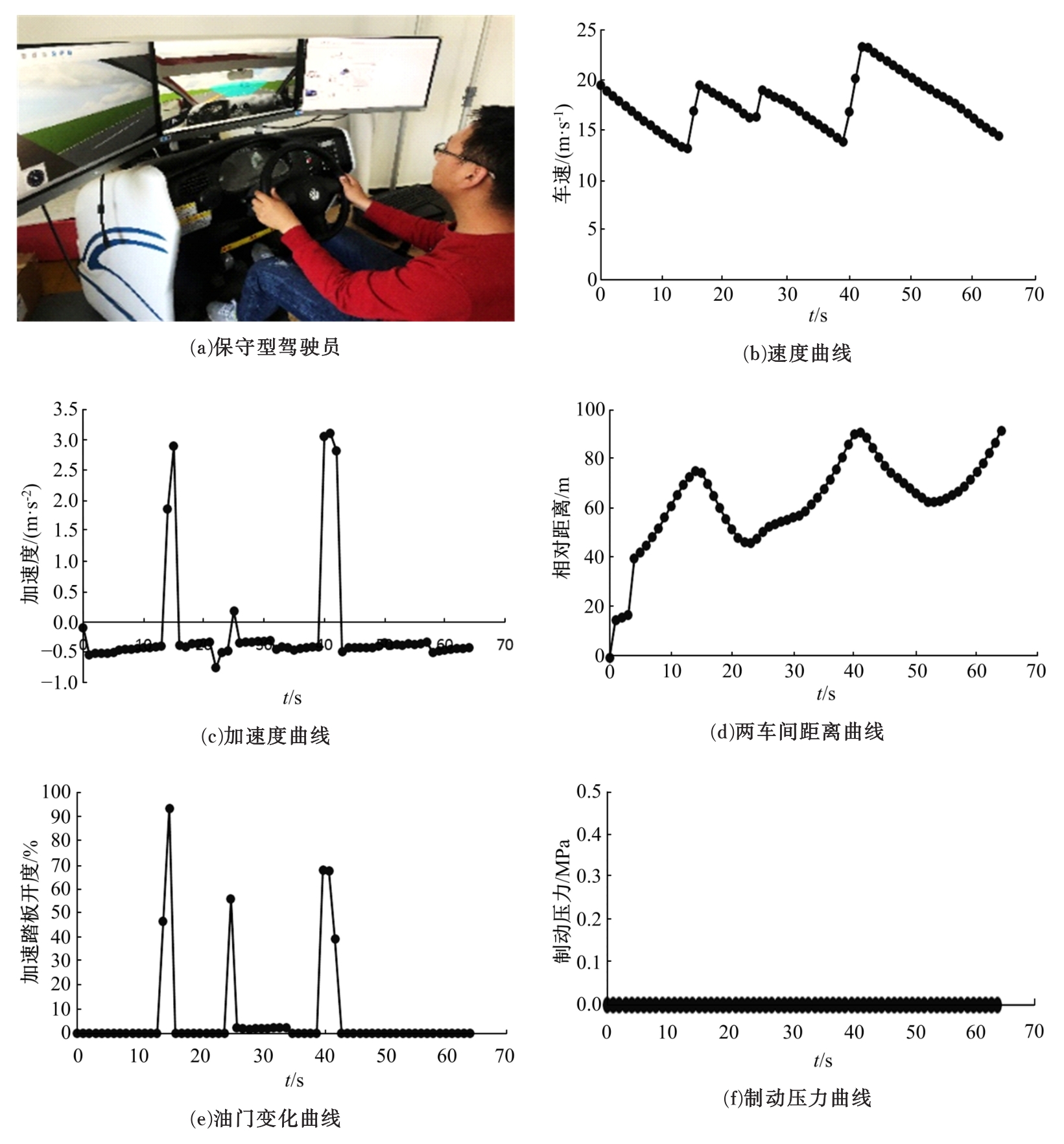

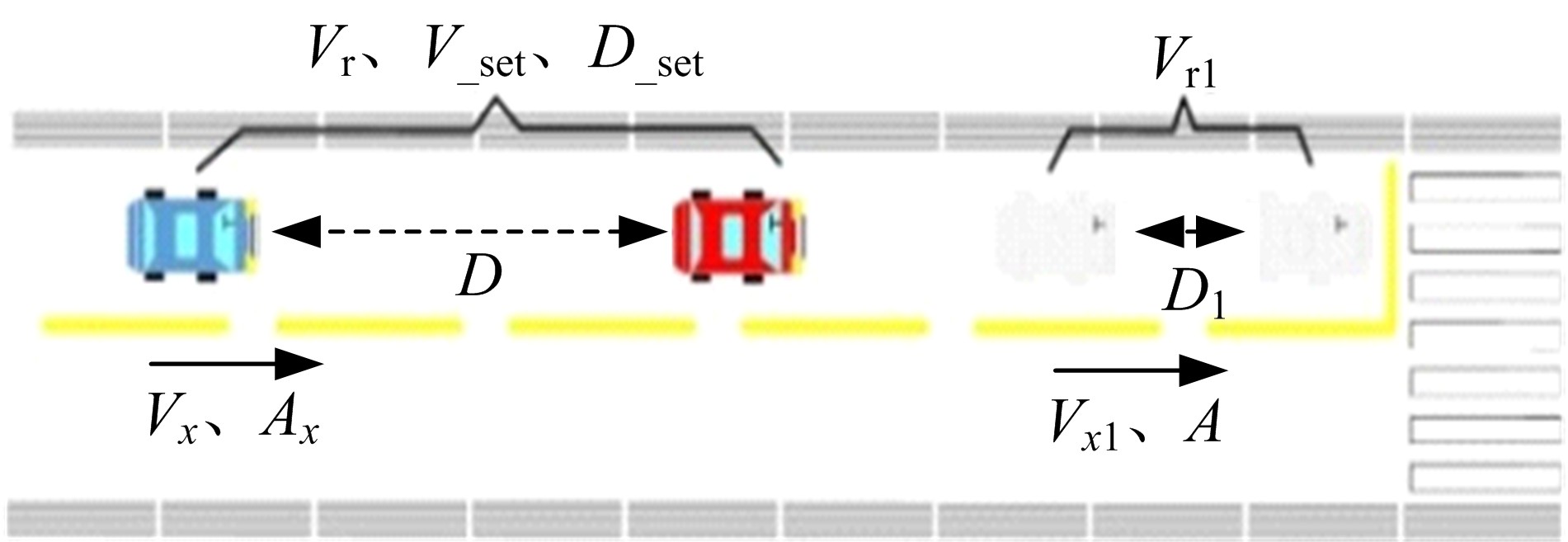

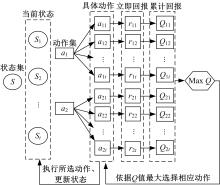

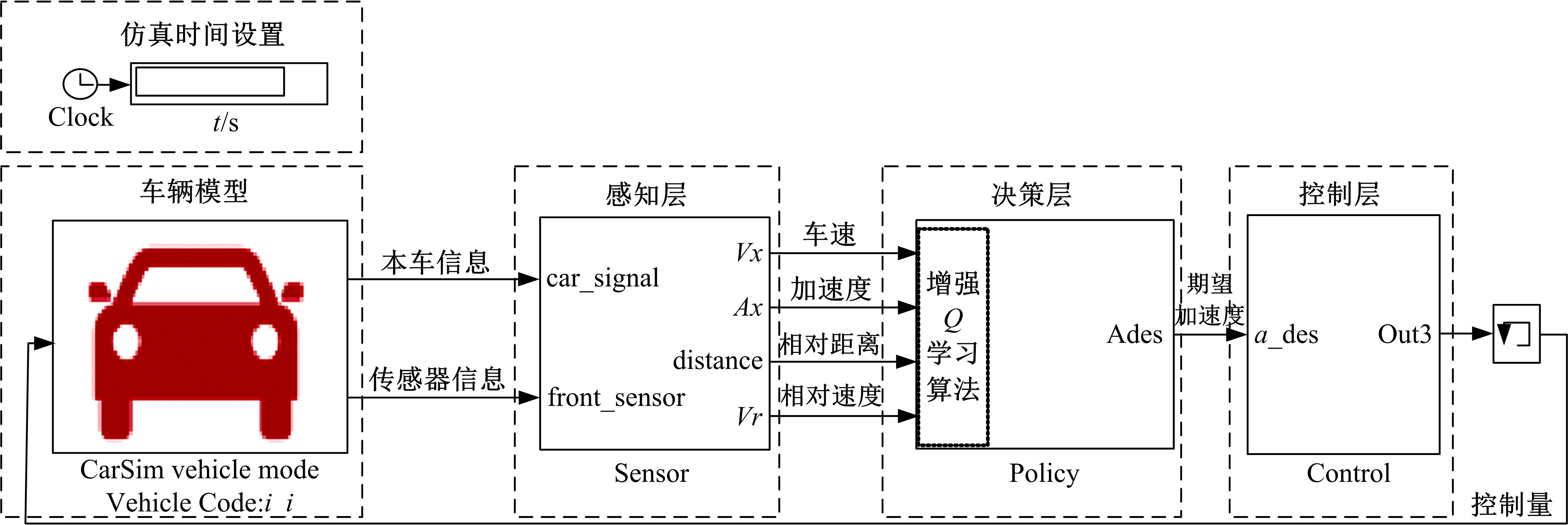

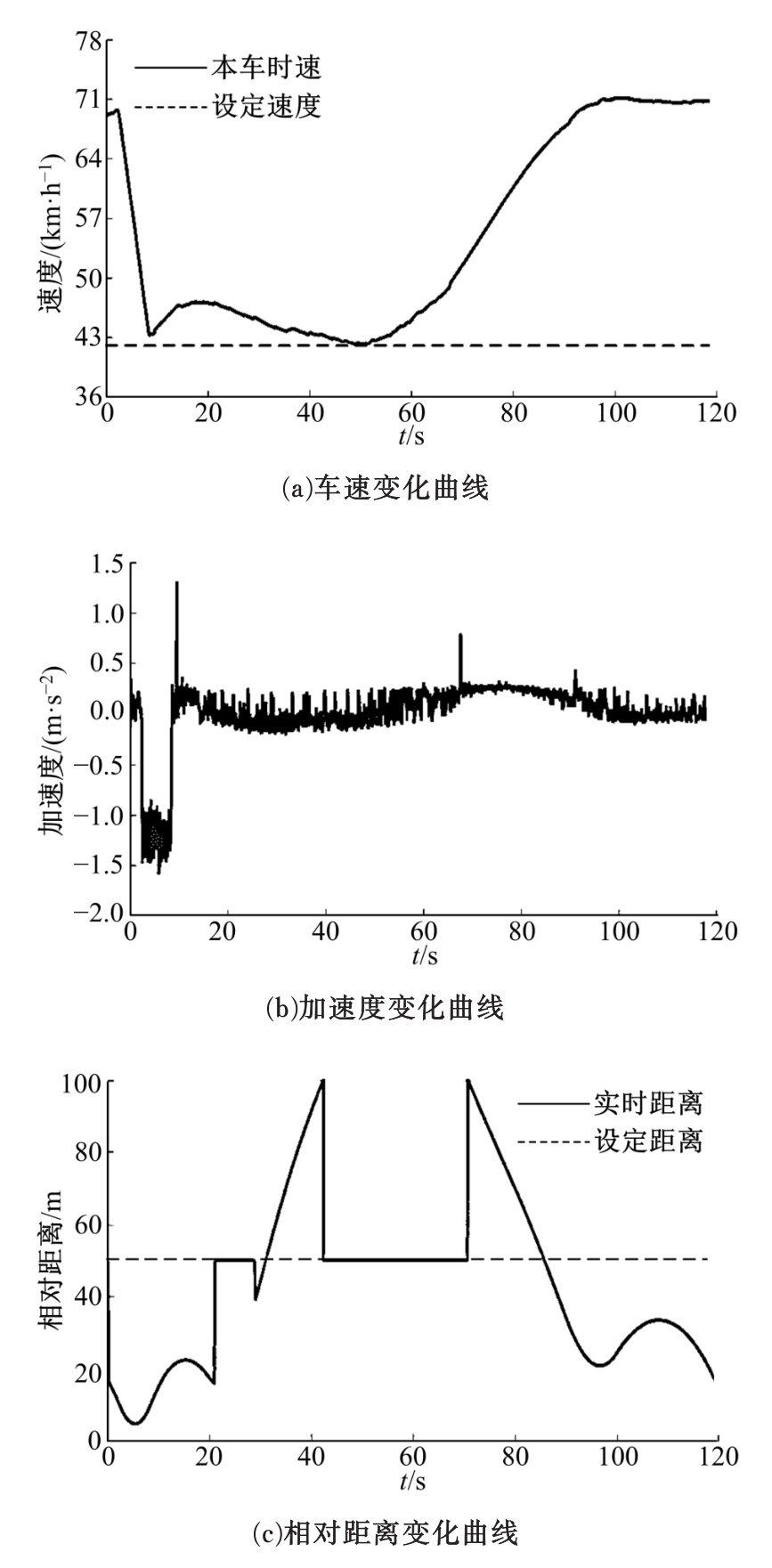

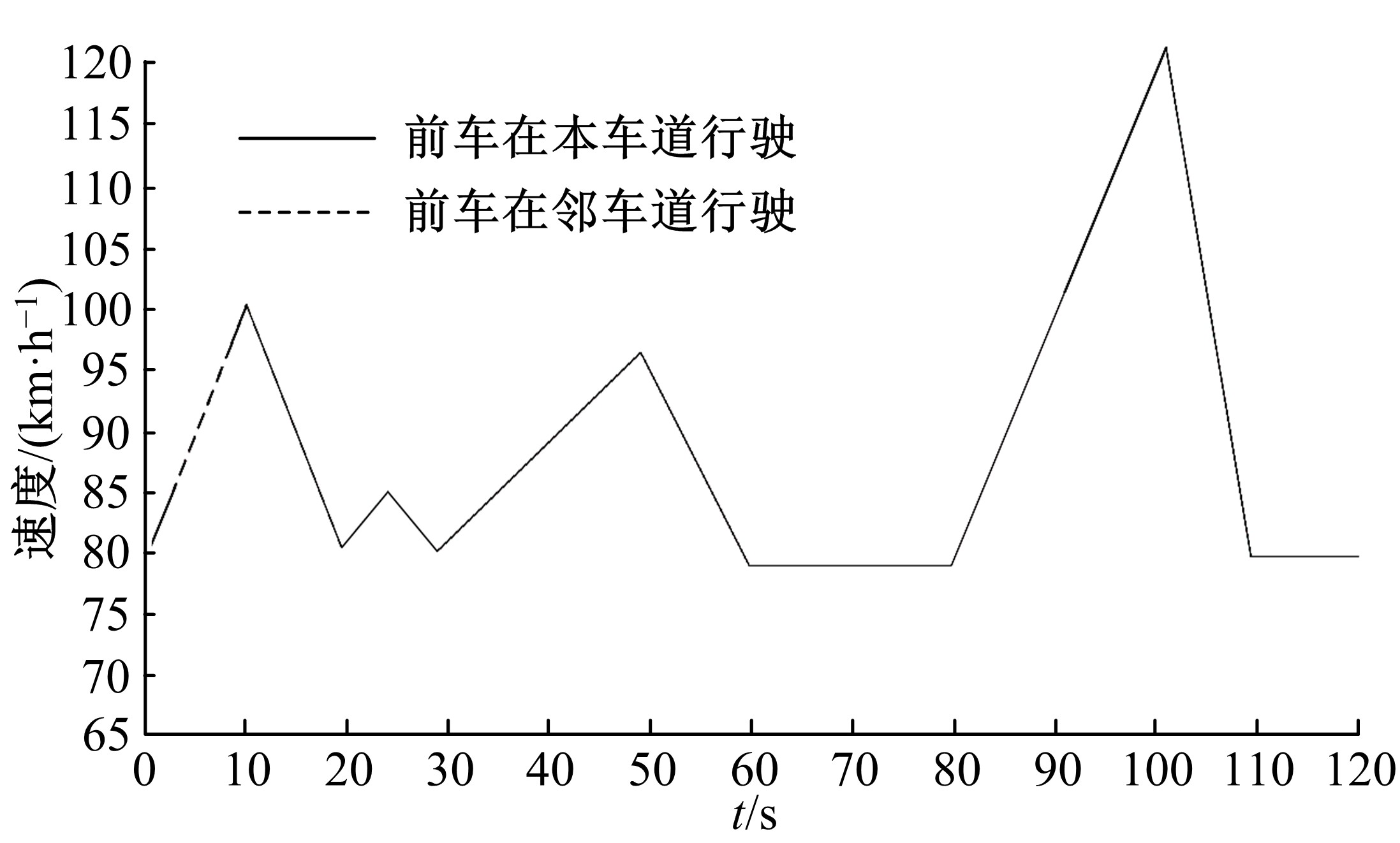

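To address the problem of causal association in the decision-making process of vehicle longitudinal automatic driving, a Markov decision process (MDP) model of vehicle car-following behavior was established. The state set and action set of the model were determined from driving-simulator experiment data collected with real drivers and from driving-risk principles, and a reward function was designed according to the vehicle's driving state. The model was then solved with the Q-learning reinforcement learning algorithm, and a causal reasoning mechanism for the resulting decision process was proposed. Finally, simulation tests under random operating conditions verified the feasibility and effectiveness of the method.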
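The abstract states the pipeline only at a high level: car-following is cast as an MDP with a state set, an action set and a reward function, and the MDP is solved with Q-learning, whose standard tabular update is $Q(s,a) \leftarrow Q(s,a) + \alpha\,[\,r + \gamma \max_{a'} Q(s',a') - Q(s,a)\,]$. The Python sketch below illustrates that general technique under loudly stated assumptions. The gap and relative-speed bins (`GAP_EDGES`, `REL_SPEED_EDGES`), the three acceleration actions (`ACTIONS`), the 2 s time-headway target in `reward`, the point-mass kinematics in `step` and the random-walk lead vehicle are all hypothetical choices made for illustration. They are not the state set, action set, reward function or simulation conditions used by the authors, and the paper's causal reasoning mechanism is not modelled here.

```python
import random

# All constants below are HYPOTHETICAL illustration values, not the paper's sets.
GAP_EDGES = [5.0, 10.0, 20.0, 40.0]        # gap bin edges, m
REL_SPEED_EDGES = [-3.0, -1.0, 1.0, 3.0]   # (v_lead - v_ego) bin edges, m/s
ACTIONS = [-2.0, 0.0, 1.0]                 # candidate ego accelerations, m/s^2
DT = 0.5                                   # time step, s


def bin_index(value, edges):
    """Index of the first bin whose upper edge exceeds `value`."""
    for i, edge in enumerate(edges):
        if value < edge:
            return i
    return len(edges)


def discretize(gap, rel_speed):
    """Map continuous (gap, relative speed) to a discrete MDP state."""
    return (bin_index(gap, GAP_EDGES), bin_index(rel_speed, REL_SPEED_EDGES))


def reward(gap, ego_speed):
    """Hypothetical risk-based reward: a collision is heavily penalised,
    otherwise deviation from a 2 s time headway is penalised."""
    if gap <= 0.0:
        return -100.0
    headway = gap / max(ego_speed, 0.1)  # avoid division by zero at standstill
    return -abs(headway - 2.0)


def step(gap, ego_speed, lead_speed, accel):
    """Point-mass kinematics for one step of length DT."""
    ego_speed = max(0.0, ego_speed + accel * DT)
    gap += (lead_speed - ego_speed) * DT
    return gap, ego_speed


def best_action(q, state):
    """Index of the action with the highest Q-value (unseen entries count as 0)."""
    return max(range(len(ACTIONS)), key=lambda i: q.get((state, i), 0.0))


def train(episodes=2000, steps=200, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = {}  # (state, action_index) -> estimated return
    for _ in range(episodes):
        gap, ego, lead = 30.0, 15.0, 15.0
        state = discretize(gap, lead - ego)
        for _ in range(steps):
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))  # explore
            else:
                a = best_action(q, state)         # exploit
            # Hypothetical "random condition": lead speed follows a bounded random walk.
            lead = min(30.0, max(0.0, lead + rng.uniform(-1.0, 1.0)))
            gap, ego = step(gap, ego, lead, ACTIONS[a])
            r = reward(gap, ego)
            next_state = discretize(gap, lead - ego)
            done = gap <= 0.0
            # Bootstrapped target: r + gamma * max_a' Q(s', a'), or r alone at a collision.
            best_next = q.get((next_state, best_action(q, next_state)), 0.0)
            target = r if done else r + gamma * best_next
            old = q.get((state, a), 0.0)
            q[(state, a)] = old + alpha * (target - old)
            if done:
                break
            state = next_state
    return q


def greedy_policy(q, gap, rel_speed):
    """Acceleration chosen by the learned greedy policy."""
    return ACTIONS[best_action(q, discretize(gap, rel_speed))]


if __name__ == "__main__":
    q_table = train()
    # Query: ego is 4 m behind the lead vehicle and closing at 4 m/s.
    print(greedy_policy(q_table, gap=4.0, rel_speed=-4.0))
```

Running the script trains a Q-table and prints the greedy acceleration for one close-following query. A tabular method is used only because it matches the discrete state and action sets the abstract describes; the coarse bins keep the table small at the cost of fidelity.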

CLC number:

- U462.1

