Journal of Jilin University (Engineering and Technology Edition), 2022, Vol. 52, Issue 12: 2933-2940. doi: 10.13229/j.cnki.jdxbgxb20220550

Classification and recognition of retinal vessels based on attention U-Net

Yang YAN¹, Zi-ru YOU¹, Yuan YAO², Wen-bo HUANG¹

- 1. College of Computer Science and Technology, Changchun Normal University, Changchun 130032, China

- 2. Bureau of Major Tasks, Chinese Academy of Sciences, Beijing 100864, China

Abstract:

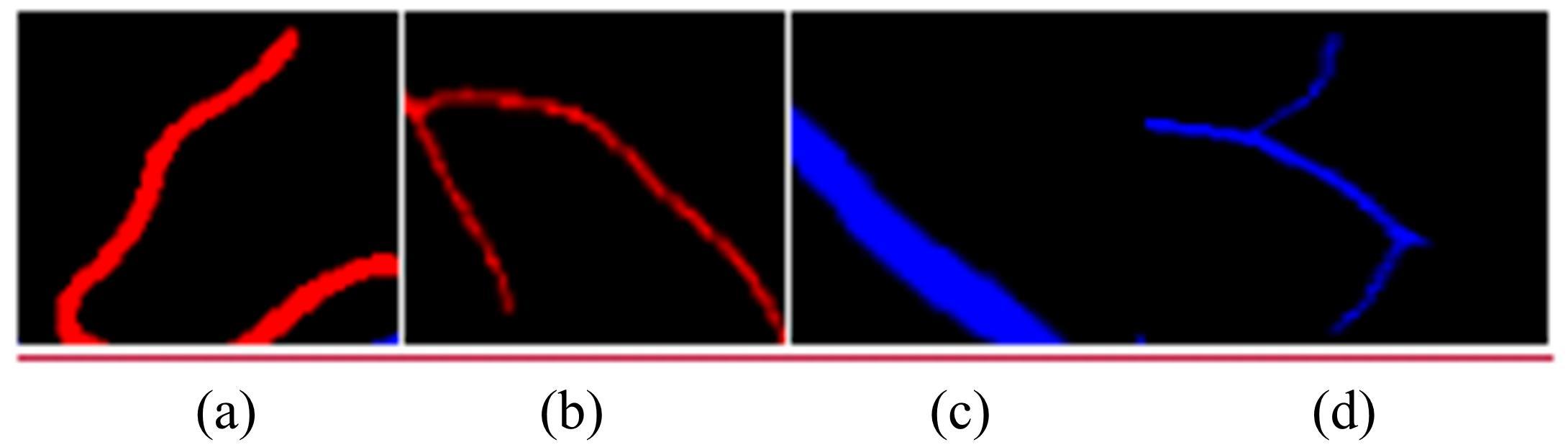

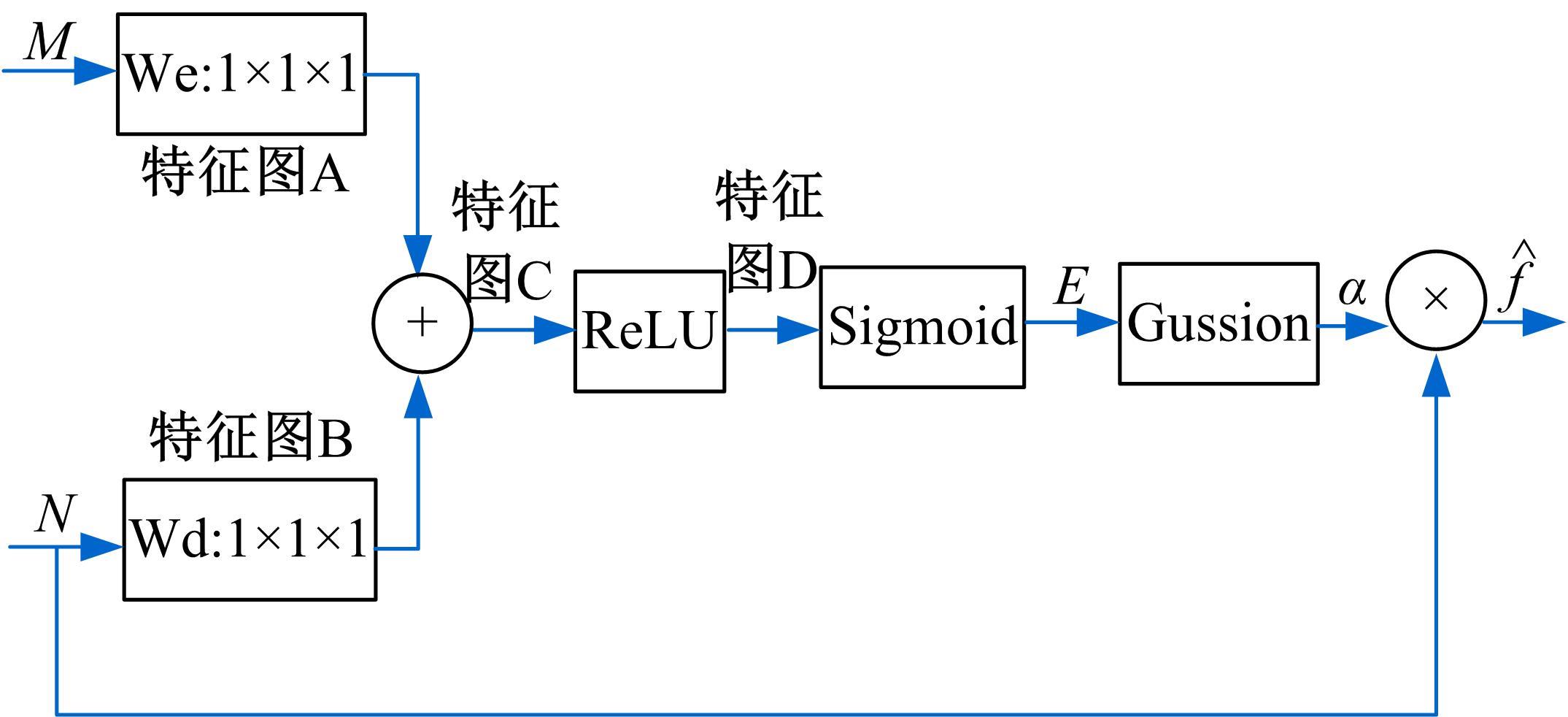

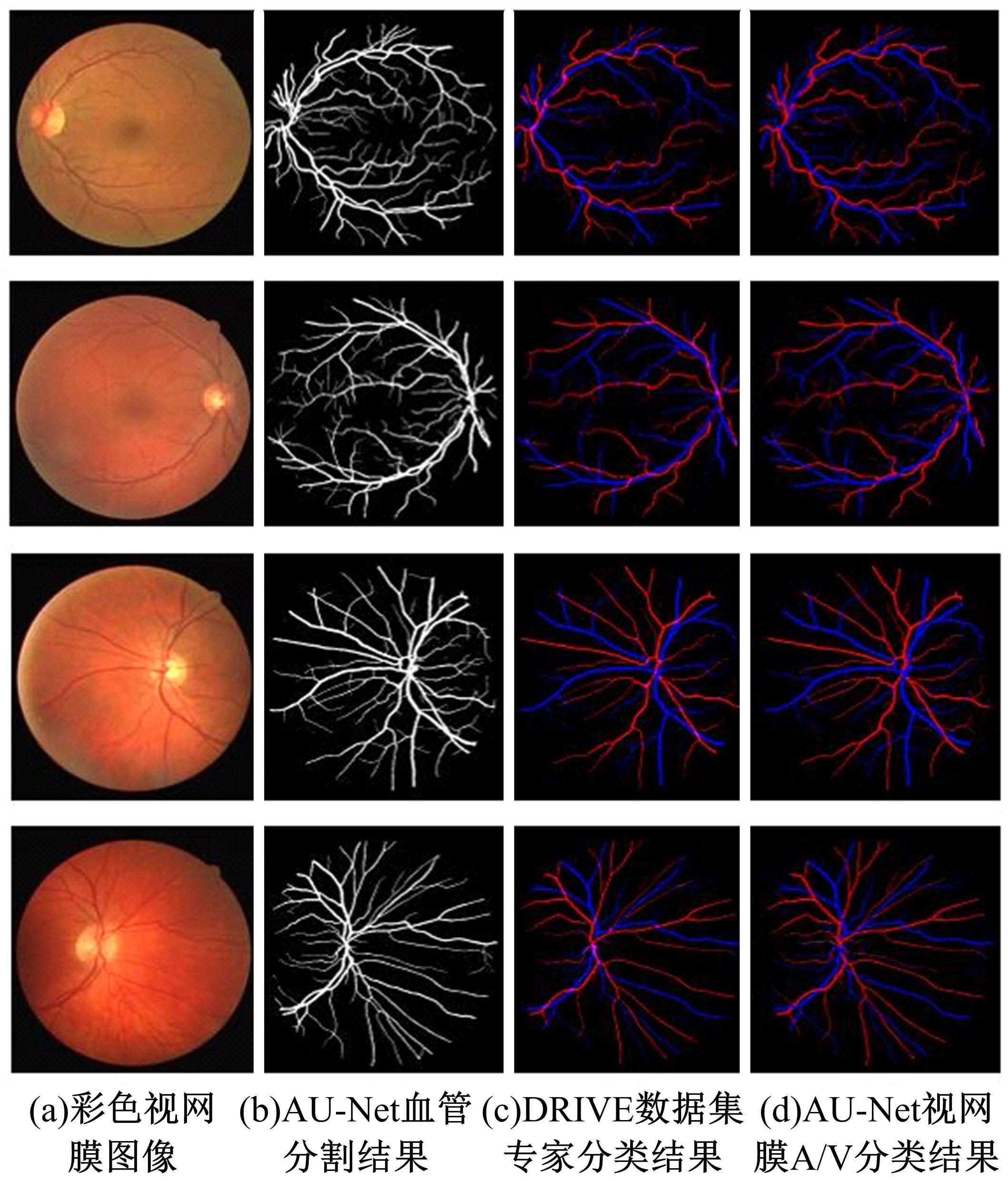

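To address the limitations of existing automatic classification methods for retinal arteries and veins (A/V), this paper proposes an automatic retinal A/V classification method based on attention U-Net (AU-Net). Vessel structure information, topological relationships and edge information are used to enhance the retinal A/V features. An attention module is introduced into VC-Net, an improved U-Net, to combine local and global information and to re-weight and constrain the retinal A/V features, for example by suppressing features biased toward the background and strengthening features at vessel edges and vessel ends, so that retinal arteries and veins are classified accurately. The method was tested on the DRIVE dataset, where it achieved an A/V classification accuracy of 0.9685, an F1 score of 0.9886, a sensitivity of 0.9803 and a specificity of 0.9957. The experimental results show that the proposed method improves on the classic U-Net in every reported performance metric, and it may serve as a reference for clinical practice.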
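The abstract does not specify the internal form of the attention module added to VC-Net. As an illustrative assumption only, the sketch below implements the additive attention gate of Oktay et al.'s Attention U-Net in plain Python: encoder skip features (local detail) and a coarser decoder gating signal (wider context) are linearly projected, summed, passed through ReLU and a sigmoid, and the resulting per-pixel weight rescales the skip features, so that low-weight pixels such as background are suppressed. Feature maps are written as lists of per-pixel channel vectors for readability; the function names, weights and toy inputs are hypothetical and are not taken from the paper.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # each inner list is one output row


def matvec(m: Matrix, v: Vector) -> Vector:
    """Apply a linear map (a 1x1 convolution at a single pixel) to a channel vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def attention_gate(
    skip: List[Vector],    # encoder skip features: one channel vector per pixel
    gating: List[Vector],  # decoder gating features, already resampled to the same pixels
    w_x: Matrix,           # hypothetical weights projecting skip features
    w_g: Matrix,           # hypothetical weights projecting gating features
    psi: Vector,           # hypothetical weights collapsing to one score per pixel
    b_g: float = 0.0,      # shared scalar bias (a per-channel vector in the original gate)
    b_psi: float = 0.0,
) -> Tuple[List[Vector], List[float]]:
    """Additive attention gate after Oktay et al. (Attention U-Net).

    Per pixel i:
        q_i     = ReLU(W_x x_i + W_g g_i + b_g)
        alpha_i = sigmoid(psi . q_i + b_psi)
        out_i   = alpha_i * x_i
    """
    outputs: List[Vector] = []
    alphas: List[float] = []
    for x, g in zip(skip, gating):
        q = [max(0.0, a + b + b_g) for a, b in zip(matvec(w_x, x), matvec(w_g, g))]
        alpha = sigmoid(sum(p * v for p, v in zip(psi, q)) + b_psi)
        alphas.append(alpha)
        # Only the rescaled skip features are passed on to the decoder.
        outputs.append([alpha * c for c in x])
    return outputs, alphas


if __name__ == "__main__":
    # Two pixels with identical skip features; the gating signal favours pixel 0.
    out, alphas = attention_gate(
        skip=[[1.0, 0.5], [1.0, 0.5]],
        gating=[[2.0], [-2.0]],
        w_x=[[1.0, 0.0]],
        w_g=[[1.0]],
        psi=[3.0],
        b_psi=-2.0,
    )
    print([round(a, 4) for a in alphas])  # [0.9991, 0.1192]
```

In a trained network, W_x, W_g and psi would be learned 1×1 convolutions applied to whole tensors; the list-based form only makes the per-pixel arithmetic explicit.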
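The abstract reports accuracy, F1, sensitivity and specificity but does not state how pixels were counted. The sketch below shows the standard definitions of these four metrics from confusion counts, assuming a binary artery-versus-vein labelling of vessel pixels with arteries ("A") as the positive class; this convention and the function names are illustrative assumptions, and the code does not reproduce the DRIVE results quoted above.

```python
from typing import Dict, Sequence


def _ratio(num: float, den: float) -> float:
    """Return num / den, or 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def confusion_counts(
    y_true: Sequence[str], y_pred: Sequence[str], positive: str = "A"
) -> Dict[str, int]:
    """Count TP/FP/TN/FN over paired labels, treating `positive` as the positive class."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for t, p in zip(y_true, y_pred):
        if p == positive:
            counts["tp" if t == positive else "fp"] += 1
        else:
            counts["fn" if t == positive else "tn"] += 1
    return counts


def classification_metrics(c: Dict[str, int]) -> Dict[str, float]:
    """Accuracy, sensitivity, specificity and F1 from confusion counts."""
    tp, fp, tn, fn = c["tp"], c["fp"], c["tn"], c["fn"]
    precision = _ratio(tp, tp + fp)
    sensitivity = _ratio(tp, tp + fn)  # recall, true-positive rate
    specificity = _ratio(tn, tn + fp)  # true-negative rate
    return {
        "accuracy": _ratio(tp + tn, tp + fp + tn + fn),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "f1": _ratio(2 * precision * sensitivity, precision + sensitivity),
    }


if __name__ == "__main__":
    counts = confusion_counts(["A", "A", "V", "V", "A"], ["A", "V", "V", "V", "A"])
    print(counts)  # {'tp': 2, 'fp': 0, 'tn': 2, 'fn': 1}
    print(classification_metrics(counts))
```

Because F1 combines precision and sensitivity for the positive class only, its value depends on which vessel class is treated as positive, which is one reason the counting protocol matters when comparing reported figures.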

CLC number: TP391.7

| 1 | 马腾, 洪芳艳, 赵强. 冠状动脉CTA对患者冠脉狭窄程度、分叉病变的诊断分析研究[J]. 影像科学与光化学, 2022, 40(2): 434-438. |

| Ma Teng, Hong Fang-yan, Zhao Qiang. Study on the diagnosis and analysis of coronary artery stenosis and bifurcation lesions by coronary CTA[J]. Imaging Science and Photochemistry, 2022, 40(2): 434-438. | |

| 2 | 薛岚燕, 曹新容, 林嘉雯,等. 动静脉血管自动分类方法及其管径测量[J]. 仪器仪表学报, 2017, 38(9): 2307-2316. |

| Xue Lan-yan, Cao Xin-rong, Lin Jia-wen, et al. Automatic classification of arterial and venous vessels and their diameter measurement[J]. Journal of Instrumentation, 2017, 38(9): 2307-2316. | |

| 3 | Alam M N, Le D, Yao X. Differential artery-vein analysis in quantitative retinal imaging: a review[J]. Quantitative Imaging in Medicine and Surgery, 2021, 11(3): 1102-1119. |

| 4 | Akbar S, Akram M U, Sharif M, et al. Arteriovenous ratio and Papilledema based hybrid decision support system for detection and grading of hypertensive retinopathy[J]. Computer Methods and Programs in Biomedicine, 2018, 154: 123-141. |

| 5 | Yin X, Irshad S, Zhang Y. Classifiers fusion for improved vessel recognition with application in quantification of generalized arteriolar narrowing[J]. Journal of Innovative Optical Health Sciences, 2020, 13(1): 1950021. |

| 6 | Welikala R A, Foster P J, Whincup P H, et al. Automated arteriole and venule classification using deep learning for retinal images from the UK biobank cohort[J]. Computers in Biology & Medicine, 2017, 90:23-32. |

| 7 | Girard F, Cheriet F. Artery/vein classification in fundus images using CNN and likelihood score propagation[C]∥IEEE Global Conference on Signal & Information Processing, Quebec, Canada, 2017: 720-724. |

| 8 | Galdran A, Costa P, Bria A, et al. A no-reference quality metric for retinal vessel tree segmentation[C]∥International Conference on Medical Image Computing and Computer-Assisted Intervention, Granada, Spain, 2018: 82-90. |

| 9 | 吴昊, 平鹏, 孙立博, 等. 基于改进LRCN模型的驾驶行为图像序列识别方法[J]. 江苏大学学报:自然科学版, 2018, 39(3): 303-308, 329. |

| Wu Hao, Ping Peng, Sun Li-bo, et al. Image sequence recognition method for driving behavior based on improved LRCN model[J]. Journal of Jiangsu University(Natural Science Edition), 2018, 39(3): 303-308, 329. | |

| 10 | Hu J, Wang H, Cao Z, et al. Automatic artery/vein classification using a vessel-constraint network for multicenter fundus images[J]. Frontiers in Cell and Developmental Biology, 2021, 9: No.659941. |

| 11 | 田萱, 王亮, 丁琪. 基于深度学习的图像语义分割方法综述[J]. 软件学报, 2019, 30(2): 440-468. |

| Tian Xuan, Wang Liang, Ding Qi. Overview of image semantic segmentation methods based on deep learning[J]. Journal of Software, 2019, 30(2):440-468. | |

| 12 | Zhou Z, Siddiquee M, Tajbakhsh N, et al. Unet++: redesigning skip connections to exploit multiscale features in image segmentation[J]. IEEE Transactions on Medical Imaging, 2020, 39(6): 1856-1867. |

| 13 | Ahn H, Yim C. Convolutional neural networks using skip connections with layer groups for super-resolution image reconstruction based on deep learning[J]. Applied Sciences, 2020, 10(6): 1959. |

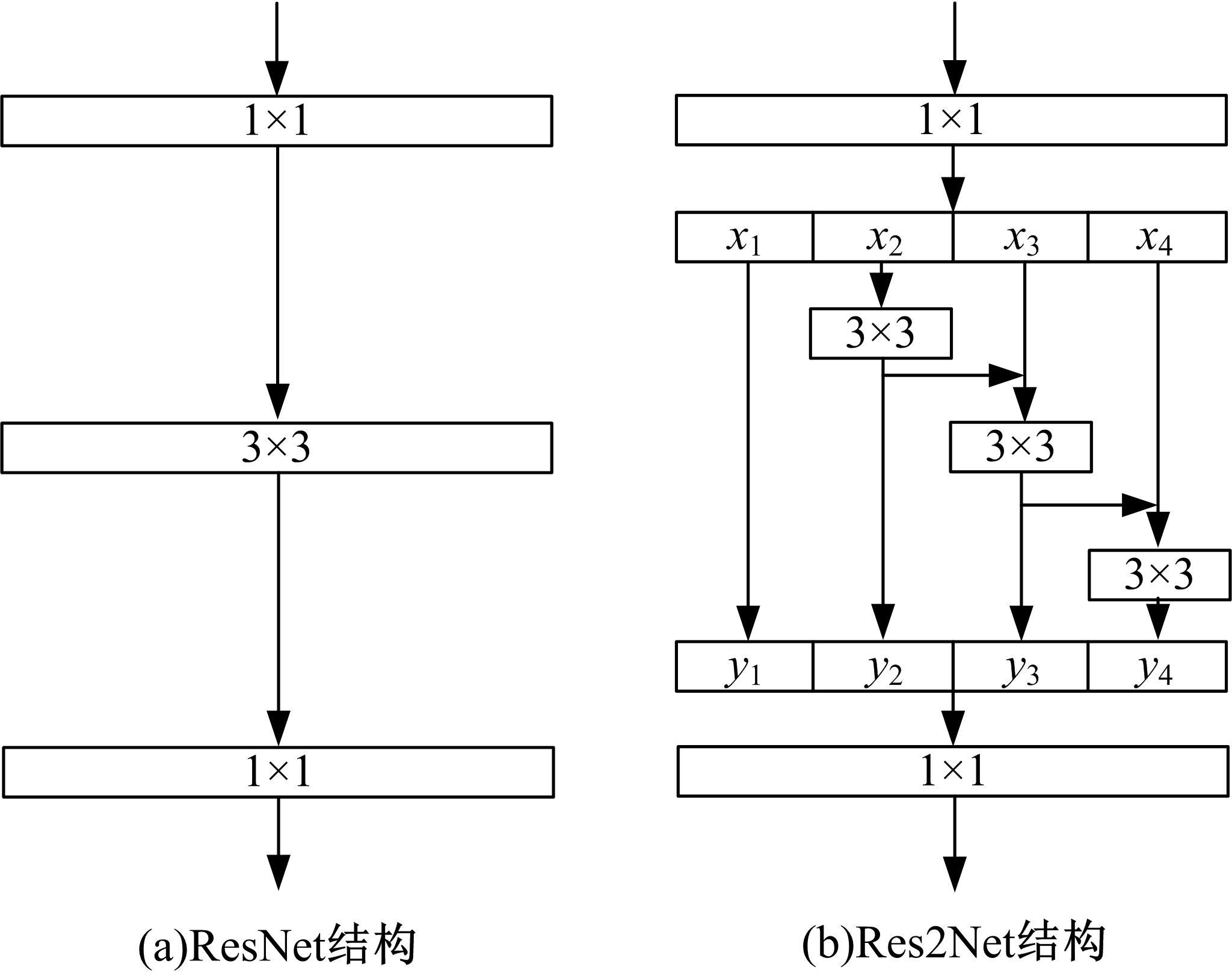

| 14 | Gao Shang-hua, Cheng Ming-ming, Zhao Kai, et al. Res2net: a new multi-scale backbone architecture[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2021, 43(2): 652-662. |

| 15 | Fu Jun, Liu Jing, Wang Yu-hang, et al. Stacked deconvolutional network for semantic segmentation[J/OL]. [2022-04-21]. |

| 16 | 权龙哲, 王建宇, 王旗, 等. 基于电磁振动与卷积神经网络的玉米品质精选装置[J]. 江苏大学学报:自然科学版, 2020, 41(3): 288-293, 313. |

| Quan Long-zhe, Wang Jian-yu, Wang Qi, et al. Classification method of corn quality selection based onelectromagnetic vibration and convolutional neural network[J]. Journal of Jiangsu University(Natural Science Edition), 2020, 41(3): 288-293, 313. | |

| 17 | He Kai-ming, Zhang Xiang-yu, Ren Shao-qing, et al. Deep residual learning for image recognition[C]∥Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, RI, USA, 2016: 770-778. |

| 18 | Oktay O, Schlemper J, Folgoc L L, et al. Attention U-Net: learning where to look for the pancreas[J/OL]. [2022-04-23]. . |

| 19 | 詹为钦, 倪蓉蓉, 杨彪. 基于注意力机制的PointPillars+三维目标检测[J]. 江苏大学学报:自然科学版, 2020, 41(3): 268-273. |

| Zhan Wei-qin, Ni Rong-rong, Yang Biao. An attention-based PointPillars+3D object detection[J]. Journal of Jiangsu University(Natural Science Edition), 2020, 41(3): 268-273. | |

| 20 | Henriques J F, Caseiro R, Martins P, et al. High-speed tracking with kernelized correlation filters[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2015, 37(3): 583-596. |

| 21 | Milletari F, Navab N, Ahmadi S A. V-net: fully convolutional neural networks for volumetric medical image segmentation[C]∥2016 Fourth International Conference on 3D Vision, CA, USA, 2016: 565-571. |

| 22 | Lin T Y, Goyal P, Girshick R, et al. Focal loss for dense object detection[J]. IEEE Transactions on Pattern Analysis & Machine Intelligence, 2020, 42(2):318-327. |

| 23 | Yeung M, Sala E, Schönlieb C B, et al. Unified focal loss: generalising dice and cross entropy-based losses to handle class imbalanced medical image segmentation[J]. Computerized Medical Imaging and Graphics, 2022, 95: No.102026. |

| 24 | Hu Q, Abràmoff M D, Garvin M K. Automated separation of binary overlapping trees in low-contrast color retinal images[C]∥International Conference on Medical Image Computing and Computer-Assisted Intervention, Berlin, Heidelberg, 2013: 436-443. |

| 25 | 袁梅, 全太锋, 黄俊, 等. 基于卷积神经网络的烟雾检测[J]. 重庆邮电大学学报: 自然科学版, 2020, 32(4): 620-629. |

| Yuan Mei, Quan Tai-feng, Huang Jun, et al. Smoke detection based on convolutional neural network[J]. Journal of Chongqing University of Posts and Telecommunications (Natural Science Edition), 2020, 32(4): 620-629. | |

| 26 | 何欣, 李书琴, 刘斌. 基于多尺度残差神经网络的葡萄叶片病害识别[J]. 计算机工程, 2021, 47(5): 285-291, 300. |

| He Xin, Li Shu-qin, Liu Bin. Identification of grape leaf diseases based on multi-scale residual neural network[J]. Computer Engineering, 2021, 47(5): 285-291, 300. | |

| 27 | 李新春,马红艳,林森.基于局部邻域四值模式的掌纹掌脉融合识别[J].重庆邮电大学学报: 自然科学版, 2020, 32(4): 630-638. |

| Li Xin-chun, Ma Hong-yan, Lin Sen. Palmprint and palm vein fusion recognition based on local neighbor quaternary pattern[J]. Journal of Chongqing University of Posts and Telecommunications (Natural Science Edition), 2021, 47(5): 285-291, 300. | |

| 28 | Ronneberger O, Fischer P, Brox T. U-Net: convolutional networks for biomedical image segmentation[C]∥International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 2015: 234-241. |

| 29 | Morano J, Hervella L S, Novo J, et al. Simultaneous segmentation and classification of the retinal arteries and veins from color fundus images[J]. Artificial Intelligence in Medicine, 2021, 118(14): No.102116. |

| 30 | Girard F, Kavalec C, Cheriet F. Joint segmentation and classification of retinal arteries/veins from fundus images[J]. Artificial Intelligence in Medicine, 2019, 94: 96-109. |

| 31 | Chen Wen-ting, Yu Shuang, Wu Jun-de, et al. TR-GAN: topology ranking GAN with triplet loss for retinal artery/vein classification[C]∥International Conference on Medical Image Computing and Computer-Assisted Intervention, Lima, Peru, 2020: 616-625. |
