Journal of Jilin University (Engineering and Technology Edition), 2022, Vol. 52, Issue (11): 2718-2727. doi: 10.13229/j.cnki.jdxbgxb20210412

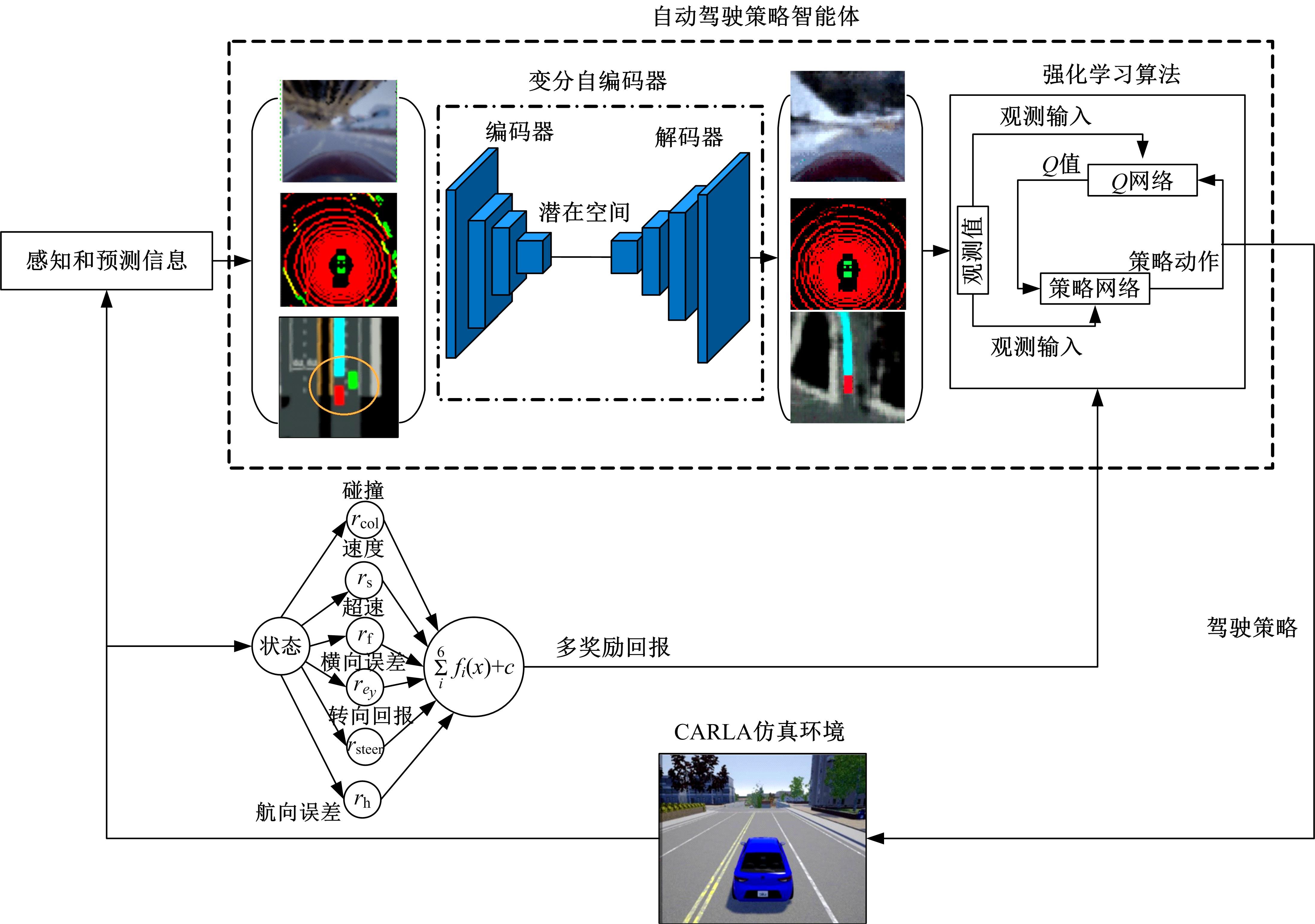

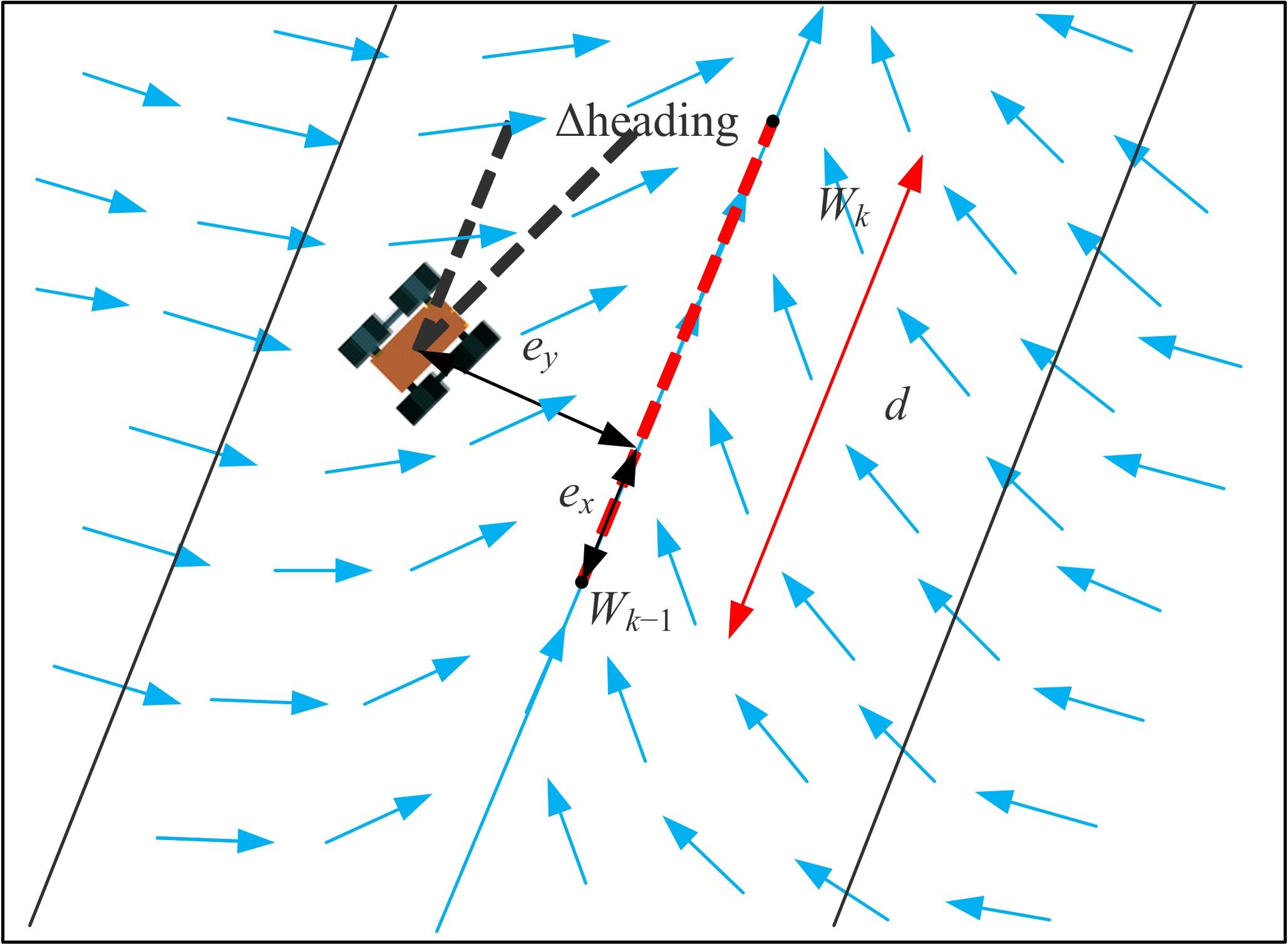

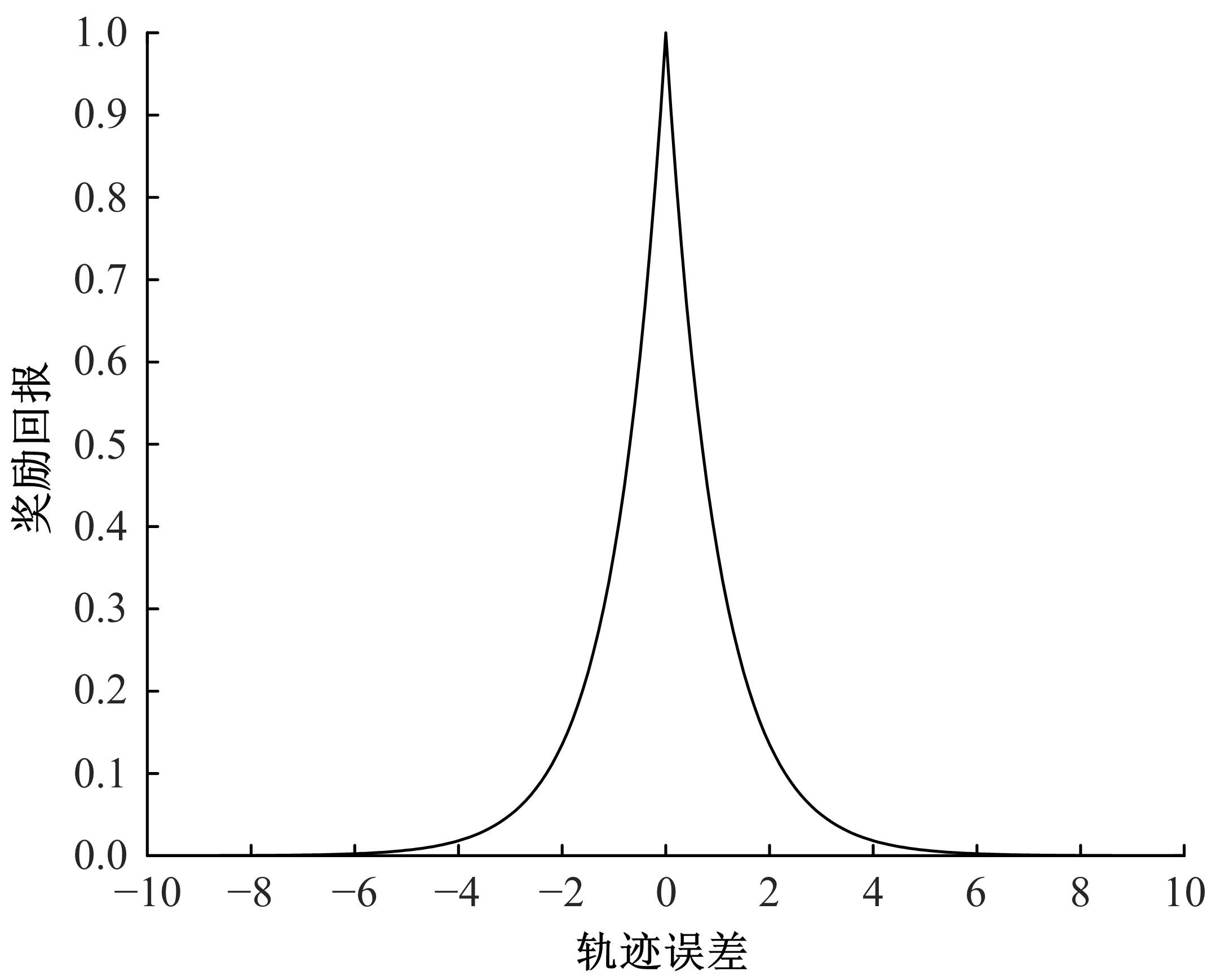

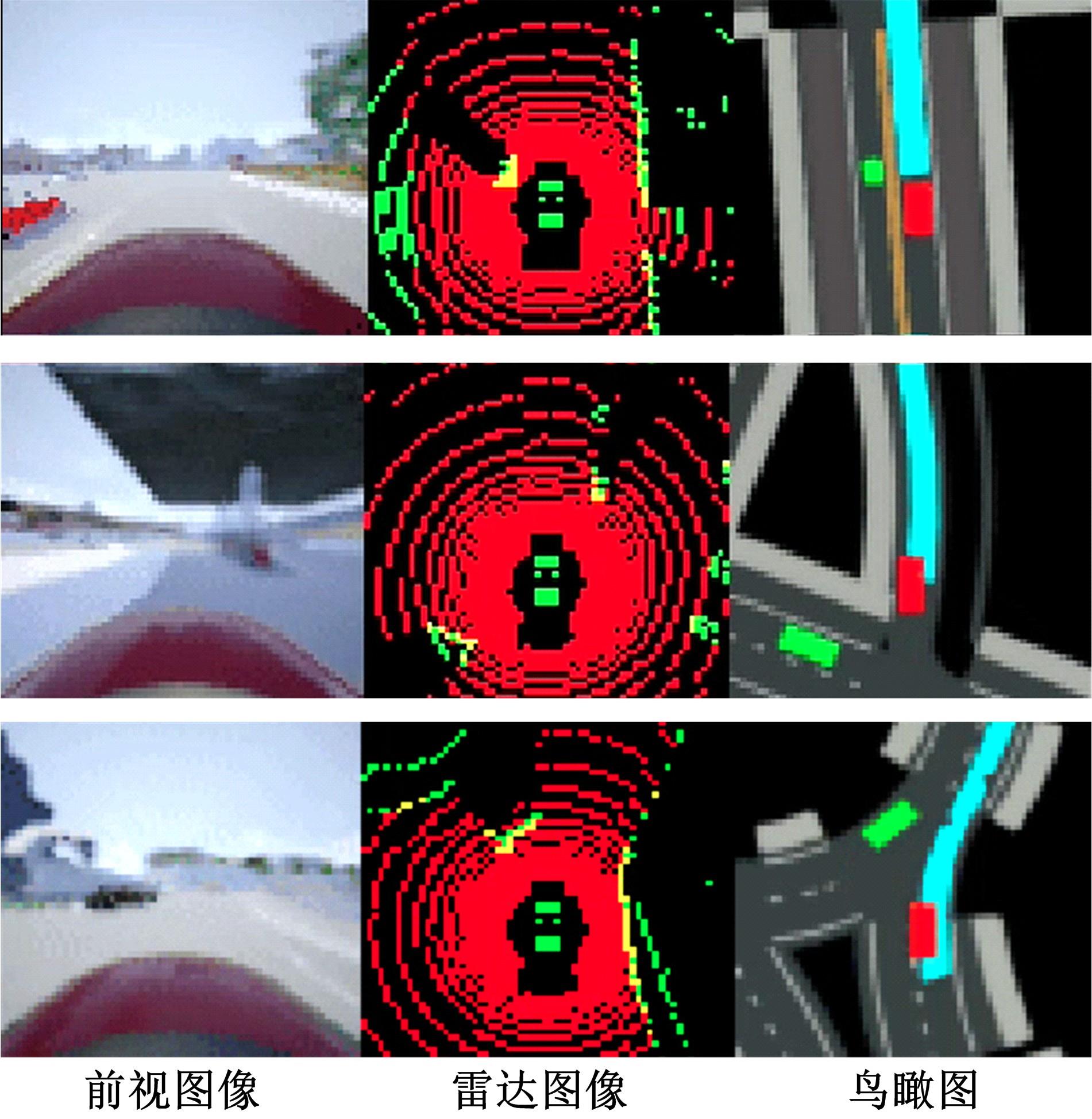

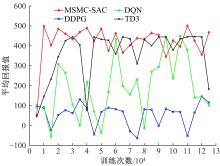

A driving decision-making approach based on multi-sensing and multi-constraints reward function

Zhong-li WANG, Hao WANG, Yan SHEN, Bai-gen CAI

- School of Electronic and Information Engineering, Beijing Jiaotong University, Beijing 100044, China

CLC Number:

- U469.79
