Journal of Jilin University (Engineering and Technology Edition), 2025, Vol. 55, Issue 11: 3429-3445. doi: 10.13229/j.cnki.jdxbgxb.20240149

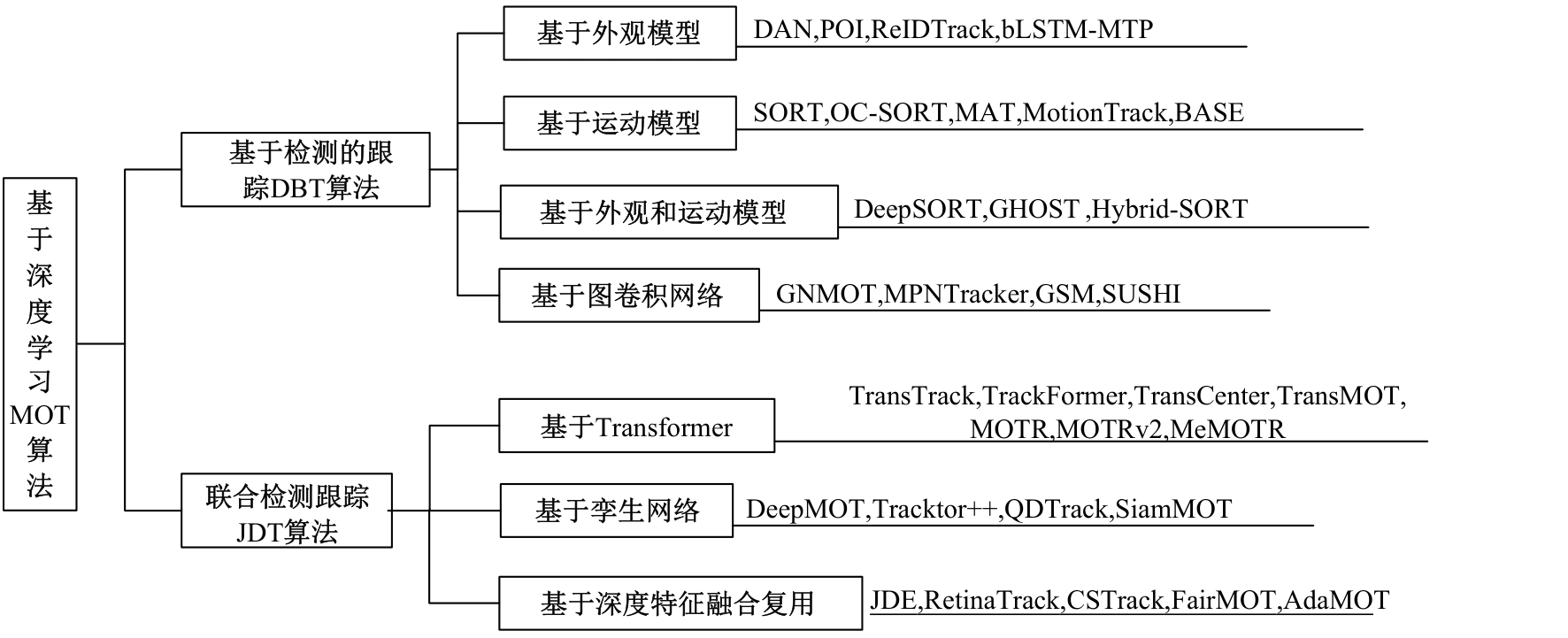

Review of multi-object tracking based on deep learning

Lai-wei JIANG1, Ce WANG2, Hong-yu YANG1

1. School of Safety Science and Engineering, Civil Aviation University of China, Tianjin 300300, China

2. School of Computer Science and Technology, Civil Aviation University of China, Tianjin 300300, China

CLC Number:

- TP391

| [1] | Jin Sha-sha, Long Wei, Hu Ling-xi, et al. Research progress of detection and multi-object tracking algorithm in intelligent traffic monitoring system[J]. Control and Decision, 2023, 38(4): 890-901. (in Chinese) |

| [2] | Cui Y, Zeng C, Zhao X, et al. SportsMOT: a large multi-object tracking dataset in multiple sports scenes[C]∥Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2023: 9921-9931. |

| [3] | Peng J, Wang T, Lin W, et al. TPM: multiple object tracking with tracklet-plane matching[J]. Pattern Recognition, 2020, 107: No.107480. |

| [4] | Ren W, Wang X, Tian J, et al. Tracking-by-counting: using network flows on crowd density maps for tracking multiple targets[J]. IEEE Transactions on Image Processing, 2020, 30: 1439-1452. |

| [5] | Shi J. Good features to track[C]∥Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, USA, 1994: 593-600. |

| [6] | Broida T J, Chellappa R. Estimation of object motion parameters from noisy images[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 1986(1): 90-99. |

| [7] | Isard M, Blake A. Condensation—conditional density propagation for visual tracking[J]. International Journal of Computer Vision, 1998, 29(1): 5-28. |

| [8] | Nummiaro K, Koller-meier E, Van Gool L. An adaptive color-based particle filter[J]. Image and Vision Computing, 2003, 21(1): 99-110. |

| [9] | Yang Xin, Liu Jia, Zhou Peng-yu, et al. Adaptive particle filter for object tracking based on fusing multiple features[J]. Journal of Jilin University (Engineering and Technology Edition), 2015, 45(2): 533-539. (in Chinese) |

| [10] | Comaniciu D, Ramesh V, Meer P. Real-time tracking of non-rigid objects using mean shift[C]∥Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Hilton Head, USA, 2000: 142-149. |

| [11] | Jeyakar J, Babu R V, Ramakrishnan K. Robust object tracking with background-weighted local kernels[J]. Computer Vision and Image Understanding, 2008, 112(3): 296-309. |

| [12] | Bolme D S, Beveridge J, Draper B A, et al. Visual object tracking using adaptive correlation filters[C]∥Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, San Francisco, USA, 2010: 2544-2550. |

| [13] | Henriques J F, Caseiro R, Martins P, et al. Exploiting the circulant structure of tracking-by-detection with kernels[C]∥European Conference on Computer Vision, Florence, Italy, 2012: 702-715. |

| [14] | Danelljan M, Shahbaz K F, Felsberg M, et al. Adaptive color attributes for real-time visual tracking[C]∥Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, USA, 2014: 1090-1097. |

| [15] | Zhang K, Zhang L, Liu Q, et al. Fast visual tracking via dense spatio-temporal context learning[C]∥European Conference on Computer Vision, Zürich, Switzerland, 2014: 127-141. |

| [16] | Krizhevsky A, Sutskever I, Hinton G. Imagenet classification with deep convolutional neural networks[J]. Advances in Neural Information Processing Systems, 2012, 60: 84-90. |

| [17] | Ren S, He K, Girshick R, et al. Faster R-CNN: towards real-time object detection with region proposal networks[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2016, 39(6): 1137-1149. |

| [18] | Liu W, Anguelov D, Erhan D, et al. SSD: single shot multibox detector[C]∥European Conference on Computer Vision, Amsterdam, The Netherlands, 2016: 21-37. |

| [19] | Bewley A, Ge Z, Ott L, et al. Simple online and realtime tracking[C]∥IEEE International Conference on Image Processing (ICIP). Phoenix, USA, 2016: 3464-3468. |

| [20] | He K, Gkioxari G, Dollár P, et al. Mask R-CNN[C]∥Proceedings of the IEEE/CVF International Conference on Computer Vision, Venice, Italy, 2017: 2961-2969. |

| [21] | Zhou Z, Xing J, Zhang M, et al. Online multi-target tracking with tensor-based high-order graph matching[C]∥24th International Conference on Pattern Recognition (ICPR), Beijing, China, 2018: 1809-1814. |

| [22] | Zhao D, Fu H, Xiao L, et al. Multi-object tracking with correlation filter for autonomous vehicle[J]. Sensors, 2018, 18(7): 2004. |

| [23] | Zhang Y, Sun P, Jiang Y, et al. Bytetrack: multi-object tracking by associating every detection box[C]∥European Conference on Computer Vision, Tel Aviv, The State of Israel, 2022: 1-21. |

| [24] | Ge Z, Liu S, Wang F, et al. YOLOX: exceeding YOLO series in 2021[DB/OL]. [2021-08-06]. |

| [25] | Sun S J, Akhtar N, Song H S, et al. Deep affinity network for multiple object tracking[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2019, 43(1): 104-119. |

| [26] | Yu F, Li W, Li Q, et al. POI: multiple object tracking with high performance detection and appearance feature[C]∥Computer Vision-ECCV 2016 Workshops, Amsterdam, The Netherlands, 2016: 36-42. |

| [27] | Huang K, Sun B, Chen F, et al. Reidtrack: multi-object track and segmentation without motion[DB/OL]. [2023-08-03]. |

| [28] | Kim C, Fu X L, Alotaibi M, et al. Discriminative appearance modeling with multi-track pooling for real-time multi-object tracking[C]∥Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 2021: 9553-9562. |

| [29] | Cao J, Pang J, Weng X, et al. Observation-centric sort: rethinking sort for robust multi-object tracking[C]∥Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, Canada, 2023: 9686-9696. |

| [30] | Han S, Huang P, Wang H, et al. MAT: motion-aware multi-object tracking[J]. Neurocomputing, 2022, 476: 75-86. |

| [31] | Qin Z, Zhou S, Wang L, et al. Motiontrack: learning robust short-term and long-term motions for multi-object tracking[C]∥Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, Canada, 2023: 17939-17948. |

| [32] | Larsen M, Rolfsjord S, Gusland D, et al. Base: probably a better approach to multi-object tracking[DB/OL]. [2023-09-21]. |

| [33] | Wojke N, Bewley A, Paulus D. Simple online and realtime tracking with a deep association metric[C]∥2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 2017: 3645-3649. |

| [34] | Karunasekera H, Wang H, Zhang H. Multiple object tracking with attention to appearance, structure, motion and size[J]. IEEE Access, 2019, 7: 104423-104434. |

| [35] | Seidenschwarz J, Brasó G, Serrano V, et al. Simple cues lead to a strong multi-object tracker[C]∥Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, Canada, 2023: 13813-13823. |

| [36] | Yang M, Han G, Yan B, et al. Hybrid-sort: weak cues matter for online multi-object tracking[C]∥Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, Canada, 2024: 6504-6512. |

| [37] | Li J, Gao X, Jiang T. Graph networks for multiple object tracking[C]∥Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Snowmass Village, USA, 2020: 719-728. |

| [38] | Brasó G, Leal-taixé L. Learning a neural solver for multiple object tracking[C]∥Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 2020: 6247-6257. |

| [39] | Liu Q, Chu Q, Liu B, et al. GSM: graph similarity model for multi-object tracking[C]∥Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence, Yokohama, Japan, 2020: 530-536. |

| [40] | Cetintas O, Brasó G, Leal-taixé L. Unifying short and long-term tracking with graph hierarchies[C]∥Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, Canada, 2023: 22877-22887. |

| [41] | Vaswani A, Shazeer N, Parmar N, et al. Attention is all you need[C]∥Advances in Neural Information Processing Systems, Long Beach, USA, 2017: 5999-6009. |

| [42] | Sun P, Cao J, Jiang Y, et al. Transtrack: multiple object tracking with transformer[DB/OL]. [2021-05-04]. |

| [43] | Meinhardt T, Kirillov A, Leal-taixe L, et al. Trackformer: multi-object tracking with transformers[C]∥Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 2022: 8844-8854. |

| [44] | Carion N, Massa F, Synnaeve G, et al. End-to-end object detection with transformers[C]∥European Conference on Computer Vision, Virtual, 2020: 213-229. |

| [45] | Xu Y, Ban Y, Delorme G, et al. TransCenter: transformers with dense representations for multiple-object tracking[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022, 45(6): 7820-7835. |

| [46] | Chu P, Wang J, You Q, et al. Transmot: spatial-temporal graph transformer for multiple object tracking[C]∥Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, USA, 2023: 4870-4880. |

| [47] | Zeng F, Dong B, Zhang Y, et al. MOTR: end-to-end multiple-object tracking with transformer[C]∥European Conference on Computer Vision, Tel Aviv, The State of Israel, 2022: 659-675. |

| [48] | Zhang Y, Wang T, Zhang X. MOTRv2: bootstrapping end-to-end multi-object tracking by pretrained object detectors[C]∥Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, Canada, 2023: 22056-22065. |

| [49] | Gao R, Wang L. MeMOTR: long-term memory-augmented transformer for multi-object tracking[C]∥Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2023: 9901-9910. |

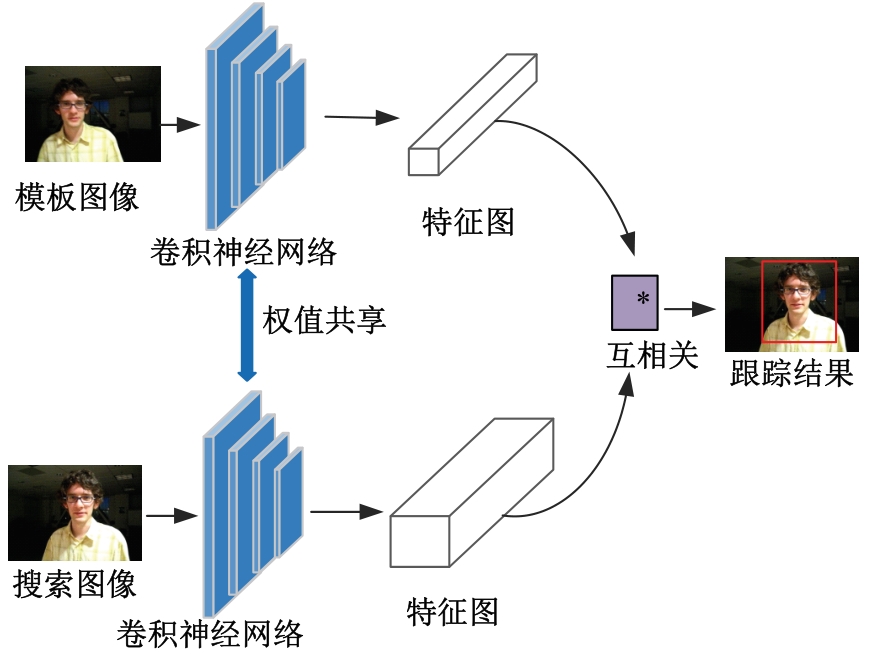

| [50] | Bertinetto L, Valmadre J, Henriques J, et al. Fully-convolutional siamese networks for object tracking[C]∥European Conference on Computer Vision, Amsterdam, The Netherlands, 2016: 850-865. |

| [51] | Xu Y, Osep A, Ban Y, et al. How to train your deep multi-object tracker[C]∥Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 2020: 6787-6796. |

| [52] | Bergmann P, Meinhardt T, Leal-Taixe L. Tracking without bells and whistles[C]∥Proceedings of the IEEE/CVF International Conference on Computer Vision, Long Beach, USA, 2019: 941-951. |

| [53] | Pang J, Qiu L, Li X, et al. Quasi-dense similarity learning for multiple object tracking[C]∥Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 2021: 164-173. |

| [54] | Gao X, Shen Z, Yang Y. Multi-object tracking with siamese-RPN and adaptive matching strategy[J]. Signal, Image and Video Processing, 2022, 16(4): 965-973. |

| [55] | Shuai B, Berneshawi A, Li X, et al. SiamMOT: siamese multi-object tracking[C]∥Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 2021: 12372-12382. |

| [56] | Zhou X, Koltun V, Krähenbühl P. Tracking objects as points[C]∥European Conference on Computer Vision, Virtual, 2020: 474-490. |

| [57] | Wang Z, Zheng L, Liu Y, et al. Towards real-time multi-object tracking[C]∥European Conference on Computer Vision, Virtual, 2020: 107-122. |

| [58] | Lu Z, Rathod V, Votel R, et al. Retinatrack: online single stage joint detection and tracking[C]∥Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 2020: 14668-14678. |

| [59] | Lin T, Goyal P, Girshick R, et al. Focal loss for dense object detection[C]∥Proceedings of the IEEE/CVF International Conference on Computer Vision, Venice, Italy, 2017: 2980-2988. |

| [60] | Qu You, Li Wen-hui. Multiple object tracking method based on multi-task joint learning[J]. Journal of Jilin University (Engineering and Technology Edition), 2023, 53(10): 2932-2941. (in Chinese) |

| [61] | Liang C, Zhang Z, Zhou X, et al. Rethinking the competition between detection and reid in multiobject tracking[J]. IEEE Transactions on Image Processing, 2022, 31: 3182-3196. |

| [62] | Zhang Y, Wang C, Wang X, et al. FairMOT: on the fairness of detection and re-identification in multiple object tracking[J]. International Journal of Computer Vision, 2021, 129: 3069-3087. |

| [63] | Duan K, Bai S, Xie L, et al. Centernet: keypoint triplets for object detection[C]∥Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, South Korea, 2019: 6569-6578. |

| [64] | Liang T, Li B, Wang M, et al. A closer look at the joint training of object detection and re-identification in multi-object tracking[J]. IEEE Transactions on Image Processing, 2022, 32: 267-280. |

| [65] | Bernardin K, Stiefelhagen R. Evaluating multiple object tracking performance: the clear mot metrics[J]. EURASIP Journal on Image and Video Processing, 2008, 2008: 1-10. |

| [66] | Ristani E, Solera F, Zou R, et al. Performance measures and a data set for multi-target, multi-camera tracking[C]∥European Conference on Computer Vision, Amsterdam, The Netherlands, 2016: 17-35. |

| [67] | Luiten J, Osep A, Dendorfer P, et al. HOTA: a higher order metric for evaluating multi-object tracking[J]. International Journal of Computer Vision, 2021, 129: 548-578. |

| [68] | Geiger A, Lenz P, Urtasun R. Are we ready for autonomous driving? the kitti vision benchmark suite[C]∥Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Providence, USA, 2012: 3354-3361. |

| [69] | Leal-taixé L, Milan A, Reid I, et al. MOTchallenge 2015: towards a benchmark for multi-target tracking[DB/OL]. [2015-04-08]. |

| [70] | Milan A, Leal-taixé L, Reid I, et al. MOT16: a benchmark for multi-object tracking[DB/OL]. [2016-05-03]. |

| [71] | Dendorfer P, Rezatofighi H, Milan A, et al. MOT20: a benchmark for multi object tracking in crowded scenes[DB/OL]. [2020-03-19]. |

| [72] | Sun P, Cao J, Jiang Y, et al. Dancetrack: multi-object tracking in uniform appearance and diverse motion[C]∥Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 2022: 20993-21002. |

