Journal of Jilin University (Engineering and Technology Edition), 2023, Vol. 53, Issue (10): 2923-2931. doi: 10.13229/j.cnki.jdxbgxb.20211299


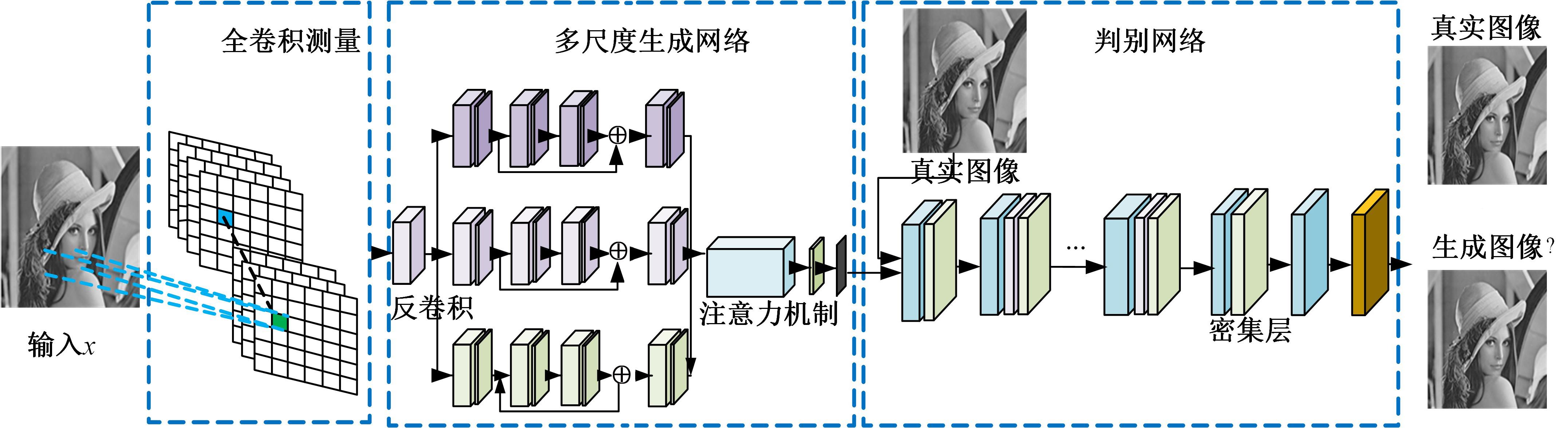

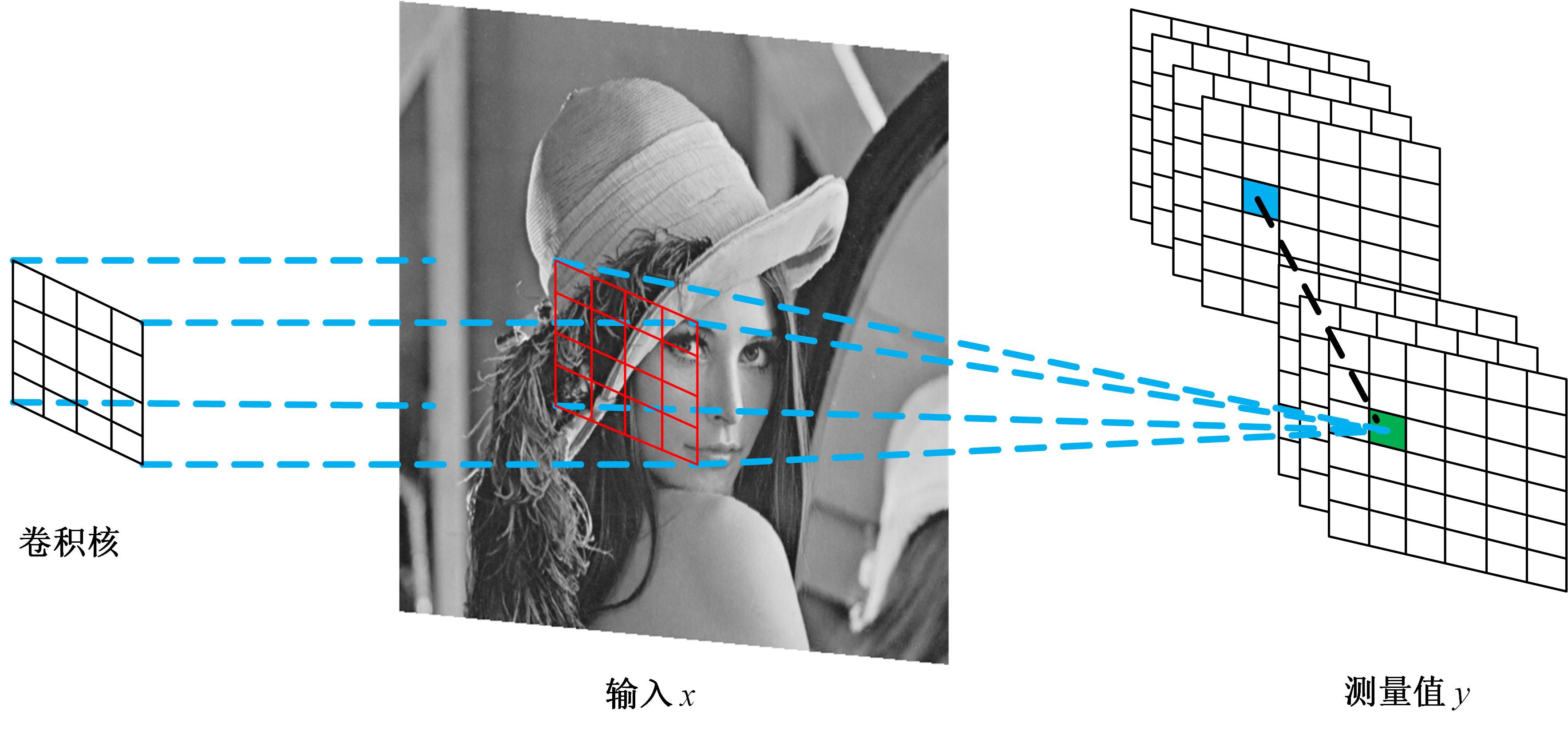

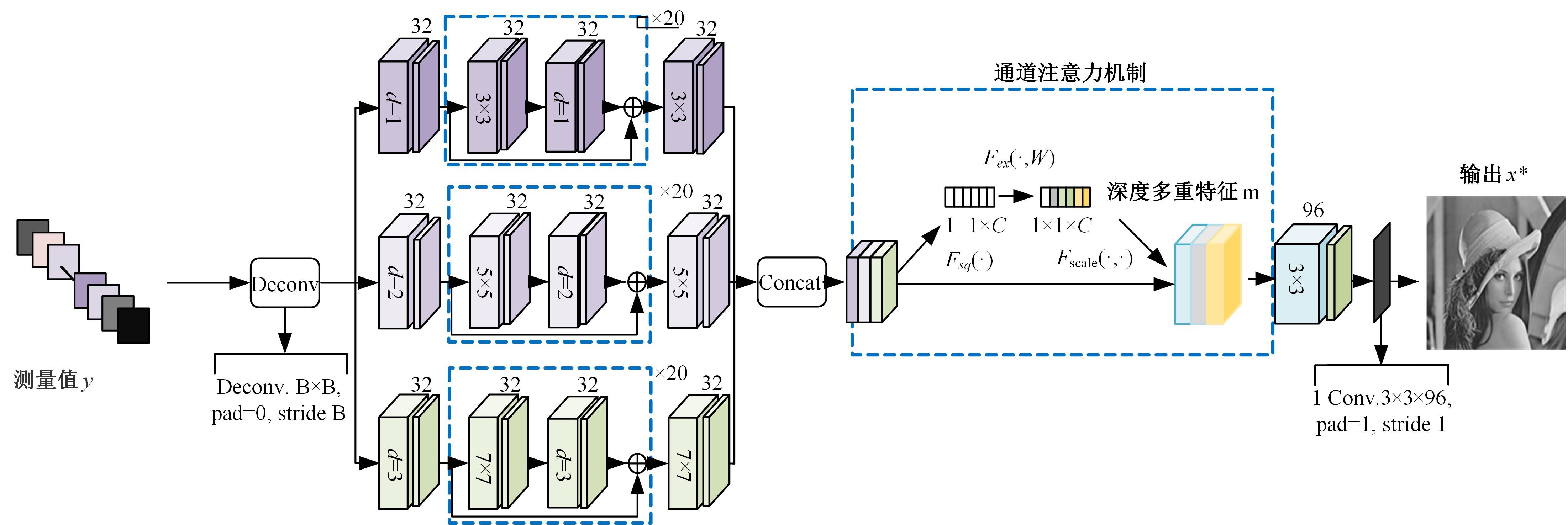

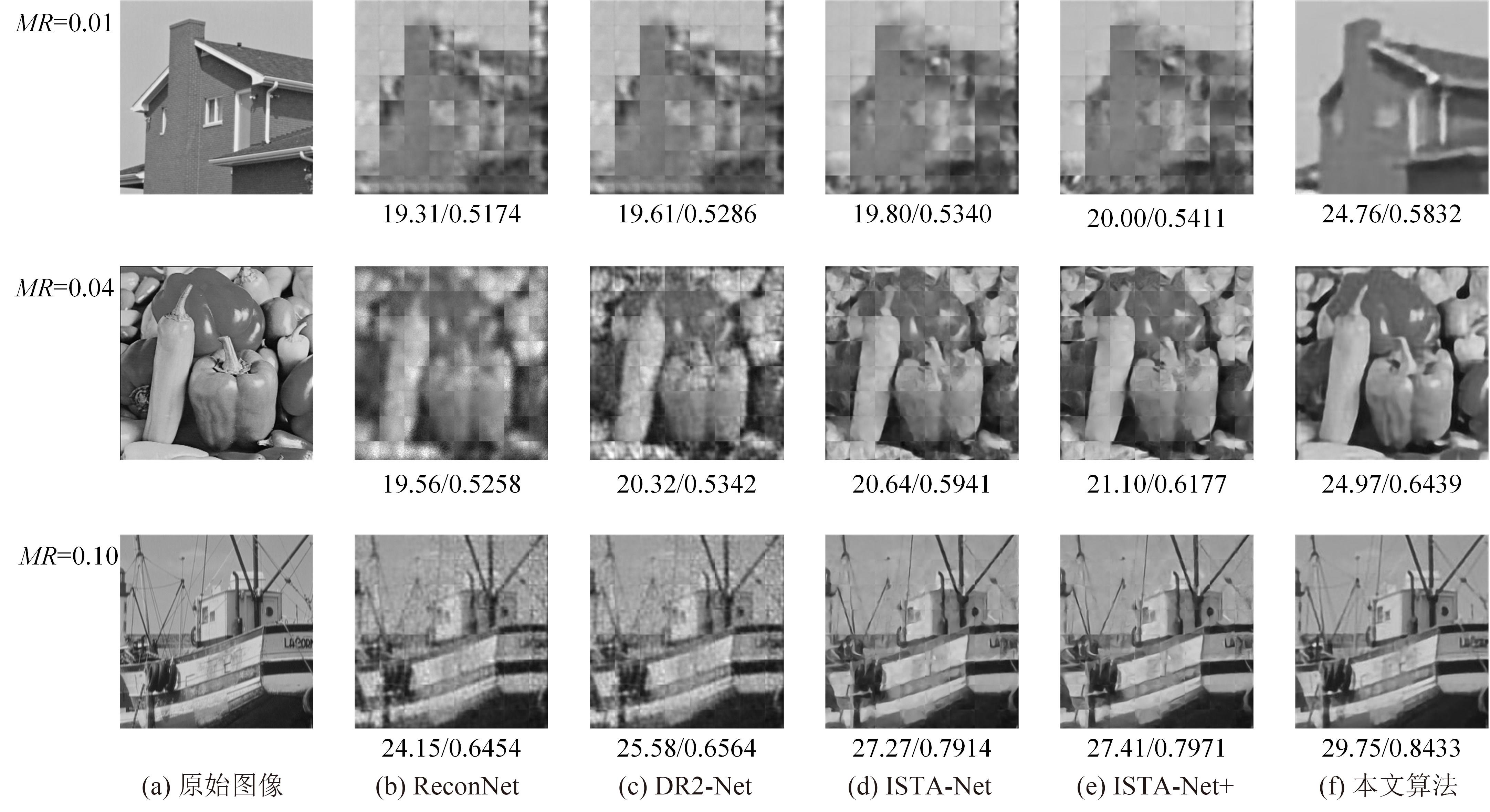

Multi-scale generative adversarial network for image compressed sensing and reconstruction algorithm

Chun-yan ZENG1, Kang YAN1, Zhi-feng WANG2, Zheng-hui WANG1

1. Hubei Key Laboratory for High-efficiency Utilization of Solar Energy and Operation Control of Energy Storage System, Hubei University of Technology, Wuhan 430068, China
2. School of Education Information Technology, Central China Normal University, Wuhan 430079, China

CLC Number: TN911.73

