Journal of Jilin University (Engineering and Technology Edition) ›› 2024, Vol. 54 ›› Issue (10): 3018-3026. doi: 10.13229/j.cnki.jdxbgxb.20221564

• Communication and Control Engineering •

Compressive sensing image reconstruction based on deep unfolding self-attention network

- School of Communication and Information Engineering, Shanghai University, Shanghai 200444, China

Abstract:

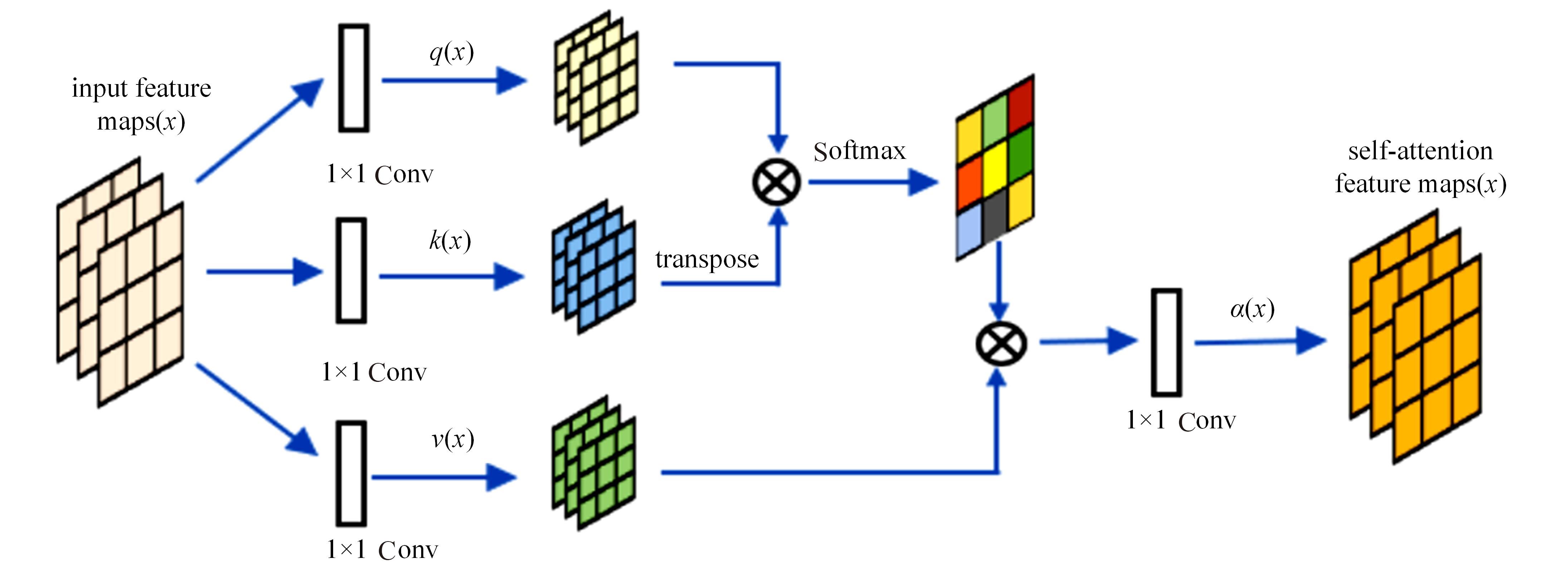

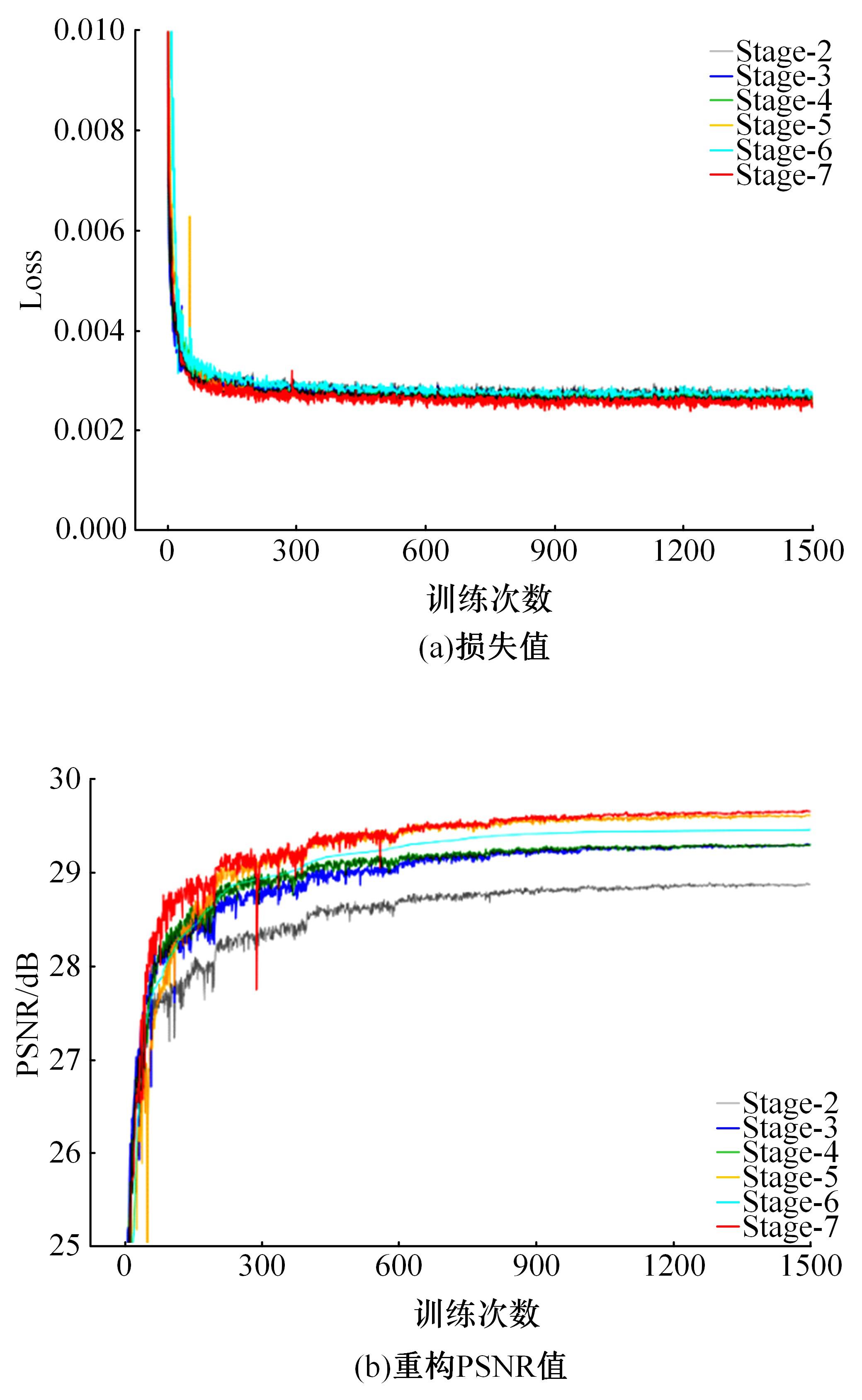

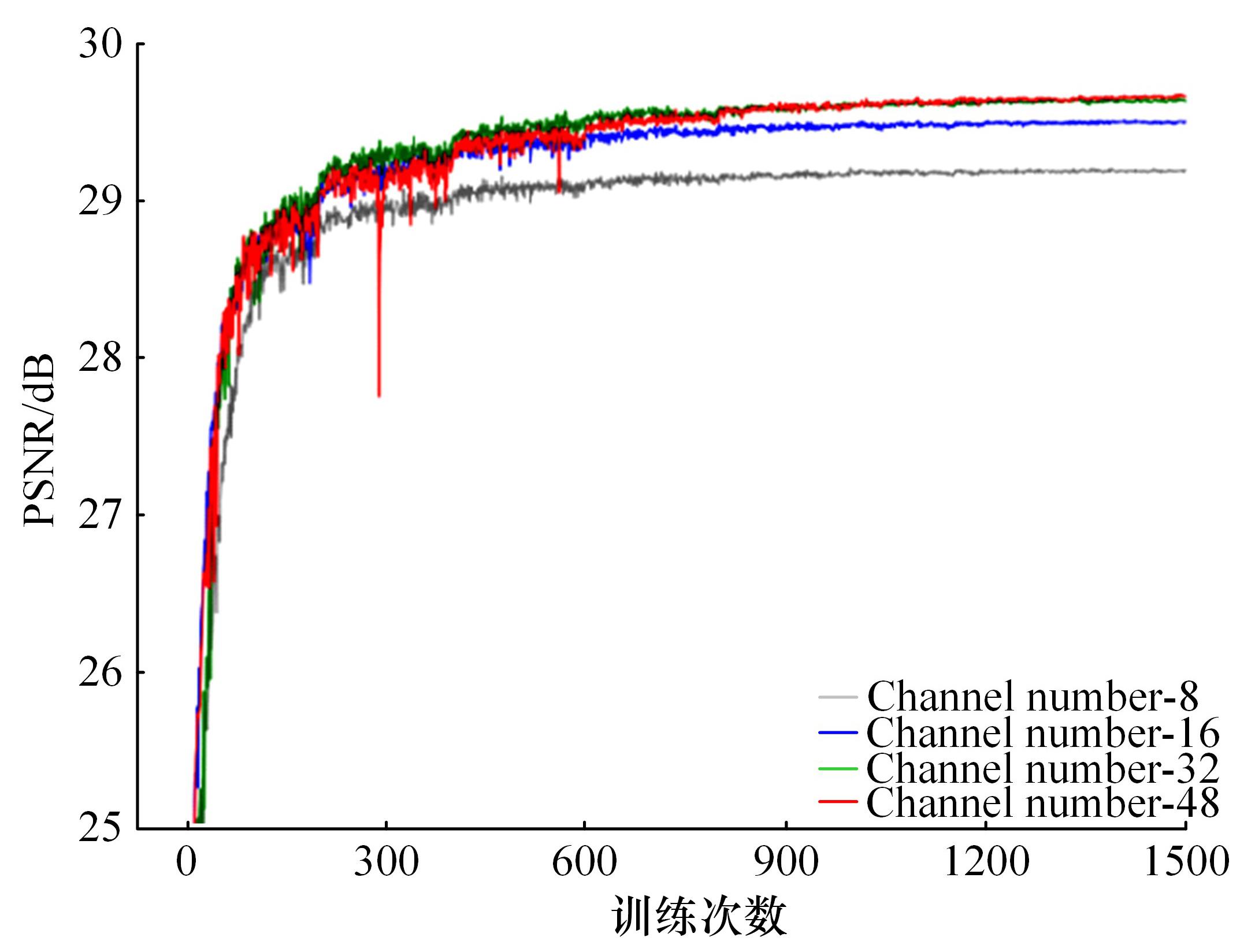

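When convolutional neural networks are applied to compressive sensing image reconstruction, the measurements carry little information at low sampling rates, and the reconstruction network has difficulty attending to the contextual information of the image. To address these shortcomings, a compressive sensing image reconstruction method based on a deep unfolding self-attention network is proposed. The network combines the sampling matrix with a self-attention mechanism for deep reconstruction of the image, and uses multi-stage reconstruction modules to make full use of the information in the measurements, thereby improving reconstruction quality. Experimental results show that the proposed network uses the sampling information of the image efficiently, outperforms existing state-of-the-art methods on different datasets, and produces reconstructed images with better visual quality.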
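The abstract does not specify the layers of the network, so the following is only a rough NumPy sketch of the general pattern it describes: each stage first reuses the sampling matrix in a data-consistency step on the measurements, then refines the estimate with self-attention, and several such stages are chained. Everything concrete here is an assumption made for illustration and not the authors' architecture: the names `unfolding_stage`, `reconstruct`, `step`, `w_q`, `w_k`, `w_v`, the gradient-descent form of the data-consistency step, the single attention head, and the residual connection.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(tokens, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over the rows of `tokens`."""
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores, axis=-1) @ v

def unfolding_stage(x, y, phi, step, w_q, w_k, w_v):
    """One hypothetical stage: gradient step on the measurements, then attention.

    x:   (num_blocks, n) current estimate, one vectorized image block per row
    y:   (num_blocks, m) measurements of each block
    phi: (m, n) sampling matrix
    """
    # Gradient of 0.5 * ||phi x - y||^2 with respect to each block.
    r = x - step * (x @ phi.T - y) @ phi
    # Residual self-attention lets every block draw context from the others.
    return r + self_attention(r, w_q, w_k, w_v)

def reconstruct(y, phi, stages):
    """Chain several stages, starting from the initial estimate phi^T y."""
    x = y @ phi
    for step, w_q, w_k, w_v in stages:
        x = unfolding_stage(x, y, phi, step, w_q, w_k, w_v)
    return x
```

In a trained network the step sizes and attention weights of each stage would be learned from data. In this sketch they are simply passed in as a list of `(step, w_q, w_k, w_v)` tuples, one per stage.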

CLC number:

- TP393
