Journal of Jilin University (Engineering and Technology Edition), 2024, Vol. 54, Issue (6): 1746-1755. doi: 10.13229/j.cnki.jdxbgxb.20230042

• Computer Science and Technology •

Systolic array-based CNN accelerator soft error approximate fault tolerance design

Xiao-hui WEI, Chen-yang WANG, Qi WU, Xin-yang ZHENG, Hong-mei YU, Heng-shan YUE

- College of Computer Science & Technology, Jilin University, Changchun 130012, China

Abstract:

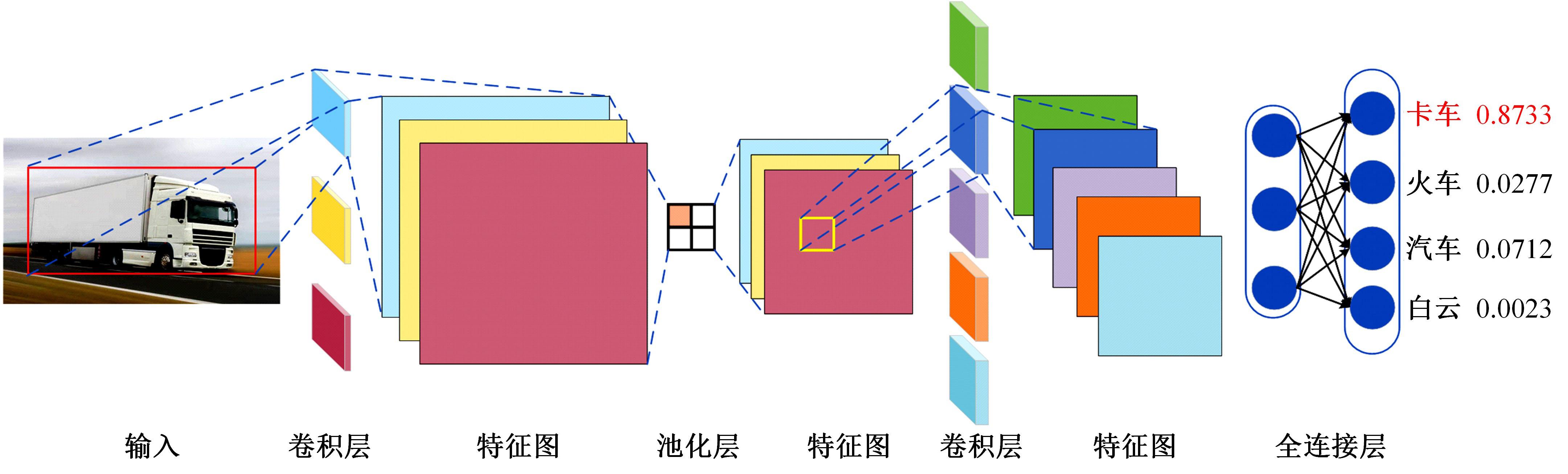

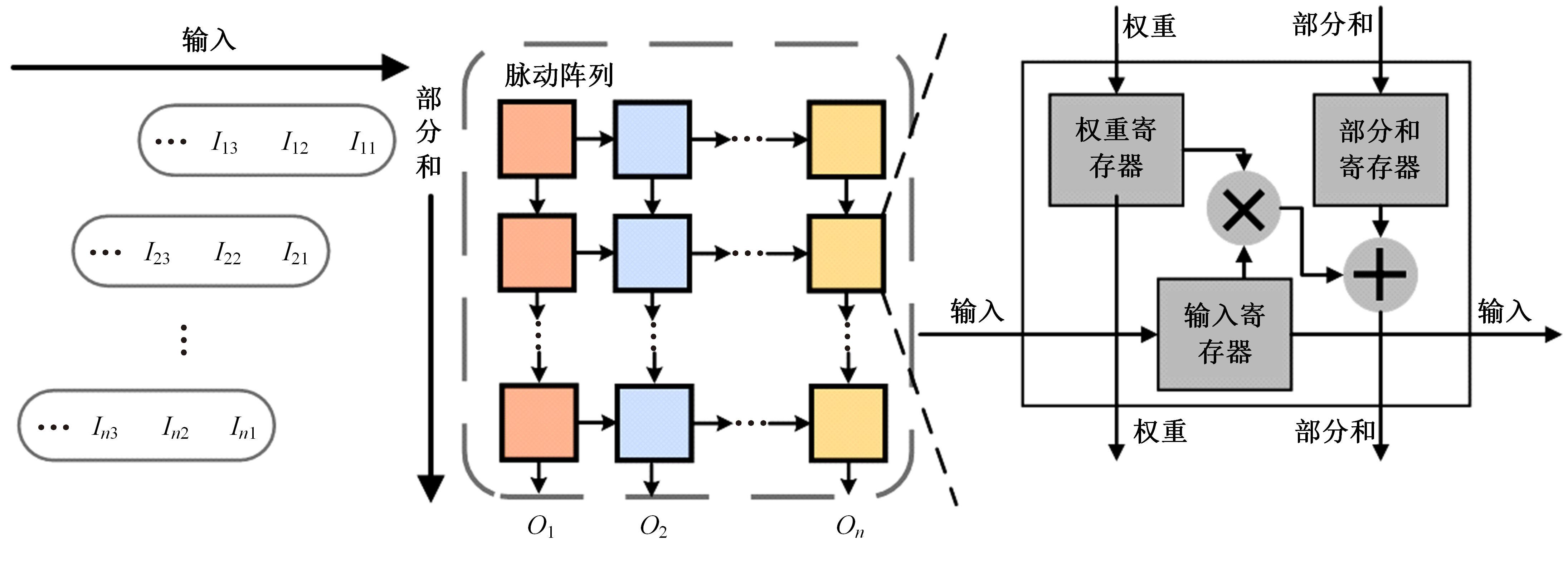

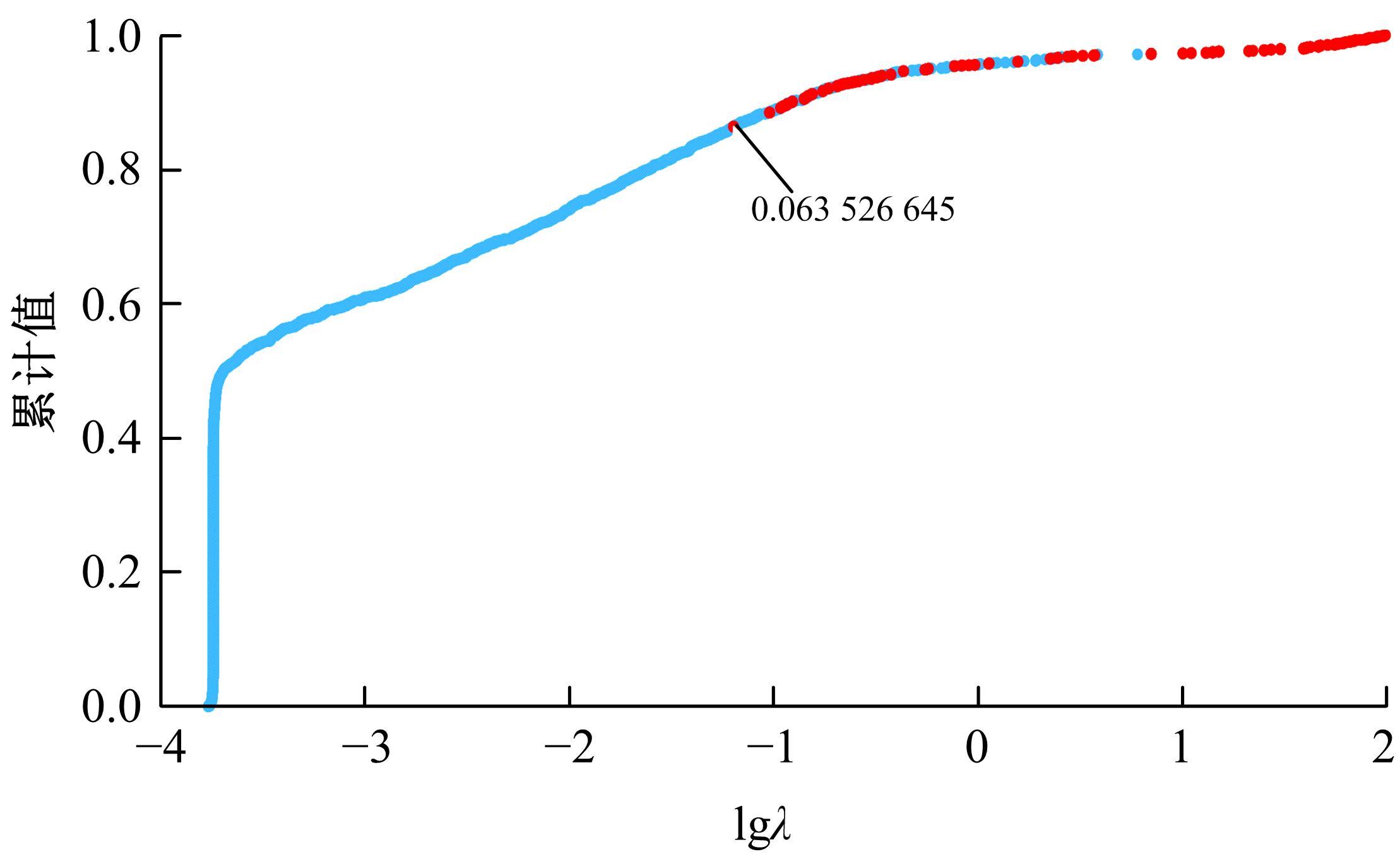

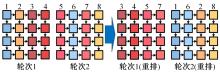

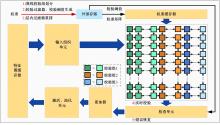

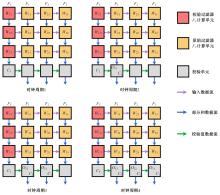

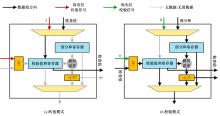

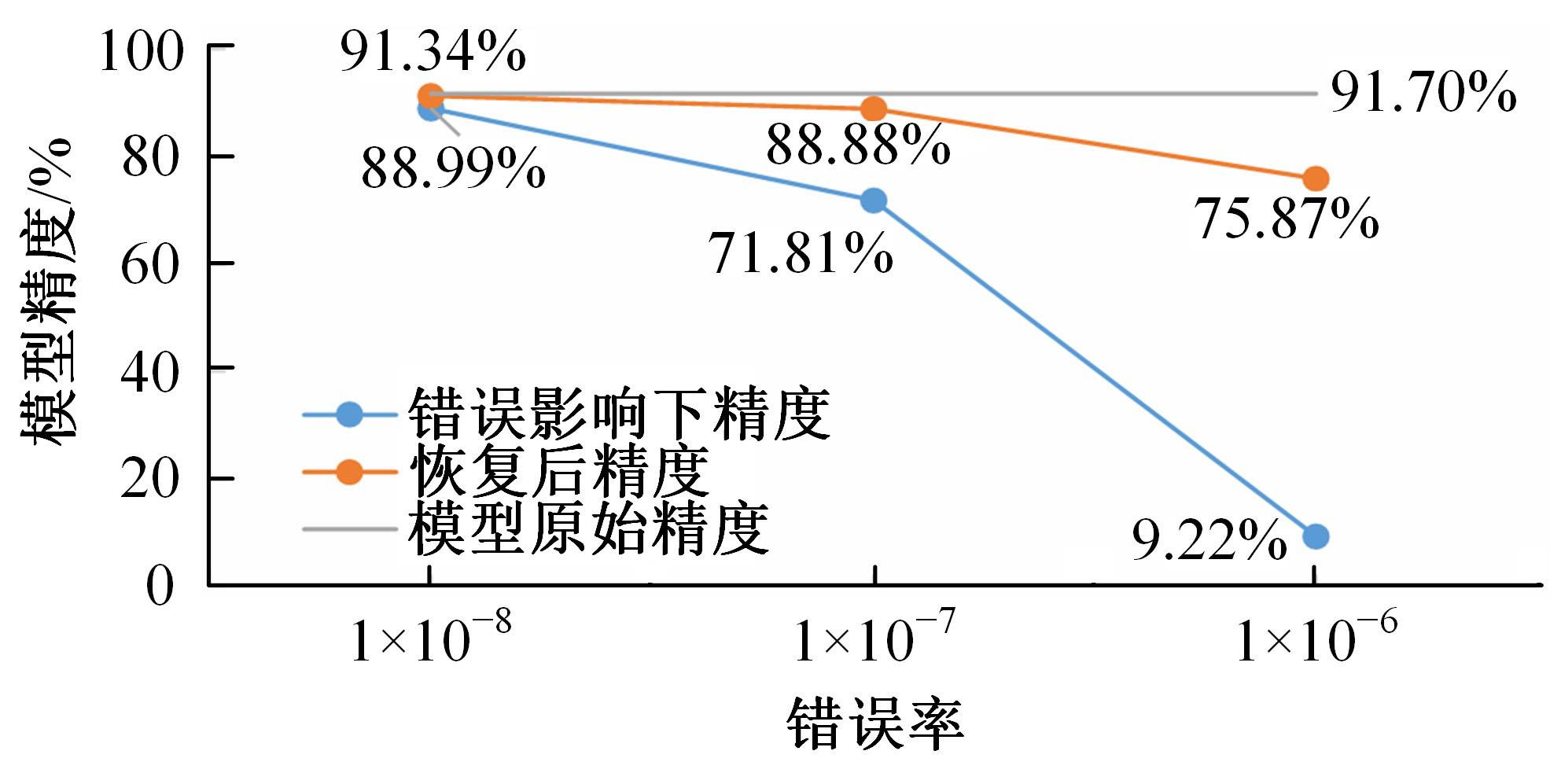

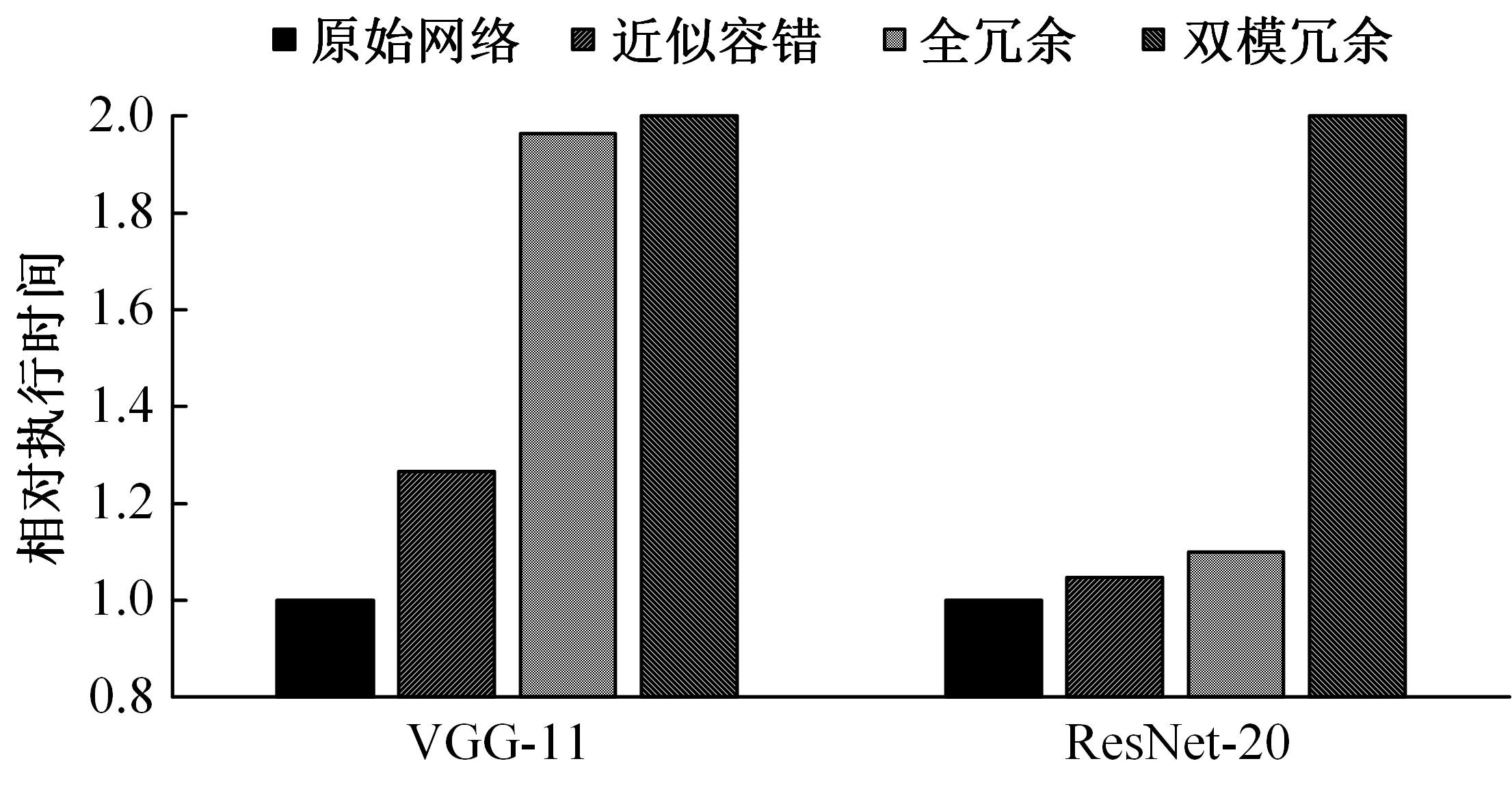

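Exploiting the inherent error resilience of neural networks and the similarity among filters within a layer, this paper proposes an approximate fault-tolerant design. Filters are partitioned into check groups and verified inexactly, which ensures that severe errors are detected and recovered. The filter-to-processing-element mapping is optimized so that the checking flow matches the dataflow of the systolic array. Compared with traditional dual modular redundancy, the proposed design reduces performance overhead by 73.39%.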
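The idea of inexact checking within groups of similar filters can be illustrated with a small sketch. It is only one possible reading of the abstract and not the authors' scheme: the greedy pairing by cosine similarity, the fixed `tolerance` threshold, recovery by recomputation, and all function names (`make_check_groups`, `inexact_check`, `recover`) are assumptions made for illustration.

```python
import math


def dot(u, v):
    """Dot product of two equal-length weight/activation vectors."""
    return sum(a * b for a, b in zip(u, v))


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    nu, nv = math.sqrt(dot(u, u)), math.sqrt(dot(v, v))
    return dot(u, v) / (nu * nv) if nu and nv else 0.0


def make_check_groups(filters):
    """Greedily pair each unassigned filter with its most similar peer.

    Assumption: check groups are pairs; the paper may group differently.
    """
    remaining = list(range(len(filters)))
    groups = []
    while len(remaining) > 1:
        i = remaining.pop(0)
        j = max(remaining, key=lambda k: cosine(filters[i], filters[k]))
        remaining.remove(j)
        groups.append((i, j))
    if remaining:  # an odd filter out stays unchecked in this sketch
        groups.append((remaining[0],))
    return groups


def inexact_check(outputs, groups, tolerance):
    """Return the pairs whose outputs differ by more than `tolerance`.

    Similar filters should give similar outputs, so a large gap hints at a
    severe error; small deviations are deliberately tolerated.
    """
    return [g for g in groups
            if len(g) == 2 and abs(outputs[g[0]] - outputs[g[1]]) > tolerance]


def recover(filters, patch, outputs, suspects):
    """Recompute the outputs of every filter in a flagged group."""
    fixed = list(outputs)
    for group in suspects:
        for idx in group:
            fixed[idx] = dot(filters[idx], patch)
    return fixed


# Hypothetical data: two pairs of near-duplicate filters and one input patch.
filters = [[1.0, 2.0, 3.0], [1.1, 2.0, 2.9], [-1.0, 0.5, 0.0], [-0.9, 0.6, 0.1]]
patch = [0.5, 1.0, -0.5]

groups = make_check_groups(filters)          # [(0, 1), (2, 3)]
clean = [dot(f, patch) for f in filters]

faulty = list(clean)
faulty[1] += 64.0                            # emulate a high-order bit flip
suspects = inexact_check(faulty, groups, tolerance=0.5)
repaired = recover(filters, patch, faulty, suspects)
```

In this sketch a small perturbation that stays within `tolerance` passes unchecked, which mirrors the reliance on the network's own error resilience, while the large deviation is flagged and recomputed. Each filter is still computed only once in the fault-free case, whereas dual modular redundancy computes everything twice.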

CLC number:

- TP302.8

