Journal of Jilin University (Engineering and Technology Edition), 2022, Vol. 52, Issue (8): 1850-1856. doi: 10.13229/j.cnki.jdxbgxb20210607

• Computer Science and Technology •

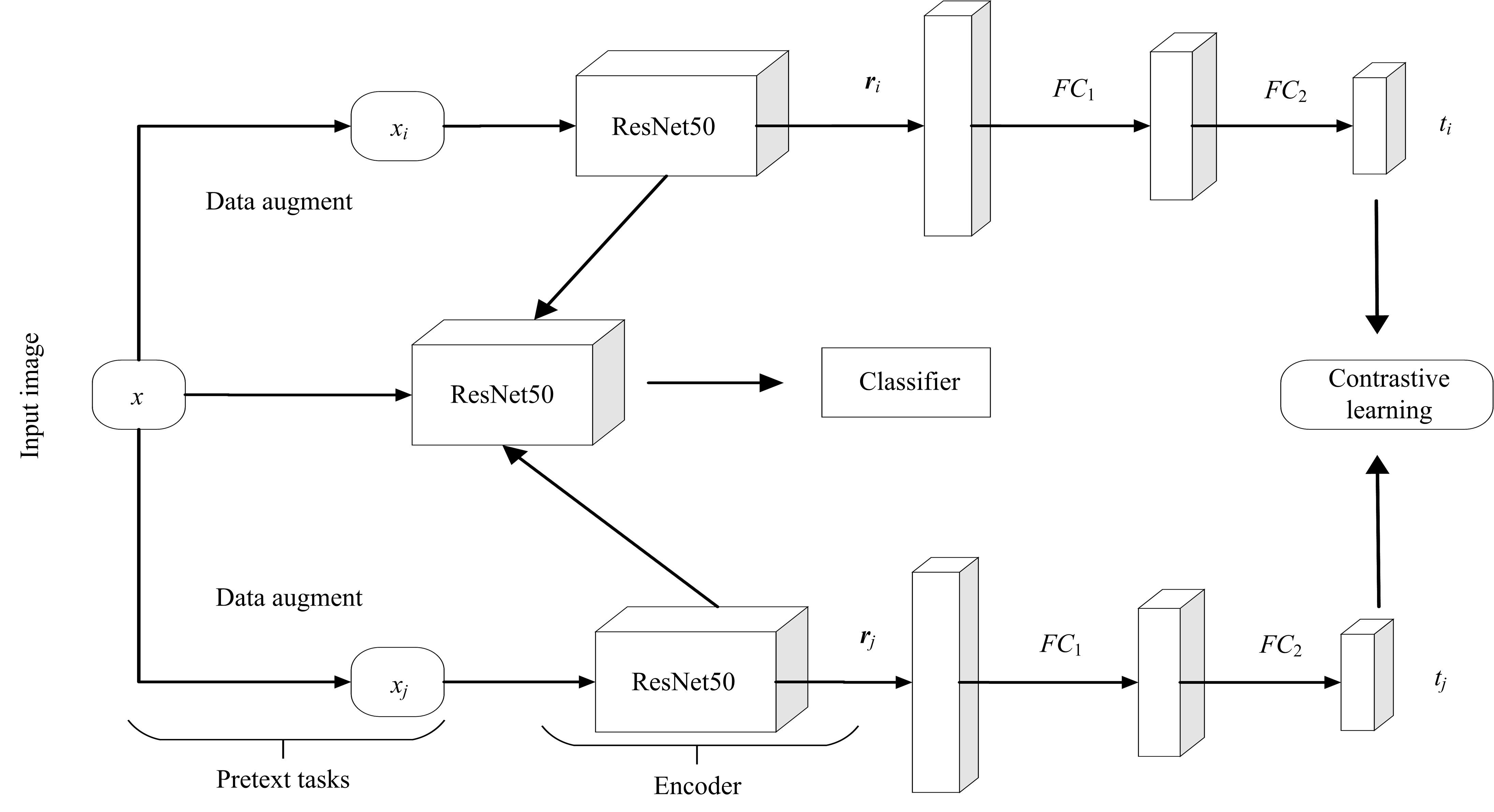

Image classification framework based on contrastive self-supervised learning

Hong-wei ZHAO¹, Jian-rong ZHANG¹, Jun-ping ZHU², Hai LI¹

1. College of Computer Science and Technology, Jilin University, Changchun 130012, China
2. Shanghai Zhengen Industrial Co., Ltd., Shanghai 201508, China

Abstract:

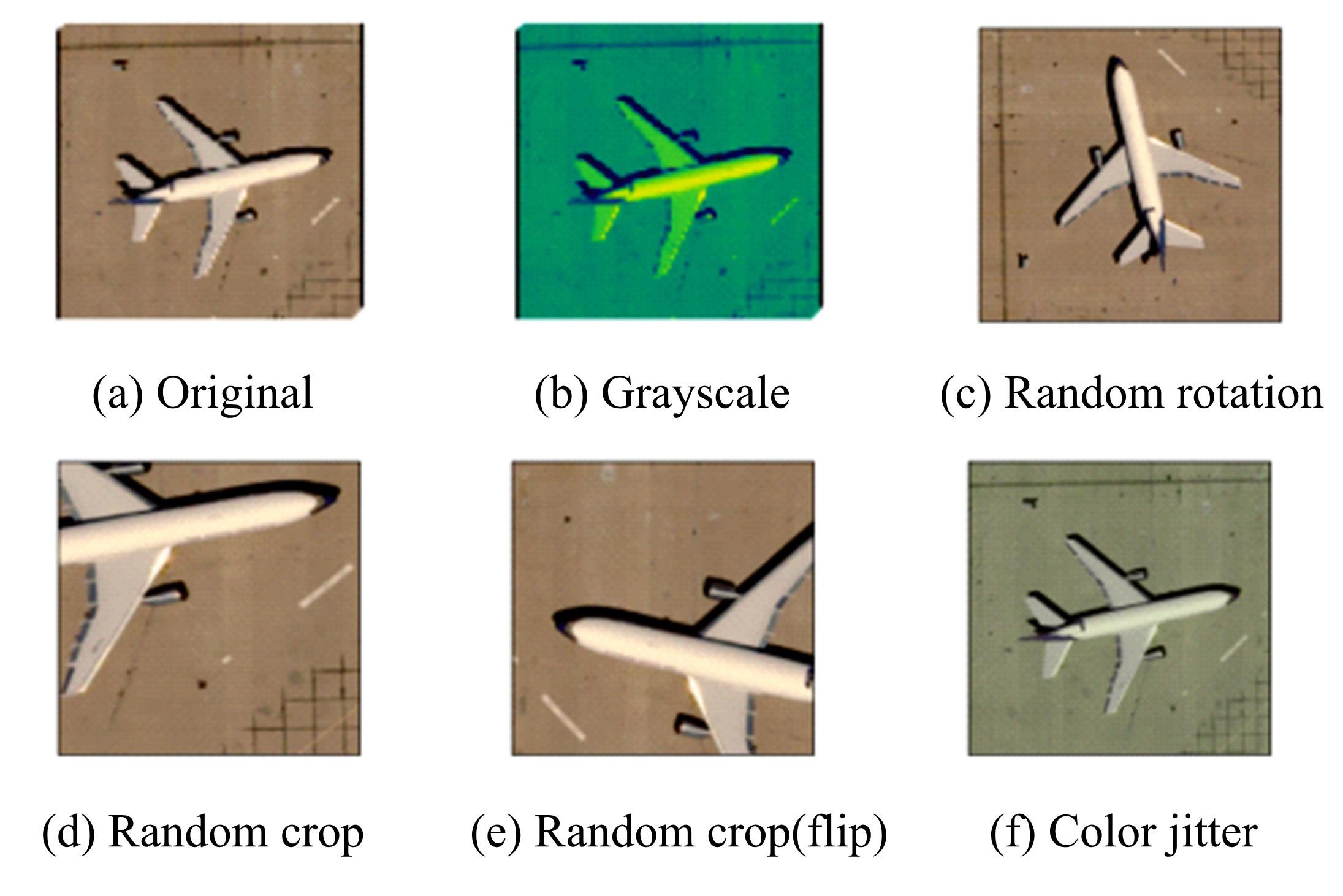

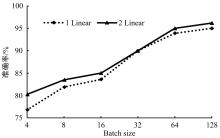

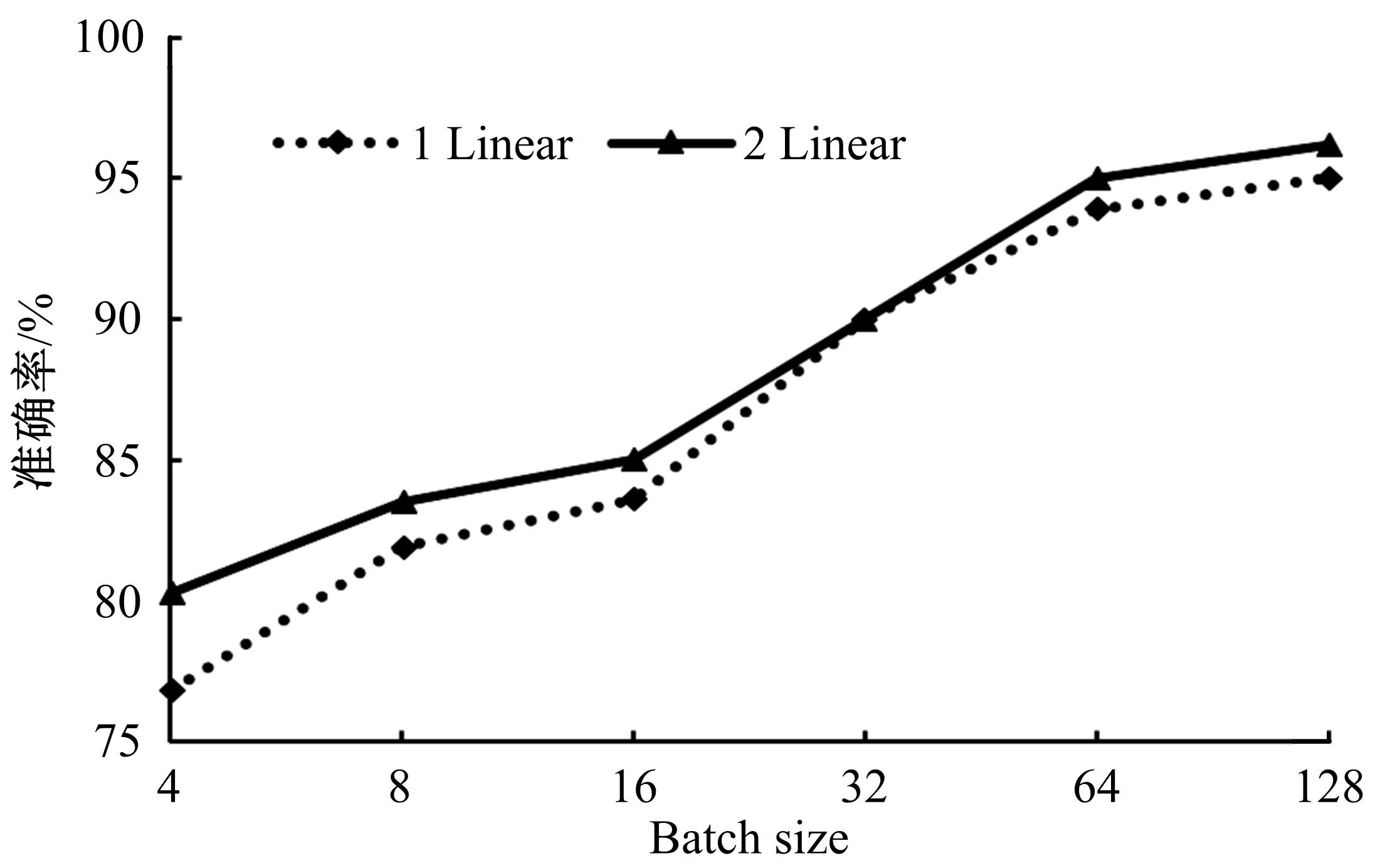

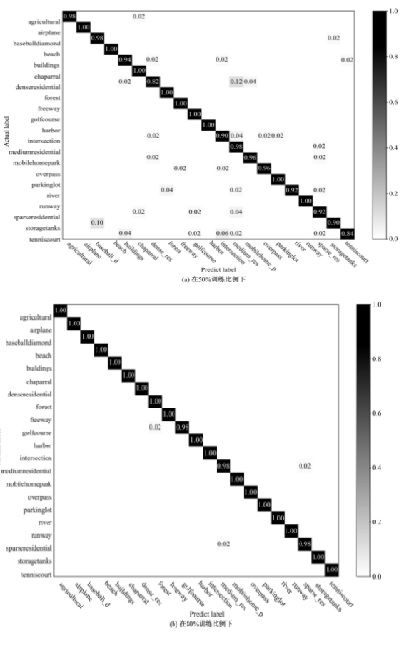

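Supervised learning for image classification requires a large amount of time to annotate datasets. To address this and related problems, a self-supervised image classification framework, SSIC (Self-supervised image classification), is proposed. SSIC is a self-supervised method based on contrastive learning, and it outperforms existing unsupervised methods. A new framework structure is designed, and more effective pretext tasks are selected to improve the robustness of the model. In addition, a targeted loss function is proposed to improve image classification performance. Experiments on three public datasets, UC-Merced, NWPU and AID, show that the SSIC framework has a clear advantage over current state-of-the-art methods and also performs well on low-resolution image classification.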
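The abstract does not specify SSIC's targeted loss function. For orientation only, the sketch below shows the standard NT-Xent (normalized temperature-scaled cross-entropy) contrastive loss popularized by SimCLR, the kind of objective that contrastive self-supervised frameworks typically start from. It is an assumption for illustration, not the authors' loss; the function name `nt_xent_loss` and the default temperature of 0.5 are hypothetical choices.

```python
import numpy as np


def nt_xent_loss(z1: np.ndarray, z2: np.ndarray, temperature: float = 0.5) -> float:
    """Generic SimCLR-style NT-Xent loss (illustrative; not the SSIC loss).

    z1, z2: arrays of shape (N, D). Row i of z1 and row i of z2 are embeddings
    of two augmented views of the same image (a positive pair); the remaining
    2N - 2 embeddings in the batch serve as negatives for that row.
    """
    n = z1.shape[0]
    z = np.concatenate([z1, z2], axis=0)              # (2N, D)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)  # L2-normalize, so dot product = cosine similarity
    sim = z @ z.T / temperature                       # (2N, 2N) temperature-scaled similarities
    np.fill_diagonal(sim, -np.inf)                    # a sample is never its own positive or negative

    # Positive partner of row i is row i + N, and vice versa.
    pos = np.concatenate([np.arange(n, 2 * n), np.arange(0, n)])

    # Numerically stable row-wise log-softmax.
    row_max = sim.max(axis=1, keepdims=True)
    shifted = sim - row_max
    log_prob = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))

    # Average negative log-probability assigned to the positive pair.
    return float(-log_prob[np.arange(2 * n), pos].mean())


if __name__ == "__main__":
    views = np.eye(4)
    # Matching views: each positive pair is identical, so the loss is low.
    print(nt_xent_loss(views, views))
    # Mismatched views: positives are shuffled, so the loss is higher.
    print(nt_xent_loss(views, views[[1, 2, 3, 0]]))
```

In a real pipeline, `z1` and `z2` would be the outputs of an encoder (for example a ResNet backbone plus a projection head) applied to two random augmentations of the same batch, which is the pretext task; the loss pulls the two views of each image together and pushes views of different images apart.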

CLC number: TP391

