Journal of Jilin University (Engineering and Technology Edition), 2025, Vol. 55, Issue 1: 185-197. doi: 10.13229/j.cnki.jdxbgxb.20230321

• Transportation Engineering · Civil Engineering •

Vehicle target detection and ranging in vehicle image based on deep learning

Hui-zhi XU, Shi-sen JIANG, Xiu-qing WANG, Shuang CHEN

- School of Civil Engineering and Transportation, Northeast Forestry University, Harbin 150040, China

Abstract:

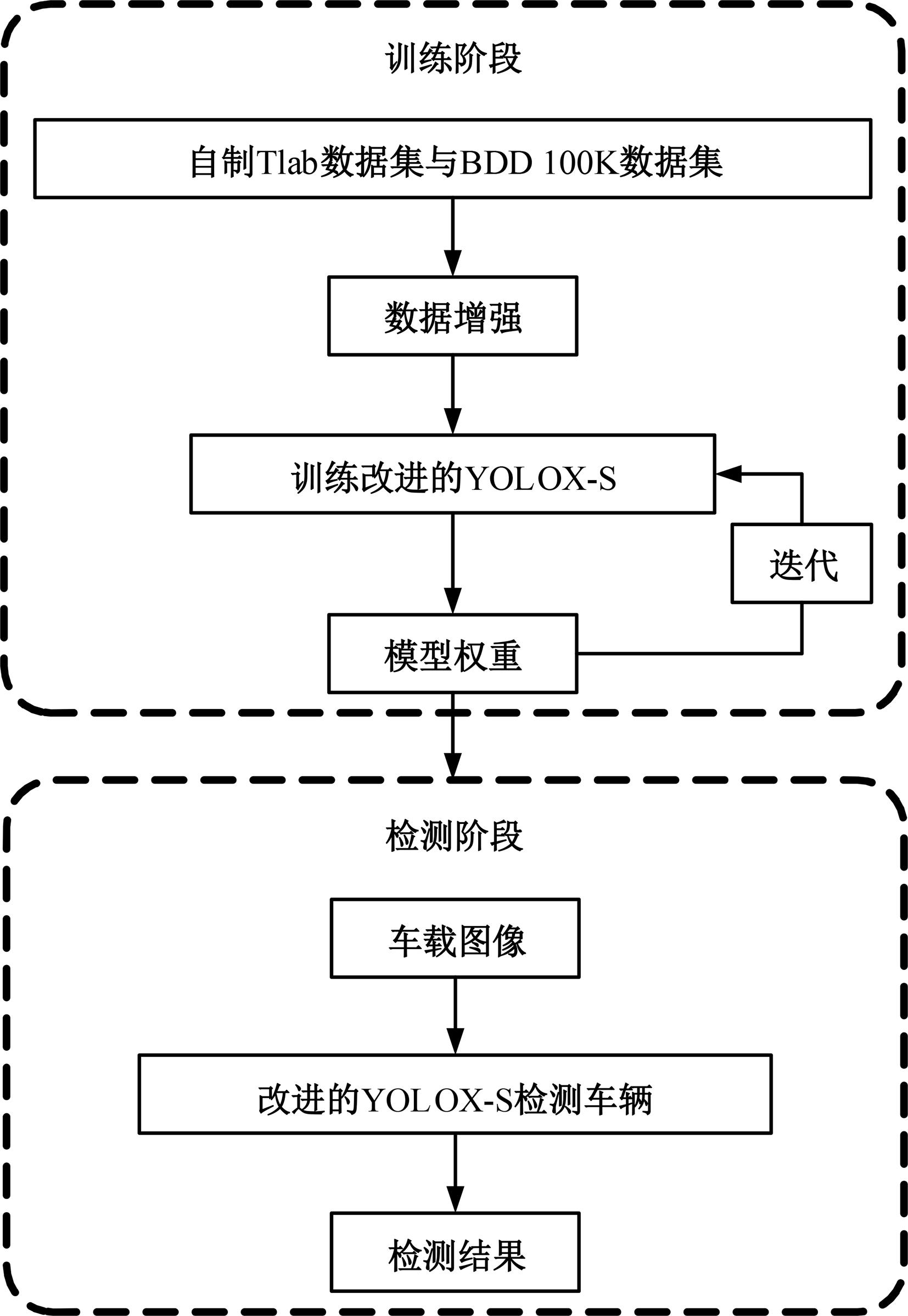

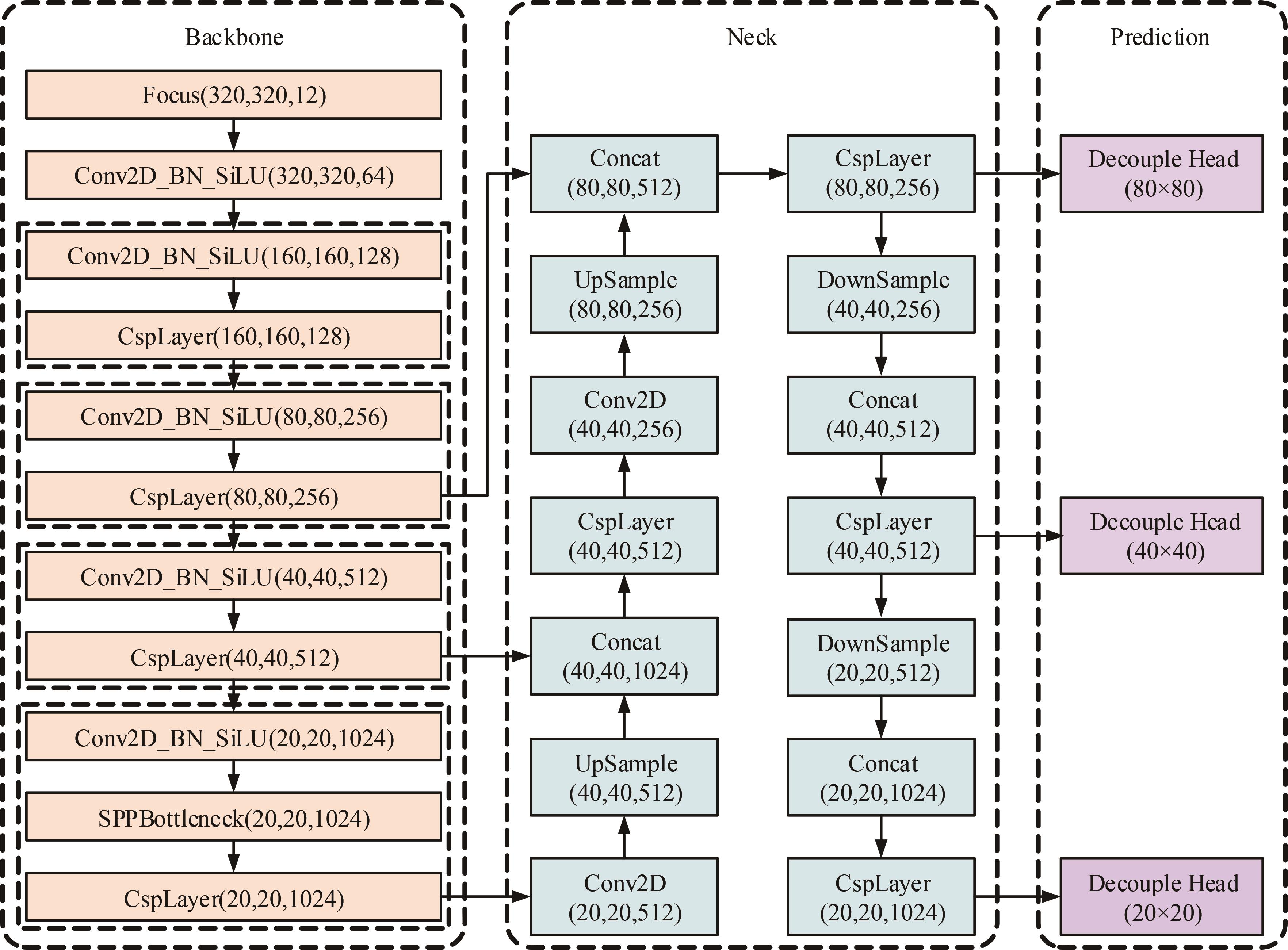

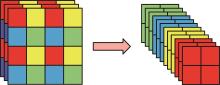

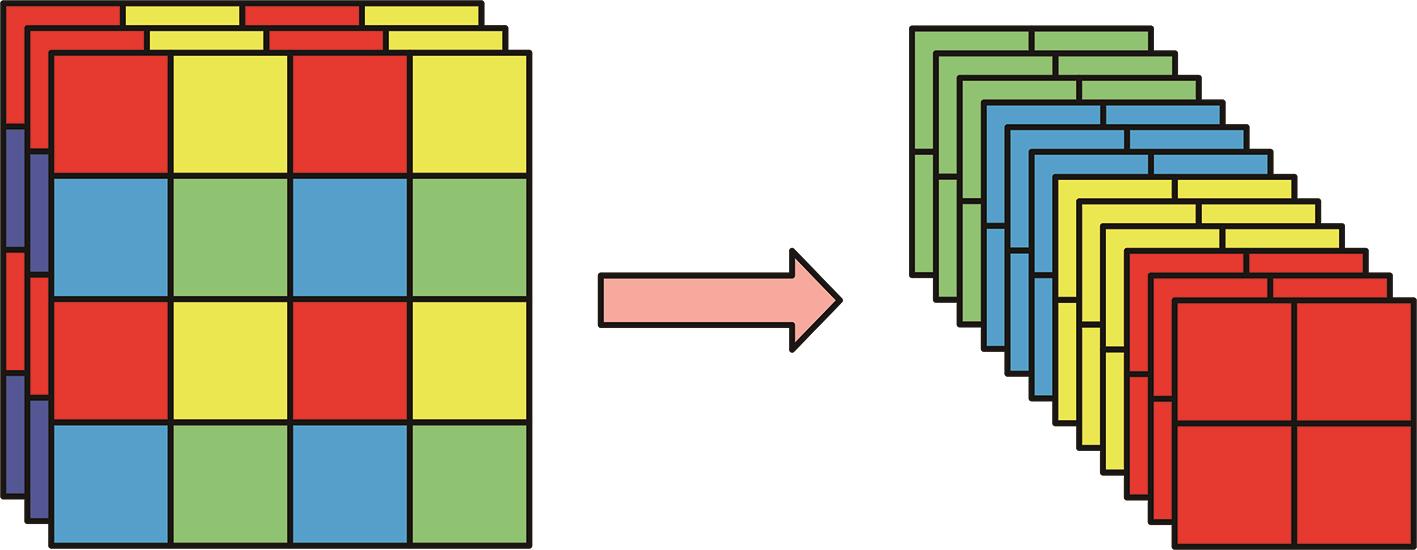

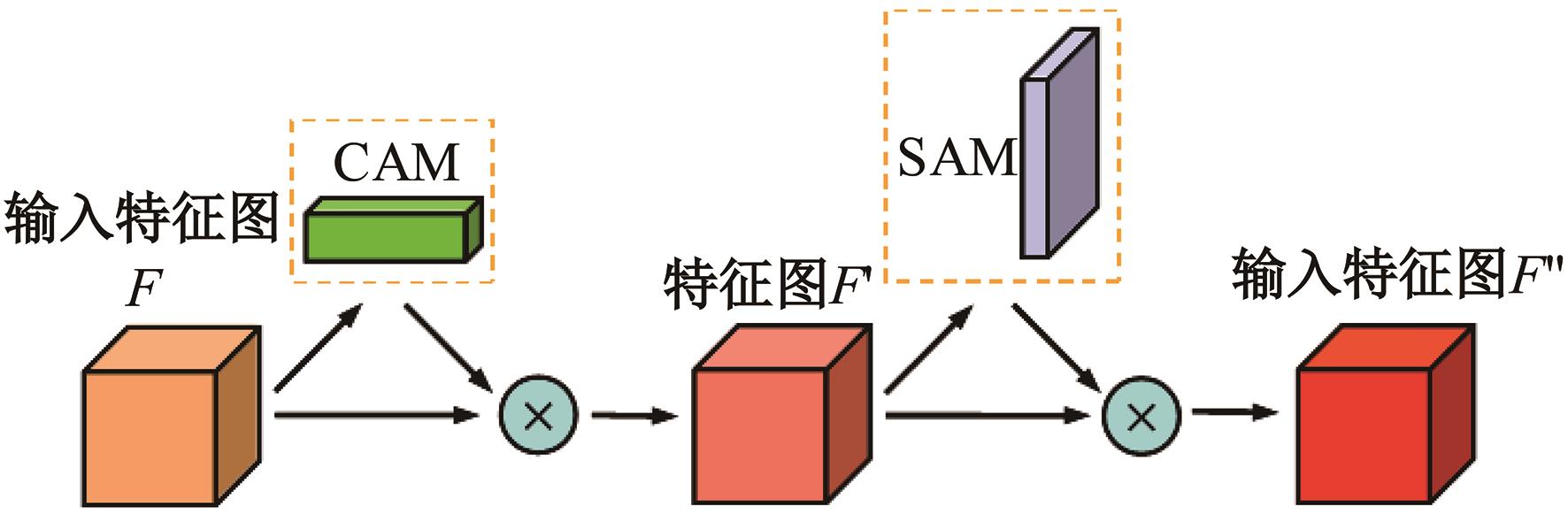

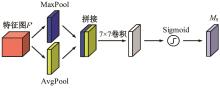

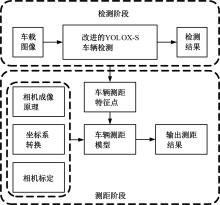

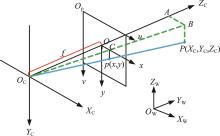

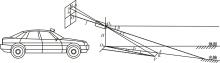

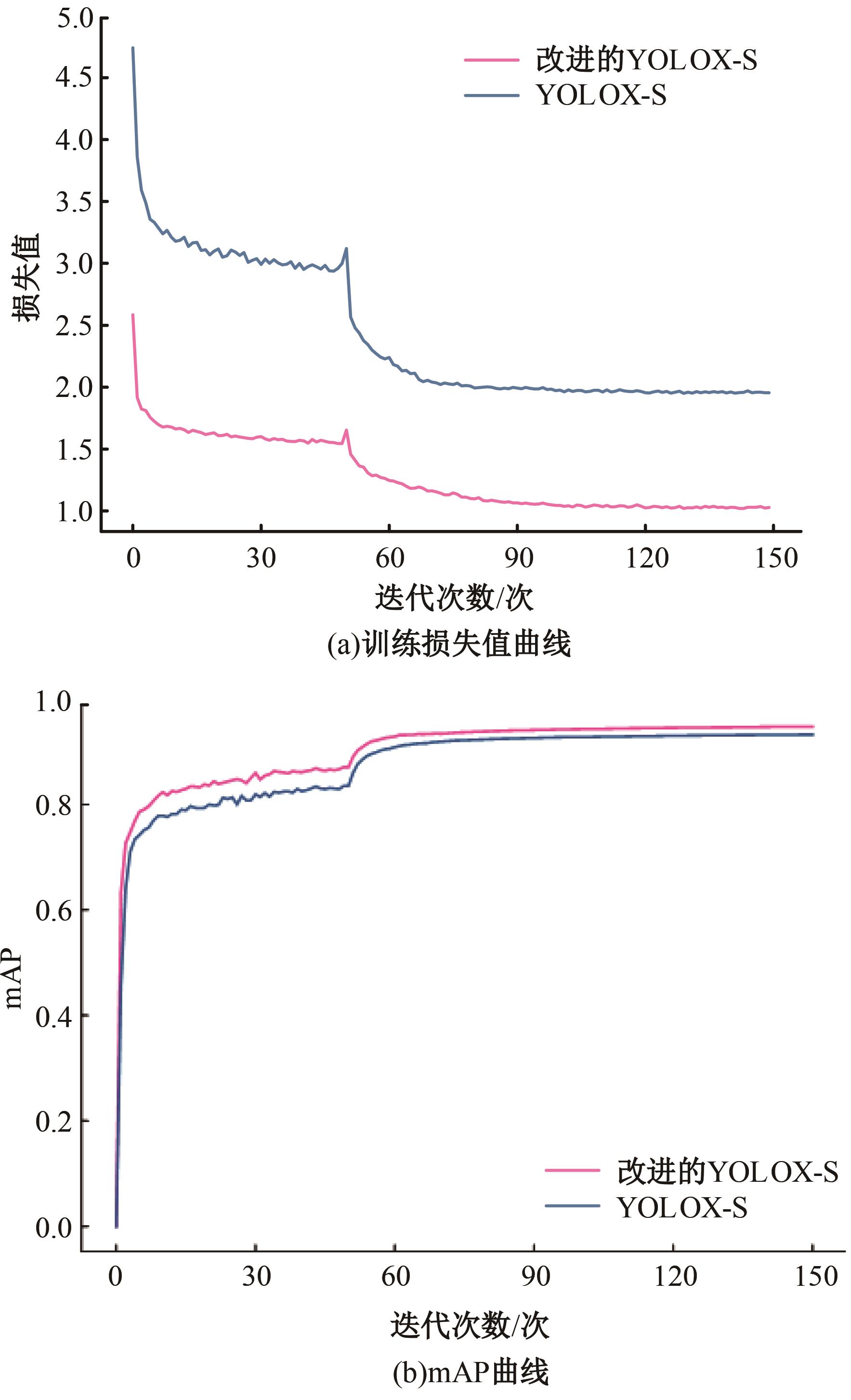

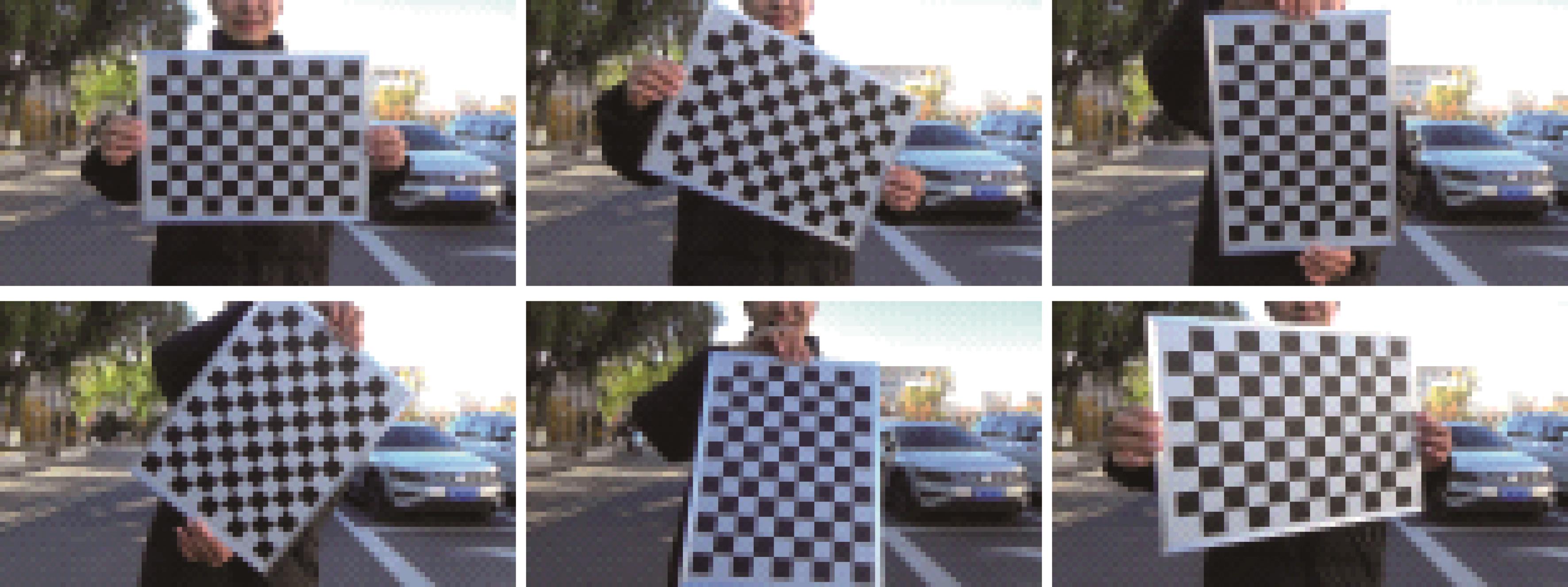

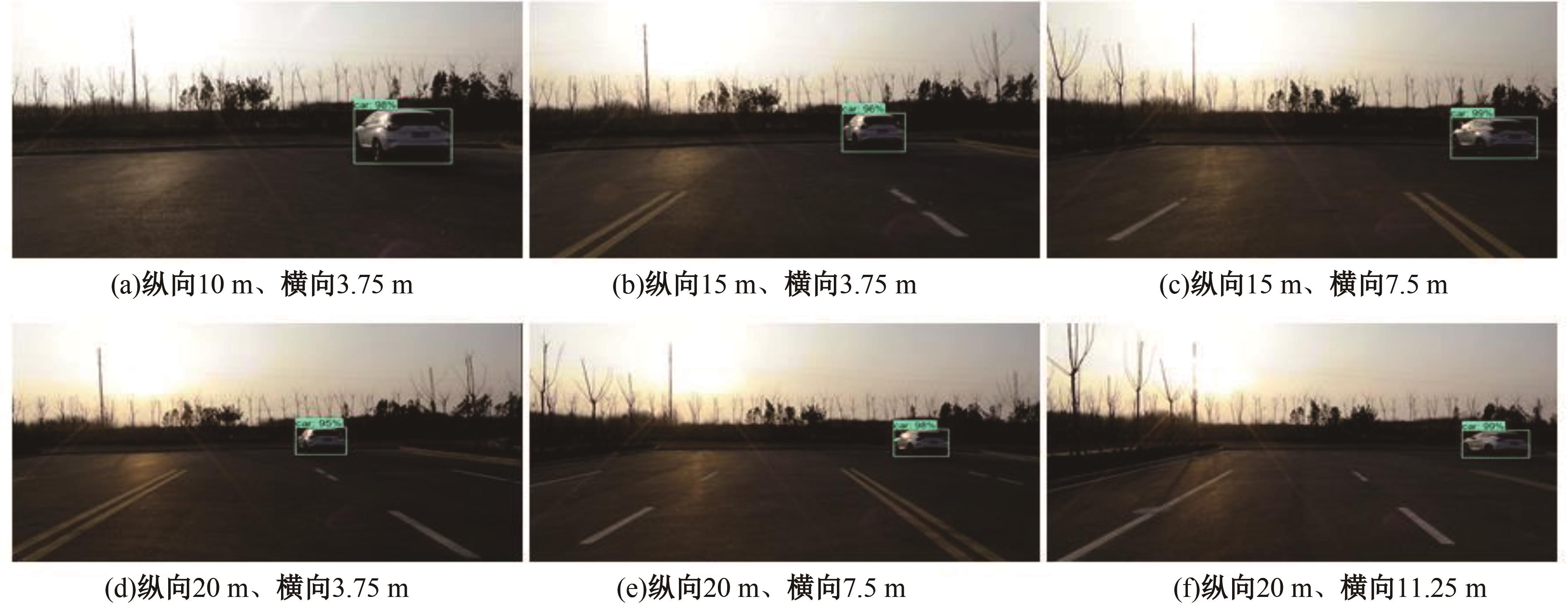

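To improve vehicle detection accuracy and ranging stability in driving environments, a deep-learning-based method for vehicle target detection and ranging is proposed. The YOLOX-S algorithm serves as the detection framework and is improved in two ways: a convolutional block attention module (CBAM) is introduced to strengthen the network's feature representation, and the confidence loss function is replaced with Focal Loss, which down-weights easy samples during training and increases the attention paid to positive samples. A vehicle ranging model is established from the imaging principle of the on-board camera and the associated geometric relationships; it takes the coordinates of the ranging feature point and the camera intrinsic parameters as input and outputs the ranging result. The improved YOLOX-S algorithm is trained and evaluated on the self-built Tlab dataset and the BDD 100K dataset, and a static ranging test scene is set up to validate the ranging model. The experimental results show that the improved YOLOX-S algorithm reaches a detection speed of 70.14 frames/s on the experimental datasets, and that precision, recall, F1 score, and mAP increase by 0.86%, 1.32%, 1.09%, and 1.54%, respectively, compared with the original algorithm. Within a measurement range of 50 m longitudinally and 11.25 m laterally, the average ranging error stays within 3.20%. The proposed method therefore meets the real-time requirement for vehicle detection while providing good ranging accuracy and stability.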
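The abstract names Focal Loss as the replacement for the confidence loss. As a minimal sketch of how that loss down-weights easy samples, the function below implements the standard binary focal loss, FL(p_t) = -α_t (1 - p_t)^γ log(p_t), in plain Python. The function names and the defaults `alpha=0.25` and `gamma=2.0` are assumptions chosen for illustration (they are the values commonly used with this loss), not settings reported in this abstract, and the code is not the authors' implementation.

```python
import math


def binary_focal_loss(p, y, alpha=0.25, gamma=2.0, eps=1e-12):
    """Focal loss for one predicted confidence p in (0, 1) and a label y in {0, 1}.

    alpha and gamma are illustrative defaults (assumed, not taken from the abstract).
    """
    # p_t is the probability the model assigns to the true class.
    p_t = p if y == 1 else 1.0 - p
    # alpha_t balances positive samples against negative samples.
    alpha_t = alpha if y == 1 else 1.0 - alpha
    # Clamp to avoid log(0) for saturated predictions.
    p_t = min(max(p_t, eps), 1.0)
    # (1 - p_t) ** gamma shrinks the loss of well-classified (easy) samples.
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def binary_cross_entropy(p, y, eps=1e-12):
    """Plain cross-entropy on the same inputs, for comparison."""
    p_t = p if y == 1 else 1.0 - p
    return -math.log(min(max(p_t, eps), 1.0))


if __name__ == "__main__":
    # Compare both losses for an easy, a moderate, and a hard positive sample.
    for p in (0.95, 0.6, 0.1):
        print(p, binary_cross_entropy(p, 1), binary_focal_loss(p, 1))
```

With these assumed defaults, a positive sample predicted at 0.95 has its cross-entropy scaled by 0.25 × 0.05² ≈ 0.0006, while one predicted at 0.1 is scaled by 0.25 × 0.9² ≈ 0.20, so hard samples dominate the training signal. Setting `alpha=1.0` and `gamma=0.0` recovers plain cross-entropy for positive samples.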

CLC number:

- U495

