Journal of Jilin University (Engineering and Technology Edition), 2025, Vol. 55, Issue (1): 93-104. doi: 10.13229/j.cnki.jdxbgxb.20230313

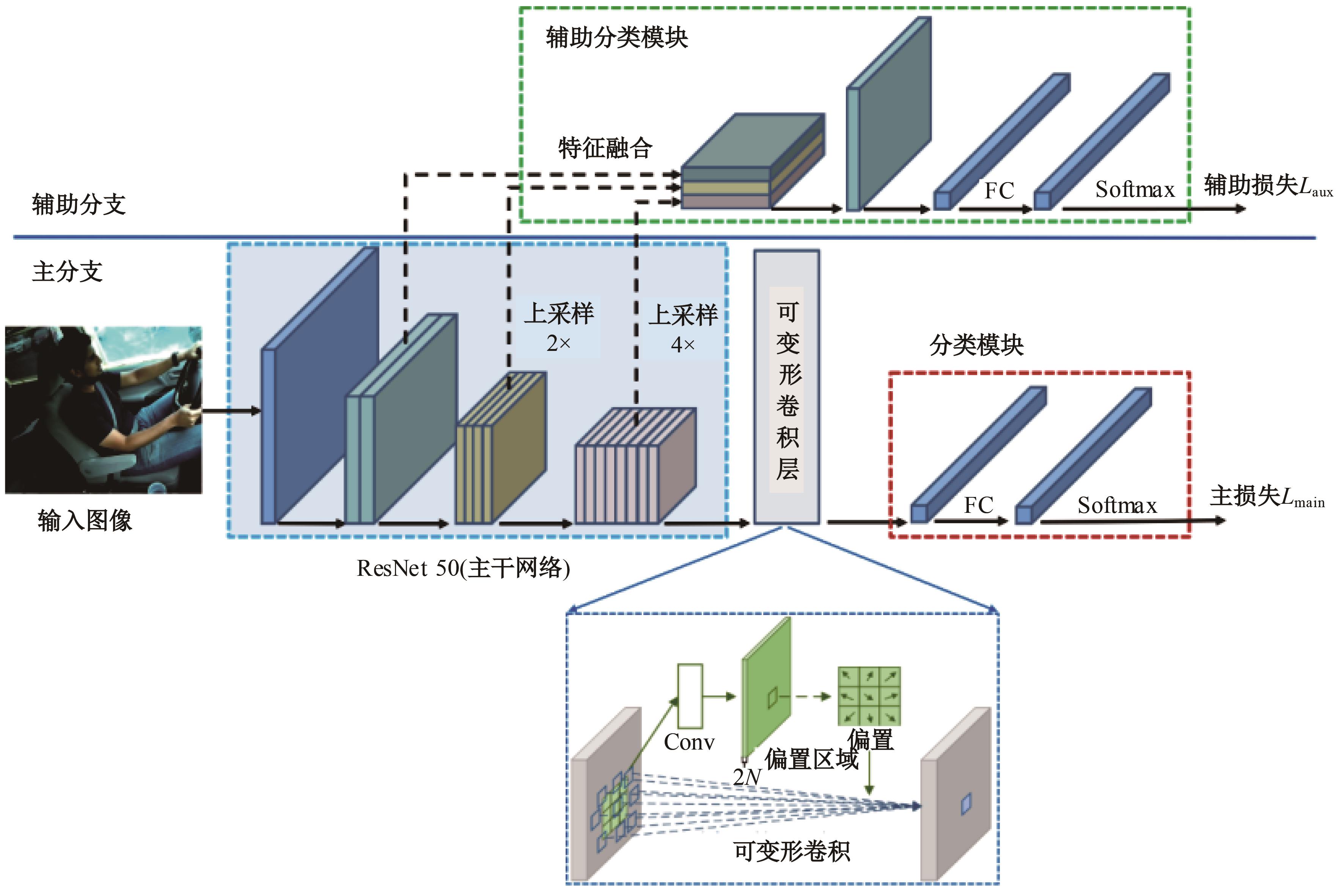

Driver behavior recognition method based on dual-branch and deformable convolutional neural networks

Hong-yu HU, Zheng-guang ZHANG, You QU, Mu-yu CAI, Fei GAO, Zhen-hai GAO

- State Key Laboratory of Automotive Simulation and Control,Jilin University,Changchun 130022,China

Abstract:

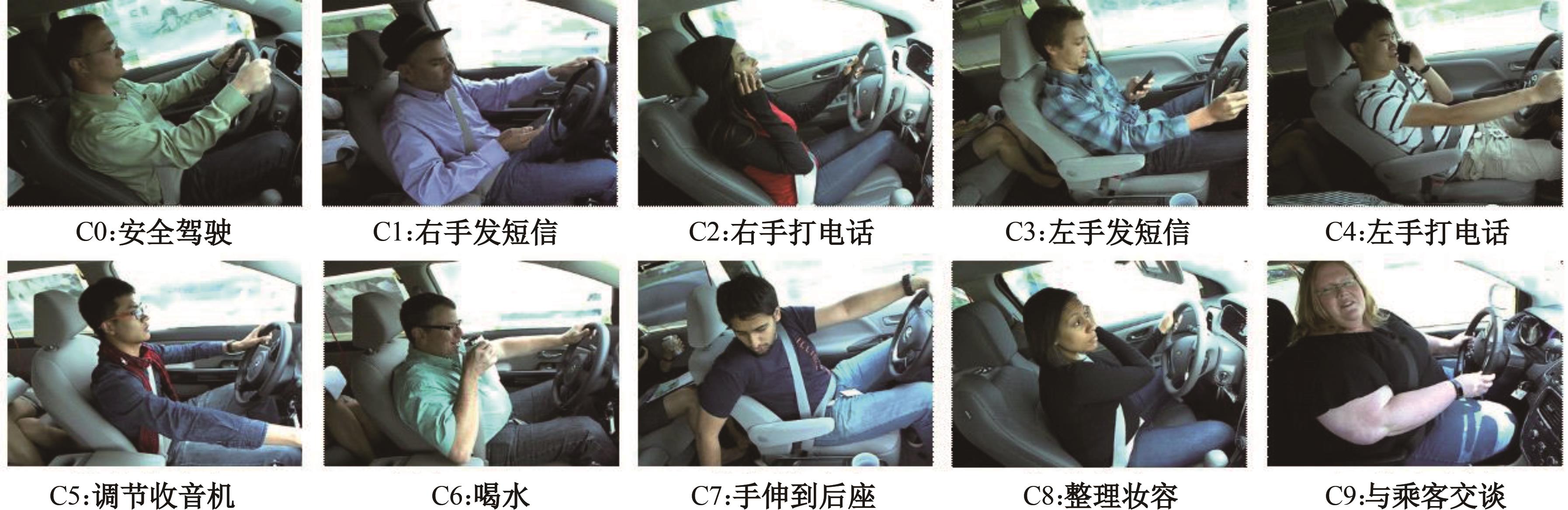

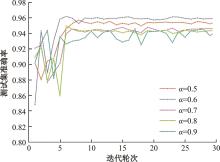

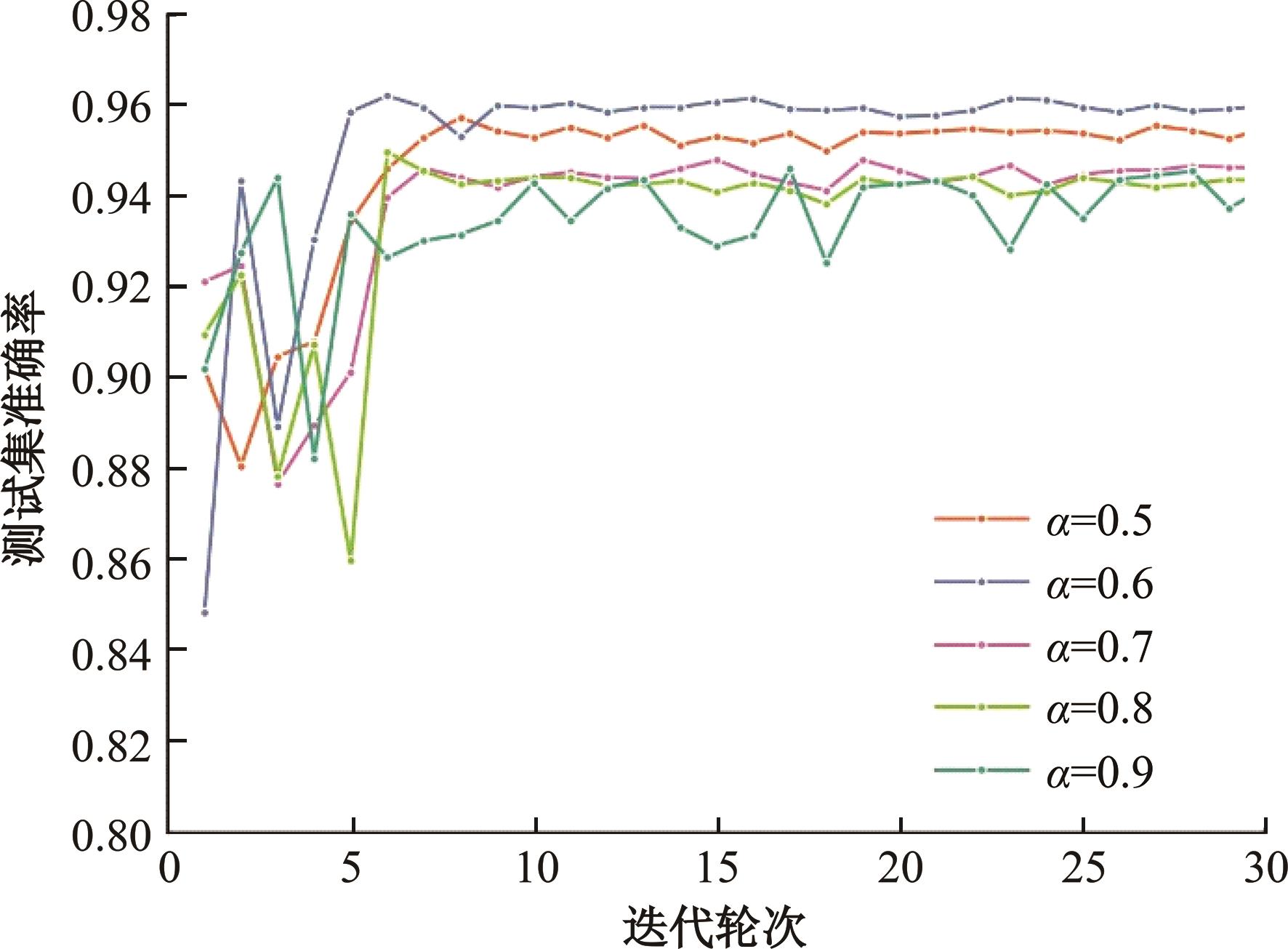

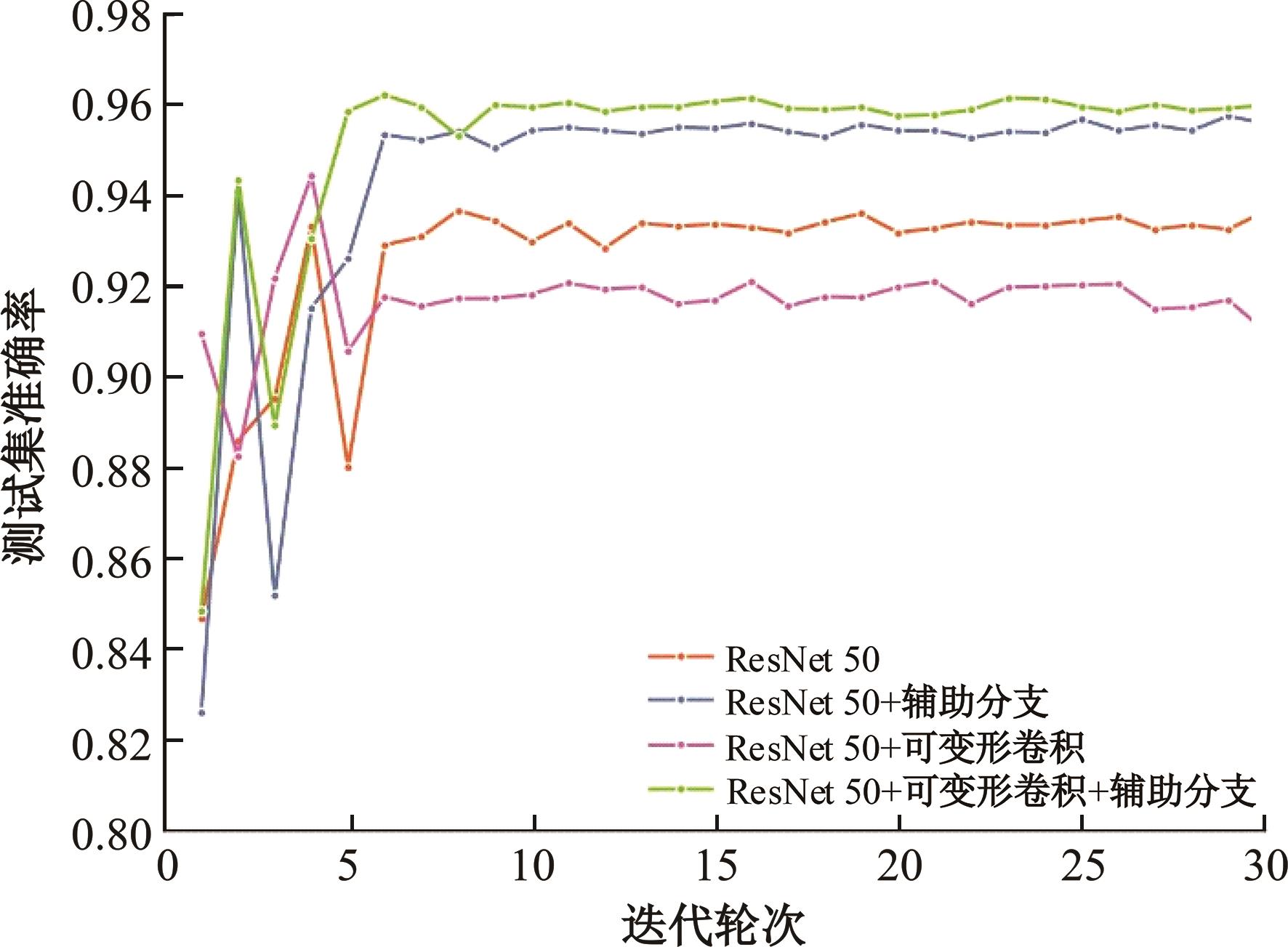

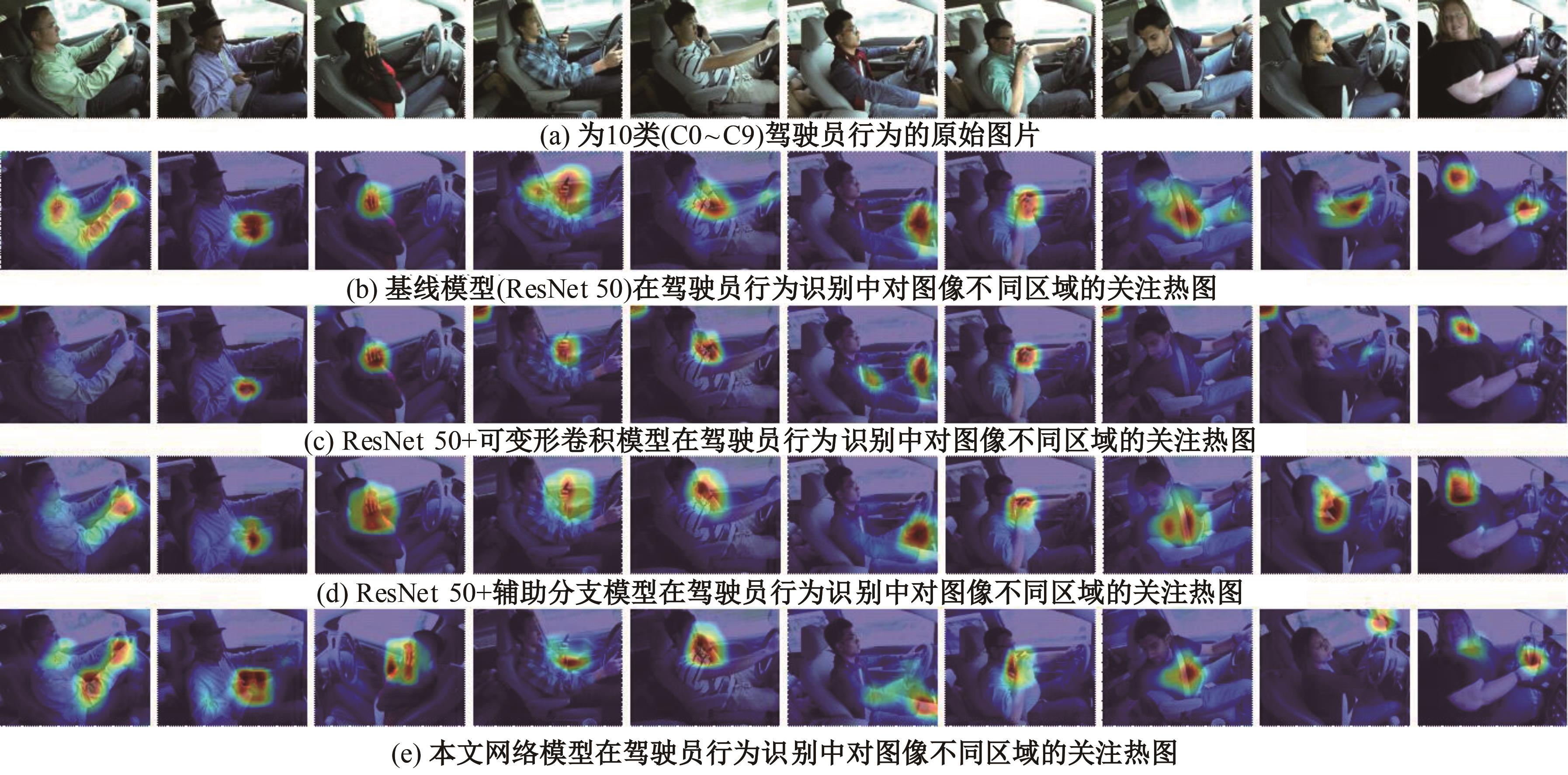

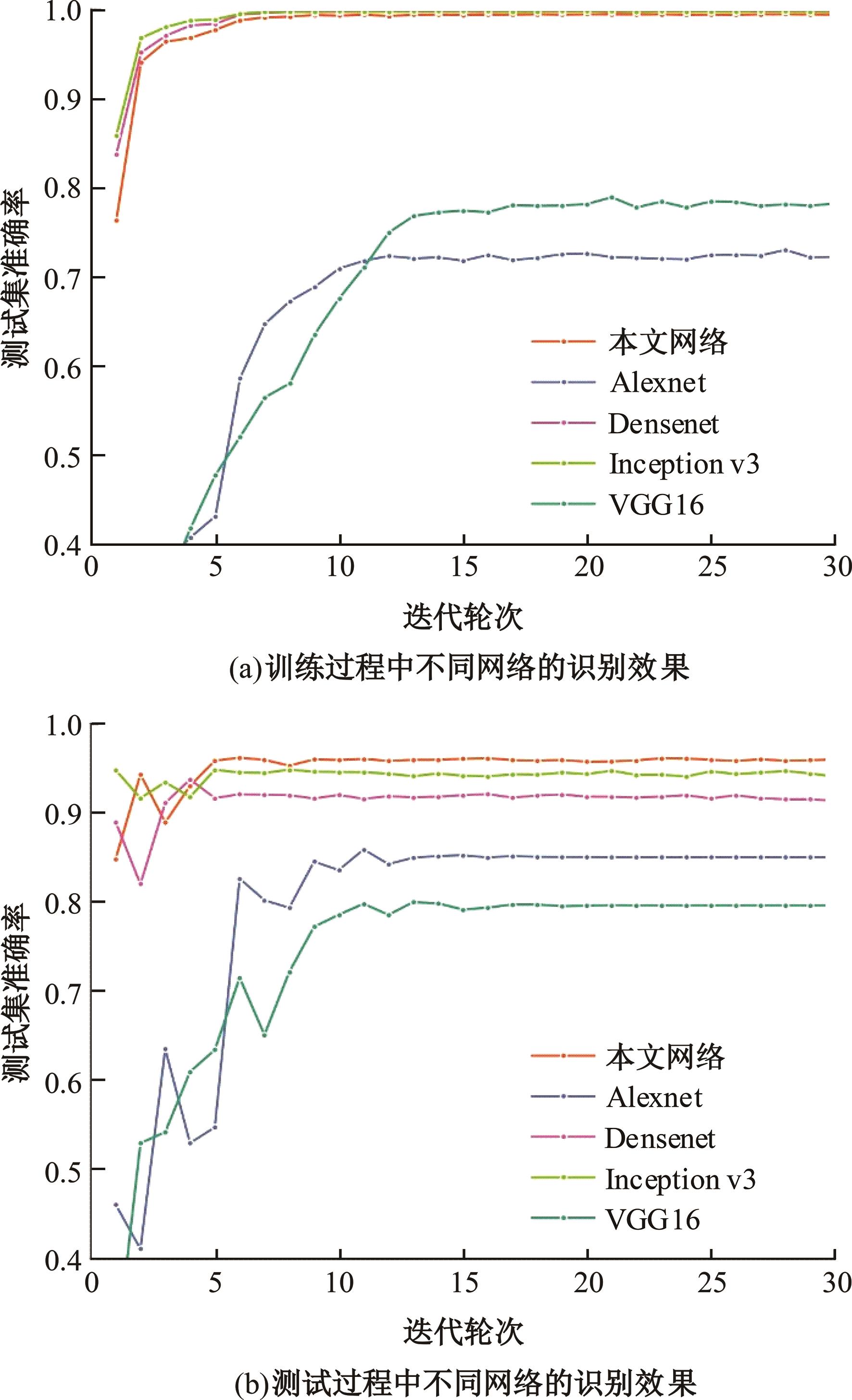

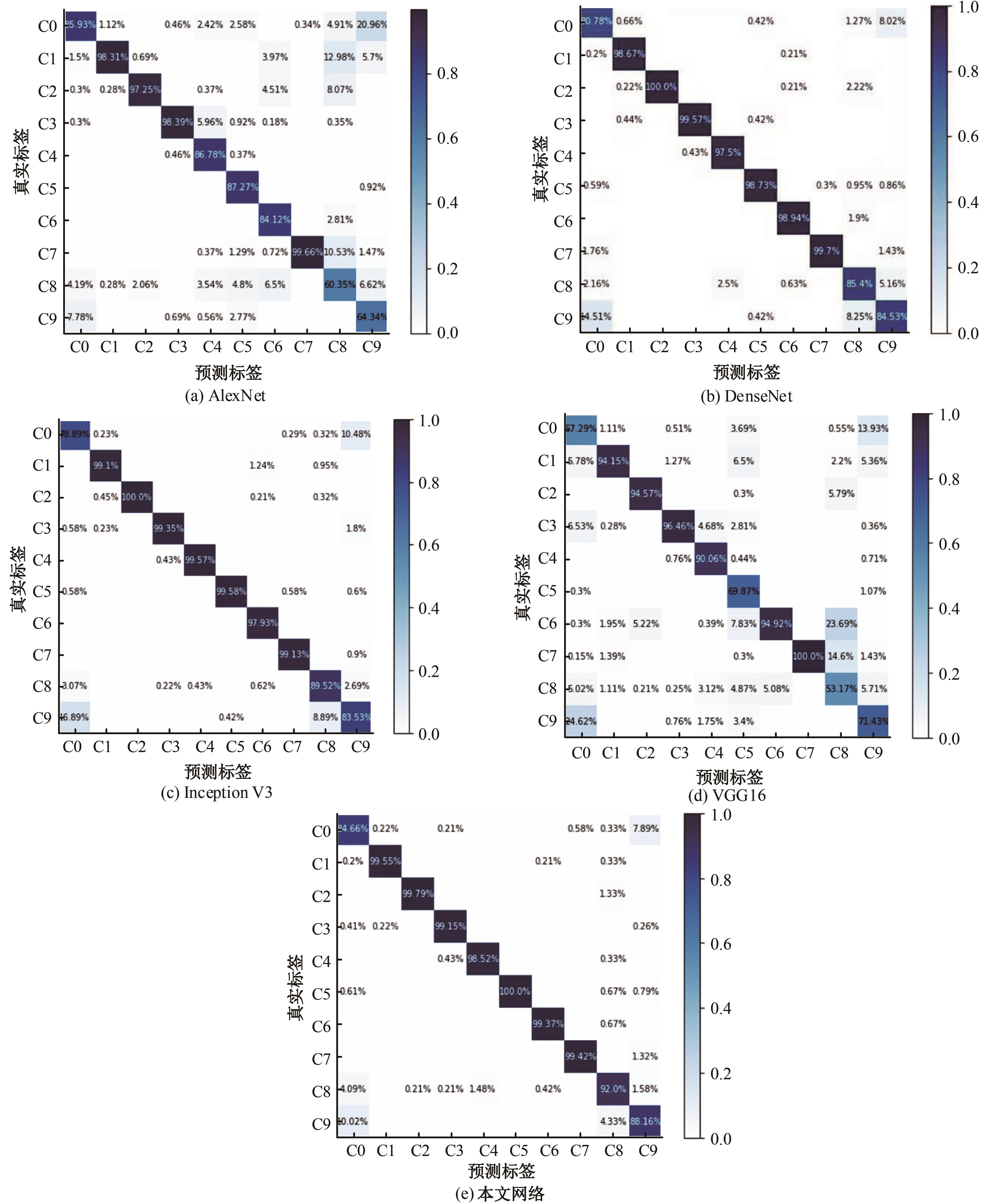

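For the task of recognizing driver behavior inside the vehicle cabin, this paper proposes a recognition method based on a dual-branch neural network. The main branch uses ResNet-50 as the backbone for feature extraction and applies deformable convolution so that the model can adapt to changes in the driver's shape and position within the image. During gradient backpropagation, the auxiliary branch helps update the backbone parameters, enabling the backbone to extract features that are more useful for driver behavior recognition and thereby improving recognition performance. Ablation and comparison experiments on the public State Farm dataset show that the proposed model reaches a recognition accuracy of 96.23% and recognizes easily confused behavior categories more effectively. The results are of significance for understanding driver behavior in the vehicle cabin and for ensuring driving safety.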
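The abstract credits deformable convolution with letting the model cope with changes in the driver's shape and position. The general idea (Dai et al., 2017) can be sketched in pure Python: each kernel tap samples the input at its regular grid position plus a fractional offset, read out by bilinear interpolation. This is a minimal illustration of the generic technique, not the authors' implementation, and it assumes a single channel, stride 1, zero padding, and offsets supplied by the caller. In a real network the offsets would be predicted by an extra convolutional layer, and the abstract does not say where in ResNet-50 the operator is placed. The names `bilinear_sample`, `uniform_offsets` and `deformable_conv2d` are hypothetical.

```python
import math
from typing import List, Tuple

# Sketch of generic deformable convolution (Dai et al., 2017), NOT the paper's code.
# Assumptions: single channel, stride 1, 'same' output size, zero padding,
# offsets passed in by the caller instead of being predicted by a conv layer.

Grid = List[List[float]]
# offsets[i][j][t] = (dy, dx): extra shift for kernel tap t at output pixel (i, j)
Offsets = List[List[List[Tuple[float, float]]]]


def bilinear_sample(img: Grid, y: float, x: float) -> float:
    """Bilinearly interpolate img at a fractional (y, x); outside pixels count as 0."""
    h, w = len(img), len(img[0])
    y0, x0 = math.floor(y), math.floor(x)
    dy, dx = y - y0, x - x0
    total = 0.0
    for yy, wy in ((y0, 1.0 - dy), (y0 + 1, dy)):
        for xx, wx in ((x0, 1.0 - dx), (x0 + 1, dx)):
            if 0 <= yy < h and 0 <= xx < w:  # zero padding outside the image
                total += wy * wx * img[yy][xx]
    return total


def uniform_offsets(h: int, w: int, k: int, dy: float, dx: float) -> Offsets:
    """Hypothetical helper: the same (dy, dx) shift for every tap at every pixel."""
    return [[[(dy, dx)] * (k * k) for _ in range(w)] for _ in range(h)]


def deformable_conv2d(img: Grid, kernel: Grid, offsets: Offsets) -> Grid:
    """Single-channel deformable convolution, stride 1, 'same' output size.

    Each kernel tap reads the input at its regular grid position plus a
    per-pixel offset, so the sampling grid can bend toward the object.
    With all offsets zero this reduces to an ordinary convolution
    (cross-correlation form) with zero padding.
    """
    h, w = len(img), len(img[0])
    k = len(kernel)
    r = k // 2
    # Regular sampling grid, e.g. (-1, -1) ... (1, 1) for a 3x3 kernel
    taps = [(m - r, n - r) for m in range(k) for n in range(k)]
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for t, (pm, pn) in enumerate(taps):
                dy, dx = offsets[i][j][t]
                sample = bilinear_sample(img, i + pm + dy, j + pn + dx)
                acc += kernel[pm + r][pn + r] * sample
            out[i][j] = acc
    return out
```

For example, with a 3×3 kernel that is 1 only at its centre, an offset of (0, 1) at every tap makes each output pixel copy its right-hand neighbour, while (0, 0.5) averages the pixel with that neighbour. Bilinear interpolation is what makes fractional offsets meaningful and lets gradients flow to them during training. The auxiliary branch is not modeled here, since the abstract does not describe its structure.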

CLC number:

- U471.3

