Journal of Jilin University (Engineering and Technology Edition), 2023, Vol. 53, Issue 10: 2923-2931. doi: 10.13229/j.cnki.jdxbgxb.20211299

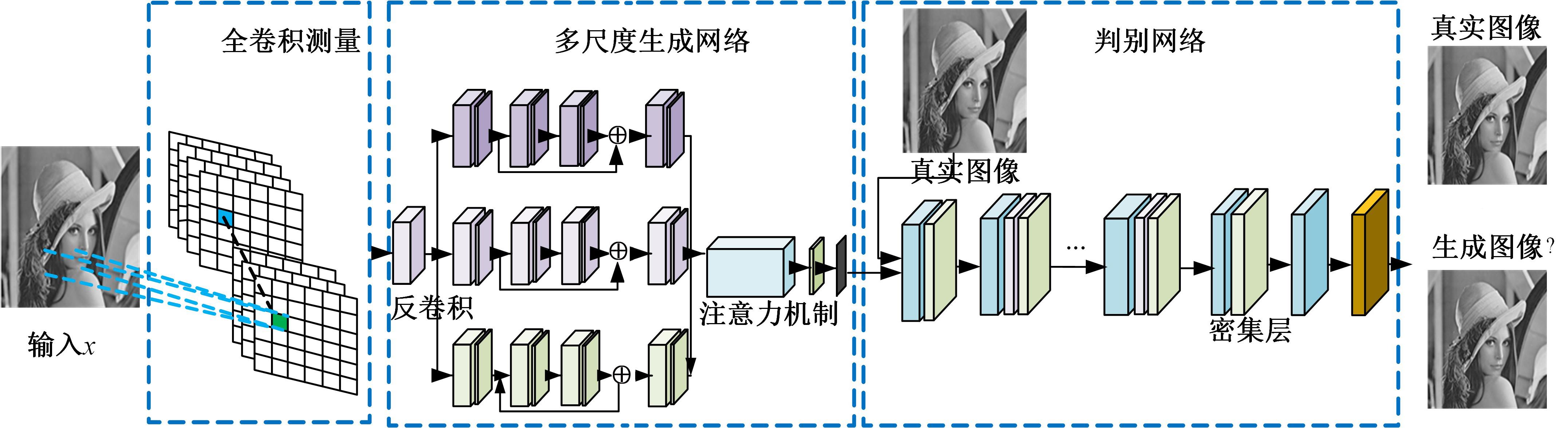

Multi-scale generative adversarial network for image compressed sensing and reconstruction algorithm

Chun-yan ZENG1, Kang YAN1, Zhi-feng WANG2, Zheng-hui WANG1

- 1. Hubei Key Laboratory for High-efficiency Utilization of Solar Energy and Operation Control of Energy Storage System, Hubei University of Technology, Wuhan 430068, China
- 2. School of Education Information Technology, Central China Normal University, Wuhan 430079, China

Abstract:

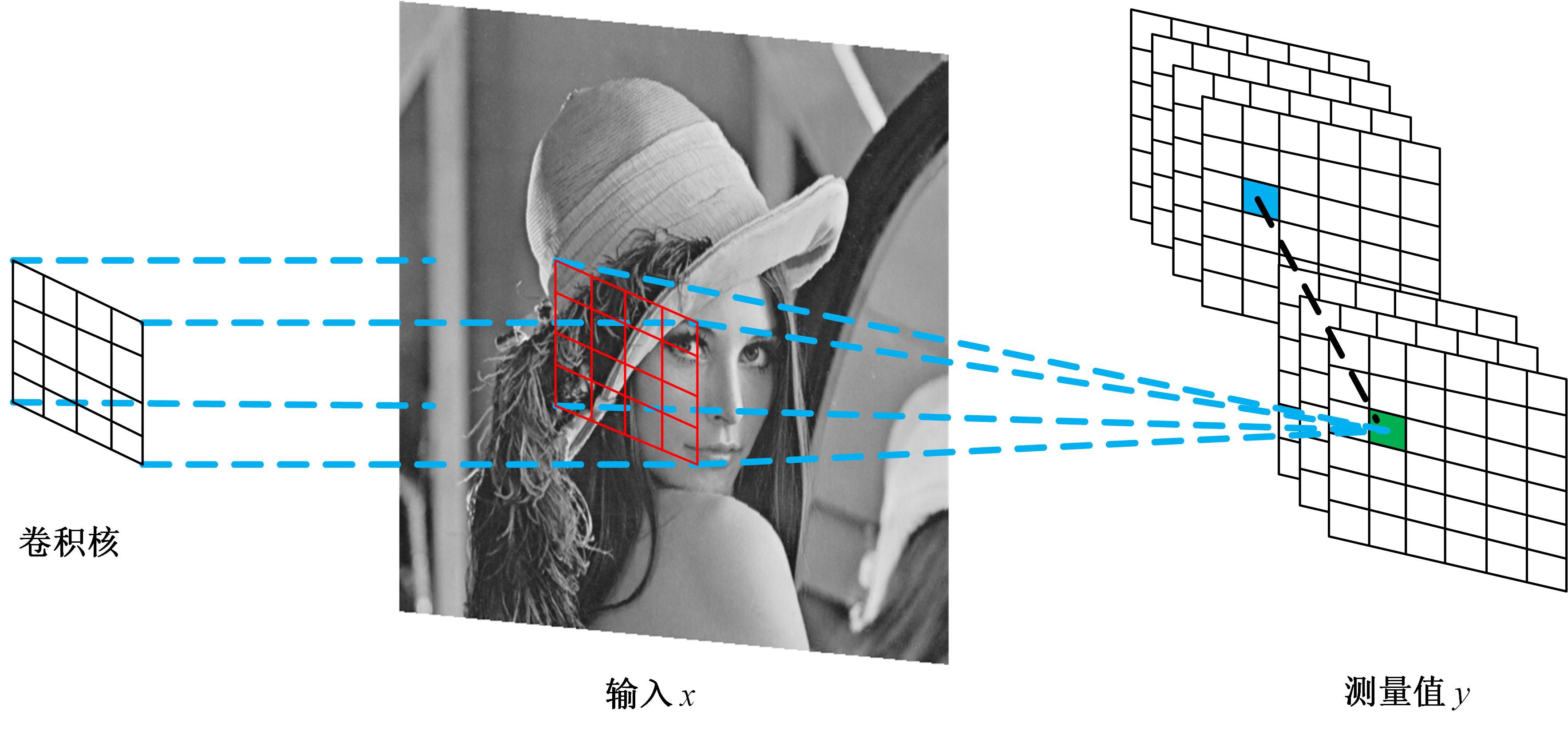

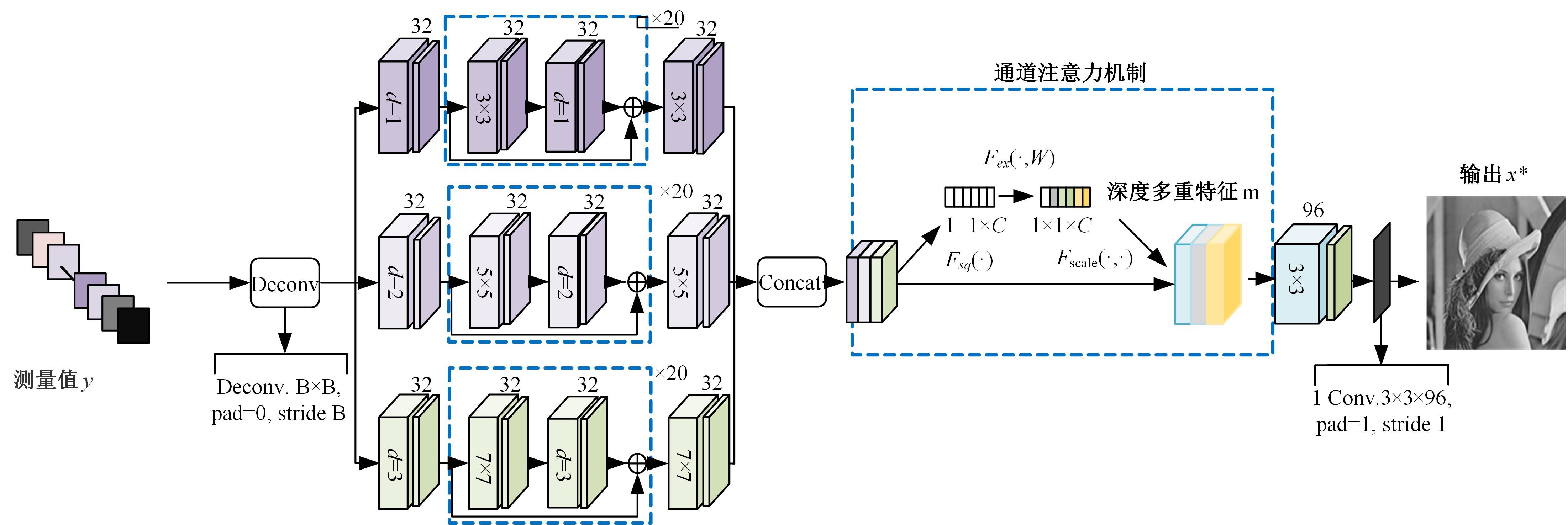

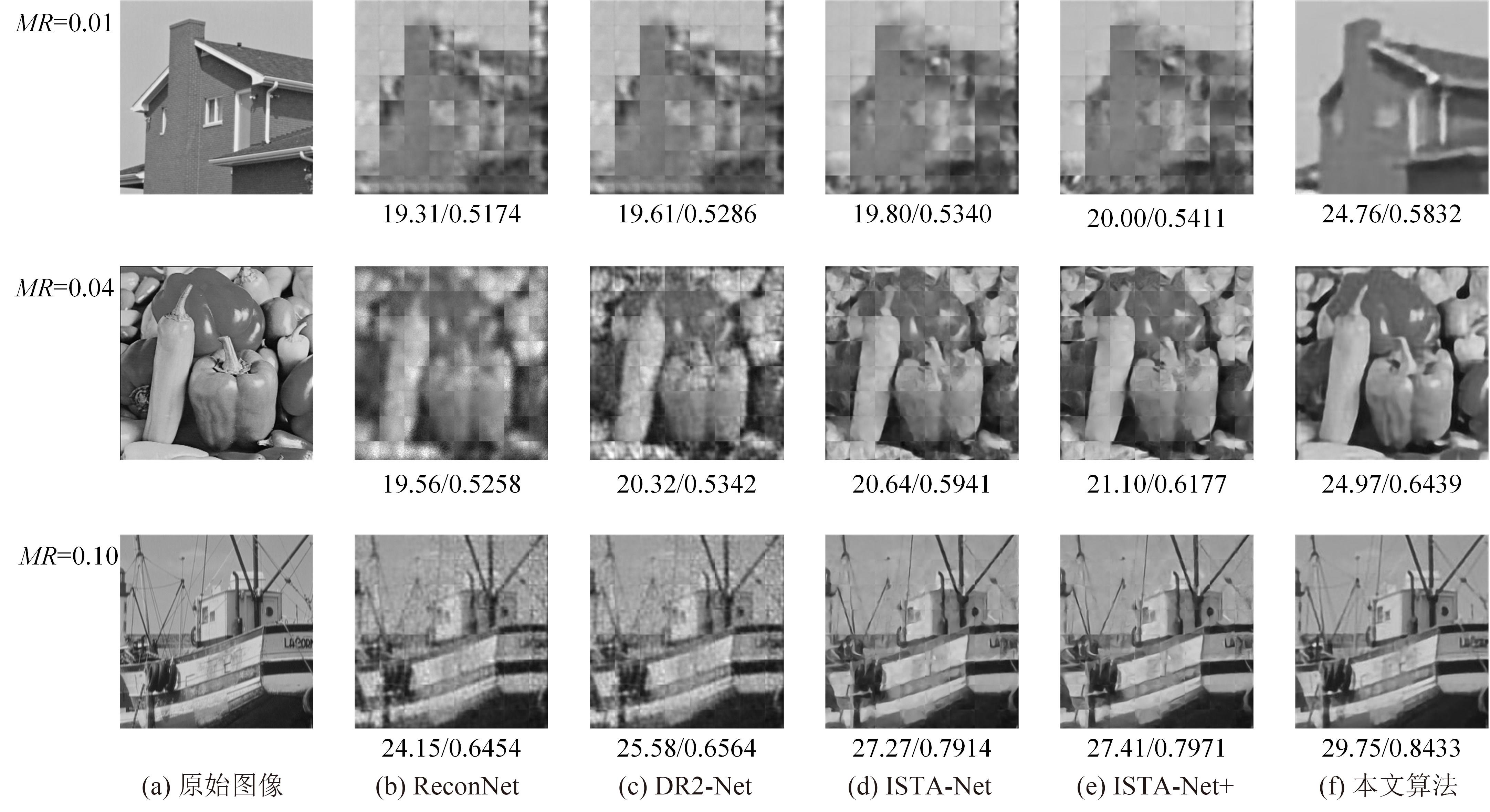

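Existing deep-learning-based compressed sensing reconstruction networks have three limitations that constrain further gains in reconstruction quality: single-channel reconstruction networks do not fully exploit the multi-scale features of images, the reconstruction network lacks a feedback mechanism, and the reconstruction network is not coupled to the measurement matrix. To address these problems, an image compressed sensing and reconstruction algorithm based on a multi-scale generative adversarial network is proposed. The algorithm first extracts multi-scale image information through multi-channel residual blocks, then adds a discriminator network that provides feedback to the multi-scale generator network, and finally trains a fully convolutional measurement network jointly with the reconstruction network to improve reconstruction quality. Experimental results show that, compared with ISTA-Net+, the proposed method improves reconstruction accuracy by 2.02 to 4.09 dB at three sampling rates.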
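To put the reported 2.02 to 4.09 dB gain in perspective, the sketch below assumes the figures refer to peak signal-to-noise ratio (PSNR), which the abstract does not state explicitly. Under that assumption, a gain of g dB corresponds to the mean squared error shrinking by a factor of 10^(g/10), roughly 1.6 for 2.02 dB and 2.6 for 4.09 dB. The function names and the pixel values are hypothetical and chosen only for illustration; they are not taken from the paper.

```python
import math


def psnr(reference, reconstruction, peak=255.0):
    """PSNR in dB between two equally sized sequences of pixel values."""
    if len(reference) != len(reconstruction):
        raise ValueError("inputs must have the same length")
    # Mean squared error over all pixels.
    mse = sum((r - x) ** 2 for r, x in zip(reference, reconstruction)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs: PSNR is unbounded
    return 10.0 * math.log10(peak ** 2 / mse)


def mse_ratio_for_gain(gain_db):
    """Factor by which the MSE shrinks for a given PSNR gain in dB."""
    return 10.0 ** (gain_db / 10.0)


# Hypothetical flattened pixel values (illustration only, not data from the paper).
reference = [52, 55, 61, 59, 79, 61, 76, 61]
baseline = [50, 58, 60, 63, 75, 64, 73, 60]
improved = [51, 56, 61, 61, 77, 62, 75, 61]

print(f"baseline PSNR: {psnr(reference, baseline):.2f} dB")
print(f"improved PSNR: {psnr(reference, improved):.2f} dB")

# MSE reduction implied by the gains quoted in the abstract (PSNR assumed).
for gain in (2.02, 4.09):
    print(f"{gain} dB gain -> MSE reduced by a factor of {mse_ratio_for_gain(gain):.2f}")
```

Because PSNR is logarithmic, equal dB gains at different sampling rates imply the same relative reduction in error, not the same absolute reduction.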

CLC number:

- TN911.73

