Journal of Jilin University (Engineering and Technology Edition) ›› 2022, Vol. 52 ›› Issue (8): 1857-1864. doi: 10.13229/j.cnki.jdxbgxb20211096

• Computer Science and Technology •

Multi-focus image fusion algorithm based on pixel-level convolutional neural network

Xuan-jing SHEN1,2, Xue-feng ZHANG1,2, Yu WANG1,2, Yu-bo JIN3

- 1. College of Computer Science and Technology, Jilin University, Changchun 130012, China

2. Key Laboratory of Symbolic Computation and Knowledge Engineering of Ministry of Education, Jilin University, Changchun 130012, China

3. Changchun Expert Information Technology Co., Ltd., Changchun 130012, China

Abstract:

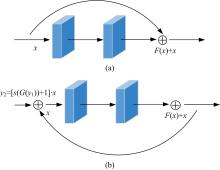

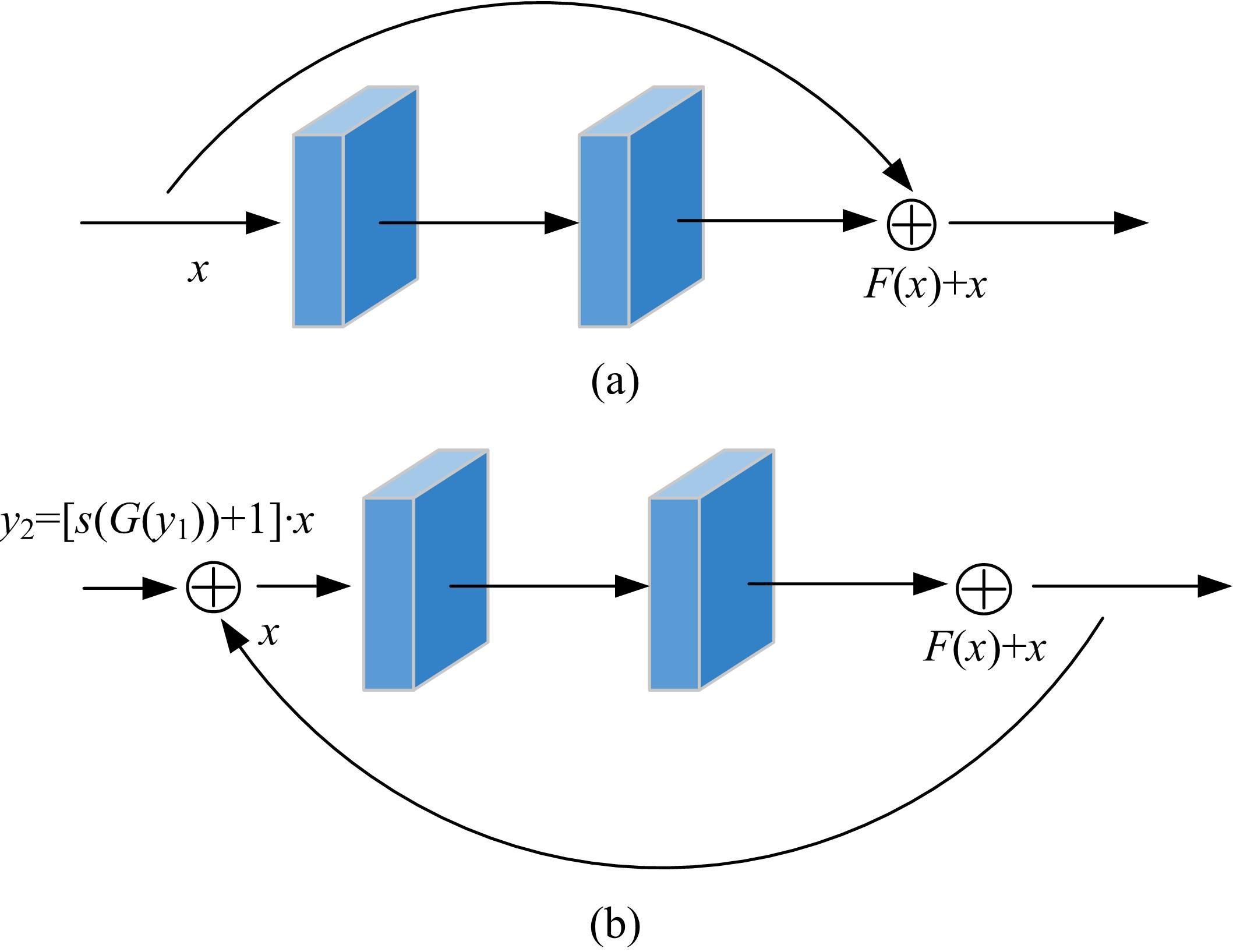

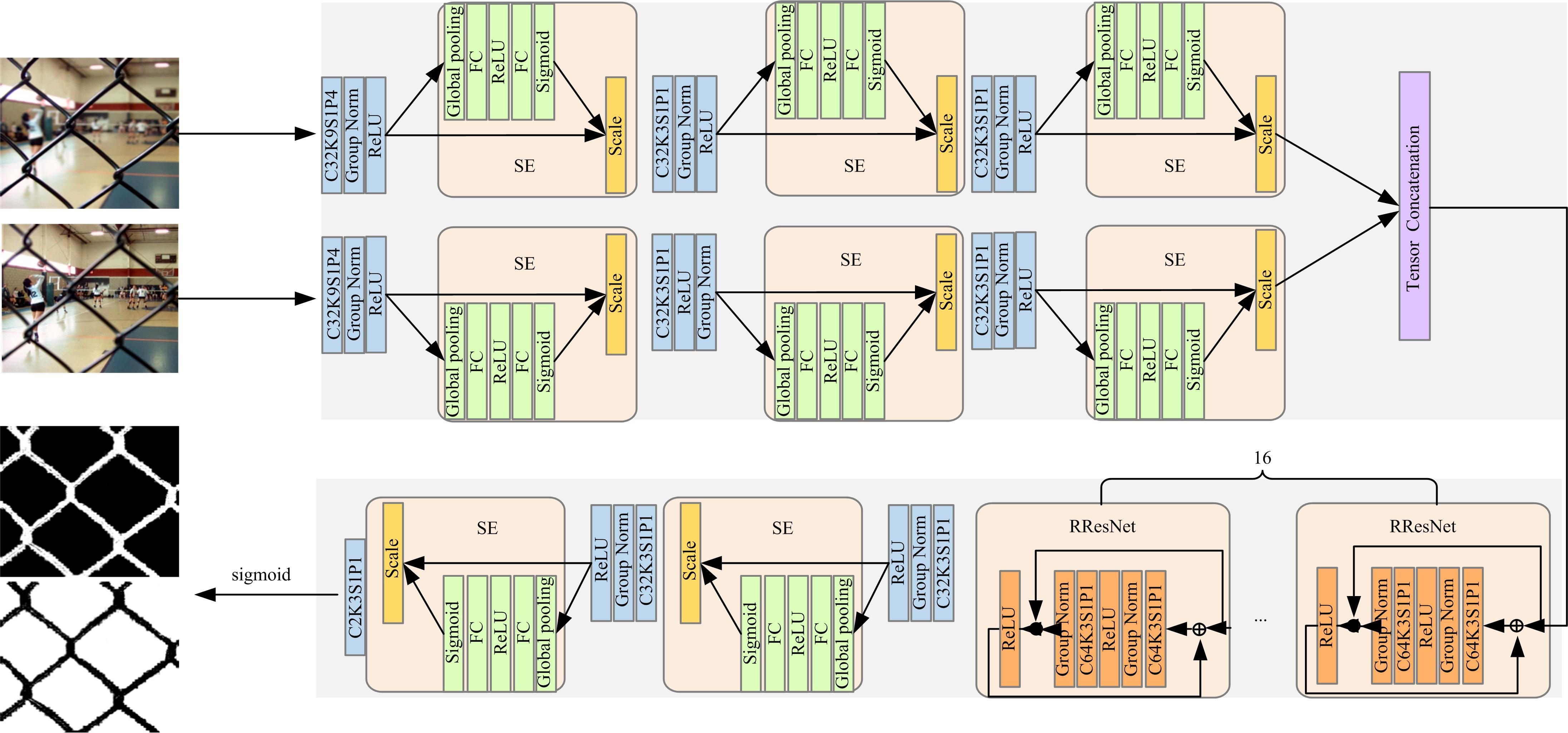

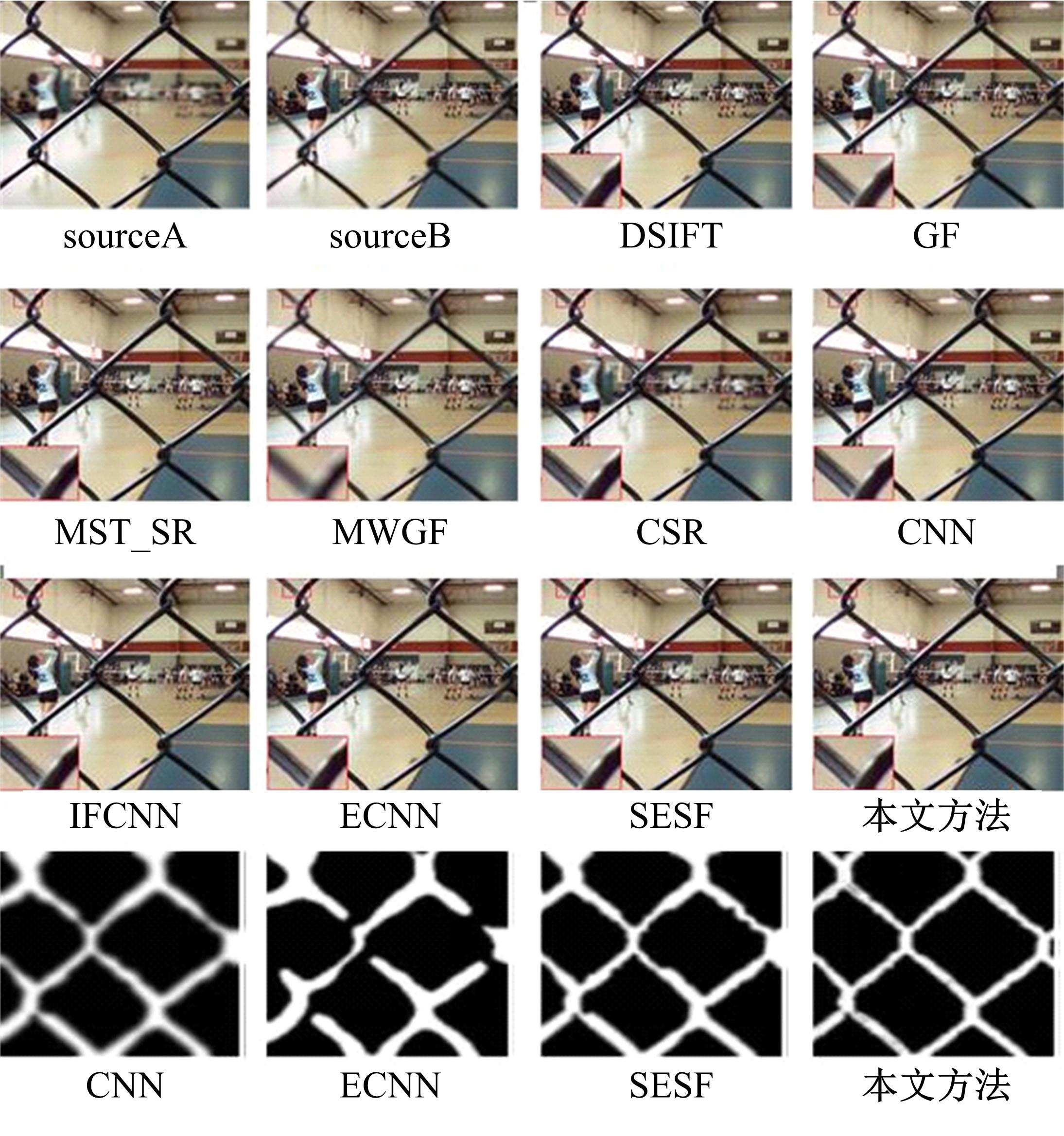

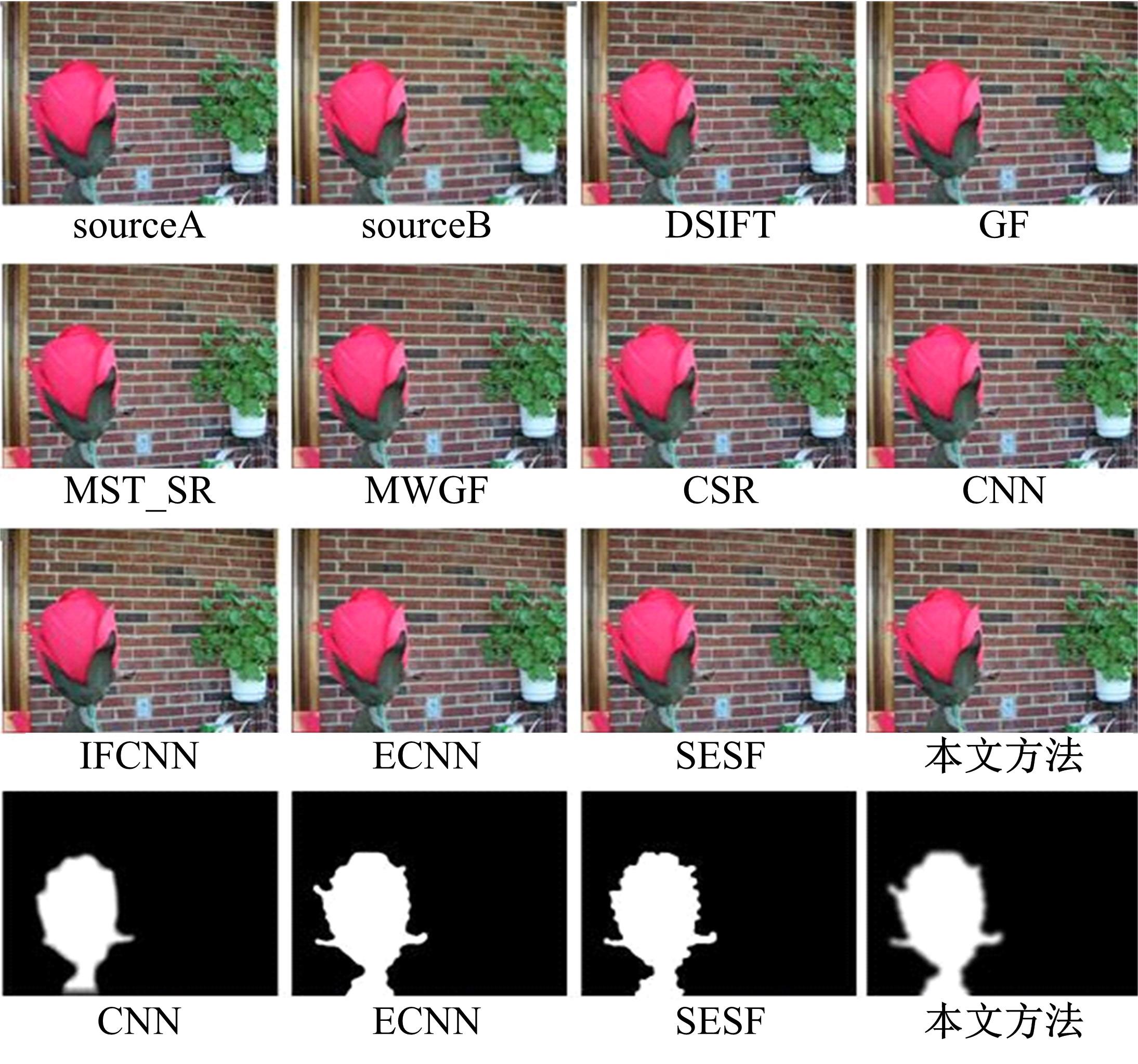

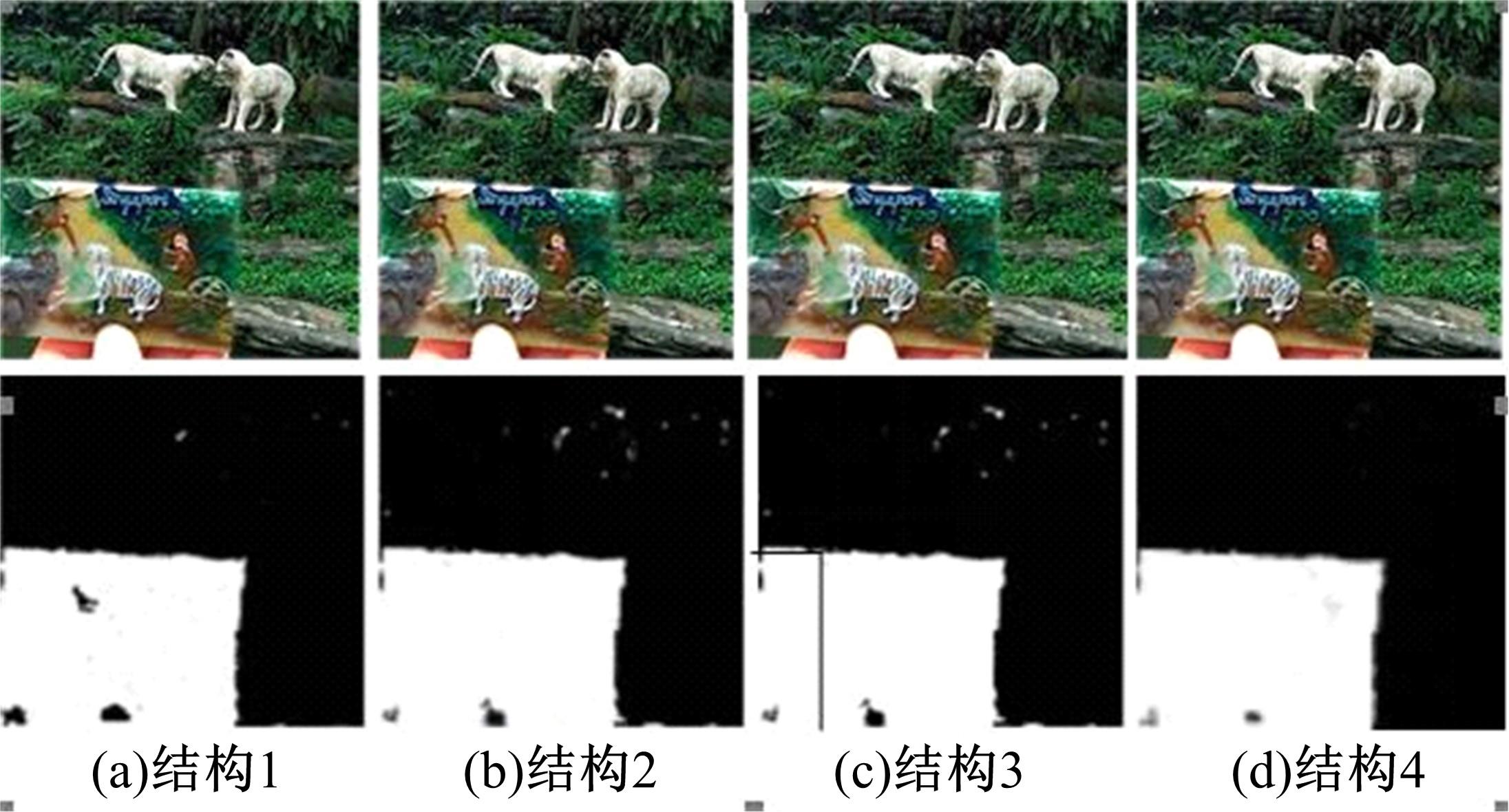

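A convolutional neural network (CNN) for multi-focus image fusion is proposed. Existing CNN-based fusion methods decompose the source images into small patches and then use a classifier to estimate whether each patch is in focus; the proposed method instead converts the whole image directly into a decision map. This pixel-level regression strategy makes full use of complementary information and resolves the difficulty of estimating the degree of blur around the boundary between focused and defocused regions. In addition, a ringed residual network (RResNet) module is applied to image fusion to extract more semantic information from the focused regions. The structural similarity index (SSIM) is used to measure the structural similarity between the generated fused image and the reference image in order to improve the quality of the fused image, and an edge-preserving loss function is adopted to retain more gradient information from the source images. Experimental results show that the proposed method outperforms other fusion algorithms in both subjective visual quality and objective evaluation.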
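The abstract does not give the fusion formula or the exact loss terms, so the following is only a minimal sketch under stated assumptions. It assumes the decision map holds per-pixel weights in [0, 1] and that the fused image is the usual weighted blend of the two sources. The edge term is a generic L1 gradient penalty used as a stand-in, not the paper's edge-preserving loss. The names `fuse_with_decision_map`, `gradient_magnitude`, and `edge_loss` are hypothetical.

```python
def fuse_with_decision_map(img_a, img_b, decision_map):
    """Blend two grayscale images (lists of rows) pixel by pixel.

    Assumption: a decision value of 1 means "take the pixel from img_a",
    0 means "take it from img_b"; values in between blend the two.
    """
    fused = []
    for row_a, row_b, row_d in zip(img_a, img_b, decision_map):
        fused_row = []
        for a, b, d in zip(row_a, row_b, row_d):
            d = min(max(d, 0.0), 1.0)  # clamp the weight to [0, 1]
            fused_row.append(d * a + (1.0 - d) * b)
        fused.append(fused_row)
    return fused


def gradient_magnitude(img):
    """L1 gradient magnitude from forward differences (zero past the border)."""
    h, w = len(img), len(img[0])
    grad = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx = img[y][x + 1] - img[y][x] if x + 1 < w else 0.0
            dy = img[y + 1][x] - img[y][x] if y + 1 < h else 0.0
            grad[y][x] = abs(dx) + abs(dy)
    return grad


def edge_loss(fused, img_a, img_b):
    """Mean absolute gap between the fused gradient and the stronger source gradient.

    Assumption: an illustrative stand-in for an edge-preserving loss.
    """
    g_f = gradient_magnitude(fused)
    g_a = gradient_magnitude(img_a)
    g_b = gradient_magnitude(img_b)
    total, count = 0.0, 0
    for row_f, row_a, row_b in zip(g_f, g_a, g_b):
        for f, a, b in zip(row_f, row_a, row_b):
            total += abs(f - max(a, b))
            count += 1
    return total / count


# Toy 2x2 example with made-up pixel values.
img_a = [[10.0, 20.0], [30.0, 40.0]]
img_b = [[1.0, 2.0], [3.0, 4.0]]
decision = [[1.0, 0.0], [0.5, 1.0]]
fused = fuse_with_decision_map(img_a, img_b, decision)
```

In this sketch a soft decision value such as 0.5 mixes the two sources, which is one way a regressed map can handle the transition band between focused and defocused regions where a hard per-patch classification would have to pick one side.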

CLC number:

- TP391

