Journal of Jilin University (Engineering and Technology Edition), 2024, Vol. 54, Issue 1: 232-239. doi: 10.13229/j.cnki.jdxbgxb.20220239

• Computer Science and Technology •

Long text semantic matching model based on BERT and dense composite network

Yue-lin CHEN1, Zhu-cheng GAO1, Xiao-dong CAI2

- 1. School of Mechanical and Electrical Engineering, Guilin University of Electronic Technology, Guilin 541000, China

2. School of Information and Communication, Guilin University of Electronic Technology, Guilin 541000, China

Abstract:

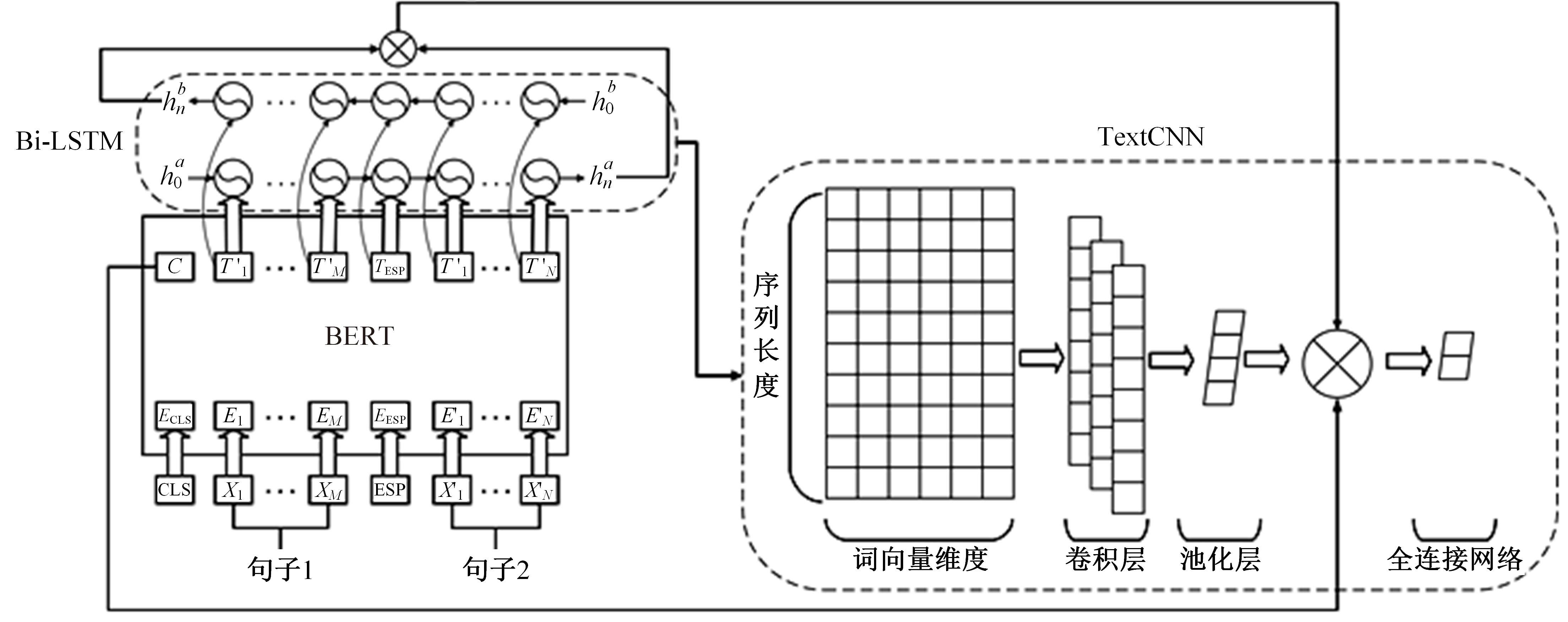

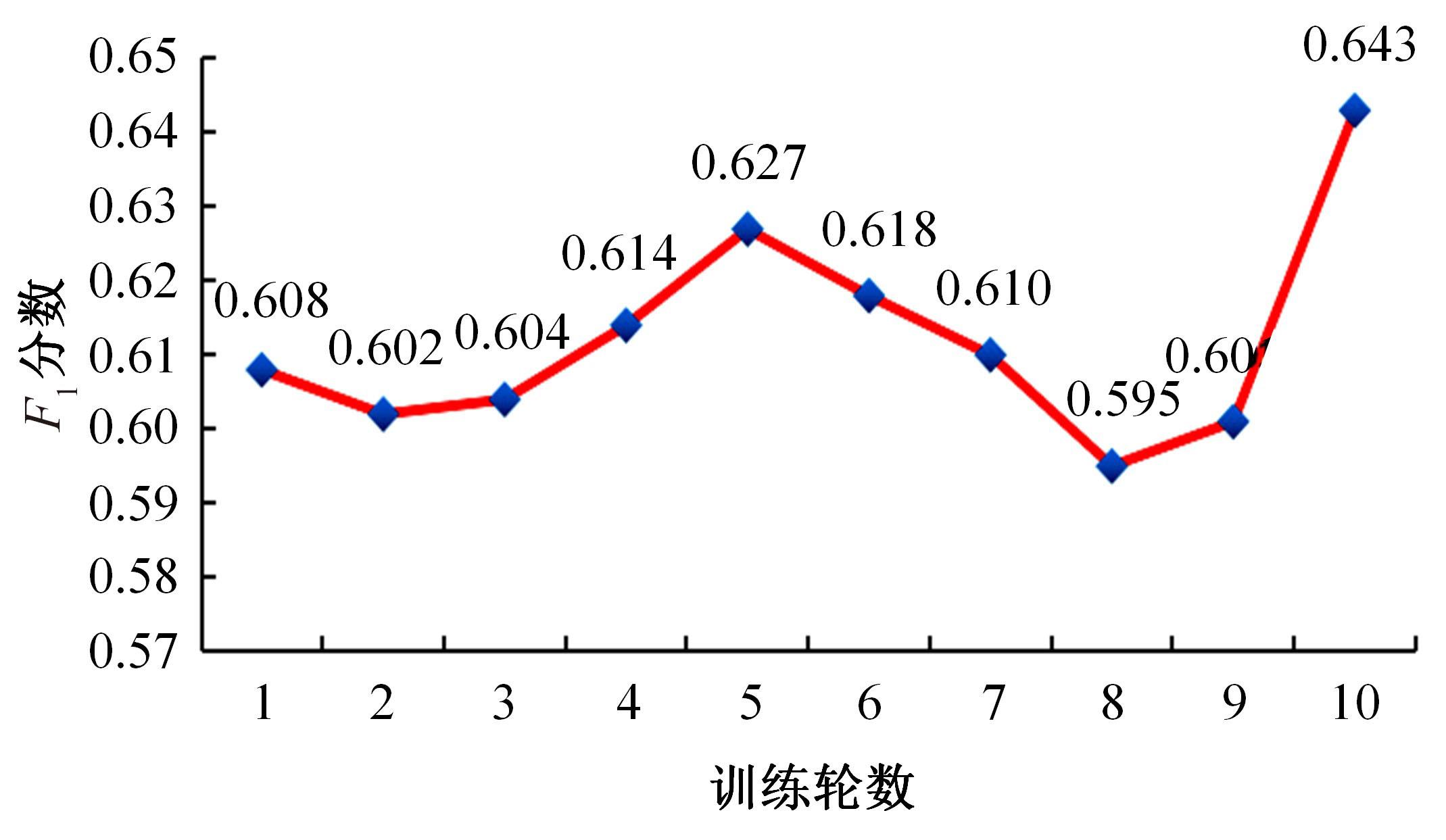

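In long text semantic matching, dependencies between word vectors are hard to capture and a text may carry more than one topic, so matching quality is often poor. To address this, a long text semantic matching method based on BERT and a dense composite network is proposed. By densely connecting the BERT embeddings with the composite network, the method significantly improves the accuracy of long text semantic matching. First, sentence pairs are fed into the pre-trained BERT model, and precise word vector representations are obtained through iterative feedback, yielding high-quality semantic information for each sentence pair. Second, a dense composite network is designed: a bidirectional long short-term memory network (Bi-LSTM) first captures the global semantic information of the sentence pair, then TextCNN extracts and integrates local semantic information to obtain the key features of each sentence and the correspondences between the two sentences, and the hidden outputs of BERT and Bi-LSTM are fused with the pooled output of TextCNN. Finally, the association states between the networks during training are aggregated, which effectively prevents network degradation and strengthens the model's discriminative ability. Experimental results show that on the community question answering (CQA) long text dataset, the proposed method achieves an average improvement of 45%.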
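The fusion step described in the abstract can be illustrated with a small, dependency-free sketch. It is not the authors' implementation: the per-token BERT and Bi-LSTM states are hard-coded toy values, mean pooling of the hidden states and fusion by concatenation are assumptions made for illustration, and all function names (`conv1d_relu`, `max_over_time`, `mean_pool`, `dense_fuse`) are hypothetical. The sketch shows only the data flow: a TextCNN-style convolution with max-over-time pooling over the Bi-LSTM states, followed by a dense connection that keeps the earlier layers' outputs alongside the pooled CNN features.

```python
from typing import List

Vector = List[float]
Matrix = List[Vector]  # one row per token


def conv1d_relu(tokens: Matrix, kernel: Matrix, bias: float = 0.0) -> Vector:
    """Slide one filter of width len(kernel) over the tokens (no padding), then apply ReLU."""
    width = len(kernel)
    feature_map = []
    for start in range(len(tokens) - width + 1):
        window = tokens[start:start + width]
        activation = bias + sum(
            w * x
            for kernel_row, token_row in zip(kernel, window)
            for w, x in zip(kernel_row, token_row)
        )
        feature_map.append(max(0.0, activation))
    return feature_map


def max_over_time(feature_map: Vector) -> float:
    """TextCNN-style pooling: keep the strongest response of a filter."""
    return max(feature_map)


def mean_pool(tokens: Matrix) -> Vector:
    """Average each hidden dimension over the sequence (an assumed pooling choice)."""
    return [sum(column) / len(tokens) for column in zip(*tokens)]


def dense_fuse(bert_states: Matrix, lstm_states: Matrix, kernels: List[Matrix]) -> Vector:
    """Concatenate pooled BERT states, pooled Bi-LSTM states, and pooled CNN features."""
    cnn_pooled = [max_over_time(conv1d_relu(lstm_states, k)) for k in kernels]
    # Dense connection: earlier outputs are kept next to the CNN output,
    # so the classifier sees every stage rather than only the last one.
    return mean_pool(bert_states) + mean_pool(lstm_states) + cnn_pooled


# Toy inputs: 3 tokens, 2 hidden dimensions (stand-ins for real model outputs).
bert_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
lstm_states = [[0.5, -0.5], [1.0, 0.0], [0.0, 2.0]]
kernels = [
    [[1.0, 0.0], [0.0, 1.0]],    # filter of width 2
    [[-1.0, 0.0], [0.0, -1.0]],  # a second filter of width 2
]

fused = dense_fuse(bert_states, lstm_states, kernels)
print(len(fused), fused)
```

In a real model the fused vector would feed a classification layer, and the toy states would come from a pre-trained BERT encoder and a trained Bi-LSTM. Keeping the earlier outputs in the fused vector is one common way to realize the kind of dense connection the abstract credits with preventing network degradation.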

CLC number:

- TP391.1

