Journal of Jilin University (Engineering and Technology Edition) ›› 2024, Vol. 54 ›› Issue (5): 1407-1416. doi: 10.13229/j.cnki.jdxbgxb.20221367

• Computer Science and Technology •

An improved anchor-free model based on attention mechanism for ship detection

Yun-long GAO, Ming REN, Chuan WU, Wen GAO

- Changchun Institute of Optics, Fine Mechanics and Physics, Chinese Academy of Sciences, Changchun 130033, China

Abstract:

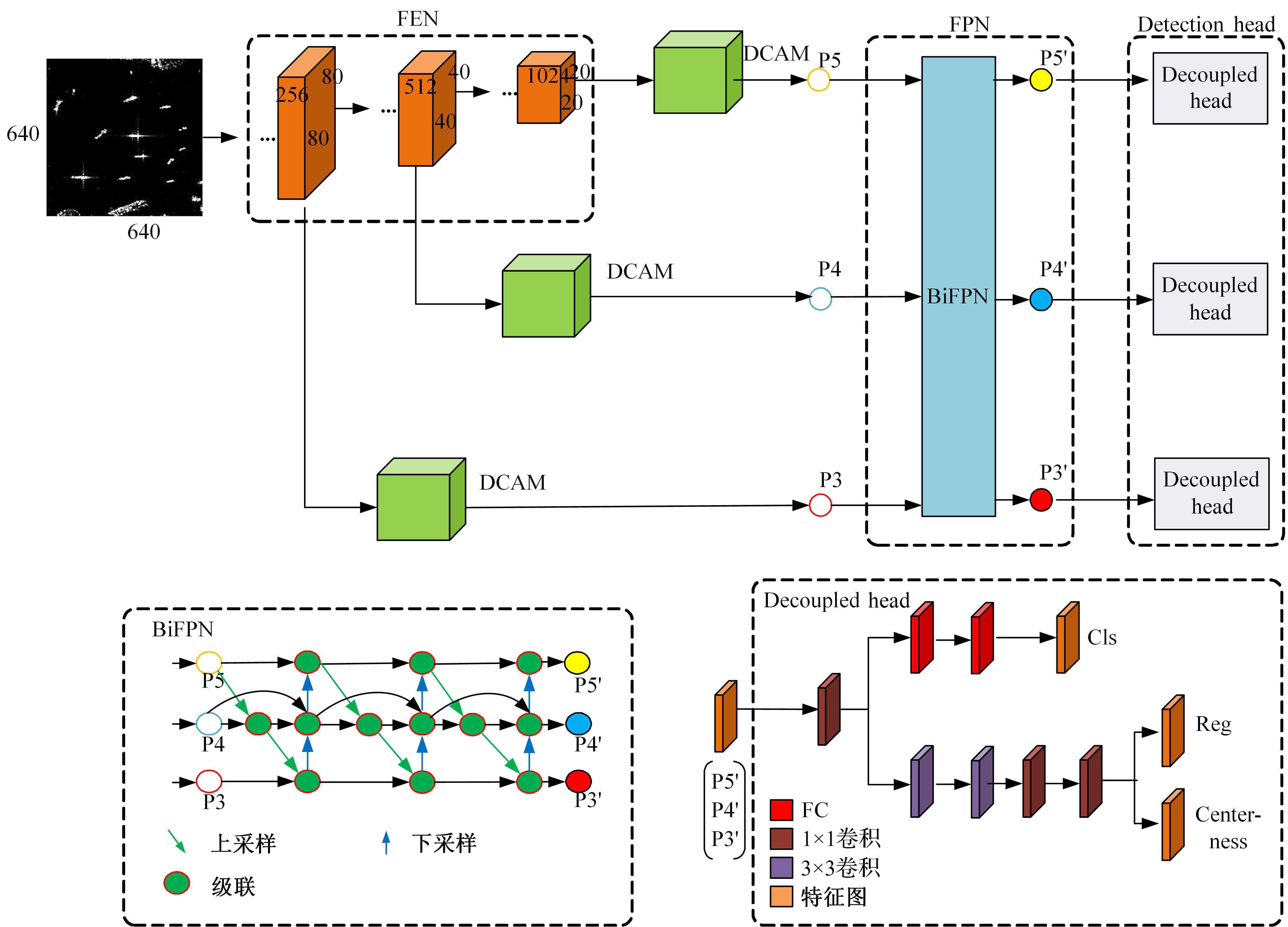

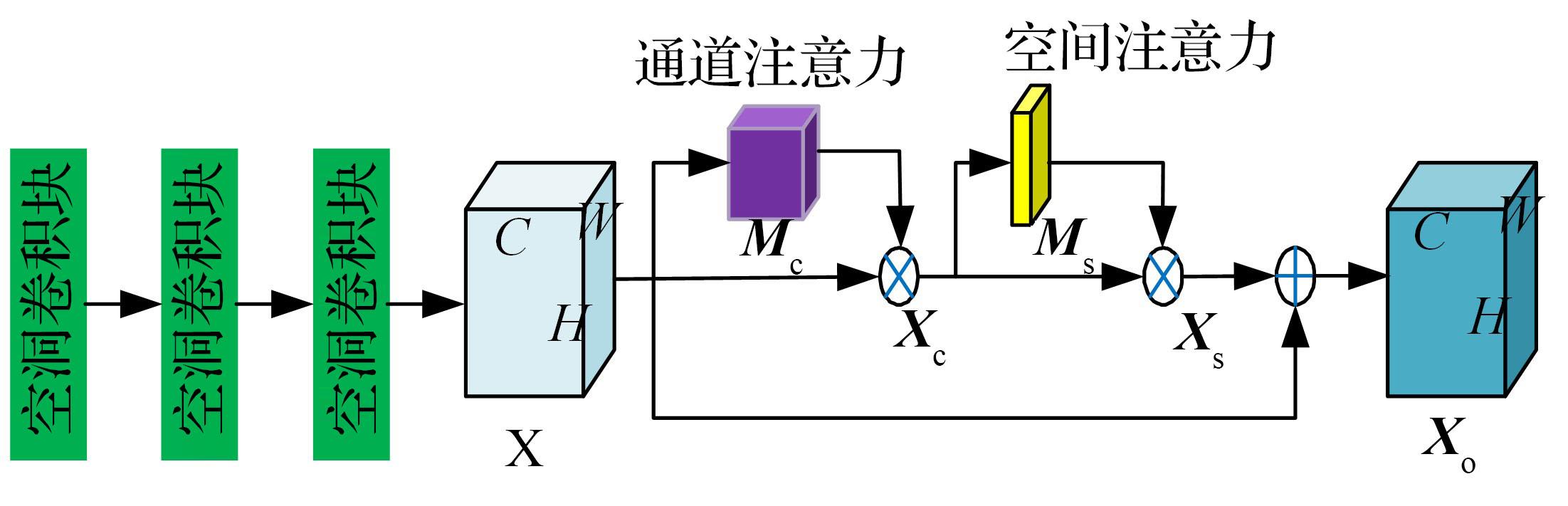

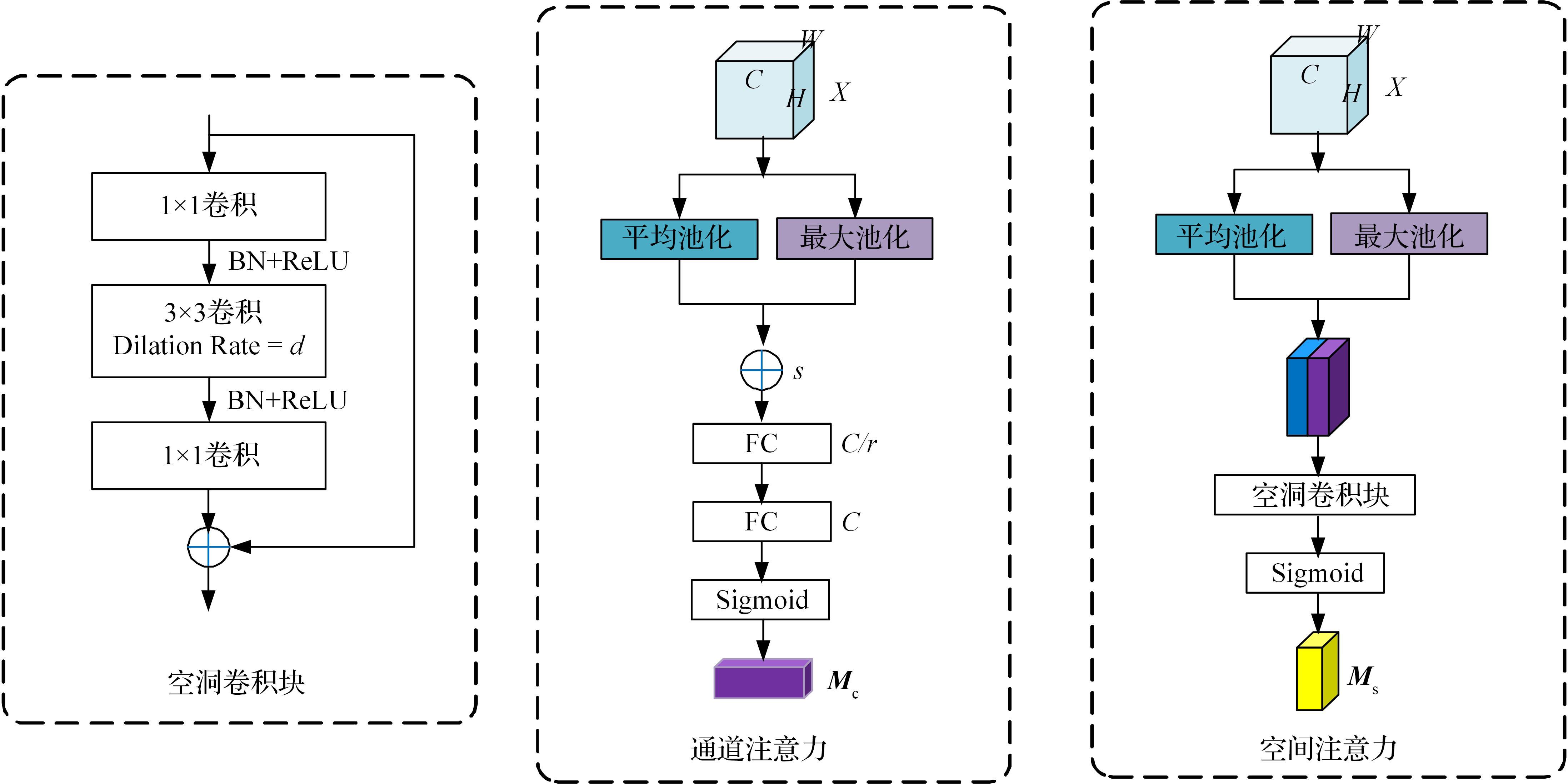

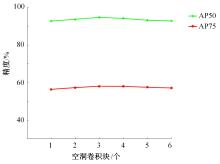

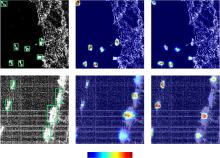

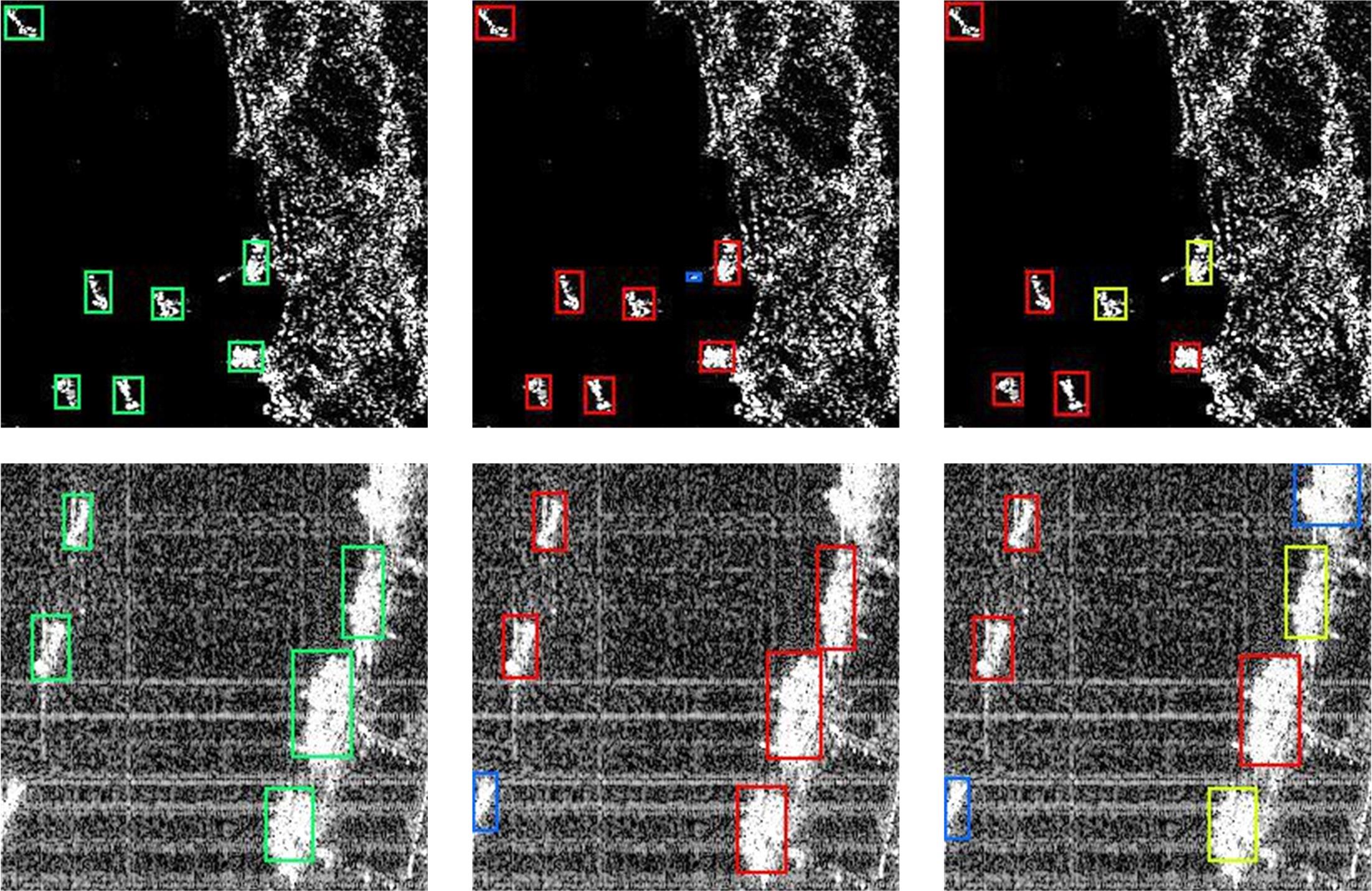

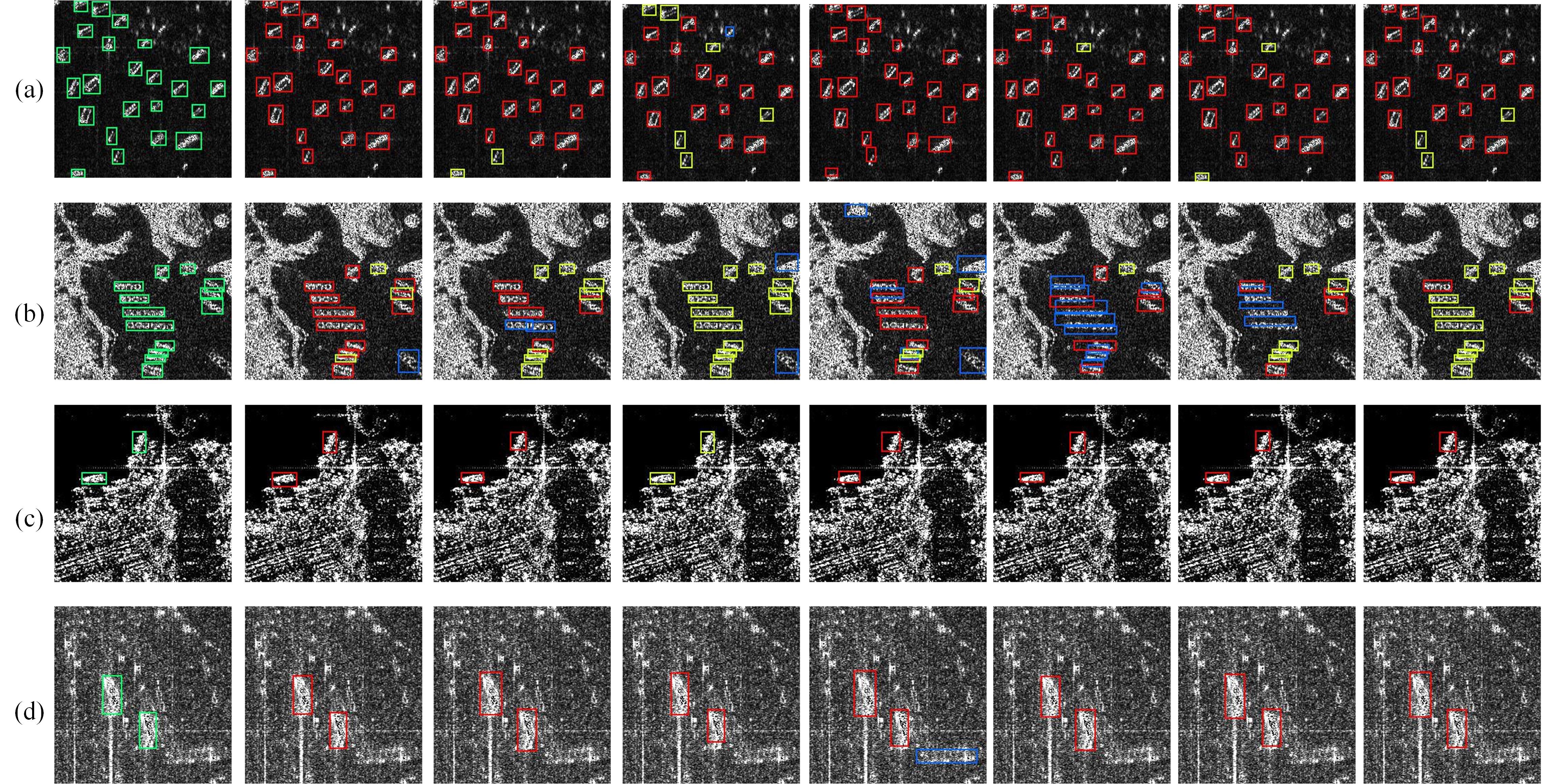

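To improve detection of multi-scale ship targets in synthetic aperture radar (SAR) images while keeping the detection network real-time, an improved anchor-free ship detection model based on the attention mechanism is proposed. A dilated attention module is embedded in the feature pyramid of the YOLOX network to adjust the relationship between the receptive field and multi-scale fusion and to strengthen feature representation. A centerness prediction branch is designed in the detection head to weight the classification scores of the anchor points, and the loss function of the model is adjusted to optimize the detection results. Comparative experiments on the SSDD dataset show that the proposed model outperforms mainstream deep detection networks, reaching an accuracy of 94.73% and achieving the best balance between detection accuracy and detection speed.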
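The centerness weighting mentioned in the abstract can be illustrated with a minimal sketch. It assumes the FCOS-style definition of centerness (computed from the distances of an anchor point to the four sides of its box); the abstract does not give the paper's exact formulation, so the function names `centerness` and `weighted_score` and the formula itself are illustrative assumptions rather than the authors' implementation.

```python
import math


def centerness(l, t, r, b):
    """FCOS-style centerness (assumed definition, not taken from the paper).

    l, t, r, b are the distances from an anchor point to the left, top,
    right and bottom sides of its bounding box. The value is 1.0 at the
    box center and decays toward 0.0 near the box border.
    """
    if min(l, t, r, b) <= 0:
        # Point lies on or outside the box: treat it as fully off-center.
        return 0.0
    horizontal = min(l, r) / max(l, r)
    vertical = min(t, b) / max(t, b)
    return math.sqrt(horizontal * vertical)


def weighted_score(cls_score, l, t, r, b):
    """Down-weight a classification score by the point's centerness."""
    return cls_score * centerness(l, t, r, b)


if __name__ == "__main__":
    # A point at the exact center keeps its full score.
    print(weighted_score(0.9, 5, 5, 5, 5))
    # An off-center point (closer to the left side) is suppressed.
    print(weighted_score(0.9, 2, 5, 8, 5))
```

Under this assumption, predictions from points far from an object's center receive lower final scores, so they are more likely to be filtered out during non-maximum suppression.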

CLC Number:

- TP391
