吉林大学学报(工学版) ›› 2024, Vol. 54 ›› Issue (8): 2307-2312.doi: 10.13229/j.cnki.jdxbgxb.20230128

基于知识蒸馏的图像分类框架

- 1.吉林大学 计算机科学与技术学院,长春 130012

2.吉林大学 机械与航空航天工程学院,长春 130025

Image classification framework based on knowledge distillation

Hong-wei ZHAO1( ),Hong WU1,Ke MA2,Hai LI1

),Hong WU1,Ke MA2,Hai LI1

- 1.College of Computer Science and Technology,Jilin University,Changchun 130012,China

2.School of Mechanical and Aerospace Engineering,Jilin University,Changchun 130025,China

摘要:

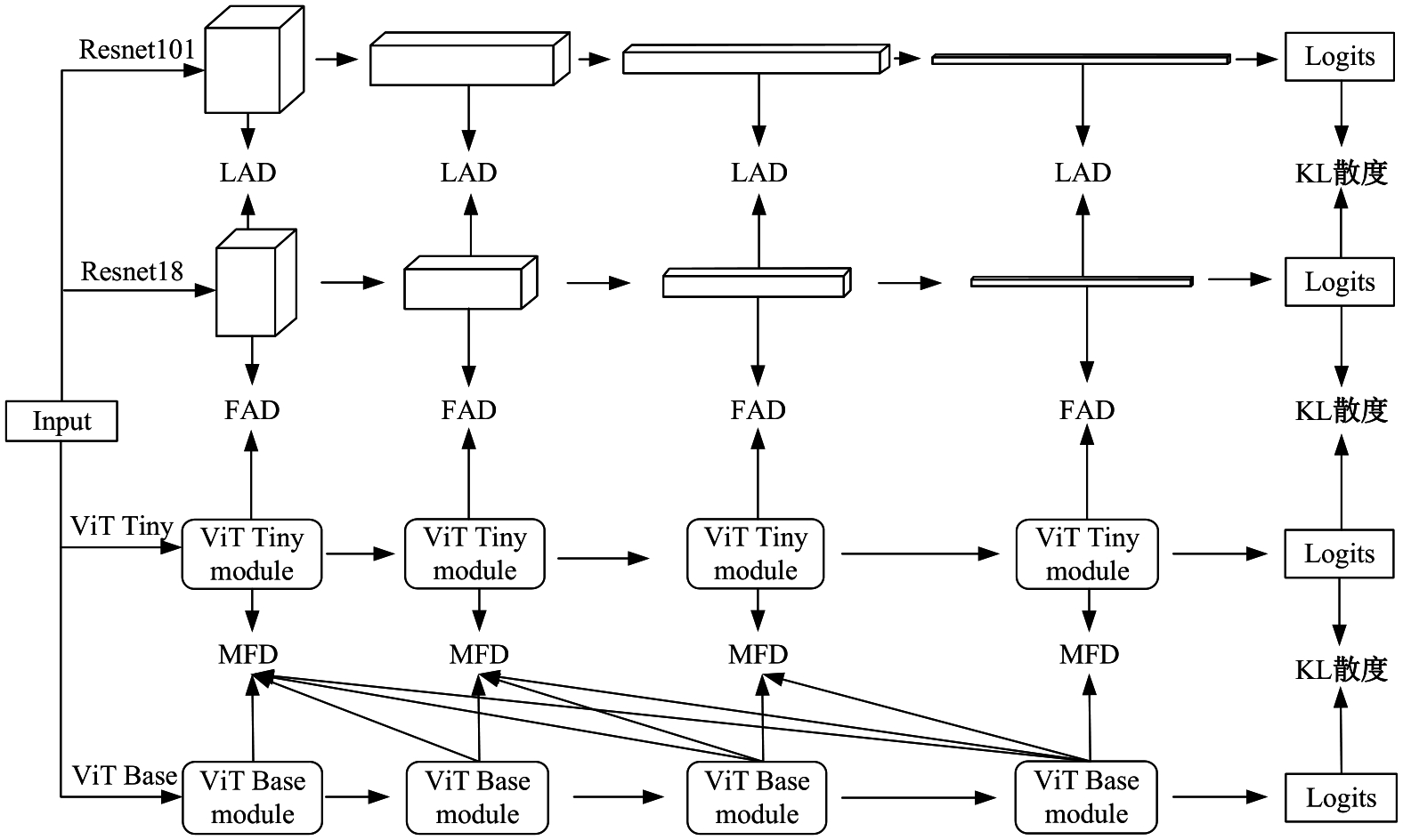

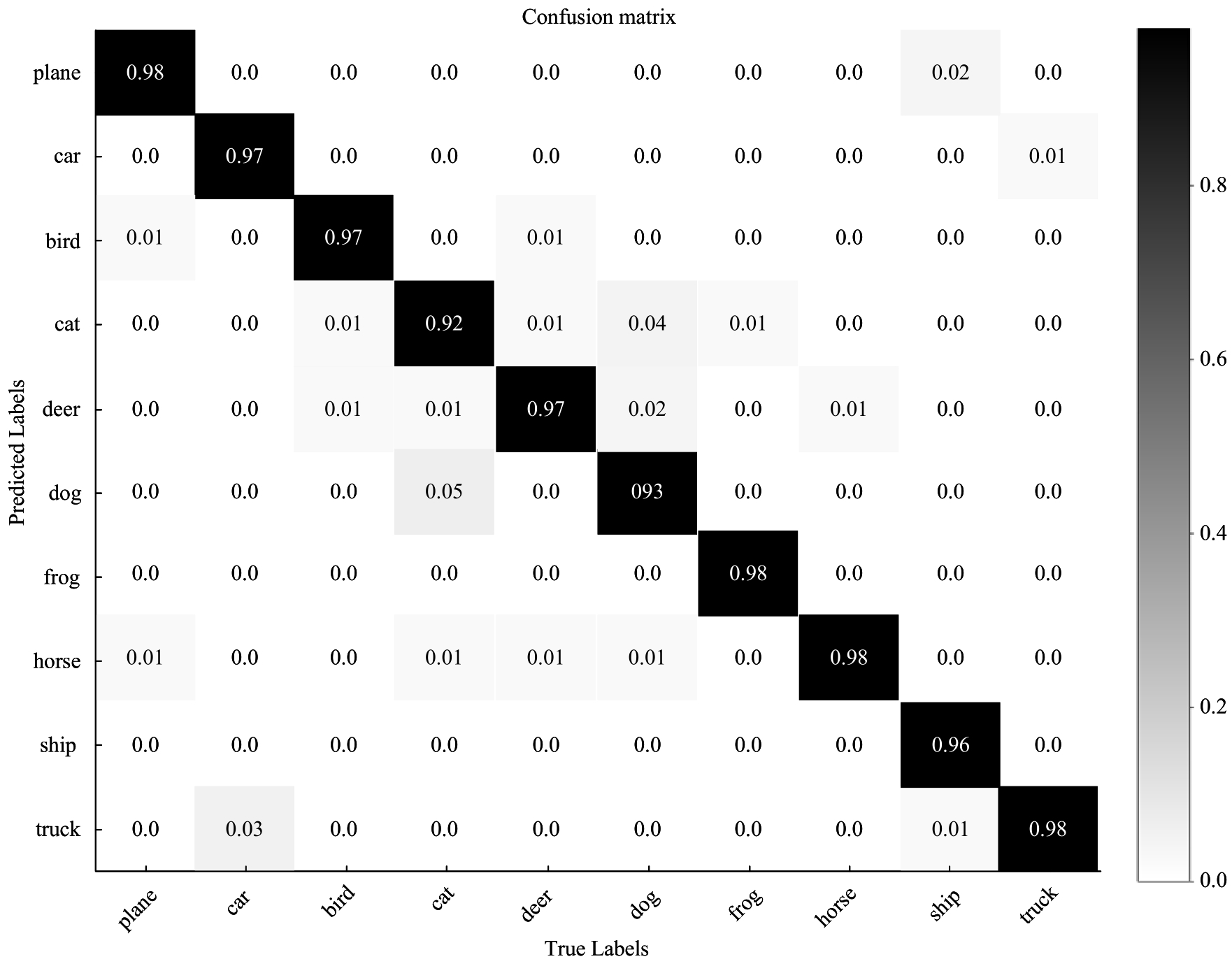

为解决在图像分类任务中难以有效融合CNNs与Transformer网络特征的问题,提出了一种基于知识蒸馏的图像分类框架(Knowledge distillation image classification,KDIC)。KDIC框架中根据CNNs与Transformer网络结构的差异设计了多种知识蒸馏方法:本方法有效地将CNNs的局部特征与Transformer的全局表示融入轻量的student模型中,并基于不同的知识蒸馏方法提出有效的损失函数来提升图像分类任务的性能。图像分类实验在CIFAR10、CIFAR100、UC-Merced 3个公开数据集上进行,实验结果表明;KDIC框架与当前的知识蒸馏方法相比有着明显的优势,同时KDIC在不同师生网络下仍然具有良好的性能和泛化性。

中图分类号:

- TP391

| 1 | Dosovitskiy A, Beyer L, Kolesnikov A, et al. An image is worth 16x16 words: transformers for image recognition at scale[DB/OL].[2023-01-05].. |

| 2 | Liu Z, Lin Y, Cao Y, et al. Swin transformer: hierarchical vision transformer using shifted windows[C]∥ Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, Canada, 2021: 10012-10022. |

| 3 | 赵宏伟, 张健荣, 朱隽平, 等. 基于对比自监督学习的图像分类框架[J]. 吉林大学学报: 工学版, 2022, 52(8): 1850-1856. |

| Zhao Hong-wei, Zhang Jian-rong, Zhu Jun-ping, et al. Image classification framework based on contrastive self⁃supervised learning[J]. Journal of Jilin University(Engineering and Technology Edition), 2022, 52(8): 1850-1856. | |

| 4 | 赵宏伟, 霍东升, 王洁, 等. 基于显著性检测的害虫图像分类[J]. 吉林大学学报: 工学版, 2021, 51(6): 2174-2181. |

| Zhao Hong-wei, Huo Dong-sheng, Wang Jie, et al. Image classification of insect pests based on saliency detection[J]. Journal of Jilin University (Engineering and Technology Edition), 2021, 51(6): 2174-2181. | |

| 5 | Mehta S, Rastegari M. Mobilevit: light-weight, general-purpose, and mobile-friendly vision transformer[DB/OL].[2023-01-05].. |

| 6 | Chen Y, Dai X, Chen D, et al. Mobile-former: bridging mobilenet and transformer[C]∥Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, USA, 2022: 5270-5279. |

| 7 | Zagoruyko S, Komodakis N. Paying more attention to attention: improving the performance of convolutional neural networks via attention transfer[DB/OL].[2023-01-06]. |

| 8 | Gou J, Yu B, Maybank S J, et al. Knowledge distillation: a survey[J]. International Journal of Computer Vision, 2021, 129: 1789-1819. |

| 9 | 黄震华, 杨顺志, 林威, 等. 知识蒸馏研究综述[J]. 计算机学报, 2022, 45(3): 624-653. |

| Huang Zhen-hua, Yang Shun-zhi, Lin Wei, et al. Research review on knowledge distillation[J]. Chinese Journal of Computers, 2022, 45(3): 624-653. | |

| 10 | Raghu M, Unterthiner T, Kornblith S, et al. Do vision transformers see like convolutional neural networks?[J]. Advances in Neural Information Processing Systems, 2021, 34: 12116-12128. |

| 11 | He K, Zhang X, Ren S, et al. Deep residual learning for image recognition[C]∥Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, USA, 2016: 770-778. |

| 12 | Yang Y, Newsam S. Bag-of-visual-words and spatial extensions for land-use classification[C]∥Proceedings of the 18th SIGSPATIAL International Conference on Advances in Geographic Information Systems, San Joes, USA, 2010: 270-279. |

| 13 | Hinton G, Vinyals O, Dean J. Distilling the knowledge in a neural network[DB/OL].[2023-01-06]. |

| 14 | Romero A, Ballas N, Kahou S E, et al. Fitnets: hints for thin deep nets[DB/OL].[2023-01-07]. |

| 15 | Passalis N, Tzelepi M, Tefas A. Heterogeneous knowledge distillation using information flow modeling[C]∥Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, USA, 2020: 2339-2348. |

| 16 | Chen D, Mei J P, Zhang Y, et al. Cross-layer distillation with semantic calibration[J]. Proceedings of the AAAI Conference on Artificial Intelligence, 2021, 35(8): 7028-7036. |

| 17 | Howard A, Sandler M, Chu G, et al. Searching for mobilenetv3[C]∥Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea(South), 2019: 1314-1324. |

| 18 | Ma N, Zhang X, Zheng H T, et al. Shufflenet v2: practical guidelines for efficient CNNs architecture design[C]∥Proceedings of the European Conference on Computer Vision, Munich, Germany, 2018: 116-131. |

| [1] | 特木尔朝鲁朝鲁,张亚萍. 基于卷积神经网络的无线传感器网络链路异常检测算法[J]. 吉林大学学报(工学版), 2024, 54(8): 2295-2300. |

| [2] | 朱圣杰,王宣,徐芳,彭佳琦,王远超. 机载广域遥感图像的尺度归一化目标检测方法[J]. 吉林大学学报(工学版), 2024, 54(8): 2329-2337. |

| [3] | 张锦洲,姬世青,谭创. 融合卷积神经网络和双边滤波的相贯线焊缝提取算法[J]. 吉林大学学报(工学版), 2024, 54(8): 2313-2318. |

| [4] | 张云佐,郑宇鑫,武存宇,张天. 基于双特征提取网络的复杂环境车道线精准检测[J]. 吉林大学学报(工学版), 2024, 54(7): 1894-1902. |

| [5] | 魏晓辉,王晨洋,吴旗,郑新阳,于洪梅,岳恒山. 面向脉动阵列神经网络加速器的软错误近似容错设计[J]. 吉林大学学报(工学版), 2024, 54(6): 1746-1755. |

| [6] | 孙铭会,薛浩,金玉波,曲卫东,秦贵和. 联合时空注意力的视频显著性预测[J]. 吉林大学学报(工学版), 2024, 54(6): 1767-1776. |

| [7] | 李延风,刘名扬,胡嘉明,孙华栋,孟婕妤,王奥颖,张涵玥,杨华民,韩开旭. 基于梯度转移和自编码器的红外与可见光图像融合[J]. 吉林大学学报(工学版), 2024, 54(6): 1777-1787. |

| [8] | 张丽平,刘斌毓,李松,郝忠孝. 基于稀疏多头自注意力的轨迹kNN查询方法[J]. 吉林大学学报(工学版), 2024, 54(6): 1756-1766. |

| [9] | 夏超,王梦佳,朱剑月,杨志刚. 基于分层卷积自编码器的钝体湍流流场降阶分析[J]. 吉林大学学报(工学版), 2024, 54(4): 874-882. |

| [10] | 梁礼明,周珑颂,尹江,盛校棋. 融合多尺度Transformer的皮肤病变分割算法[J]. 吉林大学学报(工学版), 2024, 54(4): 1086-1098. |

| [11] | 张云佐,郭威,李文博. 遥感图像密集小目标全方位精准检测算法[J]. 吉林大学学报(工学版), 2024, 54(4): 1105-1113. |

| [12] | 李晓旭,安文娟,武继杰,李真,张珂,马占宇. 通道注意力双线性度量网络[J]. 吉林大学学报(工学版), 2024, 54(2): 524-532. |

| [13] | 杨国俊,齐亚辉,石秀名. 基于数字图像技术的桥梁裂缝检测综述[J]. 吉林大学学报(工学版), 2024, 54(2): 313-332. |

| [14] | 张云佐,董旭,蔡昭权. 拟合下肢几何特征的多视角步态周期检测[J]. 吉林大学学报(工学版), 2023, 53(9): 2611-2619. |

| [15] | 肖明尧,李雄飞,朱芮. 基于NSST域像素相关分析的医学图像融合[J]. 吉林大学学报(工学版), 2023, 53(9): 2640-2648. |

|